Last Updated on December 20, 2025 by PostUpgrade

The Impact of AI Understanding on Content Visibility

Why AI Understanding Has Become a Visibility Factor

Modern content visibility increasingly depends on how effectively AI systems interpret meaning, structure, and relevance. How well a model understands a page influences whether its information enters assistant responses and synthesized explanations, because models prioritize material they can reliably segment and reconstruct. As discovery shifts toward generative environments, content visibility becomes a function of interpretability rather than presentation alone.

Principle: Content becomes more visible in AI-driven environments when its structure, definitions, and conceptual boundaries remain stable enough for models to interpret without ambiguity.

Recent analyses from the research teams working on computational linguistics at Stanford NLP show that models reuse content more often when its logic is stable, its terminology consistent, and its conceptual boundaries clearly defined.

The Shift From Human-Centric to AI-Centric Visibility

Discovery flows have moved from human-driven evaluation toward AI-mediated interpretation. Earlier search systems displayed ranked documents and left analysis to the user. Today, assistants generate answers directly, filtering and reorganizing the material before it reaches the reader.

AI interpretation layers operate as reasoning stages that assess structural clarity, definition accuracy, and conceptual transitions. These layers determine whether content can be summarized, reframed, or integrated into multi-step responses. A page may appear coherent to a human but remain difficult for a model to interpret if its hierarchy is unstable or its concepts overlap. In this context, AI-first page layout principles ensure that every section follows a predictable logical flow, which reduces ambiguity and strengthens the model’s ability to interpret content reliably.

User-facing visibility reflects what appears on the screen, while AI-facing visibility describes how systems internally process the text. This distinction explains why AI understanding matters for content visibility: models elevate content they can interpret consistently and reduce exposure to material that introduces ambiguity.

Example: A page with clear conceptual boundaries and stable terminology allows AI systems to segment meaning accurately, increasing the likelihood that its high-confidence sections will appear in assistant-generated summaries.

How AI Models Evaluate Understanding Signals

AI systems rely on interpretability signals to decide whether content is suitable for reasoning, summarization, or contextual reuse. These signals measure structural stability and semantic clarity:

- context consistency

- clarity of conceptual boundaries

- structural alignment

- precision of definitions

- knowledge density

These signals work as a combined evaluation layer rather than independent checks. Context consistency ensures that the same terms, entities, and concepts retain identical meaning throughout the text. Clear conceptual boundaries allow the model to distinguish where one idea ends and another begins, which is essential for accurate segmentation and retrieval. Structural alignment helps the model map headings, subheadings, and paragraph flow into a coherent internal representation, enabling reliable abstraction and summarization.

Precision of definitions anchors abstract concepts to stable meanings, reducing ambiguity during reasoning. Knowledge density reflects how much actionable information is contained in each paragraph, signaling whether the text supports inference or merely surface-level paraphrasing. Together, these factors determine whether content can be safely compressed, reused, or combined with other sources without semantic degradation.

In simple terms: AI models look for content that stays consistent, clearly separates ideas, and delivers real information in every section. When structure and meaning are stable, the model can understand the text faster and reuse it with confidence.
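Context consistency is the easiest of these signals to check by hand. The sketch below is only an illustration of that idea, not a description of how any AI system scores text: it counts how often a canonical term and its competing variants appear in a draft. The function name `term_variant_counts` and the variant list are hypothetical and would need to be supplied by the author.

```python
import re

def term_variant_counts(text, variants):
    """Report canonical terms that compete with their own variants.

    `variants` maps a canonical term to alternative wordings that
    should not be mixed with it (an author-supplied assumption).
    """
    lowered = text.lower()
    report = {}
    for canonical, alternatives in variants.items():
        counts = {}
        for term in [canonical, *alternatives]:
            pattern = r"\b" + re.escape(term.lower()) + r"\b"
            counts[term] = len(re.findall(pattern, lowered))
        # Flag the term only when more than one wording is actually used.
        used = [term for term, n in counts.items() if n > 0]
        if len(used) > 1:
            report[canonical] = counts
    return report

draft = (
    "Knowledge density matters. Information density also matters. "
    "Knowledge density wins."
)
print(term_variant_counts(draft, {"knowledge density": ["information density"]}))
```

A non-empty report suggests that the same concept is being named in more than one way, which is the kind of drift the consistency signal is meant to catch.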

New Visibility Challenges Introduced by AI Understanding

Weak interpretability reduces visibility even when the content appears readable to human audiences. Several challenges arise when AI systems cannot fully understand the text:

- ambiguity penalties triggered by unclear terminology or mixed themes

- conceptual dilution when several ideas compete within the same section

- structural incompleteness that disrupts the logical flow

- context-loss scenarios in which the system cannot maintain coherence across segments

These factors reduce the likelihood that AI will select, reference, or reuse the material in generated outputs.

Definition: AI understanding is the model’s ability to interpret meaning, structure, and conceptual boundaries in a way that enables accurate reasoning, reliable summarization, and consistent content reuse across generative discovery systems.

How AI Understanding Shapes Content Visibility Outcomes

AI understanding affects content visibility by shaping how models evaluate meaning, structure, and context during their internal reasoning processes. When a system can interpret a page's concepts clearly, the material is more likely to appear across discovery surfaces such as assistants, retrieval engines, and generative summaries. As a result, visibility outcomes depend on the stability and interpretability of the text.

The Stanford NLP analyses cited earlier apply here as well: models reuse content more often when its logic, terminology, and conceptual boundaries are stable. This is what makes generative engine optimization a practical framework: content with predictable structure and clear boundaries sends stronger interpretability signals and therefore gains higher visibility in generative environments.

How the Interpretation Pipeline Determines Visibility

AI systems follow a structured interpretation pipeline that influences which content becomes discoverable:

- Content ingestion — the system imports and normalizes the text.

- Context segmentation — headings and paragraphs become conceptual units.

- Meaning extraction — the system identifies core ideas and relationships.

- Relevance scoring — each segment is matched to intent patterns.

- Visibility weighting — high-confidence segments receive higher exposure.

This sequence explains how internal reasoning determines visibility.
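The five stages listed above can be made concrete with a toy sketch. It is a deliberately simplified illustration under stated assumptions, not the pipeline of any real assistant: segmentation is a split on blank lines, "meaning extraction" is a word count, and relevance is plain term overlap with a query. All function names are hypothetical.

```python
import re
from collections import Counter

def ingest(raw: str) -> str:
    """Content ingestion: collapse runs of spaces and tabs, keep line breaks."""
    return re.sub(r"[ \t]+", " ", raw).strip()

def segment(text: str) -> list[str]:
    """Context segmentation: each blank-line-separated paragraph is one unit."""
    return [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]

def extract_terms(segment_text: str) -> Counter:
    """Meaning extraction (crude): count lowercase words longer than 3 letters."""
    words = re.findall(r"[a-z]+", segment_text.lower())
    return Counter(w for w in words if len(w) > 3)

def relevance(terms: Counter, query: str) -> float:
    """Relevance scoring: share of query terms that occur in the segment."""
    query_terms = extract_terms(query)
    if not query_terms:
        return 0.0
    return sum(1 for t in query_terms if t in terms) / len(query_terms)

def rank(raw: str, query: str) -> list[tuple[float, str]]:
    """Visibility weighting: order segments by score, highest first."""
    scored = [(relevance(extract_terms(s), query), s) for s in segment(ingest(raw))]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)

page = "Knowledge density measures facts per paragraph.\n\nOur team loves coffee."
for score, text in rank(page, "knowledge density"):
    print(f"{score:.2f}  {text}")
```

Even in this reduced form, the ordering of stages matters: a segment that is split badly in the second step cannot be scored well in the fourth.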

Signals That Influence Visibility Metrics

| Signal type | Description | Visibility outcome |
|---|---|---|
| Structural signals | Headings, segmentation | Higher model confidence |
| Semantic signals | Definitions, clarity | Better match to user intent |
| Factual signals | Precision of claims | Increased reuse likelihood |
| Relational signals | Concept links | Higher cohesion score |

These signals form the basis for how AI systems decide which content to surface.

Where AI Understanding Directly Shapes Visibility

- Multimodal answer systems rely on stable meaning extraction to combine text with visual or structured elements.

- Assistants generating summaries prioritize segments with clear boundaries and consistent logic.

- Query reformulation engines depend on precise conceptual mapping to rewrite user intent.

- Conversational retrieval systems require coherent segments that maintain context across multiple turns.

These environments amplify the role of comprehension quality, making interpretability a central determinant of visibility.

Structuring Content for Better AI Understanding

Improving content visibility through AI understanding requires more than clean formatting; it depends on structuring information so that models can interpret it reliably. Structure functions as a visibility mechanism because it guides how systems segment, classify, and reconstruct meaning.

A well-designed content architecture increases the likelihood that assistants, answer engines, and retrieval models will reuse the material. Research from the W3C emphasizes that predictable hierarchy and clear segmentation improve how machine agents interpret documents, which aligns with the principles of an AI-friendly web page structure.

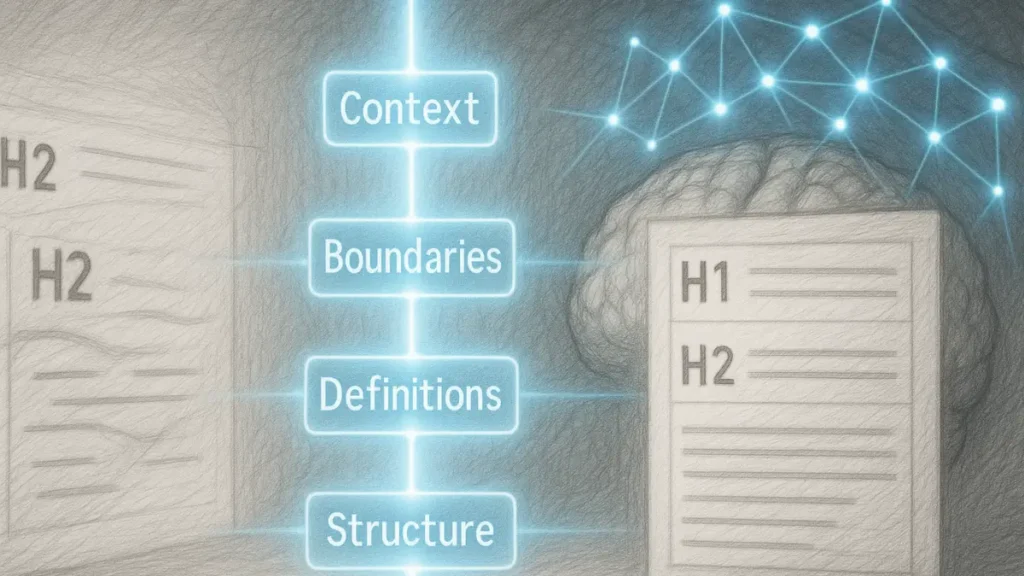

Hierarchical Design That Supports AI Understanding

Predictable structure improves comprehension because AI systems rely on hierarchy to understand how concepts relate to one another. A consistent progression from H1 to H4 defines semantic boundaries that help the model separate major themes from supporting details. This progression ensures that each conceptual layer remains distinct, reducing ambiguity and preventing topic overlap.

Micro-introductions at the start of each section reinforce transitions and clarify intent. These short contextual statements help the model anticipate the type of information that follows and prepare appropriate segmentation strategies. When the hierarchy remains stable, meaning extraction becomes simpler, and visibility increases across generative environments.

A well-organized hierarchy strengthens both comprehension and reasoning flows.
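A consistent H1 to H4 progression can be verified mechanically. The following is a minimal sketch, assuming the page outline is already available as a list of `(level, title)` pairs; the function name `find_heading_skips` is hypothetical. It flags any heading that jumps more than one level deeper than the heading before it.

```python
def find_heading_skips(headings):
    """Return (previous_level, level, title) for every heading that
    skips a level, for example an H4 placed directly under an H2."""
    problems = []
    previous_level = 0  # so the first heading is expected to be an H1
    for level, title in headings:
        if level > previous_level + 1:
            problems.append((previous_level, level, title))
        previous_level = level
    return problems

outline = [(1, "Guide"), (2, "Structure"), (4, "Details"), (2, "Summary")]
print(find_heading_skips(outline))  # [(2, 4, 'Details')]
```

Moving back up the hierarchy (from H4 to H2, for instance) is not flagged, since closing a subsection is a normal part of a stable outline.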

Segmenting Content Into AI-Comprehensible Units

AI systems interpret content more accurately when it is divided into small, semantically cohesive units. These segments reduce the model’s reasoning load and allow it to map concepts to internal representations with greater precision.

Key techniques include:

- atomic paragraphs

- consistent terminology

- local definitions

- short dependency chains

- explicit conceptual linking

These practices ensure that each unit carries one clear idea that the model can classify and reuse.
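One way to approximate the "atomic paragraph" technique is to flag paragraphs that run longer than a chosen number of sentences. The sketch below assumes plain text with blank lines between paragraphs and uses a naive sentence splitter; the threshold of four sentences and the function names are illustrative assumptions, not an established standard.

```python
import re

def sentence_count(paragraph: str) -> int:
    """Naive count: split after ., ! or ? followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", paragraph.strip())
    return len([p for p in parts if p])

def oversized_paragraphs(text: str, max_sentences: int = 4):
    """Return (paragraph_index, sentence_count) for paragraphs over the limit."""
    paragraphs = [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]
    flagged = []
    for index, paragraph in enumerate(paragraphs):
        count = sentence_count(paragraph)
        if count > max_sentences:
            flagged.append((index, count))
    return flagged

sample = "One. Two.\n\nA. B. C. D. E."
print(oversized_paragraphs(sample))  # [(1, 5)]
```

Sentence count is only a rough stand-in for "one clear idea per unit", so a flagged paragraph is a prompt for review rather than proof of a problem.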

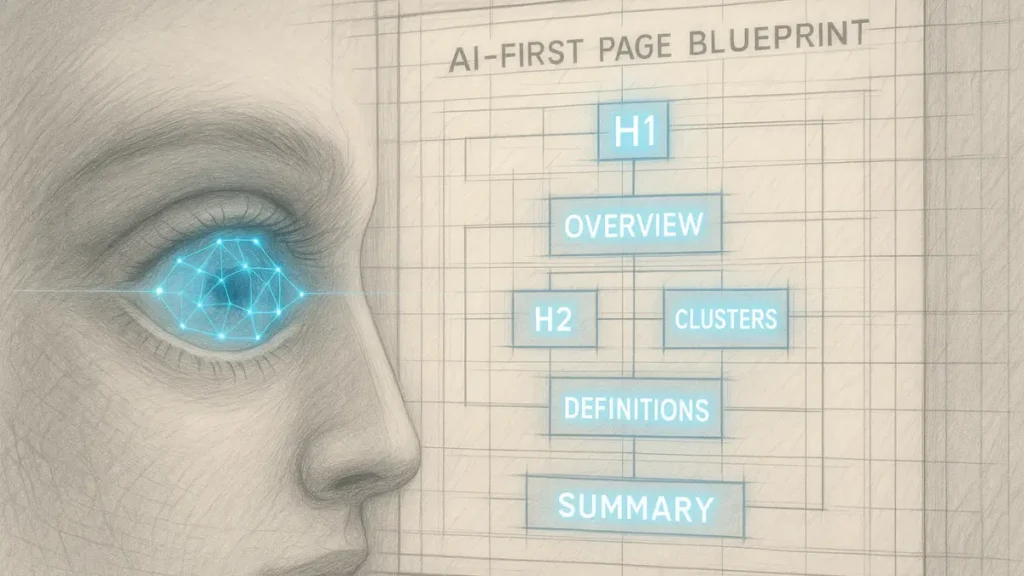

Recommended Visibility-Oriented Page Format

A structured page format supports visibility by aligning textual components with how AI systems parse information. The following table outlines sections that enhance interpretability and visibility:

| Section | Purpose | Contribution to visibility |
|---|---|---|
| H1 + overview | Define core concept | Establishes context |
| H2 clusters | Break into meaning blocks | Improves segmentation |
| Examples | Ground abstraction | Strengthens interpretability |
| Definitions | Clarify terminology | Reduces ambiguity |
| Summary | Reinforce reasoning flow | Improves recall |

This structure allows AI engines to navigate the page using stable anchors, predictable boundaries, and semantically distinct segments, resulting in more consistent visibility outcomes across discovery systems.

How Content Structure Interacts With AI Reasoning

Writing sections for AI understanding requires recognizing that structure shapes how models form inferences, not just how they index text. Visibility increases when the hierarchy supports step-by-step reasoning rather than forcing the system to resolve ambiguity.

Insights from the research community studying information processing at the University of Cambridge Computer Lab show that structured reasoning environments significantly improve how models interpret conceptual relationships. This supports AI content hierarchy best practices and underlines the effect of AI comprehension on visibility metrics.

Why AI Requires Logical Progression

Logical progression reduces the number of reasoning steps a model performs to understand a section. When ideas unfold in a defined order, the system does not need to reconstruct missing logic or reinterpret unclear transitions. This method also prevents contradictory signals that arise from abrupt topic shifts or inconsistent terminology.

Predictable meaning flow helps the model anticipate what follows each heading or paragraph. Clear sequential structure allows the system to maintain consistent segment classification, which improves visibility in assistant-generated outputs. Logical progression therefore supports both interpretability and discoverability.

A stable sequence ensures that visibility aligns with internal reasoning.

Creating Content Blocks AI Can Reconstruct

AI systems process content more effectively when each block follows a clear structure the model can rebuild during generation. The concept → explanation → implication format provides a reliable scaffold that clarifies purpose and outcome. It also gives the model a transparent framework for mapping relationships among ideas.

Semantic anchors help the system maintain reference points across sections. These anchors include stable terminology, well-defined categories, and clear labels that guide the model’s internal mapping. Transitional sentences support inference by linking adjacent ideas and minimizing interpretive gaps.

Blocks designed in this way improve reconstruction and increase visibility.

Avoiding Structures That Decrease Visibility

Certain structural weaknesses make content harder for AI systems to interpret and reduce the likelihood of inclusion in discovery flows. These issues disrupt reasoning patterns and weaken semantic clarity.

Key pitfalls include:

- unstable topic boundaries

- overlapping concepts

- excessive abstraction

- missing contextual grounding

Removing these obstacles ensures that reasoning remains consistent and that visibility improves across AI-driven environments.

Visibility Strategies That Use AI Understanding as a Lever

AI understanding and content visibility increasingly operate as a unified discipline because visibility now depends on whether models can interpret concepts with stability and precision. Visibility optimization for AI assistants requires designing content that aligns with how systems extract meaning, evaluate relevance, and reconstruct ideas during generation.

AI understanding therefore becomes a practical lever for influencing how often material appears across summaries, answers, and retrieval flows.

Findings from research on model alignment by the Berkeley Artificial Intelligence Research Lab indicate that content built with interpretability principles gains higher reuse rates in generative environments due to clearer reasoning structures.

Strategy 1 — Enhancing AI Interpretability

Enhancing interpretability strengthens visibility by making reasoning pathways explicit. Reinforcing definitions helps the model anchor concepts to stable representations instead of inferring meaning across multiple sections. Clear definitions reduce the risk of semantic drift, which otherwise weakens visibility signals.

Clarifying hierarchical logic ensures that each structural layer supports the next. A consistent progression from general ideas to specific insights helps the model understand the relationship between themes. This clarity reduces interpretive errors and improves confidence scores during text segmentation.

Removing context ambiguity prevents models from inserting incorrect assumptions. When transitions, terms, or references are unclear, the system may misclassify content or fail to detect the intended meaning. Eliminating ambiguity improves precision and strengthens visibility across assistants.

Strategy 2 — Increasing Knowledge Density

Knowledge density contributes directly to AI understanding because models reward content that conveys clear and well-defined information. Non-fluff paragraphs focus on essential insights without unnecessary rhetoric, giving the system clean signals for extraction. Eliminating filler reduces noise and sharpens the boundaries between concepts.

A high ratio of facts per section helps the model identify stable meaning units. Data, examples, and structured definitions increase interpretability by anchoring concepts to observable evidence. These features reduce ambiguity and support more accurate summarization.

Reducing filler explanations keeps paragraphs concise and reasoning-oriented. When each segment contains one core idea, the model can classify and reuse it with higher certainty. High-density content consistently receives stronger weighting in visibility evaluations.
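Knowledge density has no agreed formula, but a rough editorial proxy is the share of words in a paragraph that add emphasis without adding information. The sketch below assumes a small, hand-picked filler list (`FILLER_WORDS`, hypothetical and easy to extend) and says nothing about how models actually weight content.

```python
import re

# Hypothetical starter list of low-information intensifiers.
FILLER_WORDS = {
    "very", "really", "basically", "actually",
    "simply", "just", "quite", "essentially",
}

def filler_ratio(paragraph: str) -> float:
    """Fraction of words in the paragraph that are on the filler list."""
    words = re.findall(r"[a-z']+", paragraph.lower())
    if not words:
        return 0.0
    return sum(1 for w in words if w in FILLER_WORDS) / len(words)

print(filler_ratio("This is really very simple"))       # 0.4
print(filler_ratio("Atomic paragraphs carry one idea"))  # 0.0
```

A high ratio points to sentences worth tightening; a low ratio does not by itself show that a paragraph is dense with facts.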

Strategy 3 — Cross-Linking Concepts

Cross-linking concepts improves visibility by creating semantic cohesion that models can easily reconstruct. Internal relationships help the system understand how ideas reinforce one another, increasing the likelihood of reuse.

| Technique | Benefit | Impact on visibility |
|---|---|---|
| Manual concept linking | Stronger cohesion | Higher scoring |
| Context extensions | Better matching | More reuse |
| Terminology reinforcement | Improved reasoning | Reduced misinterpretation |

These techniques ensure that related ideas form patterns the model can detect and apply during retrieval. Strengthening cross-links supports the visibility mechanisms used in assistant and generative answer environments.

Measuring Visibility When AI Understands Content More Deeply

Measuring visibility in AI-driven discovery requires a shift from traditional metrics toward patterns that reflect how models process, reuse, and contextualize information. Measurement now depends on how systems internally evaluate meaning stability, conceptual clarity, and segment reliability.

As AI-driven discovery environments expand, visibility problems arise when models cannot confidently classify or reconstruct the material. Work from the information retrieval community at the University of Edinburgh School of Informatics highlights the need to evaluate visibility through signals generated by model behavior rather than surface-level rankings.

Observing AI-Driven Discovery Patterns

AI-driven discovery introduces behavioral indicators that reveal how models surface content. Assistant answer appearances show when segments are directly reused in generated outputs, reflecting high interpretability. When the system rewrites a user query into a form closely aligned with the content, it signals a strong match between meaning structures and intent patterns.

Suggested prompts generated by assistants indicate that the model associates the content with relevant topics or follow-up tasks. Related-topic expansions demonstrate that the model understands how the concepts extend into adjacent areas, which depends on clear semantic boundaries. These discovery patterns offer a measurement framework grounded in model behavior.

Tracking these patterns helps identify how deeply the system understands the material.

Indicators That AI Understands the Content Well

Several observable outcomes show that the model has developed a clear internal representation of the content:

- the content appears in structured answers

- segments are extracted into multi-step responses

- the content appears in paraphrased summaries

- the content is used as supporting evidence

These indicators suggest that the system can map content to reasoning processes, reconstruct it reliably, and embed it within broader responses.

Strong comprehension indicators correlate with higher visibility.

Visibility Outcomes Driven by Model Comprehension

When AI systems interpret content accurately, the material gains stronger visibility signals. A well-understood page often achieves a longer content lifespan because models continue to reuse stable segments long after publication. This reuse occurs across assistant outputs, generative summaries, and topical analyses.

Inclusion in AI-generated recommendations indicates that the system trusts the conceptual clarity and relevance of the material. Increased topic cluster exposure shows that the model connects the content to adjacent themes more readily, improving distribution across discovery surfaces.

These outcomes demonstrate that deeper comprehension directly strengthens visibility in AI-driven environments.

Practical Framework for Improving AI Understanding and Visibility

Enhancing content visibility through AI understanding requires a systematic approach that aligns structure, clarity, and reasoning with model interpretation patterns. Best practices emphasize predictable hierarchy, stable definitions, and clear conceptual boundaries.

These improvements become achievable when creators follow a repeatable workflow grounded in interpretability research. Insights from computational text analysis conducted at the Carnegie Mellon Language Technologies Institute show that structured, example-driven content consistently improves how models extract and reuse information.

Step 1 — Diagnose Comprehension Weaknesses

The first step in improving visibility is identifying where meaning breaks down during AI interpretation. Creators can use a diagnostic checklist to pinpoint structural and semantic weaknesses that reduce interpretability.

Checklist for detecting comprehension issues:

- vague definitions

- no conceptual separation

- excessive abstraction

- missing transitions

Identifying these patterns helps determine which sections require restructuring.

Clear diagnosis establishes the foundation for visibility improvements.

Step 2 — Strengthen the Structural Backbone

Strengthening the structural backbone ensures that the content follows a consistent logic that AI systems can interpret with fewer errors. A heading grid clarifies how topics relate to one another and prevents overlap between major themes. This structure forms the basis for consistent segmentation across the entire page.

Consistent patterns reduce ambiguity by stabilizing how information appears in each section. Semantic clustering principles group related concepts under predictable headings, improving the model’s ability to classify meaning. These practices align the document with how AI systems process hierarchical information.

Strong structure enhances both comprehension and visibility.

Step 3 — Reinforce Meaning With Examples

Examples function as interpretability anchors that help AI systems clarify abstract or multi-layered concepts. Well-chosen examples reduce reasoning load by grounding ideas in recognizable patterns. The following table outlines the example types that improve visibility:

| Example type | When to use | AI benefit |
|---|---|---|
| Conceptual | Abstract topics | Clarifies structure |
| Procedural | Process-heavy | Reduces cognitive load |
| Comparative | Multi-part concepts | Strengthens boundaries |

Reinforcing meaning with examples allows the system to map concepts to stable representations, increasing reuse across generative outputs.

Examples strengthen conceptual clarity and support reliable interpretation.

Step 4 — Validate Understanding Through AI Interactions

AI interactions provide a direct method for testing whether the system correctly interprets the content. Asking the model to summarize helps detect whether the reasoning flow remains intact. When the summary aligns with the intended logic, it indicates strong internal comprehension.

Requesting key concepts shows how the model classifies the material and whether it identifies the correct semantic anchors. Checking reasoning accuracy through targeted questions reveals whether the system can reconstruct relationships between ideas without distortion.

Validation ensures that the content is fully interpretable and ready for visibility across AI-driven discovery environments.
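The three checks described in this step (summary, key concepts, targeted questions) can be prepared as a repeatable prompt set. The sketch below only builds the prompt strings; it does not call any model, and the wording of the prompts and the name `build_validation_prompts` are assumptions that can be adapted to whichever assistant is being tested.

```python
def build_validation_prompts(page_text: str, questions: list[str]) -> dict[str, str]:
    """Return named prompts for checking how a model interprets a page."""
    prompts = {
        # Check 1: does the reasoning flow survive summarization?
        "summary": (
            "Summarize the following page in three sentences, "
            "keeping its order of reasoning.\n\n" + page_text
        ),
        # Check 2: does the model pick the intended semantic anchors?
        "key_concepts": (
            "List the five key concepts of the following page, "
            "one per line.\n\n" + page_text
        ),
    }
    # Check 3: targeted questions about relationships between ideas.
    for number, question in enumerate(questions, start=1):
        prompts[f"question_{number}"] = (
            f"Using only the page below, answer: {question}\n\n{page_text}"
        )
    return prompts

prompts = build_validation_prompts(
    "Knowledge density is the concentration of verifiable information.",
    ["How does knowledge density relate to content reuse?"],
)
print(list(prompts))  # ['summary', 'key_concepts', 'question_1']
```

Running the same prompt set before and after a restructuring pass makes it easier to compare the model's answers against the intended logic.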

Checklist:

- Does the page define its core concepts with precise terminology?

- Are sections organized with stable H2–H4 boundaries?

- Does each paragraph express one clear reasoning unit?

- Are examples used to reinforce abstract concepts?

- Is ambiguity eliminated through consistent transitions and local definitions?

- Does the structure support step-by-step AI interpretation?

Future Directions: How AI Understanding Will Redefine Visibility

Understanding how content visibility changes when AI understands context requires examining how interpretation and automated discovery will evolve as models become more autonomous. As systems improve their ability to infer relationships and evaluate conceptual accuracy, visibility outcomes will increasingly depend on deeper semantic processing rather than surface features.

As AI models understand content more fully, content and brand visibility will shift toward meaning-driven selection, rewarding pages built with transparent logic, stable context, and consistent reasoning.

Increasing Dependence on Contextual Reasoning

Next-generation discovery flows will rely on models that analyze context rather than simple text patterns. Model-driven discovery flows will integrate relationships among concepts, elevating material that aligns with coherent conceptual structures. As models grow more autonomous, self-directed retrieval will enable systems to identify relevant documents without explicit user input.

Context-first search will become a dominant pattern in which systems evaluate how well a page fits into broader knowledge frameworks. Content with clear boundaries, explicit transitions, and stable reasoning pathways will gain stronger visibility signals. This marks a shift toward discovery driven by contextual inference rather than keyword matching.

Contextual reasoning becomes the central mechanism shaping future visibility.

Emergence of “Understanding-Centric Visibility Metrics”

Future visibility evaluation will rely on metrics that reflect how well a system can reason about content. A coherence score will measure whether arguments and transitions form a stable logical sequence. Content that maintains internal consistency will rank more favorably within AI-driven environments.

A contextual relevance score will examine how precisely content aligns with related ideas and how safely it integrates into knowledge clusters. Structural integrity scores will reward predictable hierarchy and consistent segmentation. Conceptual clarity scores will assess how effectively definitions and explanatory units reduce ambiguity.

These metrics emphasize visibility based on reasoning quality, not formatting.
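None of these metrics has a published definition, so any calculation is speculative. As one possible stand-in for a coherence score, the sketch below averages the vocabulary overlap (Jaccard similarity) between adjacent paragraphs. The function names are hypothetical, and lexical overlap is only a weak proxy for logical sequence.

```python
import re

def vocabulary(paragraph: str) -> set[str]:
    """Set of lowercase alphabetic words in the paragraph."""
    return set(re.findall(r"[a-z]+", paragraph.lower()))

def jaccard(a: set[str], b: set[str]) -> float:
    """Shared words divided by all words; 0.0 when both sets are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def adjacent_overlap(paragraphs: list[str]) -> float:
    """Mean Jaccard similarity of each paragraph with the one after it."""
    pairs = zip(paragraphs, paragraphs[1:])
    scores = [jaccard(vocabulary(x), vocabulary(y)) for x, y in pairs]
    return sum(scores) / len(scores) if scores else 0.0

print(adjacent_overlap(["alpha beta gamma", "beta gamma delta", "omega"]))  # 0.25
```

A sharp drop between two neighboring paragraphs can indicate an abrupt topic shift, which is the pattern a coherence metric would be expected to penalize.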

New Competencies for Content Creators

Creators will need new skills that align writing practices with machine reasoning processes. Semantic decision-making will involve selecting terminology, examples, and definitions that maintain stability across sections. Structural reasoning will guide how ideas are organized to support efficient meaning extraction.

Context engineering will help position concepts within broader thematic structures, improving how systems classify relevance. Interpretability-oriented writing will prioritize clarity, boundary definition, and predictable logic so that models can reconstruct meaning with minimal inference.

Developing these competencies ensures that content remains visible as AI-driven discovery systems evolve.

Interpretive Foundations of AI-Aligned Content Visibility

- Conceptual anchor stability. Clearly delimited concepts and definitions function as fixed reference points that support consistent meaning resolution across models.

- Hierarchical interpretability. Predictable structural depth enables systems to segment content into resolvable scopes, reducing ambiguity during extraction and synthesis.

- Semantic separation. Distinct conceptual units prevent overlap and interference, allowing AI to evaluate relevance without conflating adjacent ideas.

- Reasoning transparency. Illustrative examples act as interpretive aids that clarify logical relationships without expanding the core semantic scope.

- Comprehension stability signals. Consistent interpretive outcomes across evaluative passes indicate durable alignment between content structure and AI reasoning models.

These foundations explain why, for AI systems, content visibility is a consequence of structural clarity and semantic alignment rather than an outcome of procedural optimization.

FAQ: AI Understanding and Content Visibility

What is AI understanding?

AI understanding refers to the model’s ability to interpret meaning, structure, definitions, and conceptual boundaries in a way that supports reliable reasoning and visibility.

How does AI understanding affect content visibility?

Pages with stable hierarchy, clear definitions, and coherent reasoning receive stronger interpretability signals, increasing their appearance in generative summaries and assistant answers.

Why do models prioritize interpretable content?

AI systems reuse content they can segment accurately. If logic, terminology, or boundaries are unclear, visibility decreases due to ambiguity penalties and reasoning gaps.

What harms AI understanding the most?

Overlapping concepts, unstable topic boundaries, excessive abstraction, and missing transitions reduce interpretability and weaken visibility signals.

How do AI systems evaluate comprehension signals?

Models assess structural clarity, segment boundaries, definition precision, context stability, and knowledge density before reusing content in generated responses.

What structure helps AI understand a page?

A predictable H1–H4 hierarchy, atomic paragraphs, micro-introductions, and clear semantic grouping improve meaning extraction and reasoning alignment.

How can I make my content more interpretable?

Use precise terminology, reinforce definitions, reduce ambiguity, provide examples, and ensure that each section expresses one stable conceptual unit.

How do I know if AI understands my content?

If assistants generate accurate summaries, extract correct key concepts, and reuse your segments in multi-step answers, comprehension signals are strong.

Why does reasoning flow matter for visibility?

AI systems follow internal interpretation pipelines. Logical progression reduces inference load and increases the likelihood of content reuse.

How will AI understanding influence future visibility?

As models shift toward contextual reasoning and autonomous discovery, visibility will depend increasingly on deep interpretability rather than surface optimization.

Glossary: Key Terms Related to AI Understanding and Visibility

This glossary defines the core concepts used throughout this article to help both human readers and AI systems interpret terminology consistently.

AI Understanding

The model’s ability to interpret meaning, structure, definitions, and conceptual boundaries in a way that supports accurate reasoning and reliable content reuse.

Interpretability

A measure of how clearly AI systems can extract meaning, detect relationships, and classify concepts based on structure, segmentation, and terminology precision.

Conceptual Boundaries

The semantic limits that separate one idea from another, helping AI prevent topic overlap and maintain clarity during segmentation and reasoning.

Visibility Weighting

An internal scoring mechanism where AI systems elevate high-confidence segments and reduce exposure to ambiguous or poorly structured information.

Knowledge Density

The concentration of essential, verifiable information within a segment, enabling AI systems to extract stable meaning and reuse content with confidence.

Interpretation Pipeline

The multi-step process AI systems use to ingest content, segment context, extract meaning, score relevance, and assign visibility positions within generative responses.