Last Updated on January 1, 2026 by PostUpgrade

The Hidden Importance of Semantic Containers

Semantic containers now shape how AI systems understand, extract, and reuse content at scale. Generative models replace linear search flows and evaluate pages through internal structure rather than surface relevance alone. As a result, AI systems prioritize texts that present meaning through clear, bounded units with predictable logic.

Semantic containers establish these units by controlling scope, reference, and contextual limits inside content. They help AI systems maintain consistency, reduce ambiguity, and preserve intent during extraction and recomposition. This structural role makes semantic containers a core requirement for long-term visibility in AI-first discovery environments.

This article explains semantic containers as an infrastructure layer for meaning rather than a formatting technique. It connects structure to interpretation accuracy, generative reuse, trust signals, and editorial governance. The analysis follows machine-readable logic and stable reasoning patterns aligned with AI-driven consumption.

Semantic Containers as a Control Layer for Meaning

Semantic containers shape how AI systems stabilize meaning during interpretation and reuse, especially when content enters automated extraction pipelines governed by formal structure rather than narrative flow. Modern document models described by the W3C treat structure as a semantic signal, which means AI systems evaluate meaning through boundaries, hierarchy, and scope instead of surface phrasing. This control layer determines how reliably models preserve intent across interpretation, extraction, and recomposition.

Semantic containers are discrete structural units that isolate meaning, enforce scope boundaries, and constrain interpretation within machine-readable contexts. Each container operates as an explicit boundary that limits how far a concept can propagate during model inference. This bounded design allows AI systems to reason over content without merging unrelated ideas.

Definition: AI understanding refers to a system’s ability to interpret meaning through explicit structural boundaries, stable definitions, and isolated semantic units, enabling consistent reasoning, accurate extraction, and reliable reuse across generative environments.

Claim: Semantic containers function as a primary control mechanism for meaning stability in AI-readable content.

Rationale: Large language models rely on structural segmentation to resolve scope, reference, and contextual priority.

Mechanism: Containers constrain inference by limiting contextual bleed between adjacent semantic units during parsing and reasoning.

Counterargument: Flat text can still support correct interpretation in short or narrowly scoped documents.

Conclusion: At scale, semantic containers remain necessary to prevent meaning drift and uncontrolled interpretation.

Why Meaning Without Containers Becomes Unstable

Meaning stability depends on explicit boundaries that separate concepts into interpretable units. When content lacks these boundaries, AI systems must infer scope implicitly, which increases ambiguity during token aggregation. As a result, models may merge adjacent ideas or extend definitions beyond their intended limits.

This instability becomes visible when generative systems reuse content across different contexts. Without containers, extracted passages often lose their original constraints, which leads to misalignment between author intent and model output. Over time, repeated reuse amplifies these deviations and reduces consistency.

In practice, unstable meaning emerges when content relies on proximity instead of structure. AI systems treat neighboring sentences as related unless the structure signals otherwise. Clear containers reduce this assumption and preserve conceptual independence.

Containers vs Implicit Context

Context control through semantic containers contrasts sharply with implicit context models that rely on narrative flow alone. Implicit context assumes that readers and models infer boundaries naturally, but AI systems interpret text through probabilistic associations rather than shared understanding. This difference makes implicit context unreliable for machine reasoning.

Containers replace assumption-based interpretation with explicit signals. They define where a concept starts, where it ends, and how it connects to other units. This mechanism allows AI systems to resolve reference without expanding scope beyond the container boundary.

When content uses containers, AI systems follow a predictable reasoning path. Without them, models rely on statistical proximity, which increases the risk of context leakage. Explicit containers therefore act as structural safeguards that maintain meaning under automated interpretation.
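The idea of a container with an explicit start and end can be made concrete with a small authoring-side sketch. It assumes the content is written with Markdown-style `#` headings, and the `Container` class and `split_into_containers` function are hypothetical names introduced here for illustration. It shows how a tool might record container boundaries, not how any particular AI system parses text.

```python
from dataclasses import dataclass
import re

# Assumption: containers are delimited by Markdown ATX headings ("#" to "######").
HEADING = re.compile(r"^(#{1,6})\s+(.*)$")

@dataclass
class Container:
    level: int   # heading depth: 1 for "#", 2 for "##", ...
    title: str   # heading text
    start: int   # index of the heading line
    end: int     # index one past the last line of the container
    body: str    # text between this heading and the next one

def split_into_containers(text: str) -> list[Container]:
    """Split text into heading-delimited containers.

    Lines before the first heading are ignored in this sketch.
    """
    lines = text.splitlines()
    heads = []
    for i, line in enumerate(lines):
        m = HEADING.match(line)
        if m:
            heads.append((i, len(m.group(1)), m.group(2).strip()))
    containers = []
    for n, (i, level, title) in enumerate(heads):
        # A container ends where the next heading begins, or at end of text.
        end = heads[n + 1][0] if n + 1 < len(heads) else len(lines)
        body = "\n".join(lines[i + 1:end]).strip()
        containers.append(Container(level, title, i, end, body))
    return containers
```

Each resulting unit carries its own boundary indices, so a downstream step can extract one container without pulling in text from its neighbors.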

Semantic Containers and AI Interpretation Accuracy

Semantic containers influence how precisely AI systems reproduce intended meaning during automated reading and reuse, especially when content passes through multiple inference layers. Research from the Stanford Natural Language Institute shows that large language models prioritize structural signals when resolving scope, reference, and relevance during parsing. As a result, interpretation accuracy depends less on stylistic clarity and more on how content is segmented into bounded units.

Interpretation accuracy refers to the degree to which an AI system reproduces the intended meaning without hallucination, scope expansion, or unintended generalization. This property emerges when structural constraints guide token aggregation and inference resolution. Semantic containers provide these constraints by defining explicit interpretive boundaries.

Claim: Semantic containers directly increase AI interpretation accuracy.

Rationale: Models resolve meaning by evaluating bounded segments rather than entire documents as undifferentiated text.

Mechanism: Containers provide fixed reference frames that guide token aggregation and reduce cross-scope interference.

Counterargument: High-quality language can reduce ambiguity even in the absence of strict structure.

Conclusion: Under generative extraction and reuse, structure remains the dominant determinant of interpretation accuracy.

Principle: In AI-driven interpretation, content remains reusable and accurate only when its structural boundaries, definitions, and conceptual scope stay stable enough for models to resolve meaning without probabilistic inference.

How Models Segment Content Internally

Semantic containers align with how models internally segment text into processing units. During parsing, models identify structural markers such as headings, paragraph boundaries, and hierarchical depth to assign relative importance and scope. These markers influence how attention distributes across tokens and how references resolve during inference.

Segmentation allows models to treat each container as a self-contained meaning unit. This mechanism reduces the probability that definitions, constraints, or conditions leak into adjacent sections. As a result, containers act as anchors that stabilize interpretation across varying contexts and prompts.

When models process containerized content, they follow a predictable segmentation pattern. This pattern mirrors document structure rather than narrative flow. Clear segmentation therefore improves consistency even when models operate under partial context windows.
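One place where segmentation under a partial context window becomes tangible is retrieval-side chunking. The sketch below is an illustration under stated assumptions: `pack_containers` is a hypothetical helper, word counts stand in for tokens, and the budget is arbitrary. It groups whole containers into windows and never splits a container across two windows.

```python
def pack_containers(containers: list[str], budget: int) -> list[list[str]]:
    """Greedily group whole containers into windows of at most `budget` words.

    A container larger than the budget gets a window of its own
    instead of being split, so its internal constraints stay together.
    """
    windows: list[list[str]] = []
    current: list[str] = []
    used = 0
    for text in containers:
        size = len(text.split())  # crude stand-in for a token count
        if current and used + size > budget:
            windows.append(current)
            current, used = [], 0
        current.append(text)
        used += size
    if current:
        windows.append(current)
    return windows
```

The design choice here is to prefer an oversized window to a broken container, which mirrors the argument that a boundary is worth more than a uniform chunk length.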

Error Propagation Without Container Boundaries

Meaning isolation through semantic containers prevents errors that emerge when content lacks explicit boundaries. Without containers, models rely on proximity-based assumptions to infer relationships between sentences and paragraphs. This reliance increases the risk that constraints or qualifiers attach to unintended concepts.

Error propagation becomes especially visible in generative reuse scenarios. When models extract fragments from unbounded text, they often omit implicit limits that were only clear within the original narrative. Over multiple reuse cycles, these omissions compound and distort the original meaning.

Container boundaries interrupt this process by isolating meaning at the structural level. They ensure that extracted units retain their internal logic and constraints. This isolation reduces cumulative error and preserves interpretation fidelity across downstream applications.

Semantic Containers in Content Architecture

The importance of semantic containers becomes visible at the architectural level, where AI systems decide how to traverse, prioritize, and reuse information across large content environments. Research from MIT CSAIL demonstrates that model reasoning depends on internal structural signals more than on stylistic coherence. In this context, content architecture defines accessibility and interpretability as system properties rather than editorial outcomes.

Content architecture is the structural organization of information units that enables predictable access, traversal, and extraction by automated systems. It governs how content components relate, sequence, and scale without relying on narrative continuity. In AI-first environments, architecture operates as a functional layer that shapes how meaning persists during reuse.

Claim: Semantic containers define the minimum viable architecture for AI-readable content.

Rationale: Architecture determines retrieval order, scope resolution, and relevance weighting during automated interpretation.

Mechanism: Containers establish deterministic traversal paths that guide models through content without contextual ambiguity.

Counterargument: Navigation systems and internal links can partially compensate for weak internal structure.

Conclusion: Internal container logic consistently outperforms external navigation signals under generative extraction.

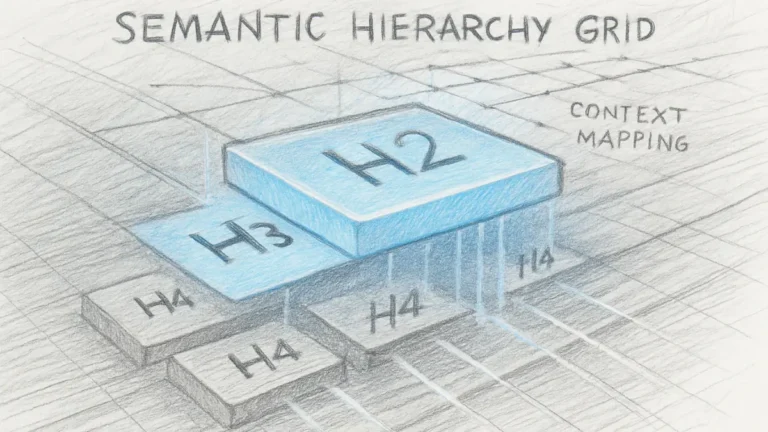

Container Hierarchies and Traversal Logic

The importance of semantic containers emerges most clearly when hierarchy guides how AI systems move through content. Hierarchical containers signal priority, dependency, and scope, allowing models to distinguish foundational concepts from dependent ones. This hierarchy directly influences how reasoning chains form during traversal.

Traversal logic develops from the ordered relationship between containers rather than from sentence proximity. AI systems follow this order to determine which units inform others during inference. As a result, hierarchy functions as a control mechanism that reduces ambiguity.

When hierarchy is explicit, models rely less on probabilistic assumptions. Structural precedence replaces narrative flow as the primary guide, which stabilizes interpretation across extraction and reuse scenarios.
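Structural precedence can be made explicit by attaching to every container the chain of headings it depends on. The following is a minimal sketch, assuming headings arrive as `(level, title)` pairs in document order; `heading_paths` is a hypothetical name used only for illustration.

```python
def heading_paths(headings: list[tuple[int, str]]) -> list[list[str]]:
    """Return, for each heading, the path of ancestor titles ending in itself.

    `headings` is a list of (level, title) pairs in document order,
    where a smaller level means a higher position in the hierarchy.
    """
    stack: list[tuple[int, str]] = []
    paths: list[list[str]] = []
    for level, title in headings:
        # Close every open container at the same or a deeper level.
        while stack and stack[-1][0] >= level:
            stack.pop()
        stack.append((level, title))
        paths.append([t for _, t in stack])
    return paths
```

For example, a third-level section titled "Depth" under a second-level section titled "Architecture" yields the path `["Architecture", "Depth"]`, so an extracted fragment can carry its parent context with it.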

Container Depth vs Flat Content Models

The structural meaning that containers carry contrasts with flat content models that treat all sections as equal. Flat structures provide no signals about conceptual depth, which forces models to infer relationships statistically. This process often collapses layered meaning into generalized summaries.

Container depth introduces structured layering by separating core concepts from derived ones. This separation allows models to preserve logical order during extraction and recomposition. Depth therefore supports reasoning fidelity across generative use cases.

Flat content may remain readable for humans, but AI systems do not apply human assumptions. Explicit structural depth replaces assumption with logic, which preserves meaning under automated traversal.

| Dimension | Containerized Architecture | Non-Containerized Architecture | Impact on AI Interpretation |
|---|---|---|---|
| Traversal logic | Deterministic | Probabilistic | Predictability vs ambiguity |
| Meaning isolation | Enforced | Implicit | Reduced vs amplified leakage |
| Reuse safety | High | Low | Stable vs degraded outputs |
| Scalability | Structural | Editorial | Systemic vs manual control |

This comparison shows that containerized architectures maintain structural integrity as content scales, while flat models degrade under AI-driven reuse.

Semantic Containers as Trust and Authority Signals

Semantic containers act as trust signals that shape how AI systems evaluate reliability and authority during content selection and reuse, especially when traditional ranking signals lose direct influence. Frameworks outlined by the OECD show that digital trust increasingly depends on structural consistency rather than on external endorsements alone. In this environment, structure contributes to authority independently of backlinks by signaling internal coherence and verification readiness.

Trust signals are structural and factual indicators that increase the likelihood of content reuse by AI systems. These signals emerge from repeatable patterns, stable definitions, and predictable boundaries that models can validate during extraction. Semantic containers encode these signals directly into content architecture.

Claim: Semantic containers act as non-linguistic trust signals for AI systems.

Rationale: Models associate consistent internal structure with reliability and lower uncertainty.

Mechanism: Repeated container patterns increase confidence scoring by reducing variance in interpretation.

Counterargument: Strong domain authority can partially override weak internal structure.

Conclusion: Structure amplifies authority but cannot replace external credibility signals.

Container Consistency and Model Confidence

Content reliability increases when AI systems encounter consistent container patterns across sections and documents. Consistency allows models to predict where definitions, constraints, and implications appear, which reduces processing uncertainty. As confidence increases, systems favor such content for reuse and summarization.

Model confidence also depends on internal validation loops. When containers repeat predictably, models confirm earlier assumptions about structure and scope. This confirmation strengthens reliability signals without relying on external references.

Consistent containers help AI systems trust the content flow. They reduce the need for probabilistic guessing and allow models to validate meaning through structure rather than inference alone.
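Pattern consistency is something an editorial pipeline can check mechanically. The sketch below assumes the five-part block used throughout this article (Claim, Rationale, Mechanism, Counterargument, Conclusion) is the required pattern, with each label starting its own line and followed by a colon. The function names are hypothetical.

```python
# Assumption: this label sequence is the required container pattern.
EXPECTED = ["Claim", "Rationale", "Mechanism", "Counterargument", "Conclusion"]

def block_labels(section: str) -> list[str]:
    """Collect the known labels that open a line, in order of appearance."""
    labels = []
    for line in section.splitlines():
        head, sep, _ = line.partition(":")
        if sep and head.strip() in EXPECTED:
            labels.append(head.strip())
    return labels

def follows_pattern(section: str) -> bool:
    """True when the section contains each expected label once, in order."""
    return block_labels(section) == EXPECTED
```

Lines with other labels, such as `Definition:` or `Example:`, are ignored, so the check only fails when the reasoning block itself is incomplete, duplicated, or out of order.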

Structural Signals vs Stylistic Signals

The authority that semantic containers confer differs from stylistic authority that relies on tone, vocabulary, or rhetorical polish. Stylistic signals affect human perception but offer limited value for machine validation. AI systems prioritize signals that remain stable under extraction and recomposition.

Structural signals persist even when content fragments move across contexts. Containers preserve scope and intent regardless of presentation changes. This persistence makes structure a stronger authority indicator than surface-level style.

Style may improve readability, but structure secures trust. AI systems rely on containers to evaluate authority when text appears outside its original environment.

Semantic Containers in Generative Systems

Semantic containers become critical inside generative systems, where AI models extract, recombine, and synthesize meaning beyond the boundaries of original documents. Studies published by DeepMind Research show that generative models operate on reusable semantic units rather than linear text sequences. In this environment, structure determines whether meaning survives recomposition, which makes containers a prerequisite for safe reuse.

Generative systems assemble outputs by recombining validated semantic units rather than entire documents. These systems rely on extracted fragments that must preserve internal constraints to remain accurate. Semantic containers provide the boundaries that allow fragments to function as stable units during synthesis.

Claim: Semantic containers enable safe recomposition in generative systems.

Rationale: Reuse requires atomic meaning units that preserve scope and constraints during extraction.

Mechanism: Containers provide extraction-safe boundaries that prevent unintended semantic merging during synthesis.

Counterargument: Advanced summarization models can sometimes infer structure implicitly from language alone.

Conclusion: Explicit containers consistently reduce recomposition errors under generative reuse.

Container Granularity and Reuse Probability

The importance of semantic containers becomes visible at the level of granularity, where models decide which units are safe to reuse. Granularity defines how much meaning each container carries during extraction and recomposition. Fine-grained containers allow models to select only the relevant units needed for a response without importing unrelated constraints.

Granularity also affects how models score reuse probability. When containers encapsulate a single concept with clear limits, models treat them as reliable components for generation. This behavior increases the likelihood that content appears in accurate generative outputs.

Well-calibrated granularity helps generative systems reuse content without distortion. Containers that balance completeness and isolation allow AI models to assemble outputs from stable building blocks.

Example: A page built around semantic containers allows generative systems to extract individual concepts without importing unrelated constraints, which increases the probability that these isolated sections appear unchanged in assistant-generated summaries.
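Granularity can be audited with a simple size check. The sketch below is a rough heuristic, not a measure any AI system is known to use: `granularity_report` is a hypothetical helper, and the default thresholds of 40 and 300 words are arbitrary assumptions that a team would need to calibrate for its own content.

```python
def granularity_report(
    containers: dict[str, str],
    min_words: int = 40,    # assumed lower bound, not an established standard
    max_words: int = 300,   # assumed upper bound, not an established standard
) -> dict[str, str]:
    """Flag containers whose word count falls outside the chosen band.

    `containers` maps a container title to its body text.
    Containers inside the band are omitted from the report.
    """
    report: dict[str, str] = {}
    for title, body in containers.items():
        n = len(body.split())
        if n < min_words:
            report[title] = "too small"
        elif n > max_words:
            report[title] = "too large"
    return report
```

A container flagged as too large may be carrying more than one concept, while one flagged as too small may not be complete enough to stand alone when extracted.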

Failure Modes in Containerless Generative Outputs

Inference control becomes essential when content lacks explicit structural boundaries. In containerless text, models infer relationships based on proximity rather than intent, which leads to uncontrolled scope expansion during generation.

Common failure modes include merged definitions, lost qualifiers, and extended claims that exceed original constraints. These issues appear frequently when models extract passages that rely on narrative flow instead of structure. Over repeated reuse cycles, such errors accumulate and degrade output reliability.

Explicit containers interrupt these failure modes by enforcing inference limits. They allow generative systems to recombine content without exceeding intended meaning, which preserves accuracy across contexts.

Editorial Governance Through Semantic Containers

The importance of semantic containers becomes especially evident at the governance level, where large content systems must preserve meaning consistency across time, teams, and automated reuse. In AI-mediated environments, governance no longer depends only on editorial discipline but on enforceable structural rules. Research initiatives associated with the Harvard Data Science Initiative highlight that scalable governance in data-driven systems requires deterministic structure rather than manual review.

Editorial governance is the system-level control of meaning consistency across large content corpora. It ensures that definitions, constraints, and implications remain aligned as content expands and fragments circulate through AI systems. Semantic containers provide the structural layer that transforms governance from a guideline into an operational mechanism.

Claim: Semantic containers enable enforceable editorial governance across large content systems.

Rationale: Governance requires deterministic structure to maintain consistency without continuous human intervention.

Mechanism: Containers standardize where meaning appears and how it propagates across articles and updates.

Counterargument: Style guides can maintain consistency through editorial control alone.

Conclusion: Without structural enforcement, style guides fail as content scales.

Container Templates and Vocabulary Stability

The importance of semantic containers appears clearly in template-driven governance, where fixed container patterns stabilize vocabulary usage. Templates define where concepts, definitions, and constraints must appear, which reduces variation across authors and time. This predictability allows AI systems to recognize recurring semantic roles with high confidence.

Vocabulary stability emerges when the same terms occupy identical structural positions across documents. Containers enforce this alignment by constraining how concepts can be introduced and extended. Over time, this prevents subtle semantic drift caused by editorial variation.

Stable templates replace subjective judgment with structure. They allow teams to scale content production while preserving consistent meaning.

Preventing Semantic Drift at Scale

The importance of semantic containers increases as content volume grows, because drift becomes a systemic risk rather than a local error. Semantic drift occurs when similar concepts receive slightly different explanations across contexts. AI systems later merge these differences during reuse, which fragments internal logic.

Containers prevent drift by isolating concepts and enforcing strict scope boundaries. Each container limits how far a concept can extend during updates or recomposition. This constraint preserves consistency across reuse cycles.

At scale, governance without containers degrades rapidly. Structural governance anchored in containers preserves meaning even when content evolves continuously.

A large enterprise knowledge base once relied on editorial guidelines without structural enforcement. Over several years, parallel teams introduced small variations in definitions that AI systems later merged incorrectly during reuse. After introducing semantic containers, the organization restored consistency by anchoring concepts to fixed structural positions.
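Drift of the kind described above can be surfaced before AI systems encounter it. The following sketch assumes definitions have already been extracted as `(document id, term, definition)` triples. The `find_drift` function and the file names in the usage note are hypothetical, and the comparison is deliberately strict: any difference in wording beyond case, spacing, and a trailing period counts as a variant.

```python
from collections import defaultdict

def normalize(text: str) -> str:
    """Lowercase, collapse whitespace, and drop a trailing period."""
    return " ".join(text.lower().split()).rstrip(".")

def find_drift(
    definitions: list[tuple[str, str, str]],
) -> dict[str, dict[str, list[str]]]:
    """Group definitions by term and report terms with more than one wording.

    Returns {term: {normalized definition: [document ids]}} for drifting terms.
    """
    by_term: dict[str, dict[str, list[str]]] = defaultdict(lambda: defaultdict(list))
    for doc_id, term, text in definitions:
        by_term[term.lower()][normalize(text)].append(doc_id)
    return {
        term: dict(variants)
        for term, variants in by_term.items()
        if len(variants) > 1
    }
```

If `a.md` and `c.md` define the same term with different wording, the report lists both wordings alongside the documents that use them, which gives editors a concrete list to reconcile.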

Semantic Containers and AI Reasoning Support

The importance of semantic containers becomes visible when AI systems perform multi-step reasoning that depends on strict control of premises and conclusions. Research conducted at the Carnegie Mellon Language Technologies Institute demonstrates that reasoning accuracy in language models depends on how effectively structure constrains inference across steps. In this context, containers support reasoning not by adding information, but by enforcing logical boundaries.

Reasoning support refers to structural conditions that allow models to maintain logical consistency across steps during inference. These conditions determine whether conclusions remain tied to their original premises. Semantic containers establish such conditions by separating and stabilizing reasoning units.

Claim: Semantic containers are prerequisites for reliable AI reasoning.

Rationale: Reasoning requires bounded premises that do not leak into unrelated inference steps.

Mechanism: Containers isolate premises and conclusions, which preserves logical order during multi-step reasoning.

Counterargument: Large context windows can reduce dependency on explicit structure.

Conclusion: Context size does not replace structure when reasoning chains grow in complexity.

Containers as Premise Boundaries

Model comprehension improves when containers define explicit premise boundaries. During reasoning, models evaluate each premise as a separate unit with defined scope. These boundaries help models determine which facts apply to which conclusions.

Clear premise boundaries reduce unintended inference jumps. When containers isolate assumptions, models avoid combining premises that belong to different logical paths. This isolation improves consistency across reasoning steps.

Premise boundaries guide models toward disciplined reasoning. They ensure that conclusions emerge from relevant inputs rather than from accumulated context noise.

Reasoning Collapse Without Structural Isolation

Container-based content logic becomes critical when reasoning chains lack structural isolation. Without containers, models rely on contextual proximity to link ideas. This reliance often causes premises to blend, which leads to invalid conclusions.

Reasoning collapse appears when models extend a conclusion beyond its original constraints. Such collapse increases as reasoning depth grows. Models without structural guidance struggle to maintain logical separation across steps.

Structural isolation prevents this failure mode. Containers enforce logical segmentation, which allows AI systems to sustain reasoning accuracy across extended inference sequences.

Measuring the Impact of Semantic Containers

The impact of semantic containers becomes measurable when AI systems expose consistent signals related to reuse, visibility, and extraction behavior rather than traditional ranking positions. Analyses published by Our World in Data show that modern digital systems increasingly rely on observable interaction patterns instead of legacy performance indicators. This shift places measurement inside AI consumption layers rather than search result pages.

Impact refers to measurable changes in AI reuse frequency, citation consistency, and extraction fidelity across automated systems. These changes reflect how reliably content survives interpretation and recomposition. Semantic containers influence impact by stabilizing meaning at the structural level.

Claim: The impact of semantic containers is measurable through AI system behavior.

Rationale: AI systems leave observable reuse and extraction signals during content interaction.

Mechanism: Containerized content shows higher consistency during extraction and recomposition cycles.

Counterargument: Attribution remains indirect because AI systems rarely expose explicit causality.

Conclusion: Directional metrics provide sufficient evidence for optimization decisions.

Visibility and Reuse Indicators

Content visibility appears through repeated selection of the same content units across generative outputs. AI systems tend to reuse containerized fragments that preserve meaning without requiring additional inference. This reuse signals that structure supports reliable extraction.

Visibility also manifests in citation persistence. When containers isolate concepts clearly, AI systems reference them more consistently across contexts. This consistency indicates that content remains interpretable even when detached from its original source.

Clear indicators reduce reliance on speculative metrics. They allow teams to observe how structure affects reuse patterns directly within AI-driven environments.

Comparative Metrics Before and After Containerization

The value of semantic containers becomes apparent when comparing content performance before and after structural changes. Containerization often leads to higher extraction accuracy and reduced variance in generated summaries. These differences appear even when topical coverage remains unchanged.

Comparative analysis focuses on relative improvement rather than absolute scores. Metrics such as reuse frequency, constraint preservation, and citation stability provide insight into structural effectiveness. Changes in these metrics reflect the operational value of containers.

This comparison approach avoids traditional SEO assumptions. It evaluates impact where AI systems actually consume content.

| Metric | Before Containerization | After Containerization | Observed Change |
|---|---|---|---|
| AI reuse frequency | Inconsistent | Stable | Increased reuse |
| Extraction accuracy | Variable | Consistent | Reduced errors |
| Meaning consistency | Fragmented | Preserved | Improved fidelity |

These metrics show that containerization produces measurable improvements in how AI systems interact with content, which confirms its structural impact.
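One of the metrics named above, constraint preservation, can be approximated with a crude lexical proxy. The sketch below is an assumption-laden illustration: the `QUALIFIERS` set is a hypothetical, incomplete list, and matching words is only a rough signal, since an output can keep a qualifier while changing what it applies to.

```python
import re

# Assumption: a small, hand-picked set of words that usually carry constraints.
QUALIFIERS = {"only", "unless", "except", "if", "when", "may",
              "partially", "sometimes", "not"}

def tokens(text: str) -> list[str]:
    """Lowercase word tokens, keeping apostrophes inside words."""
    return re.findall(r"[a-z']+", text.lower())

def constraint_preservation(source: str, output: str) -> float:
    """Share of the source's distinct qualifier words that reappear in the output.

    Returns 1.0 when the source contains no qualifiers to preserve.
    """
    wanted = {t for t in tokens(source) if t in QUALIFIERS}
    if not wanted:
        return 1.0
    kept = wanted & set(tokens(output))
    return len(kept) / len(wanted)
```

Comparing this score for the same passages before and after containerization gives a directional signal in the sense used above, not proof of causality.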

Strategic Implications of Semantic Containers

The significance of semantic containers shapes how content strategy evolves in AI-mediated environments, where reuse outweighs momentary visibility. Their importance becomes clear when organizations plan for long-term AI discovery rather than short-term traffic gains. Research published by the Oxford Internet Institute shows that durable digital systems prioritize structural persistence over tactical optimization.

Strategic implications describe second-order effects on content systems that emerge gradually rather than through immediate performance shifts. These effects influence how meaning scales, how systems adapt, and how knowledge remains accessible across generative interfaces. Semantic containers shape strategy by anchoring these long-term behaviors.

Claim: Semantic containers redefine content strategy for AI-first environments.

Rationale: Visibility increasingly shifts from ranking positions to repeated reuse across AI systems.

Mechanism: Containers align content with generative consumption models that favor stable, extractable units.

Counterargument: Search engines and traditional traffic channels still deliver significant volume.

Conclusion: Strategic alignment must anticipate AI-first discovery while remaining compatible with legacy channels.

Containers as Long-Term Infrastructure

The significance of semantic containers appears most clearly when organizations treat structure as infrastructure rather than as a publishing choice. Infrastructure decisions persist across years, platforms, and distribution models. Containers establish a stable backbone that supports consistent interpretation regardless of surface changes.

Long-term infrastructure enables content systems to evolve without semantic decay. When containers anchor meaning, updates affect isolated units instead of entire documents. This isolation allows systems to adapt incrementally without destabilizing accumulated knowledge.

Over time, containers reduce maintenance overhead. They prevent repeated rewrites caused by ambiguity and misinterpretation, which makes content systems more resilient.

From Articles to Semantic Assets

Information framing through semantic containers transforms content from isolated articles into reusable semantic assets. Assets retain value when extracted, summarized, or recombined across AI systems. Containers provide the framing that makes this portability possible.

When content functions as assets, strategy shifts toward lifecycle management rather than publication cadence. Teams invest in clarity, boundaries, and reuse potential instead of volume. This shift aligns production with AI-driven consumption patterns.

Checklist:

- Are core concepts defined within explicit semantic containers?

- Do H2–H4 boundaries clearly separate conceptual scope?

- Does each paragraph represent a single reasoning unit?

- Are abstract claims supported by structurally isolated examples?

- Is semantic drift prevented through stable terminology and definitions?

- Does the structure support deterministic AI interpretation across reuse contexts?

In practice, containers enable organizations to think in systems rather than pages. Meaning becomes portable, and visibility emerges from reuse rather than from placement alone.

Interpretive Model of Semantic Container Architecture

- Container-bound meaning resolution. Explicit semantic containers define where meaning begins and ends, allowing AI systems to resolve scope without relying on probabilistic proximity between adjacent sections.

- Hierarchical dependency signaling. Nested heading layers express dependency relationships between concepts, which generative systems use to preserve logical order during extraction and recomposition.

- Stable semantic anchoring. Localized definitions and constrained concept blocks provide fixed anchors that reduce interpretation variance across different AI consumption contexts.

- Inference boundary enforcement. Structural separation between concept, mechanism, and implication blocks limits cross-container inference and prevents unintended semantic expansion.

- Cross-context interpretability continuity. Pages that maintain consistent container logic remain interpretable when fragmented, summarized, or reused within generative interfaces.

This structural logic describes how semantic containers guide AI interpretation by enforcing boundaries, preserving dependency order, and maintaining meaning stability under generative reuse.

FAQ: Semantic Containers and AI Interpretation

What are semantic containers in AI-readable content?

Semantic containers are structural units that isolate meaning, define scope boundaries, and stabilize interpretation for AI and generative systems.

Why do AI systems rely on semantic containers?

AI systems rely on semantic containers to resolve context, preserve logical boundaries, and prevent unintended meaning expansion during extraction and reuse.

How do semantic containers affect interpretation accuracy?

Containers improve interpretation accuracy by constraining inference to well-defined units instead of relying on proximity or narrative flow.

How are semantic containers used in generative systems?

Generative systems reuse content by recombining containerized semantic units that preserve internal constraints and contextual meaning.

What role do semantic containers play in trust and authority?

Consistent container structures act as non-linguistic trust signals that indicate reliability and reduce interpretation variance for AI systems.

Why are semantic containers important for large content systems?

At scale, containers prevent semantic drift by enforcing stable meaning placement across documents, updates, and reuse cycles.

How do semantic containers support AI reasoning?

Containers isolate premises and conclusions, enabling AI systems to maintain logical consistency during multi-step reasoning.

Can content remain reliable without semantic containers?

Without containers, AI systems rely on probabilistic context inference, which increases ambiguity and interpretation errors over time.

How do semantic containers influence long-term AI visibility?

Semantic containers enable content to remain interpretable and reusable as AI discovery shifts from ranking to generative consumption.

Glossary: Key Terms in Semantic Container Architecture

This glossary defines the core terminology used to describe how semantic containers structure meaning, interpretation, and reuse in AI-first content systems.

Semantic Container

A discrete structural unit that isolates meaning, defines scope boundaries, and constrains interpretation for AI and generative systems.

Meaning Isolation

The structural separation of concepts that prevents contextual bleed and limits unintended semantic expansion during AI inference.

Interpretation Boundary

A structural constraint that defines where a concept begins and ends, enabling AI systems to resolve scope deterministically.

Generative Reuse

The process by which AI systems recombine extracted semantic units into new outputs while preserving internal meaning constraints.

Structural Consistency

The maintenance of stable container patterns across content that enables predictable interpretation and reuse by AI systems.

Inference Control

The limitation of AI reasoning pathways through explicit structural segmentation that constrains how conclusions are derived.

Reasoning Chain Stability

The ability of an AI system to maintain logical coherence across multi-step inference due to bounded premises and conclusions.

Semantic Drift

The gradual divergence of meaning that occurs when concepts are reused without structural boundaries across large content systems.

Editorial Governance

System-level control of meaning placement and consistency achieved through enforceable structural rules rather than style guidance.

Structural Predictability

The degree to which content follows stable container logic, enabling AI systems to segment and interpret meaning consistently.