Last Updated on December 26, 2025 by PostUpgrade

The Future of Content Surfacing in AI-Driven Platforms

AI content surfacing defines how AI-driven platforms select and expose information to users. Modern systems no longer wait for explicit queries. Instead, they infer relevance from context, signals, and user behavior. This shift changes how platforms provide access to knowledge and how AI systems reuse content over time.

AI Content Surfacing as a New Distribution Paradigm

AI content surfacing represents a structural distribution paradigm rather than an auxiliary feature inside digital systems. Modern platforms increasingly determine what information to expose before users express explicit intent. This shift explains why platform-driven exposure now takes over part of the role once held by user-initiated discovery, a transition examined in research from the Stanford Natural Language Institute.

Definition: AI understanding in content surfacing refers to a system’s ability to interpret structural hierarchy, contextual signals, and semantic boundaries in order to infer what information should be exposed without relying on explicit user queries.

Claim: This distribution model changes how information reaches users in AI-driven environments.

Rationale: Query-based discovery assumes that users can clearly articulate intent, which limits exposure in complex or unfamiliar domains.

Mechanism: The system evaluates context, historical interaction signals, and structural relevance to select which content appears.

Counterargument: Explicit search remains effective for transactional and narrowly scoped tasks.

Conclusion: Surfacing does not replace search but adds a parallel distribution layer that operates without direct queries.

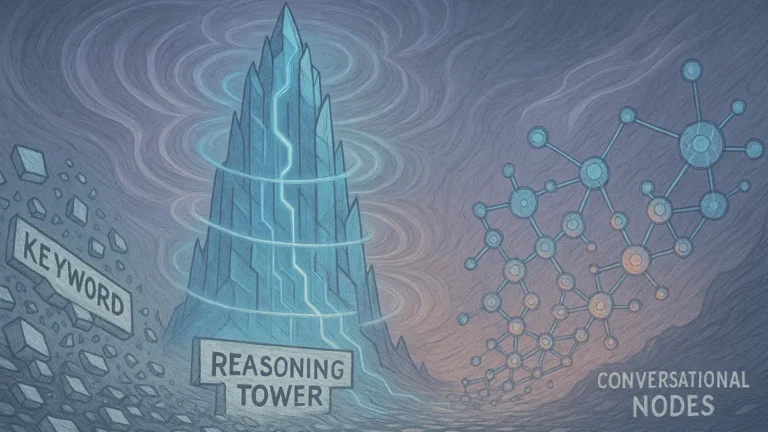

Transition From Query-Based Discovery

Content surfacing in AI platforms emerged as systems began to prioritize inferred relevance over direct input. Early discovery models activated only after a user submitted a query, while modern systems monitor contextual signals continuously. As a result, distribution logic now operates across the entire interaction lifecycle.

This transition also alters how platforms evaluate discovery quality. Systems no longer rely solely on ranking accuracy. Instead, they assess whether exposed content aligns with the user’s evolving informational state over time.

In practical terms, platforms no longer wait for questions. They observe behavior and context, then decide which information should appear next.

Structural Comparison: Search vs Surfacing

AI-driven content surfacing differs structurally from traditional search across core operational dimensions. These differences explain why surfacing functions as a distribution mechanism rather than a retrieval response.

| Dimension | Search | Content Surfacing |
|---|---|---|
| Trigger | Explicit query | Implicit context |
| Control | User | Platform AI |
| Timing | Reactive | Predictive |
| Output | Ranked list | Curated exposure |

The comparison shows that search reacts to direct requests, while surfacing anticipates relevance through inference. This distinction clarifies why surfacing enables discovery even when users do not formulate explicit queries.

Architecture of AI Content Surfacing Systems

AI content surfacing systems require an independent architectural logic because they operate without explicit user requests. These systems rely on pipeline-based reasoning that evaluates relevance continuously rather than episodically. This architectural separation from recommendation stacks reflects findings discussed by researchers at MIT CSAIL, who analyze inference-driven decision systems at scale.

Definition: AI content surfacing systems are multi-layer pipelines that continuously infer relevance without user queries.

Claim: Dedicated architectural pipelines are necessary to support continuous content surfacing.

Rationale: Recommendation stacks optimize for similarity, while surfacing requires ongoing relevance evaluation across contexts.

Mechanism: The system processes signals through sequential layers that stabilize exposure decisions over time.

Counterargument: Lightweight recommendation engines can approximate surfacing behavior in limited scenarios.

Conclusion: Full surfacing capability depends on purpose-built pipelines rather than adapted recommendation logic.

Core Layers of Surfacing Pipelines

AI content surfacing pipelines organize inference into distinct layers that handle relevance assessment and exposure control. Each layer performs a specific function, which prevents decision overload and improves system stability. This layered design also allows platforms to adjust individual components without disrupting the entire pipeline.

The separation of layers supports scalability and interpretability. When systems isolate inference, aggregation, and exposure logic, engineers can trace decisions and refine models more effectively. As a result, platforms maintain consistent behavior even as data volume increases.

- inference layer

- signal aggregation layer

- exposure arbitration layer

- feedback stabilization layer

Together, these layers ensure that surfacing decisions remain consistent, explainable, and adaptable as user context evolves.

In simple terms, the pipeline works like a sequence of filters. Each step refines what the system shows next. This structure helps platforms avoid chaotic or repetitive exposure.
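The four layers can be pictured as a chain of functions, each taking the previous layer's output. The Python below is a minimal sketch under stated assumptions, not a reference implementation: the `Candidate` type, the topic filter used for inference, the mean used for aggregation, the slot limit used for arbitration, and the repeat penalty used for stabilization are all illustrative choices. In this sketch the stabilization layer records what was exposed, and the aggregation layer reads that history on the next pass to damp repeats.

```python
from dataclasses import dataclass, replace
from functools import reduce
from typing import Callable, List, Set, Tuple

@dataclass(frozen=True)
class Candidate:
    content_id: str
    topic: str
    signals: Tuple[float, ...]  # raw signal values, assumed to lie in [0, 1]
    score: float = 0.0

Layer = Callable[[List[Candidate]], List[Candidate]]

def make_inference_layer(active_topics: Set[str]) -> Layer:
    # Inference: keep only candidates whose topic matches the inferred context.
    return lambda cs: [c for c in cs if c.topic in active_topics]

def make_aggregation_layer(history: Set[str], repeat_penalty: float = 0.5) -> Layer:
    # Signal aggregation: collapse raw signals into one score (simple mean),
    # damping items that earlier passes already exposed.
    def layer(cs: List[Candidate]) -> List[Candidate]:
        out = []
        for c in cs:
            score = sum(c.signals) / len(c.signals) if c.signals else 0.0
            if c.content_id in history:
                score *= repeat_penalty
            out.append(replace(c, score=score))
        return out
    return layer

def make_arbitration_layer(slots: int) -> Layer:
    # Exposure arbitration: the highest scores win the limited exposure slots.
    return lambda cs: sorted(cs, key=lambda c: c.score, reverse=True)[:slots]

def make_stabilization_layer(history: Set[str]) -> Layer:
    # Feedback stabilization: record what was exposed so the next pass can damp repeats.
    def layer(cs: List[Candidate]) -> List[Candidate]:
        history.update(c.content_id for c in cs)
        return cs
    return layer

def run_pipeline(candidates: List[Candidate], layers: List[Layer]) -> List[Candidate]:
    # Each layer receives the previous layer's output, like a chain of filters.
    return reduce(lambda acc, layer: layer(acc), layers, candidates)
```

For example, with item `a` scoring 0.8 and item `b` scoring 0.6 on the same topic and a single slot, the first pass surfaces `a`; on the second pass the recorded exposure halves `a`'s score to 0.4, so `b` wins the slot.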

Infrastructure and Scaling Constraints

AI content surfacing infrastructure must handle continuous inference under variable load conditions. Unlike search systems that respond to discrete requests, surfacing architectures operate persistently and must manage latency, memory, and throughput at all times. This requirement places additional pressure on compute orchestration and data synchronization.

Scaling challenges emerge when platforms expand across regions or devices. Systems must preserve consistent exposure logic while processing heterogeneous signals in parallel. Consequently, infrastructure design prioritizes fault tolerance and incremental updates over batch processing.

At a practical level, platforms build infrastructure that stays active even when users remain idle. The system prepares content in advance so exposure feels immediate and relevant when interaction resumes.

Signals and Decision Logic in Content Surfacing

AI content surfacing signals form the foundational decision units that guide how platforms expose information. At scale, deterministic rules fail because they cannot adapt to changing context, user behavior, and content environments. For this reason, modern systems rely on signal aggregation to infer relevance dynamically, an approach studied extensively by researchers at Berkeley Artificial Intelligence Research.

Definition: Content surfacing signals are machine-interpretable indicators used to infer relevance and exposure timing.

Claim: Signal-based decision logic enables scalable and adaptive content surfacing.

Rationale: Fixed rules cannot account for the variability and uncertainty present in real-world user contexts.

Mechanism: The system aggregates multiple signal types to estimate relevance and determine exposure priority.

Counterargument: Rule-based systems offer predictability in tightly controlled environments.

Conclusion: Signal-driven logic provides the flexibility required for continuous surfacing across diverse contexts.

Principle: In inference-driven platforms, content remains surfaceable over time when signals, terminology, and structural boundaries stay consistent enough for AI systems to resolve relevance without repeated recalculation.

Signal Categories Used by AI Platforms

Contextual content surfacing depends on the combination of several distinct signal categories. Each category captures a different aspect of user interaction or content state, which helps platforms avoid overreliance on a single indicator. This multi-signal approach improves robustness when individual signals fluctuate or degrade.

Platforms treat these signal categories as complementary rather than hierarchical. By evaluating them together, systems reduce bias and improve temporal alignment between user needs and exposed information. As a result, exposure decisions remain stable even as individual signals change.

- behavioral signals

- temporal signals

- structural signals

- contextual continuity signals

Together, these categories support the signal-based decision logic described above by ensuring that relevance estimates reflect both immediate behavior and longer-term context.

In everyday terms, the system looks at what users do, when they do it, how content is organized, and how topics connect over time. It then combines these views to decide what to show next.
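One simple way to combine the four categories is a weighted mean that skips categories whose signal is currently unavailable. The sketch below assumes each signal is already normalized to [0, 1] and uses equal default weights to reflect the complementary, non-hierarchical treatment described above. The category keys and the renormalization rule are illustrative assumptions rather than any platform's documented method.

```python
from typing import Mapping, Optional

# The four categories named above; equal default weights reflect treating
# them as complementary rather than hierarchical.
CATEGORIES = ("behavioral", "temporal", "structural", "continuity")
DEFAULT_WEIGHTS = {c: 1.0 for c in CATEGORIES}

def aggregate_relevance(
    signals: Mapping[str, Optional[float]],
    weights: Mapping[str, float] = DEFAULT_WEIGHTS,
) -> float:
    """Weighted mean over the categories that currently report a value.

    A missing or degraded category (None) is skipped and the remaining
    weights are renormalized, so one failing signal cannot zero the estimate.
    """
    available = {
        c: v for c, v in signals.items()
        if c in CATEGORIES and v is not None and weights.get(c, 0.0) > 0
    }
    total_weight = sum(weights[c] for c in available)
    if total_weight == 0:
        return 0.0
    return sum(weights[c] * v for c, v in available.items()) / total_weight
```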

Decision Thresholds and Exposure Control

Content surfacing decision logic relies on thresholds that translate aggregated signals into exposure actions. These thresholds define when content becomes visible, how prominently it appears, and how long it remains exposed. Systems adjust thresholds dynamically to respond to shifts in context or signal strength.

Control mechanisms also prevent excessive exposure or repetition. By monitoring feedback loops, platforms refine thresholds to balance discovery with cognitive load. This process ensures that surfaced content remains relevant without overwhelming users.

At a practical level, platforms set boundaries that determine when enough evidence exists to show content. When signals weaken or conflict, the system reduces exposure to maintain trust and relevance.
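The threshold logic can be sketched as a small controller. This is an illustration built on assumptions: it uses two thresholds (hysteresis), a higher one to first show content and a lower one to keep it visible, so that small fluctuations do not make content flicker in and out, plus a third threshold for prominent placement. The threshold values are hypothetical, and the article does not prescribe hysteresis specifically.

```python
from dataclasses import dataclass

@dataclass
class ExposureController:
    show_at: float = 0.7       # evidence needed before content first appears
    hide_below: float = 0.5    # lower bar for staying visible (hysteresis)
    prominent_at: float = 0.9  # evidence needed for top placement
    visible: bool = False

    def decide(self, score: float) -> str:
        # Visible content only needs to clear the lower bar, which prevents flicker
        # when an aggregated score hovers around a single threshold.
        threshold = self.hide_below if self.visible else self.show_at
        self.visible = score >= threshold
        if not self.visible:
            return "hidden"
        return "prominent" if score >= self.prominent_at else "standard"
```

Because the thresholds are plain fields, a feedback loop could raise or lower them over time, which is one way to realize dynamic threshold adjustment.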

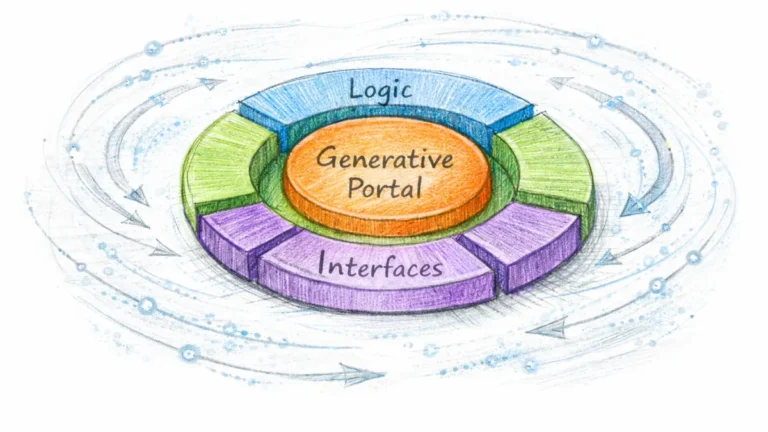

Interface-Driven Content Surfacing

Content surfacing in AI interfaces positions the interface as an active mediator rather than a passive display layer. Backend inference determines what to expose, while the frontend shapes how exposure appears, scales, and persists across interactions. Standards work from the W3C explains how adaptive interfaces translate system decisions into consistent user-facing structures.

Definition: Interface-based content surfacing is the manifestation of AI exposure decisions through adaptive UI elements.

Claim: Interfaces actively mediate content surfacing outcomes rather than merely rendering backend decisions.

Rationale: Users perceive relevance through layout, timing, and prominence, which interfaces control directly.

Mechanism: Adaptive UI elements amplify or dampen exposure by adjusting placement, hierarchy, and persistence.

Counterargument: Backend inference determines relevance regardless of presentation.

Conclusion: Interface logic co-determines surfacing effectiveness by shaping how exposure converts into attention.

Adaptive Interface Patterns

Interface-based content surfacing relies on repeatable UI patterns that translate inference into visible structure. These patterns allow platforms to adapt exposure without changing core models. As a result, interfaces respond quickly to context shifts while preserving consistent behavior.

Platforms select patterns based on interaction mode and device constraints. Each pattern supports a specific exposure goal, such as gradual reveal or immediate prominence. This alignment keeps surfacing predictable even as content changes.

- adaptive cards

- progressive disclosure panels

- conversational insertion points

Together, these patterns operationalize interface mediation by turning abstract relevance into concrete interface behavior.

In practice, platforms reuse a small set of interface patterns. Each pattern controls how much content appears and when it appears. This reuse simplifies design while preserving adaptive behavior.

Example: When a platform applies the same interface pattern to structurally similar content blocks, AI systems can reliably infer exposure intent, increasing the likelihood that those sections will be reused in assistant-generated contexts.
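Pattern selection can be expressed as a small rule table. The sketch below is hypothetical: the interaction modes, the 600 px breakpoint, and the 0.8 relevance cutoff are invented for illustration. It maps conversational contexts to insertion points, constrained or moderately relevant contexts to gradual reveal, and highly relevant content on large screens to an immediately prominent card.

```python
from enum import Enum

class Pattern(Enum):
    ADAPTIVE_CARD = "adaptive card"
    PROGRESSIVE_PANEL = "progressive disclosure panel"
    CONVERSATIONAL_INSERT = "conversational insertion point"

def select_pattern(mode: str, screen_width_px: int, relevance: float) -> Pattern:
    # Hypothetical rules; the modes, breakpoint and cutoff are illustrative only.
    if mode == "chat":
        return Pattern.CONVERSATIONAL_INSERT  # insert into the conversation flow
    if screen_width_px < 600 or relevance < 0.8:
        return Pattern.PROGRESSIVE_PANEL      # gradual reveal under constraints
    return Pattern.ADAPTIVE_CARD              # immediate prominence
```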

Exposure vs Attention Allocation

AI-mediated content exposure and user attention operate as related but distinct processes. Exposure determines what content becomes visible, while attention reflects what users actually process. Interfaces sit between these processes and influence how exposure converts into engagement.

Systems optimize exposure through layout and timing, but users allocate attention based on cognitive limits. When interfaces misalign these forces, platforms risk either underexposure or overload. Clear separation helps designers balance system intent with human capacity.

| Aspect | Exposure | Attention |
|---|---|---|
| Controlled by | AI system | User cognition |
| Measured by | Visibility | Engagement |
| Failure mode | Overexposure | Cognitive overload |

The table shows that exposure and attention follow different control loops. Interfaces must align both to ensure that surfaced content remains useful rather than intrusive.
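Because exposure and attention follow separate loops, they can be measured separately and compared. The sketch below treats the ratio of engagements to exposures as a rough conversion measure and flags the two misalignments described above. The counters and all threshold values are illustrative assumptions.

```python
from dataclasses import dataclass

@dataclass
class ExposureStats:
    exposures: int     # system-side loop: times the content was made visible
    engagements: int   # user-side loop: times users actually engaged with it

    @property
    def conversion(self) -> float:
        # Share of exposures that turned into attention.
        return self.engagements / self.exposures if self.exposures else 0.0

def diagnose(stats: ExposureStats, min_exposures: int = 10, max_exposures: int = 50,
             low_conversion: float = 0.05, high_conversion: float = 0.3) -> str:
    if stats.exposures > max_exposures and stats.conversion < low_conversion:
        return "overexposure"   # visibility keeps growing but attention does not follow
    if stats.exposures < min_exposures and stats.conversion > high_conversion:
        return "underexposure"  # users engage when they see it, but rarely see it
    return "balanced"
```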

Autonomous and Predictive Content Surfacing

Autonomous content surfacing introduces exposure without direct user interaction. Systems initiate visibility based on inferred relevance rather than explicit requests. Research from DeepMind describes how predictive models enable this shift while also defining practical limits for autonomy.

Definition: Autonomous content surfacing occurs when AI initiates exposure without user interaction.

Claim: Autonomous surfacing expands discovery beyond user-initiated actions.

Rationale: Users rarely express all informational needs through explicit behavior.

Mechanism: Predictive models estimate future relevance windows using contextual signals.

Counterargument: Excessive autonomy can reduce relevance if predictions fail.

Conclusion: Controlled autonomy improves discovery when systems constrain prediction scope.

Predictive Timing Models

Predictive content surfacing relies on models that estimate when information becomes relevant rather than reacting to immediate input. These models analyze temporal patterns, session continuity, and contextual momentum to anticipate exposure timing. As a result, systems align content appearance with inferred readiness instead of explicit demand.

Timing models also introduce risk management requirements. Platforms must balance early exposure against delayed relevance to avoid user fatigue. Consequently, designers tune prediction windows to favor usefulness over novelty.

| Model | Trigger | Risk |
|---|---|---|
| Reactive | User action | Missed discovery |
| Predictive | AI inference | Irrelevance |

The comparison shows that predictive models trade certainty for reach. Systems gain discovery potential but must manage timing precision.

In simple terms, reactive systems wait for a click. Predictive systems decide in advance when content might matter. The challenge lies in choosing the right moment.
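A very simple predictive timing model can estimate the next relevance window from the rhythm of past engagement. The sketch assumes that engagement with a topic recurs at roughly regular intervals, which real behavior often violates. It centers the window one mean gap after the last event, widens it by the standard deviation of the gaps, and falls back to reactive behavior when history is too short. The function names and the `lead` parameter are hypothetical.

```python
from statistics import mean, pstdev
from typing import List, Optional, Tuple

def predict_window(event_times: List[float]) -> Optional[Tuple[float, float]]:
    """Estimate the next relevance window from past engagement times.

    Assumes engagement recurs at a roughly regular interval: the window is
    centered one mean gap after the last event and widened by one
    (population) standard deviation of the gaps on each side.
    """
    if len(event_times) < 3:
        return None  # too little history to estimate a rhythm
    ts = sorted(event_times)
    gaps = [later - earlier for earlier, later in zip(ts, ts[1:])]
    expected = ts[-1] + mean(gaps)
    spread = pstdev(gaps)
    return expected - spread, expected + spread

def should_surface(now: float, event_times: List[float], lead: float = 0.0) -> bool:
    # Predictive trigger: surface slightly before (by `lead`) and during the window.
    window = predict_window(event_times)
    if window is None:
        return False  # fall back to reactive behavior and wait for a user action
    start, end = window
    return start - lead <= now <= end
```

The `lead` parameter is where the trade-off from the table shows up: a larger lead surfaces content earlier and extends reach, but also raises the risk of irrelevance.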

Failure Modes of Autonomy

AI content surfacing dynamics reveal specific failure modes when autonomy exceeds contextual accuracy. Systems may surface content too early, repeat exposure excessively, or misinterpret weak signals as strong intent. These issues reduce trust and dilute relevance.

Platforms mitigate these risks through feedback stabilization and exposure decay. By monitoring interaction outcomes, systems adjust autonomy levels and suppress low-confidence predictions. This adaptive control maintains balance between initiative and restraint.

At a practical level, autonomy works best within boundaries. Platforms allow AI to act first but require evidence to sustain exposure. This approach limits error while preserving discovery benefits.
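The principle of letting AI act first but requiring evidence to sustain exposure can be sketched with exponential decay. In this illustrative version, each exposure that draws no engagement halves an item's effective confidence, engagement resets the decay, and anything below a confidence floor is suppressed. The decay factor and the 0.6 floor are assumptions, not values from the article.

```python
from dataclasses import dataclass

@dataclass
class AutonomousItem:
    confidence: float   # the model's initial relevance estimate, in [0, 1]
    ignored: int = 0    # consecutive exposures that drew no engagement

    def record(self, engaged: bool) -> None:
        # Engagement is evidence that sustains exposure; silence counts against it.
        self.ignored = 0 if engaged else self.ignored + 1

    def effective_confidence(self, decay: float = 0.5) -> float:
        # Exposure decay: each ignored exposure multiplies confidence by `decay`.
        return self.confidence * decay ** self.ignored

def may_surface(item: AutonomousItem, min_confidence: float = 0.6) -> bool:
    # Suppress low-confidence predictions instead of surfacing them anyway.
    return item.effective_confidence() >= min_confidence
```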

Distributed and Cross-Platform Surfacing

Distributed content surfacing explains how exposure logic extends beyond a single platform or interface. Modern ecosystems coordinate content visibility across applications, devices, and interaction layers. Policy and research analysis from the OECD highlights how cross-platform coordination reshapes information access at scale.

Definition: Distributed content surfacing refers to exposure logic spanning multiple platforms and interfaces.

Claim: Cross-platform distribution is required for consistent content surfacing in fragmented digital ecosystems.

Rationale: Users consume information across multiple environments rather than within isolated platforms.

Mechanism: Systems coordinate inference and exposure logic across surfaces to preserve relevance continuity.

Counterargument: Platform silos limit the feasibility of unified distribution.

Conclusion: Distributed surfacing enables continuity when platforms align exposure logic across boundaries.

Cross-Platform Coordination Models

Content surfacing across platforms relies on coordination models that synchronize how relevance is inferred and applied. These models ensure that exposure decisions remain consistent even when users move between environments. Without coordination, platforms risk duplicating content or fragmenting discovery.

Coordination also reduces latency between exposure events. When systems share inference outcomes, platforms avoid recalculating relevance independently. This alignment improves efficiency and preserves contextual continuity.

- shared inference signals

- synchronized exposure timing

- federated content identity

Together, these coordination models extend relevance decisions beyond a single surface.

In practice, platforms exchange limited signals rather than full content. This approach preserves autonomy while enabling coordinated exposure.
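The three coordination elements can be sketched as a shared exposure ledger. The example assumes a hypothetical federated identity scheme that hashes a content item's canonical URL, so every platform derives the same ID. Platforms publish only that ID, their name, and a timestamp, and consult the ledger to avoid exposing the same item on several surfaces within a cooldown window. The ledger class and the one-hour cooldown are illustrative.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, Tuple

def federated_id(canonical_url: str) -> str:
    # Hypothetical identity scheme: every platform derives the same ID from the
    # content's canonical URL, so no platform needs the others' internal IDs.
    return hashlib.sha256(canonical_url.strip().encode("utf-8")).hexdigest()[:16]

@dataclass
class SharedExposureLedger:
    cooldown_s: float = 3600.0  # assumed minimum gap between exposures across surfaces
    # content ID -> (platform that last exposed it, time of that exposure)
    last_exposed: Dict[str, Tuple[str, float]] = field(default_factory=dict)

    def publish(self, content_id: str, platform: str, at: float) -> None:
        # Only the ID, platform name and timestamp are shared, never the content.
        self.last_exposed[content_id] = (platform, at)

    def may_expose(self, content_id: str, now: float) -> bool:
        # Synchronized timing: every surface, including the publisher, waits out the cooldown.
        entry = self.last_exposed.get(content_id)
        return entry is None or now - entry[1] >= self.cooldown_s
```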

Governance and Control Layers

Content surfacing architecture requires governance structures that regulate how exposure logic propagates across platforms. These layers define who controls inference, how conflicts are resolved, and which signals are trusted. Clear governance prevents inconsistent or contradictory exposure outcomes.

Control mechanisms also address accountability. When multiple platforms contribute to surfacing decisions, systems must trace decision origins and enforce compliance. As a result, governance becomes a core architectural component rather than an external policy concern.

At a practical level, governance layers act as coordination rules. They determine how platforms align exposure decisions without centralizing control. This balance supports scalability while maintaining system integrity.
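A governance layer can be sketched as a filter that accepts signals only from trusted sources, applies an explicit conflict rule, and records where each decision came from. In the hypothetical version below, conflicts are resolved conservatively by taking the lowest trusted score, and every decision is appended to an audit log. Both the rule and the log format are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Set

@dataclass(frozen=True)
class Signal:
    source: str       # platform that produced the signal
    content_id: str
    score: float      # relevance estimate in [0, 1]

def govern(signals: Iterable[Signal], trusted_sources: Set[str], audit_log: List[dict]) -> Dict[str, float]:
    # Group incoming signals by the content item they describe.
    by_item: Dict[str, List[Signal]] = {}
    for s in signals:
        by_item.setdefault(s.content_id, []).append(s)

    decisions: Dict[str, float] = {}
    for content_id, group in by_item.items():
        accepted = [s for s in group if s.source in trusted_sources]
        if accepted:
            # Assumed conflict rule: the most conservative trusted score wins.
            decisions[content_id] = min(s.score for s in accepted)
        # Traceability: record which sources were used and which were ignored.
        audit_log.append({
            "content_id": content_id,
            "used": sorted(s.source for s in accepted),
            "ignored": sorted(s.source for s in group if s.source not in trusted_sources),
        })
    return decisions
```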

Strategic Implications for Content Producers

An AI content surfacing strategy requires producers to adapt content structure to machine-mediated exposure rather than to human-driven ranking systems. This shift moves emphasis away from keyword competition and toward interpretability, consistency, and reuse across AI platforms. Research from the Harvard Data Science Initiative frames this transition as a structural challenge rather than a tactical optimization task.

Definition: An AI content surfacing strategy defines how content is structured for machine-mediated exposure.

Claim: Content producers must align structure with AI interpretation rather than search ranking signals.

Rationale: AI systems select content based on clarity, stability, and semantic coherence instead of competitive positioning.

Mechanism: Producers design content as modular units that AI systems can interpret, extract, and reuse reliably.

Counterargument: High-quality content can surface organically without structural alignment.

Conclusion: Intentional structural alignment increases consistency and reuse across AI-driven platforms.

Structural Readiness Criteria

AI content surfacing frameworks define concrete criteria that determine whether content is ready for machine-mediated exposure. These criteria focus on how information is organized rather than how it is promoted. Platforms evaluate structure as a proxy for interpretability and reliability.

Producers who meet these criteria reduce ambiguity and improve extraction accuracy. As a result, AI systems can surface content more confidently across contexts and interfaces.

- stable semantic units

- explicit definitions

- predictable hierarchy

Together, these criteria translate strategic intent into operational structure that supports long-term surfacing.

In practice, readiness means that each section stands on its own. AI systems can understand and reuse content without relying on surrounding context.
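The readiness criteria can be partially checked automatically. The sketch below assumes content is already split into sections with a heading level, title, and body. It flags skipped heading levels (unpredictable hierarchy), empty sections (unstable units), and H2 sections without an explicit `Definition:` line, a convention borrowed from this article's own layout rather than any platform requirement.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Section:
    level: int    # heading level: 2 for H2, 3 for H3, and so on
    title: str
    body: str

def readiness_issues(sections: List[Section]) -> List[str]:
    issues = []
    prev_level = 1  # assume the page title is the single H1
    for s in sections:
        # Predictable hierarchy: a heading may go at most one level deeper.
        if s.level > prev_level + 1:
            issues.append(f"'{s.title}': jumps from H{prev_level} to H{s.level}")
        # Stable semantic units: every section should carry content of its own.
        if not s.body.strip():
            issues.append(f"'{s.title}': empty section")
        # Explicit definitions: here required only for top-level (H2) sections.
        if s.level == 2 and "Definition:" not in s.body:
            issues.append(f"'{s.title}': no explicit definition")
        prev_level = s.level
    return issues
```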

Semantic Stability and Reuse

AI-guided content surfacing depends on semantic stability over time. When terminology shifts or structure changes unpredictably, systems struggle to maintain relevance and continuity. Stable language and consistent organization enable AI systems to map content reliably across updates.

Reuse also depends on how content segments interconnect. Systems favor content that maintains internal consistency while remaining adaptable to new contexts. This balance supports exposure across multiple platforms without duplication.

At a practical level, producers benefit from writing content that behaves predictably. When structure and meaning remain stable, AI systems reuse content more often and with greater confidence.
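Terminology stability between two versions of a section can be approximated by comparing which controlled-vocabulary terms each version uses. The sketch below computes the Jaccard similarity of those term sets: 1.0 means the same key terms appear, and lower values indicate drift. The vocabulary list and any alert threshold are assumptions that a producer would define for their own content.

```python
import re
from typing import Iterable, Set

def term_set(text: str, vocabulary: Iterable[str]) -> Set[str]:
    # Which controlled-vocabulary terms occur in the text, matched as whole words.
    lowered = text.lower()
    return {
        term for term in vocabulary
        if re.search(r"\b" + re.escape(term.lower()) + r"\b", lowered)
    }

def terminology_stability(old: str, new: str, vocabulary: Iterable[str]) -> float:
    """Jaccard similarity between the key terms of two versions of a section.

    1.0 means the same terms are used; lower values signal terminology drift.
    """
    vocab = list(vocabulary)
    a, b = term_set(old, vocab), term_set(new, vocab)
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)
```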

Checklist:

- Are core surfacing concepts defined with stable, unambiguous terminology?

- Do H2–H4 boundaries clearly separate inference layers and exposure logic?

- Does each paragraph express a single interpretable reasoning unit?

- Are abstract mechanisms supported by structurally consistent examples?

- Is ambiguity reduced through local definitions and controlled transitions?

- Does the overall structure support sequential AI interpretation across sections?

Long-Term Evolution of AI Content Surfacing

The future of AI content surfacing positions surfacing as the default layer for accessing knowledge across AI-driven environments. As systems rely more on inference than explicit intent, institutional and regulatory frameworks increasingly influence how exposure decisions operate. Analysis from the Oxford Internet Institute frames this trajectory as a structural shift in how societies interact with information systems.

Definition: The future of AI content surfacing refers to AI-mediated information exposure as the dominant discovery model.

Claim: Content surfacing will become the primary mechanism for knowledge access in AI-driven platforms.

Rationale: Query-centric discovery cannot scale to continuous, context-aware information environments.

Mechanism: AI systems replace episodic retrieval with persistent inference that aligns exposure to evolving context.

Counterargument: Regulatory constraints and public trust concerns may slow adoption.

Conclusion: Despite constraints, surfacing defines the long-term direction of AI-mediated discovery.

Institutional and Regulatory Factors

The evolution of AI content surfacing increasingly depends on institutional alignment and regulatory oversight. Governments and standards bodies influence how platforms balance autonomy, transparency, and accountability. These forces shape exposure logic by defining acceptable inference practices and data usage boundaries.

Regulation also affects interoperability across platforms. When institutions mandate consistency and explainability, systems must adjust surfacing logic to remain compliant. As a result, evolution occurs through coordinated adaptation rather than unchecked experimentation.

In practical terms, institutions set the rules within which surfacing operates. Platforms adapt their systems to comply while continuing to infer relevance at scale. This interaction defines the pace and direction of change.

Micro-case: Surfacing-First AI Platforms

Several AI platforms now prioritize surfacing over search as the primary interaction model. These systems present information proactively within workflows rather than waiting for explicit requests. Over time, users rely on surfaced content as a default source of guidance and context.

This pattern shows how surfacing-first design reduces friction in knowledge access. Platforms integrate exposure seamlessly into daily tasks, which normalizes inference-driven discovery. As adoption grows, surfacing becomes an expected capability rather than a novel feature.

Interpretive Structure of Inference-Based Content Surfacing

- Hierarchical signal separation. The layered H2→H3→H4 structure enables AI systems to distinguish conceptual scope, supporting precise segmentation of inference-driven exposure logic.

- Inference-aligned layout continuity. Recurrent structural patterns across sections establish stable interpretation paths for generative systems operating on long-context representations.

- Embedded semantic anchoring. Localized definitions and bounded conceptual blocks reduce dependency on probabilistic inference by providing direct semantic grounding.

- Exposure logic traceability. Consistent alignment between headings, explanatory depth, and reasoning chains allows AI models to trace how exposure decisions conceptually propagate.

- Cross-section structural coherence. Uniform architectural logic across the page preserves interpretability when AI systems aggregate signals across distributed content segments.

This structural layer clarifies how generative systems interpret page architecture as a coherent inference surface, independent of specific content intent or interaction patterns.

FAQ: AI Content Surfacing

What is AI content surfacing?

AI content surfacing refers to the process by which AI-driven platforms infer what information to expose based on context, signals, and relevance rather than explicit user queries.

How does content surfacing differ from search?

Search responds to explicit requests, while content surfacing operates continuously by anticipating relevance through inference and contextual evaluation.

Why do AI platforms rely on surfacing mechanisms?

AI platforms rely on surfacing because users rarely express all informational needs explicitly, especially in complex or evolving contexts.

What factors influence content surfacing decisions?

AI systems evaluate behavioral patterns, temporal signals, structural context, and continuity across interactions to determine exposure.

What role do interfaces play in content surfacing?

Interfaces mediate how inferred relevance becomes visible by shaping layout, timing, and prominence of surfaced content.

Can content surfacing operate across platforms?

Yes. Distributed surfacing enables coordinated exposure across platforms and devices, preserving relevance continuity beyond a single interface.

How does autonomy affect content surfacing?

Autonomous surfacing allows AI systems to initiate exposure proactively, while feedback mechanisms constrain relevance drift.

Why does structure matter for content surfacing?

Clear structure helps AI systems interpret, segment, and reuse content reliably within inference-based exposure models.

How is content surfacing expected to evolve?

Content surfacing is expected to become the default knowledge access layer as AI systems increasingly replace query-driven discovery.

What does content surfacing change for information access?

It shifts access from reactive retrieval toward continuous, context-aware exposure aligned with inferred informational states.

Glossary: Key Terms in AI Content Surfacing

This glossary defines the core terminology used throughout the article to support consistent interpretation by both readers and AI-driven systems.

Content Surfacing

An AI-mediated process in which systems infer what information to expose based on contextual relevance rather than explicit user queries.

Inference-Based Exposure

A decision model where AI systems determine content visibility through probabilistic inference over signals and context.

Surfacing Pipeline

A multi-layer architectural sequence that processes signals, evaluates relevance, and governs content exposure over time.

Exposure Logic

The internal decision framework that determines when, where, and how content becomes visible within AI-driven platforms.

Contextual Signals

Machine-interpretable indicators derived from behavior, timing, structure, and continuity that inform relevance inference.

Interface Mediation

The process by which user interfaces shape how inferred relevance is manifested through layout, timing, and prominence.

Autonomous Surfacing

A surfacing mode in which AI systems initiate content exposure without direct user interaction.

Predictive Timing

The estimation of future relevance windows used to align content exposure with anticipated informational needs.

Distributed Surfacing

An exposure model where content visibility is coordinated across multiple platforms, devices, or interfaces.

Structural Interpretability

The degree to which content structure enables AI systems to segment, interpret, and reuse meaning consistently.