Last Updated on December 20, 2025 by PostUpgrade

How Generative Engines Read and Rank Pages

Generative engines are increasingly replacing classical search systems as a primary interface for information discovery. These systems apply internal ranking logic to decide which pages enter an answer and how strongly each page influences the generated output. As a result, ranking in generative environments operates as a reasoning process rather than a competition for link positions.

Generative Engines as Ranking Systems

Generative engines operate as ranking systems rather than retrieval indexes, and their ranking logic governs which information is selected during answer synthesis, as established in research by Stanford NLP. Unlike classical search architectures, these systems do not pre-order documents but instead resolve relevance dynamically while constructing outputs. This section explains the architectural distinction between retrieval-first and reasoning-first systems and limits the scope strictly to ranking behavior inside generative engines.

Definition: AI understanding is the ability of a generative system to interpret meaning, structure, and semantic boundaries in a way that enables consistent ranking decisions, reliable inclusion, and stable reuse of page representations during answer generation.

Generative engine: A generative engine is a system that constructs outputs by selecting, weighting, and combining information units through probabilistic inference rather than returning pre-ranked results.

Claim: Generative engines function as ranking systems because they must continuously prioritize information units during answer construction.

Rationale: Generative systems operate under strict context, coherence, and capacity constraints, which force them to decide which information can influence the final output state.

Mechanism: The engine evaluates candidate information units, assigns relative influence weights based on semantic alignment and internal consistency, and integrates only those units that stabilize the generated answer.

Counterargument: In small or tightly curated environments, generative systems may appear to generate outputs without explicit ranking behavior.

Conclusion: However, even in constrained settings, generative engines still apply implicit prioritization logic, which confirms that ranking remains a foundational function of generative systems.

Difference Between Index-Based and Generative Ranking

Index-based ranking systems rely on precomputed document ordering that exists before any query interaction occurs. In contrast, generative ranking unfolds during answer construction, which shifts ranking from a static sorting operation to a real-time reasoning process. As a result, the unit of ranking changes from links to internal representations that compete for inclusion.

| System Type | Ranking Unit | Decision Basis | Output Form |
|---|---|---|---|
| Index-based search | Web pages | Precomputed signals | Ordered links |
| Generative engine | Information units | Probabilistic inference | Synthesized answers |

This comparison demonstrates that generative ranking replaces positional ordering with contextual prioritization embedded directly into generation logic.

Simply stated, traditional systems rank pages before interaction, while generative systems rank ideas while thinking.

Why Ranking Exists in Generative Systems

Generative systems require ranking because they cannot process all available information simultaneously. Moreover, ranking allows these systems to resolve conflicts between sources and maintain internal consistency. Consequently, ranking persists even when no visible result list exists.

- Generative systems must limit context size to remain computationally viable.

- They must prioritize information that supports factual coherence.

- They must suppress redundant or weakly supported content.

- They must align generated output with inferred intent and context.

Therefore, ranking exists as an internal control mechanism that enables generative systems to produce stable and interpretable answers.

Put simply, even without blue links, generative systems must still choose what matters and what does not.
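The capacity constraint described above can be sketched as a greedy selection under a fixed budget. This is a minimal illustration, not a description of how any real engine works: the `Unit` class, the `relevance` score, the `tokens` size estimate, and the budget value are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Unit:
    text: str
    relevance: float  # hypothetical relevance score in [0, 1]
    tokens: int       # hypothetical size estimate for the unit


def select_within_budget(units, budget_tokens):
    """Greedily keep the most relevant units that still fit in the budget."""
    chosen = []
    used = 0
    for unit in sorted(units, key=lambda u: u.relevance, reverse=True):
        if used + unit.tokens <= budget_tokens:
            chosen.append(unit)
            used += unit.tokens
    return chosen


units = [
    Unit("definition of the topic", 0.9, 40),
    Unit("supporting statistic", 0.7, 30),
    Unit("loosely related anecdote", 0.2, 50),
]
selected = select_within_budget(units, budget_tokens=80)
print([u.text for u in selected])
```

With a budget of 80, the two most relevant units fit and the anecdote is left out, even though nothing in the output resembles a ranked list.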

How Generative Engines Rank Pages

Generative engines rank pages inside generated answers rather than across visible result lists, which means ranking is applied at the page level during generation, as outlined in research from MIT CSAIL. In generative environments, pages remain the primary evaluation unit because they encapsulate structured, authored knowledge rather than isolated fragments. This section explains why page-level ranking persists, how it operates during generation, and where its boundaries lie.

Page representation: A page representation is a compressed semantic object that aggregates the page’s concepts, facts, structure, and contextual signals into a form usable by a generative reasoning system.

Claim: Generative engines rank pages by evaluating their representations during answer construction rather than assigning them fixed positions.

Rationale: Pages provide stable semantic boundaries that allow generative systems to assess completeness, reliability, and internal consistency at scale.

Mechanism: The engine transforms each page into a semantic representation, evaluates its relevance and coherence against the active query context, and assigns it a relative influence score that determines whether and how strongly it contributes to the generated answer.

Counterargument: Some generative systems appear to operate on smaller text chunks or passages instead of full pages.

Conclusion: Even when passage-level processing occurs, the underlying ranking logic still aggregates signals at the page level to ensure semantic stability and attribution consistency.
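One way to picture passage-level scores being rolled up to the page level is a simple group-and-average step. The sketch below assumes passage scores already exist. The function name `aggregate_by_page`, the page labels, and the choice of a mean are illustrative assumptions, not a documented method.

```python
from collections import defaultdict


def aggregate_by_page(passage_scores):
    """Average passage-level scores per page.

    passage_scores: iterable of (page_id, score) pairs.
    """
    grouped = defaultdict(list)
    for page_id, score in passage_scores:
        grouped[page_id].append(score)
    return {page_id: sum(scores) / len(scores) for page_id, scores in grouped.items()}


passages = [("page_a", 0.8), ("page_a", 0.6), ("page_b", 0.9)]
page_scores = aggregate_by_page(passages)
```

Other aggregations, such as the maximum or a top-k mean, would fit the same description. The mean is used here only because it is the simplest.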

Signals Used for Page Ranking

Generative engines rely on a defined set of signals to evaluate page representations during ranking. These signals do not function independently but interact to shape the final inclusion decision. As a result, page ranking emerges from combined semantic evaluation rather than isolated metrics.

- Semantic completeness, which reflects how fully a page covers the conceptual scope of a topic.

- Factual density, which measures the concentration of verifiable statements relative to narrative filler.

- Internal consistency, which indicates whether claims within the page align without contradiction.

Together, these signals allow generative systems to distinguish between pages that merely mention a topic and pages that can reliably support answer generation.

Simply put, pages rank higher when they explain more, prove more, and contradict themselves less.
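Because the three signals are said to interact rather than act independently, one illustrative way to combine them is a geometric mean, where a single weak signal pulls the whole score down. The function name, the assumption that each signal is already scaled to [0, 1], and the choice of a geometric mean are all hypothetical.

```python
import math


def page_score(completeness, factual_density, consistency):
    """Geometric mean of three signals, each assumed to lie in [0, 1]."""
    signals = (completeness, factual_density, consistency)
    if any(not 0.0 <= s <= 1.0 for s in signals):
        raise ValueError("signals must lie in [0, 1]")
    return math.prod(signals) ** (1 / 3)


balanced = page_score(0.6, 0.6, 0.6)
lopsided = page_score(0.9, 0.9, 0.1)  # strong coverage, but self-contradictory
```

Under this sketch the balanced page outscores the lopsided one, which mirrors the idea that a page cannot compensate for contradictions with coverage alone.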

Ranking Without SERP Positions

Generative engines perform ranking even when no visible positions exist, which changes how ordering manifests in the output. Instead of presenting ranked lists, these systems apply implicit ordering during answer synthesis. Consequently, ranking influences inclusion, emphasis, and omission rather than position numbers.

Implicit ordering in generative answers

Implicit ordering occurs when generative systems prioritize certain pages as primary sources while relegating others to secondary influence. This prioritization shapes which facts appear earlier, which explanations receive more detail, and which perspectives dominate the answer. Therefore, ranking still governs informational hierarchy even without explicit positions.

This process ensures that the most relevant page representations anchor the response, while less relevant ones provide limited or no contribution.

In simple terms, the engine decides which pages lead the answer and which ones stay in the background.

Confidence-weighted inclusion logic

Generative engines apply confidence-weighted inclusion logic to determine how strongly each page affects the final output. Pages with higher confidence signals exert greater influence on wording, structure, and factual framing. Conversely, pages with weaker signals may only contribute minor details or be excluded entirely.

This weighting mechanism allows generative systems to manage uncertainty and avoid over-reliance on marginal sources. As a result, ranking directly controls answer reliability rather than surface visibility.

Put simply, pages the engine trusts more shape the answer more.
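Confidence-weighted inclusion can be sketched as a threshold followed by normalization: pages below a minimum confidence are dropped, and the rest share influence in proportion to their confidence. The confidence values, the `min_confidence` cutoff, and the page labels are assumptions made for illustration.

```python
def influence_weights(confidences, min_confidence=0.3):
    """Drop low-confidence pages, then normalize the rest so weights sum to 1."""
    kept = {page: c for page, c in confidences.items() if c >= min_confidence}
    total = sum(kept.values())
    if total == 0:
        return {}
    return {page: c / total for page, c in kept.items()}


weights = influence_weights({"page_a": 0.9, "page_b": 0.6, "page_c": 0.1})
```

Here `page_c` is excluded entirely, while `page_a` receives a larger share of influence than `page_b`.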

Generative Ranking Mechanisms

Generative ranking mechanisms describe the internal decision processes that determine which information paths influence an answer, as detailed in research from Berkeley Artificial Intelligence Research (BAIR). These mechanisms separate simple heuristics from learned reasoning by relying on probabilistic inference rather than fixed rules. This section focuses on how decisions form inside the model and clarifies the boundary between rule-based shortcuts and adaptive reasoning.

Ranking mechanism: A ranking mechanism is an inference path selection process that determines which candidate representations receive influence during answer generation.

Claim: Generative ranking mechanisms rely on inference path selection rather than static scoring rules.

Rationale: Fixed heuristics cannot adapt to varying contexts, while generative systems must adjust decisions as context evolves during generation.

Mechanism: The system evaluates multiple inference paths in parallel, estimates their contribution to answer stability, and selects paths that maximize coherence and relevance.

Counterargument: In narrowly defined tasks, heuristic shortcuts can approximate ranking outcomes without full inference.

Conclusion: However, at scale and across diverse topics, learned inference paths provide the only reliable mechanism for generative ranking.

Embedding Space and Relevance Weighting

Generative systems project page representations into a shared embedding space to compare semantic proximity. Within this space, relevance weighting determines how strongly each representation can influence the answer. Therefore, ranking emerges from distance and alignment relationships rather than explicit position values.

As context updates during generation, relevance weights shift to reflect new constraints. Consequently, ranking remains fluid and responsive to evolving semantic focus instead of remaining fixed after initial evaluation.

| Signal Type | Representation Layer | Effect on Rank |
|---|---|---|
| Semantic alignment | Embedding space | Increases inclusion probability |
| Contextual fit | Attention layer | Adjusts influence strength |
| Topical proximity | Latent similarity | Stabilizes ranking position |

In simple terms, pages closer to the current meaning space matter more.
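The distance-based weighting described in this section can be sketched with cosine similarity followed by a softmax. The two-dimensional vectors, the `temperature` parameter, and the page labels are toy assumptions, and real embeddings have far more dimensions.

```python
import math


def cosine(a, b):
    """Cosine similarity between two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def relevance_weights(context_vec, page_vecs, temperature=0.1):
    """Softmax over cosine similarities; weights sum to 1."""
    sims = {name: cosine(context_vec, vec) for name, vec in page_vecs.items()}
    top = max(sims.values())  # subtract the max for numerical stability
    exps = {name: math.exp((s - top) / temperature) for name, s in sims.items()}
    total = sum(exps.values())
    return {name: e / total for name, e in exps.items()}


pages = {"near": [0.9, 0.1], "far": [0.1, 0.9]}
before = relevance_weights([1.0, 0.0], pages)
after = relevance_weights([0.0, 1.0], pages)  # the context has shifted
```

When the context vector moves, the same two pages swap their relative weights, which is the fluid behavior the paragraph above describes.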

Consistency and Redundancy Suppression

Generative ranking mechanisms actively suppress inconsistent information to preserve answer stability. At the same time, they reduce redundancy by limiting repeated concepts from multiple sources. As a result, ranking supports clarity rather than volume.

These suppression processes operate continuously during generation, which prevents low-value repetition from diluting the response. Therefore, ranking mechanisms act as quality control rather than mere selection filters.

- The system downweights conflicting statements to avoid contradiction.

- It limits repeated facts that add no new information.

- It prioritizes sources that maintain internal coherence.

Taken together, these actions ensure that generative ranking mechanisms favor concise, consistent contributions over sheer quantity.

Put simply, the engine keeps what fits and removes what repeats or conflicts.
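Redundancy suppression resembles a well-known selection heuristic, maximal marginal relevance (MMR), which trades relevance against similarity to what has already been selected. The sketch below uses MMR with word-overlap (Jaccard) similarity as a stand-in. The source does not say generative engines use MMR, and the `trade_off` value and example texts are assumptions. Conflict detection is not modeled here.

```python
def jaccard(a, b):
    """Word-overlap similarity between two strings."""
    set_a, set_b = set(a.lower().split()), set(b.lower().split())
    union = set_a | set_b
    return len(set_a & set_b) / len(union) if union else 0.0


def select_diverse(candidates, k, trade_off=0.7):
    """Pick up to k texts, penalizing overlap with already selected ones.

    candidates: list of (text, relevance) pairs.
    """
    remaining = list(candidates)
    selected = []
    while remaining and len(selected) < k:
        def mmr(item):
            text, relevance = item
            redundancy = max((jaccard(text, s) for s in selected), default=0.0)
            return trade_off * relevance - (1 - trade_off) * redundancy

        best = max(remaining, key=mmr)
        selected.append(best[0])
        remaining.remove(best)
    return selected


candidates = [
    ("solar panels convert sunlight into electricity", 0.9),
    ("solar panels convert sunlight into electric power", 0.85),
    ("panel output drops in shade", 0.6),
]
picked = select_diverse(candidates, k=2)
```

The near-duplicate second statement loses to the less relevant but novel third one, so the selection favors new information over repetition.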

Generative Search Ranking Behavior

Generative search ranking becomes visible through how users experience answers rather than how they scan result lists, which reflects findings discussed by the Oxford Internet Institute. In this environment, ranking expresses itself through selection, emphasis, and omission instead of clicks. This section explains how ranking behavior shapes visibility at the behavioral layer where no explicit interaction signals exist.

Generative search: Generative search is an answer-synthesis environment where systems construct responses by selecting and integrating information units rather than presenting ranked results.

Claim: Generative search ranking determines user-visible outcomes by controlling which information appears and how prominently it is presented.

Rationale: Users interact with synthesized answers, so ranking must operate before presentation to manage attention and comprehension.

Mechanism: The system assigns influence weights to candidate pages during generation, which determines inclusion order, emphasis, and narrative framing within the answer.

Counterargument: Some argue that without clicks, ranking loses behavioral relevance.

Conclusion: However, ranking remains behaviorally significant because it governs what users see, trust, and remember.

Principle: In generative ranking systems, content gains influence when its structure, definitions, and semantic boundaries remain stable enough to be interpreted consistently across inference contexts.

Visibility vs Selection

Visibility in generative search differs from selection because users do not choose between alternatives presented side by side. Instead, the system pre-selects content that becomes visible within the answer. Therefore, ranking shifts from guiding choice to shaping exposure.

This change alters feedback loops because user behavior reflects consumption rather than navigation. Consequently, ranking behavior must anticipate informational impact rather than respond to clicks.

Simply stated, users see what the system selects before they can choose.

Example: A page with clearly defined concepts, stable terminology, and consistent internal logic allows generative systems to segment meaning accurately, which increases the probability that its core sections will shape synthesized answers.

Inclusion probability

Inclusion probability defines the likelihood that a page contributes to a generated answer. Pages with higher inclusion probability shape the main narrative and supply core facts. As a result, ranking influences not only presence but also informational authority.

This probability adjusts dynamically as context evolves, which allows the system to emphasize sources that best fit the emerging answer. Therefore, inclusion probability functions as a continuous ranking signal rather than a fixed threshold.

In simple terms, some pages almost always appear, while others rarely make it in.

Exclusion by insufficiency

Exclusion by insufficiency occurs when a page fails to meet minimum relevance or consistency requirements. Such pages do not contribute even if they mention the topic. Consequently, ranking eliminates weak sources before generation completes.

This exclusion protects answer coherence and reduces noise. As a result, ranking behavior prioritizes sufficiency over breadth.

Put simply, pages that do not add enough value stay out.
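Inclusion probability and exclusion by insufficiency can be sketched together as a hard minimum gate followed by a smooth probability curve. The logistic function, the thresholds, and the `steepness` value are illustrative assumptions rather than known parameters of any engine.

```python
import math


def inclusion_probability(relevance, consistency,
                          min_relevance=0.4, min_consistency=0.5,
                          steepness=8.0):
    """Return 0.0 for insufficient pages, else a logistic score in (0, 1)."""
    if relevance < min_relevance or consistency < min_consistency:
        return 0.0  # excluded by insufficiency, regardless of other strengths
    combined = (relevance + consistency) / 2
    return 1 / (1 + math.exp(-steepness * (combined - 0.5)))


strong = inclusion_probability(0.9, 0.9)
moderate = inclusion_probability(0.6, 0.6)
insufficient = inclusion_probability(0.3, 0.9)  # mentions the topic, but barely
```

The gate captures the idea that a page can be dropped outright, while the curve captures the idea that inclusion is a matter of degree for pages that pass.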

A page that ranks highly in classical search may attract clicks through strong optimization and backlinks. However, the same page may receive minimal influence in a generative answer if it lacks semantic completeness or internal consistency. In contrast, a lower-traffic page with dense factual coverage may dominate the generative response. This contrast shows how generative search ranking behavior redefines visibility independently of traditional performance metrics.

Generative Engine Ranking Signals

Generative engine ranking signals define how systems evaluate information without relying on traditional SEO terminology, as formalized in web architecture principles maintained by the W3C. In generative environments, signals differ from ranking factors because they act as inputs to inference rather than fixed levers that determine positions. This section systematizes these signals and clarifies their role within probabilistic decision-making.

Ranking signal: A ranking signal is a measurable inference input that influences how strongly an information unit affects answer generation.

Claim: Generative engines rely on ranking signals as dynamic inference inputs rather than static ranking factors.

Rationale: Fixed factors assume stable ordering, while generative systems must adapt to changing context during generation.

Mechanism: The system ingests multiple signals, normalizes them across representations, and combines them to estimate influence during inference.

Counterargument: Some signals resemble classical factors because they consistently correlate with inclusion outcomes.

Conclusion: However, in generative systems, signals remain context-dependent inputs whose impact varies with each reasoning process.
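The ingest, normalize, and combine steps from the mechanism above can be sketched with min-max normalization and a weighted sum whose weights change with context. The signal names, raw values, and weight sets are hypothetical.

```python
def min_max(values):
    """Scale values to [0, 1]; return 0.5 for all when they are identical."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.5 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def combine_signals(pages, weights):
    """Normalize each signal across pages, then take a weighted sum.

    pages: {page_name: {signal_name: raw_value}}
    weights: {signal_name: weight}, chosen per context
    """
    names = list(pages)
    scores = {name: 0.0 for name in names}
    for signal, weight in weights.items():
        normalized = min_max([pages[name][signal] for name in names])
        for name, value in zip(names, normalized):
            scores[name] += weight * value
    return scores


pages = {
    "guide": {"coverage": 12, "accuracy": 0.95},
    "news": {"coverage": 4, "accuracy": 0.99},
}
broad_context = combine_signals(pages, {"coverage": 0.8, "accuracy": 0.2})
precise_context = combine_signals(pages, {"coverage": 0.2, "accuracy": 0.8})
```

The same raw signals produce opposite orderings under the two weight sets, which is the sense in which signals act as context-dependent inputs rather than fixed factors.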

Structural Signals

Structural signals describe how information is organized and framed within a page. These signals help generative systems assess whether content can support coherent synthesis. As a result, structure influences ranking indirectly by enabling reliable inference.

- Clear section hierarchy that separates concepts and explanations.

- Stable terminology usage that prevents semantic drift.

- Explicit definitions that anchor meaning early.

- Predictable logical progression across sections.

Taken together, structural signals allow generative engines to process pages efficiently and reduce ambiguity during reasoning.

Semantic and Factual Signals

Semantic and factual signals evaluate what the page says rather than how it is arranged. These signals measure meaning density, accuracy, and internal alignment. Consequently, they directly affect whether a page can substantively contribute to an answer.

| Signal | Source Layer | Ranking Effect |
|---|---|---|
| Concept coverage | Semantic layer | Expands inclusion scope |
| Factual accuracy | Evidence layer | Increases trust weighting |
| Claim consistency | Reasoning layer | Stabilizes influence |
| Context alignment | Inference layer | Adjusts relevance strength |

Together, these signals ensure that generative ranking favors pages that deliver precise meaning supported by verifiable facts.

Generative Ranking vs Traditional Ranking

Comparing generative ranking with traditional ranking highlights a structural shift in how information systems evaluate and surface content, a distinction formalized in evaluation frameworks published by the National Institute of Standards and Technology. Direct comparison is necessary because strategic assumptions built for deterministic systems no longer hold in probabilistic environments. This section contrasts the two models and explains why content strategies must adapt to generative ranking behavior.

Traditional ranking: Traditional ranking is a deterministic ordering process that assigns fixed positions to documents based on predefined relevance signals before user interaction.

Claim: Generative ranking differs fundamentally from traditional ranking because it operates through probabilistic reasoning rather than fixed ordering.

Rationale: Deterministic systems assume stable relevance signals, while generative systems must resolve relevance dynamically as context evolves.

Mechanism: Traditional ranking computes positions once per query, whereas generative ranking continuously recalculates influence during answer construction.

Counterargument: In simple informational queries, generative outputs may appear similar to traditionally ranked results.

Conclusion: Despite surface similarities, the underlying ranking logic diverges, which requires different evaluation and optimization approaches.

Deterministic vs Probabilistic Ranking

Deterministic ranking systems produce consistent outputs for identical inputs. Probabilistic ranking systems, by contrast, allow variation because relevance is inferred rather than computed once. Therefore, ranking outcomes depend on context interpretation rather than static scores.

This distinction affects predictability and control. Deterministic systems prioritize repeatability, while probabilistic systems prioritize contextual fit and coherence.

| Ranking Model | Decision Logic | Output Stability | Ranking Unit |
|---|---|---|---|
| Deterministic | Fixed signal weighting | High | Pages or links |
| Probabilistic | Contextual inference | Variable | Representations |

This comparison shows that generative ranking trades positional certainty for contextual adaptability.
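The contrast between the two models can be made concrete with a toy comparison: sorting by score always yields the same order, while weighted sampling yields outcomes that vary between draws but still favor higher-scoring items. The scores and page labels are hypothetical, and real generative systems are far more complex than a single weighted draw.

```python
import random


def deterministic_rank(scores):
    """Same input, same ordered list, every time."""
    return sorted(scores, key=scores.get, reverse=True)


def probabilistic_pick(scores, rng):
    """Sample one item with probability proportional to its score."""
    names = list(scores)
    return rng.choices(names, weights=[scores[n] for n in names], k=1)[0]


scores = {"page_a": 0.5, "page_b": 0.3, "page_c": 0.2}
ordered = deterministic_rank(scores)

rng = random.Random(0)  # seeded only so the sketch is repeatable
draws = [probabilistic_pick(scores, rng) for _ in range(1000)]
counts = {name: draws.count(name) for name in scores}
```

Across many draws `page_a` appears most often, yet any single draw may return a different page. That is the trade of positional certainty for variability described above.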

Impact on Content Strategy

The shift from traditional to generative ranking changes how content must be designed and evaluated. Strategies optimized for positional gain lose effectiveness when influence replaces rank. Consequently, content strategy must align with reasoning-based evaluation.

- Content must support inference rather than trigger isolated signals.

- Semantic completeness becomes more important than keyword targeting.

- Internal consistency affects influence more than external popularity.

- Visibility depends on inclusion probability rather than position.

Taken together, these changes require content strategies that prioritize clarity, structure, and factual integrity over traditional ranking manipulation.

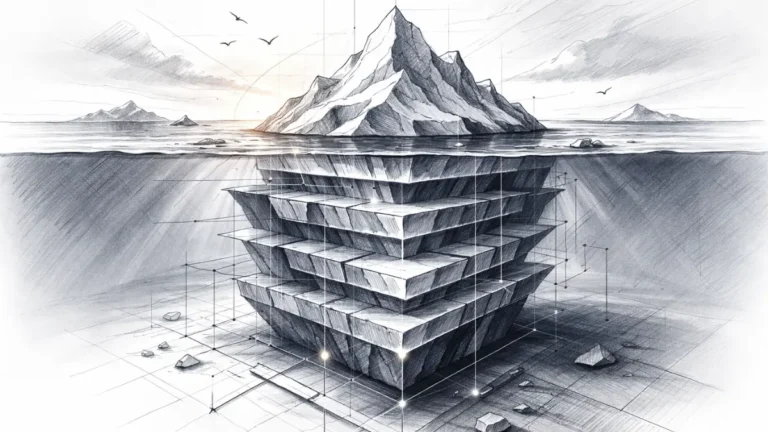

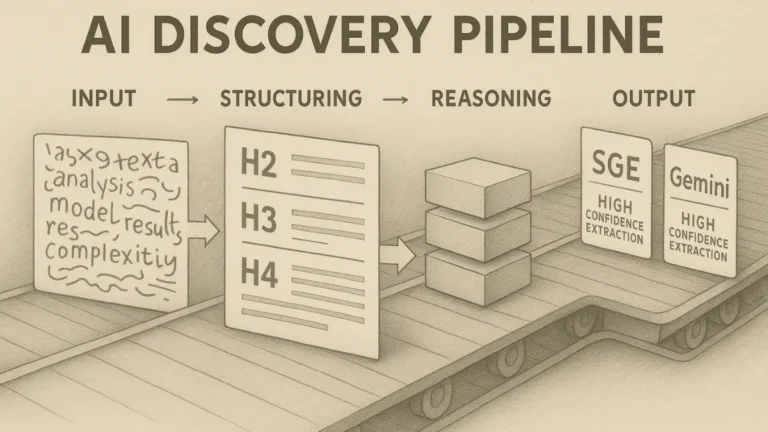

Generative Ranking Architecture

Generative ranking architecture defines how ranking logic is implemented across layered systems, a model described in research published by DeepMind Research. At this level, ranking is not a single function but a coordinated pipeline of reasoning stages. This section explains why architectural design determines reuse, stability, and long-term visibility in generative systems.

Ranking architecture: Ranking architecture is a layered reasoning pipeline that organizes how information flows from ingestion to evaluation during generative inference.

Claim: Generative ranking architecture determines how reliably ranking logic can be reused across queries and contexts.

Rationale: When ranking logic is embedded into a layered pipeline, inference decisions become modular and repeatable rather than ad hoc.

Mechanism: The system separates ingestion, representation, and evaluation into distinct stages that pass structured outputs forward, which stabilizes ranking behavior across uses.

Counterargument: Some lightweight generative systems collapse these stages to reduce latency.

Conclusion: While simplified designs exist, robust generative ranking requires layered architecture to support consistent reuse and scalable reasoning.

Pipeline Stages

Generative ranking architecture operates through sequential pipeline stages that each perform a distinct function. These stages constrain how information enters the system, how it is transformed, and how final decisions are made. As a result, ranking behavior reflects the integrity of the entire pipeline rather than any single component.

Each stage contributes different signals to the overall inference process. Weakness at any stage propagates forward and reduces ranking reliability.

The pipeline functions as a controlled flow that preserves meaning while enabling selection.

Ingestion

Ingestion defines how raw information enters the generative system. This stage filters inputs based on accessibility, format, and basic relevance before deeper reasoning occurs. Consequently, ingestion limits the candidate space that ranking can operate on.

By constraining what enters the system, ingestion prevents irrelevant or malformed data from influencing later stages. This control establishes the first boundary of ranking behavior.

At a basic level, ingestion decides what the system is allowed to consider.

Representation

Representation transforms ingested information into internal semantic forms usable by the model. These representations encode meaning, structure, and context in a compressed format. Therefore, representation quality directly affects ranking precision.

Because ranking compares representations rather than raw text, inconsistencies or gaps at this stage distort downstream decisions. Stable representations enable reliable comparison across pages and contexts.

In practical terms, representation defines how clearly the system understands what it has seen.

Evaluation

Evaluation applies inference logic to compare representations and determine influence during generation. This stage assigns relative importance based on coherence, relevance, and consistency with the active context. As a result, evaluation produces the final ranking outcome inside the answer.

Evaluation operates continuously as generation progresses, allowing ranking decisions to adjust in real time. This adaptability distinguishes generative ranking from static scoring systems.

At its core, evaluation decides which information shapes the answer and which does not.
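The three stages can be sketched as small functions that pass structured output forward. This is a deliberately simplified model: the bag-of-words representation, the term-frequency scoring, and the page labels are stand-in assumptions, not how production systems represent or evaluate pages.

```python
from collections import Counter


def ingest(raw_pages):
    """Ingestion: keep only pages with usable, non-empty text."""
    return {name: text.strip() for name, text in raw_pages.items()
            if text and text.strip()}


def represent(pages):
    """Representation: turn each page into a bag-of-words count."""
    return {name: Counter(text.lower().split()) for name, text in pages.items()}


def evaluate(representations, query):
    """Evaluation: score pages by the share of words matching query terms."""
    terms = query.lower().split()
    scores = {
        name: sum(rep[t] for t in terms) / max(sum(rep.values()), 1)
        for name, rep in representations.items()
    }
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


def rank(raw_pages, query):
    return evaluate(represent(ingest(raw_pages)), query)


raw = {
    "page_a": "ranking signals shape generative answers",
    "page_b": "   ",
    "page_c": "recipes for bread",
}
result = rank(raw, "ranking signals")
```

The empty page never reaches evaluation, so a failure at ingestion removes it from consideration no matter how the later stages behave.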

Generative Ranking Outcomes and Visibility

Generative ranking outcomes define how publishers gain or lose presence inside synthesized answers rather than through direct traffic, a shift analyzed in digital visibility research by the OECD. In generative systems, ranking no longer optimizes for clicks but for sustained inclusion and interpretive prominence. This section examines how ranking outcomes translate into visibility and why this transition changes publisher expectations.

Ranking outcome: A ranking outcome is the combined result of inclusion and framing, which determines whether a page contributes to an answer and how its information is presented.

Claim: Generative ranking outcomes prioritize visibility over traffic by controlling inclusion and narrative framing.

Rationale: Users consume synthesized answers directly, which reduces navigation while increasing reliance on system-selected sources.

Mechanism: The engine selects pages based on ranking signals, assigns them varying degrees of influence, and frames their contributions within the generated response.

Counterargument: Traffic metrics still matter for revenue models that depend on user visits.

Conclusion: However, generative visibility increasingly determines long-term authority and reuse, even when immediate traffic declines.

Long-Term Visibility Effects

Generative ranking outcomes produce durable visibility patterns that extend beyond individual interactions. These effects accumulate as systems repeatedly reuse trusted sources. Consequently, visibility becomes a structural property rather than a transient metric.

- Pages with consistent inclusion gain sustained presence across multiple answers.

- Framed contributions shape how topics are interpreted over time.

- Reduced click-through does not eliminate informational influence.

- Authority consolidates around sources that support stable reasoning.

Taken together, these effects show that generative ranking outcomes redefine success as persistent visibility rather than episodic traffic.

A technical reference site optimized for classical search experienced a decline in organic clicks after generative answers became prominent. However, its structured explanations and consistent definitions led to frequent inclusion in synthesized responses. Over time, the site became a primary reference for explanatory queries despite lower visit counts. This case illustrates how generative ranking outcomes can strengthen visibility even as traditional performance indicators weaken.

Implications for AI-First Content Design

The implications of generative ranking extend beyond evaluation and directly affect how content must be engineered, a shift documented in applied research from the Harvard Data Science Initiative. In this context, the generative ranking framework connects ranking logic with content engineering choices that determine reuse, stability, and long-term visibility. This section outlines the strategic consequences for AI-first content design and defines the boundaries of effective adaptation.

AI-first content: AI-first content is content engineered to support probabilistic inference by prioritizing clarity, structure, and semantic stability over surface optimization.

Claim: AI-first content design must align with generative ranking logic to achieve durable visibility.

Rationale: Generative systems select and reuse content based on inference compatibility rather than positional performance.

Mechanism: Content engineered with stable definitions, coherent structure, and factual density produces representations that rank consistently during generation.

Counterargument: Some content optimized for traditional ranking may still appear in generative outputs due to legacy authority signals.

Conclusion: Over time, inference-compatible design becomes the dominant determinant of generative visibility.

From Optimization to Compatibility

Content design shifts from optimization tactics toward compatibility with generative reasoning processes. This transition changes priorities from manipulating signals to supporting inference. As a result, content engineering becomes a structural discipline rather than a tactical adjustment.

AI-first compatibility requires deliberate choices in how information is presented and maintained. These choices affect whether content can be reliably integrated into generated answers across contexts. Consequently, compatibility influences reuse more than short-term performance.

- Focus on semantic completeness instead of isolated keyword targeting.

- Maintain consistent terminology to prevent representation drift.

- Place clear definitions early to anchor meaning.

- Structure sections to support incremental reasoning.

Together, these practices move content from optimization-driven design toward inference-compatible engineering.

In practical terms, content that is easy for a system to reason with remains visible longer, even when traditional optimization signals fade.

Checklist:

- Does the page define its core concepts with precise and stable terminology?

- Are sections organized with consistent H2–H4 semantic boundaries?

- Does each paragraph express a single, coherent reasoning unit?

- Are examples used to reinforce abstract ranking concepts?

- Is ambiguity reduced through explicit transitions and local definitions?

- Does the structure support step-by-step inference during generation?

Generative engines replace positional ordering with reasoning-driven selection that operates inside answer construction rather than across result lists. Throughout the article, ranking emerges as a continuous inference process that evaluates representations, resolves relevance, and manages coherence in real time. This shift explains why visibility now depends on inclusion, framing, and reuse instead of clicks or positions. Ultimately, ranking in generative systems functions as reasoning, not ordering, which redefines how content gains and sustains presence in AI-mediated discovery environments.

Generative Ranking Interpretation Logic

- Page-level semantic coherence. Generative engines evaluate pages as complete semantic units, favoring content that maintains internal consistency across definitions, claims, and explanatory scope.

- Terminology and definition stability. Stable, early-defined concepts allow generative systems to form reliable internal representations without relying on probabilistic reinterpretation.

- Factual density and verifiability. Pages with high concentrations of verifiable statements are more likely to be selected, reused, and framed during generative inference processes.

- Reasoning-oriented structure. Content organized to support logical explanation rather than navigational interaction improves long-context processing and inference continuity.

- Generative inclusion signals. Visibility within AI-generated answers reflects how content is interpreted, framed, and contextualized by generative ranking systems beyond traditional traffic metrics.

These interpretive signals explain how generative engines assess page suitability, semantic stability, and reuse potential when ranking and synthesizing content within AI-generated responses.

FAQ: How Generative Engines Rank Pages

What does it mean that generative engines rank pages?

Generative engines rank pages by evaluating their semantic representations during answer generation, determining which pages are included and how strongly they influence the final response.

How is generative ranking different from traditional ranking?

Traditional ranking orders pages into fixed positions, while generative ranking assigns influence dynamically as part of a probabilistic reasoning process.

Why do pages remain important in generative search?

Pages provide stable semantic boundaries that allow generative systems to assess completeness, consistency, and factual reliability at scale.

How do generative engines decide which pages to include?

Generative engines evaluate page representations using signals such as semantic completeness, factual density, and internal consistency to determine inclusion probability.

What role does structure play in generative ranking?

Clear structure supports reliable inference by reducing ambiguity, stabilizing representations, and enabling consistent reuse during answer generation.

Why is visibility more important than traffic in generative systems?

Generative systems surface information directly in answers, so long-term visibility depends on repeated inclusion and framing rather than click-through behavior.

How can content support generative ranking?

Content supports generative ranking by maintaining stable terminology, early definitions, factual clarity, and coherent reasoning structure.

Are ranking signals the same as ranking factors?

Ranking signals are inference inputs whose influence varies by context, whereas ranking factors imply fixed effects, which do not apply in generative systems.

How does generative ranking affect long-term authority?

Sources that are consistently included in generative answers accumulate authority through reuse, even when traditional traffic metrics decline.

What skills matter for AI-first content creation?

Effective AI-first content requires semantic precision, structured reasoning, stable definitions, and evidence-based explanation.

Glossary: Key Terms in Generative Page Ranking

This glossary defines the core terminology used throughout the article to ensure consistent interpretation of how generative engines read, evaluate, and rank pages.

Generative Engine

A system that constructs answers by selecting, weighting, and combining information units through probabilistic reasoning rather than returning ranked lists.

Page Representation

A compressed semantic object that aggregates a page’s concepts, facts, structure, and context for evaluation by a generative system.

Generative Ranking

A probabilistic reasoning process that determines which page representations are included in an answer and how strongly they influence its content.

Ranking Signal

A measurable inference input used to estimate relevance, coherence, or reliability during generative answer construction.

Semantic Completeness

The degree to which a page fully covers the conceptual scope of a topic without critical gaps.

Factual Density

The concentration of verifiable factual statements within a page relative to descriptive or non-informational content.

Internal Consistency

The absence of contradictions between claims within a page, enabling stable reasoning during generative evaluation.

Inference Path

A sequence of reasoning steps through which a generative system evaluates and integrates information into an answer.

Inclusion Probability

The estimated likelihood that a page representation will contribute to a generated answer.

Ranking Outcome

The combined effect of whether a page is included in an answer and how its information is framed within the generated response.