Last Updated on December 25, 2025 by PostUpgrade

Combining Human Expertise with AI Discovery Systems

AI discovery systems now operate at a scale where automated pattern detection influences strategic, scientific, and regulatory decisions. In this context, human–AI collaboration becomes a structural requirement for ensuring that discovery outcomes remain interpretable, accountable, and decision-relevant. This article focuses on enterprise discovery systems, where human expertise and AI operate as coordinated components rather than consumer-facing automation tools.

Human AI Collaboration as a Discovery Paradigm for Enterprise Systems

Discovery complexity in modern organizations exceeds the capacity of any single cognitive agent, whether human or artificial. As data volumes grow and problem spaces expand, human–AI collaboration emerges as a necessary paradigm for sustaining reliable discovery across enterprise and research systems. Here, collaboration defines how intent, validation, and responsibility are distributed, as documented in large-scale research on human–machine interaction by the Stanford NLP Group.

Human–AI collaboration is a structured model in which human judgment and AI systems jointly participate in discovery, validation, and decision formation.

Definition: Human–AI collaboration in discovery systems is the structural coordination of human judgment and automated exploration that enables interpretable, validated, and institutionally accountable discovery outcomes.

Claim: Human–AI collaboration is required for reliable discovery.

Rationale: AI systems lack contextual accountability when operating in open-ended discovery spaces.

Mechanism: Humans define discovery intent and constraints while AI systems scale exploration across large information domains.

Counterargument: Narrow discovery domains can operate autonomously without human involvement.

Conclusion: Complex discovery environments require collaborative intelligence to maintain reliability.

Collaborative Intelligence Models for Human AI Collaboration

Collaborative intelligence models describe how human reasoning and machine inference interact within a single discovery process shaped by human–AI collaboration. These models assume that neither party operates independently; each contributes complementary cognitive functions that align toward a shared objective under explicit coordination.

In enterprise environments, collaborative intelligence models formalize roles rather than tasks. Humans retain authority over meaning, relevance, and consequence, while AI systems focus on speed, scale, and pattern extraction. This separation reduces ambiguity because each cognitive function remains bounded by its strengths.

At the same time, collaborative intelligence avoids over-reliance on automation by embedding human judgment at decisive points. This design choice limits the propagation of unverified inferences across organizational systems.

In simple terms, collaborative intelligence means that people decide what matters and why, while AI helps explore everything that could be relevant.

Shared Discovery Boundaries

Shared discovery boundaries define the operational limits within which AI systems can explore information spaces. These boundaries include scope definitions, exclusion rules, and relevance thresholds established by human experts before automated discovery begins. By setting boundaries early, organizations prevent uncontrolled exploration.

From a systems perspective, shared boundaries function as cognitive guardrails. AI systems operate freely inside predefined limits but cannot redefine the problem space without human authorization. This structure ensures that discovery remains aligned with strategic or scientific intent.

Furthermore, shared boundaries support reproducibility. When humans define the discovery frame explicitly, results can be audited, compared, and re-evaluated over time without reinterpreting system behavior.

In practice, shared discovery boundaries ensure that AI explores deeply, but only where exploration remains meaningful.
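The boundary types described above (scope definitions, exclusion rules, and relevance thresholds) can be sketched as a simple admission check. This is a minimal illustration under stated assumptions: the `DiscoveryBoundary` class, its field names, the 0 to 1 relevance scale, and the sample candidates are all hypothetical and do not come from any specific discovery platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DiscoveryBoundary:
    """Hypothetical container for expert-defined limits on exploration."""
    allowed_topics: frozenset   # scope definition
    excluded_terms: frozenset   # exclusion rules
    min_relevance: float = 0.5  # relevance threshold (assumed 0..1 scale)

    def admits(self, topic: str, text: str, relevance: float) -> bool:
        """Return True only if a candidate falls inside every boundary."""
        if topic not in self.allowed_topics:
            return False
        lowered = text.lower()
        if any(term in lowered for term in self.excluded_terms):
            return False
        return relevance >= self.min_relevance


# Boundaries are fixed by experts before automated discovery begins.
boundary = DiscoveryBoundary(
    allowed_topics=frozenset({"supply-chain", "pricing"}),
    excluded_terms=frozenset({"rumor"}),
    min_relevance=0.6,
)

# Illustrative candidates: (topic, text, relevance score).
candidates = [
    ("pricing", "Regional price drift in Q3", 0.82),
    ("pricing", "Unverified rumor about a competitor", 0.91),
    ("hiring", "Attrition pattern in engineering", 0.88),
    ("supply-chain", "Minor shipping delay", 0.40),
]

kept = [c for c in candidates if boundary.admits(*c)]
print(kept)
```

Because the boundary object is frozen, the exploration code in this sketch cannot modify the problem space at run time; changing it requires constructing a new boundary, which mirrors the requirement for human authorization.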

Research Literature Discovery

Research literature discovery illustrates how collaborative intelligence operates in real-world settings. AI systems can scan millions of publications to identify emerging themes, citation patterns, and conceptual clusters that exceed human reading capacity. However, raw outputs alone do not produce knowledge.

Human experts intervene by selecting which clusters warrant attention and by interpreting their significance within existing research frameworks. This interaction transforms statistical correlations into structured insights that support hypothesis formation or policy decisions.

As a result, discovery becomes iterative rather than terminal. AI surfaces possibilities, and humans determine which paths deserve further exploration.

In everyday terms, AI finds what is new or unusual in the literature, and experts decide what is important.

Hallucination Risk Reduction

Reduced propagation of hallucinations is a direct consequence of collaborative discovery. When AI systems operate without human grounding, unsupported associations can propagate rapidly across downstream decisions. Collaboration introduces validation checkpoints that interrupt this process.

Human oversight enables early detection of implausible or contextually invalid discoveries. By validating intermediate outputs, experts prevent false patterns from being institutionalized as facts.

Consequently, organizations achieve higher trust in discovery outcomes without sacrificing exploration speed. Reliability increases not because AI improves autonomously, but because collaboration constrains error amplification.

Put simply, collaboration reduces the risk that incorrect discoveries spread unnoticed through complex systems.

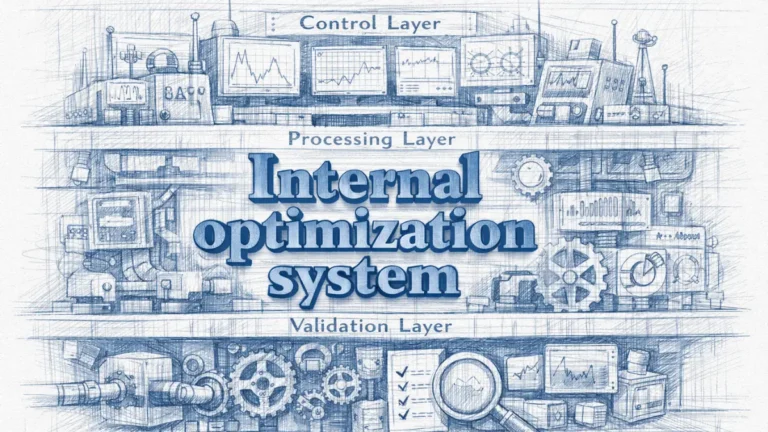

Human Expertise as a Control Layer in AI Systems

Uncontrolled AI discovery introduces systemic risk in environments where decisions carry legal, financial, or scientific consequences. In decision-critical systems, human expertise functions as a control layer that stabilizes discovery processes and prevents misaligned outcomes, a principle consistently emphasized in applied AI governance research from MIT CSAIL. This section focuses on how expert judgment anchors authority, validation, and accountability in enterprise AI systems.

Human expertise is domain-specific judgment used to guide, validate, and constrain AI system outputs.

Claim: Human expertise is essential for AI system control.

Rationale: AI systems cannot independently define which outcomes are acceptable within legal, ethical, or organizational boundaries.

Mechanism: Experts impose constraints, define thresholds, and apply overrides that shape how AI systems explore and finalize results.

Counterargument: Self-alignment and reinforcement techniques reduce the apparent need for continuous expert involvement.

Conclusion: Control authority remains human-anchored even as AI systems increase in autonomy.

Control Responsibilities in Discovery Systems

| Control Function | Human Expert | AI System |
|---|---|---|
| Goal definition | Sets intent and constraints | Executes within bounds |
| Risk evaluation | Judges impact and ethics | Detects anomalies |
| Output approval | Confirms validity | Generates candidates |

This structure shows that authority and accountability remain with human experts.

Expert Validation as a Control Mechanism

Expert validation defines how discovery outputs transition from computational artifacts to decision-ready signals. In controlled systems, experts do not review every intermediate result, but instead validate outputs that cross predefined relevance or risk thresholds. This approach ensures that scale does not eliminate accountability.

Validation operates as a selective filter rather than a bottleneck. AI systems generate candidate discoveries continuously, while experts focus attention on outputs that influence strategy, compliance, or resource allocation. As a result, control remains effective without constraining system throughput.

This mechanism aligns system behavior with organizational standards because validation criteria reflect institutional values rather than statistical convenience.

At a practical level, expert validation means that important outputs receive human confirmation before they affect decisions.
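The selective filter described above can be sketched as a routing function that sends only threshold-crossing outputs to experts. This is an illustrative sketch, not a reference implementation: the `DiscoveryOutput` type, the score scales, and the threshold values 0.7 and 0.5 are assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class DiscoveryOutput:
    name: str
    relevance: float  # assumed score in [0, 1]
    risk: float       # assumed score in [0, 1]


def needs_expert_review(output, relevance_threshold=0.7, risk_threshold=0.5):
    """An output is escalated if it crosses either predefined threshold."""
    return output.relevance >= relevance_threshold or output.risk >= risk_threshold


def route(outputs):
    """Split outputs into an expert review queue and a routine stream."""
    review_queue, routine = [], []
    for output in outputs:
        target = review_queue if needs_expert_review(output) else routine
        target.append(output)
    return review_queue, routine


outputs = [
    DiscoveryOutput("vendor-cost-anomaly", relevance=0.92, risk=0.30),
    DiscoveryOutput("minor-seasonal-shift", relevance=0.35, risk=0.10),
    DiscoveryOutput("possible-compliance-gap", relevance=0.55, risk=0.80),
]
review_queue, routine = route(outputs)
print("For expert review:", [o.name for o in review_queue])
print("Routine:", [o.name for o in routine])
```

In this sketch the thresholds are plain function defaults; in practice they would presumably be set and versioned by the experts who own the validation criteria.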

Human Judgment in System Governance

Human judgment in AI systems governs how exceptions, conflicts, and ambiguous signals are resolved. When AI encounters edge cases that fall outside training distributions, human judgment determines whether outputs should be accepted, revised, or discarded. This governance function cannot be fully automated because it depends on contextual interpretation.

Judgment also defines escalation paths. Experts decide when discovery results require additional review or external consultation, especially in regulated environments. These decisions preserve organizational control even when AI systems operate continuously.

Through judgment-based governance, organizations prevent automated systems from redefining acceptable behavior without oversight.

In simple terms, human judgment ensures that AI decisions make sense within real-world constraints.

Implications for Decision-Critical Systems

Decision-critical systems depend on stable authority structures to maintain trust. When AI systems operate without expert control, accountability diffuses and responsibility becomes unclear. Embedding expertise as a control layer restores traceability across discovery and decision pipelines.

This approach also supports auditability. Experts can explain why constraints existed, why overrides occurred, and why certain outputs were accepted. Such explanations remain essential for compliance, risk management, and institutional learning.

As a result, systems that integrate expert control scale safely without sacrificing responsibility.

Put plainly, expert control allows AI to operate at scale while humans remain accountable for outcomes.

AI Discovery Systems with Human Oversight

Discovery now operates at a scale where automated systems evaluate volumes of information that exceed direct human review. Within enterprise and scientific contexts, AI discovery systems require structured oversight to ensure that discoveries remain valid, explainable, and aligned with institutional goals, a necessity reflected in large-scale research practices promoted by the Allen Institute for Artificial Intelligence (AI2). This section explains why oversight is not an auxiliary feature but a core structural requirement.

AI discovery systems are computational frameworks designed to surface unknown patterns or hypotheses from large information spaces.

Claim: Human oversight is mandatory in AI discovery systems.

Rationale: Discovery processes amplify errors faster than verification mechanisms can correct them.

Mechanism: Oversight filters and evaluates outputs before they influence institutional decisions.

Counterargument: Large datasets enable statistical self-correction without human intervention.

Conclusion: Oversight increases the reliability and institutional usability of discovery outcomes.

Human Oversight as a Systemic Concept

Human oversight of AI refers to the continuous or staged involvement of human judgment in supervising how discovery systems operate and how their outputs are interpreted. Oversight is not limited to final approval but extends to monitoring assumptions, validating relevance, and detecting misalignment during discovery execution. This role becomes critical when AI systems operate in open-ended or poorly constrained domains.

Oversight functions as a stabilizing layer that absorbs uncertainty introduced by scale. As AI systems explore vast information spaces, they generate outputs that may appear statistically plausible yet lack contextual grounding. Human oversight reintroduces domain context and institutional norms into the evaluation process.

By embedding oversight structurally, organizations prevent discovery systems from redefining success criteria autonomously. Authority over meaning and consequence remains explicit rather than implicit.

Oversight ensures that discovery outputs reflect not only what is detectable, but also what is appropriate to act upon.

Principle: Discovery systems remain reliable in AI-driven environments when human oversight defines validation boundaries that models cannot reinterpret autonomously.

Review Gates as an Operational Mechanism

Review gates define the points at which human evaluation intervenes in automated discovery workflows. These gates can be triggered by confidence thresholds, novelty detection, or risk indicators embedded in system design. Their purpose is to intercept outputs before they propagate downstream.

From an operational perspective, review gates segment discovery into accountable phases. AI systems generate hypotheses or patterns continuously, but only outputs that pass defined gates advance toward decision-making layers. This segmentation reduces the likelihood that unverified discoveries influence strategic or scientific conclusions.

Review gates also support auditability. Because interventions occur at explicit stages, organizations can trace why certain outputs advanced while others were discarded, strengthening institutional memory and compliance.

Through review gates, oversight becomes procedural rather than discretionary, which improves consistency.
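A rough sketch of review gates follows, with each decision recorded so that it can be traced later. The gate names, the cutoff values, and the dictionary-based output format are hypothetical assumptions made for illustration only.

```python
from dataclasses import dataclass


@dataclass
class GateDecision:
    """Audit record: which gates fired for a given output."""
    output_id: str
    triggered_gates: list

    @property
    def needs_human_review(self) -> bool:
        return bool(self.triggered_gates)


# Hypothetical gates: confidence threshold, novelty detection, risk indicator.
REVIEW_GATES = {
    "low_confidence": lambda o: o["confidence"] < 0.80,
    "high_novelty": lambda o: o["novelty"] > 0.90,
    "risk_indicator": lambda o: o["risk_flag"],
}


def evaluate_gates(output, gates=REVIEW_GATES):
    """Check an output against every gate and record the ones that fire."""
    triggered = [name for name, check in gates.items() if check(output)]
    return GateDecision(output["id"], triggered)


outputs = [
    {"id": "h-001", "confidence": 0.95, "novelty": 0.20, "risk_flag": False},
    {"id": "h-002", "confidence": 0.60, "novelty": 0.95, "risk_flag": False},
    {"id": "h-003", "confidence": 0.90, "novelty": 0.10, "risk_flag": True},
]

audit_log = [evaluate_gates(o) for o in outputs]
advanced = [d.output_id for d in audit_log if not d.needs_human_review]
held = {d.output_id: d.triggered_gates for d in audit_log if d.needs_human_review}
print("Advanced without review:", advanced)
print("Held for review:", held)
```

Keeping the triggered gate names in the record is what makes the decision procedural: a later audit can see which rule held an output, not just that it was held.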

Hypothesis Filtering in Discovery Workflows

Hypothesis filtering illustrates how oversight operates in practice within AI discovery workflows. AI systems often produce a large set of candidate hypotheses based on correlations or latent structures in data. Without filtering, this volume overwhelms human evaluators and dilutes decision quality.

Human oversight introduces prioritization criteria that reflect relevance, plausibility, and potential impact. Experts assess which hypotheses merit further investigation and which remain exploratory signals. This filtering transforms raw discovery into structured inquiry.

As a result, discovery becomes iterative. AI expands the hypothesis space, while humans progressively narrow it to manageable and meaningful directions.

Filtering ensures that discovery effort concentrates on hypotheses that justify resource investment.
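One way to picture the prioritization step is a weighted score over the three criteria named above: relevance, plausibility, and potential impact. The weights, the scores, and the `prioritize` helper below are illustrative assumptions; in a real workflow experts would supply the ratings and choose the weighting.

```python
def prioritize(hypotheses, weights, top_k):
    """Rank hypotheses by a weighted sum of expert-rated criteria."""
    def score(hypothesis):
        return sum(weights[c] * hypothesis[c] for c in weights)

    return sorted(hypotheses, key=score, reverse=True)[:top_k]


# Hypothetical weights reflecting one organization's priorities.
weights = {"relevance": 0.5, "plausibility": 0.3, "impact": 0.2}

# Hypothetical candidates with ratings on an assumed 0..1 scale.
hypotheses = [
    {"id": "H1", "relevance": 0.9, "plausibility": 0.8, "impact": 0.70},
    {"id": "H2", "relevance": 0.4, "plausibility": 0.9, "impact": 0.90},
    {"id": "H3", "relevance": 0.8, "plausibility": 0.3, "impact": 0.20},
    {"id": "H4", "relevance": 0.6, "plausibility": 0.6, "impact": 0.95},
]

shortlist = prioritize(hypotheses, weights, top_k=2)
print([h["id"] for h in shortlist])
```

A fixed `top_k` caps the volume that reaches human evaluators, which is the point of filtering: the hypothesis space can grow while the review load stays bounded.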

Trust Signals and Institutional Confidence

Trust signals emerge when discovery systems demonstrate consistent alignment between automated outputs and human validation. Oversight produces these signals by showing that outputs have passed through accountable evaluation processes. Over time, this consistency increases institutional confidence in discovery infrastructure.

In enterprise and scientific environments, trust determines adoption. Decision-makers rely on systems that not only produce results but also explain how those results were vetted. Oversight provides this explanatory layer.

When trust signals accumulate, organizations can scale discovery activities without proportionally increasing risk. Reliability grows through governance rather than through unchecked automation.

Oversight does not slow discovery; it makes discovery dependable enough to use.

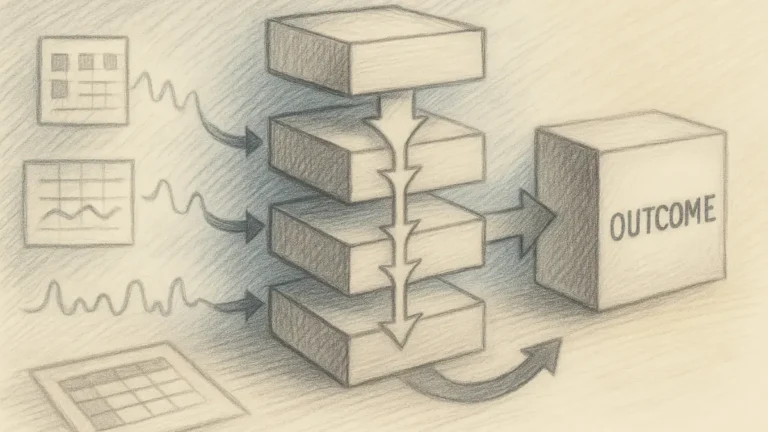

Human-in-the-Loop Validation Models

Iterative AI systems operate through repeated cycles of inference and adjustment, which increases both capability and risk during operational discovery. In this setting, human-in-the-loop AI provides a validation structure that stabilizes outputs across cycles, a practice formalized in applied machine learning research from Carnegie Mellon University's Language Technologies Institute (LTI). This section explains how validation loops function as an operational safeguard rather than a post-hoc review step.

Human-in-the-loop AI refers to systems where humans intervene during operation to correct or guide outputs.

Claim: Validation loops reduce discovery error rates.

Rationale: Continuous feedback corrects model drift that accumulates during iterative inference.

Mechanism: Human checkpoints reset assumptions and confirm alignment at defined stages.

Counterargument: Loops reduce processing speed and increase operational cost.

Conclusion: Accuracy outweighs speed in discovery contexts where outcomes carry consequence.

Validation Loops in Iterative Systems

Validation loops define how human input integrates into repeated AI execution cycles. In iterative systems, models refine outputs based on prior results, which can amplify early inaccuracies if left unchecked. Validation loops introduce periodic human confirmation to prevent compounding errors.

These loops do not require constant intervention. Instead, they activate when outputs exceed uncertainty thresholds or influence downstream decisions. This selective activation preserves efficiency while maintaining control.

By embedding validation into iteration, organizations ensure that learning processes remain bounded by expert judgment rather than autonomous optimization.

Validation loops maintain direction as systems evolve over time.
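The selective activation described above can be sketched as an iterative loop that calls a human checkpoint only when uncertainty crosses a threshold. Everything here is a toy stand-in: the state is a `(value, uncertainty_percent)` tuple, and `refine`, `uncertainty`, and `ask_expert` are hypothetical callbacks, not part of any real framework.

```python
def run_with_validation(state, refine, uncertainty, ask_expert,
                        threshold, max_cycles):
    """Iterate, invoking the human checkpoint only above the threshold."""
    reviews = 0
    for _ in range(max_cycles):
        state = refine(state)
        if uncertainty(state) > threshold:
            state = ask_expert(state)  # human confirmation resets uncertainty
            reviews += 1
    return state, reviews


# Toy stand-ins: each cycle advances the value and accumulates uncertainty.
def refine(state):
    value, uncertainty_percent = state
    return (value + 1, uncertainty_percent + 15)


def uncertainty(state):
    return state[1]


def ask_expert(state):
    value, _ = state
    return (value, 0)  # the expert confirms the value; uncertainty is cleared


final_state, reviews = run_with_validation(
    (0, 0), refine, uncertainty, ask_expert, threshold=30, max_cycles=6
)
print(final_state, reviews)
```

In this toy run the expert is consulted on only two of six cycles, which illustrates how a loop can stay bounded by human judgment without requiring constant intervention.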

Human Checkpoints and Assumption Resetting

Human checkpoints represent moments where experts reassess whether system assumptions remain valid. As data distributions shift or objectives evolve, assumptions encoded in models may no longer hold. Checkpoints provide structured opportunities to reassess these conditions.

During checkpoints, experts can recalibrate thresholds, redefine relevance criteria, or halt exploration paths that no longer align with intent. This intervention prevents silent drift from reshaping discovery outcomes.

Assumption resetting ensures continuity between original objectives and current system behavior.

Checkpoints keep iterative systems aligned with real-world context.

Supervision Models in Operational Discovery

Operational discovery often involves continuous execution rather than discrete projects. In these environments, AI with human supervision ensures that outputs remain usable across time rather than only at isolated milestones. Supervision frameworks define how often humans intervene and which signals trigger review.

Expert review of AI outputs focuses on results that influence decisions, compliance, or resource allocation. Routine signals may bypass review, while high-impact discoveries require confirmation. This prioritization balances scale with responsibility.

Supervision models therefore function as governance mechanisms rather than technical add-ons.

They allow systems to operate continuously without losing accountability.

Implications for Discovery Reliability

Human-in-the-loop validation models directly affect discovery reliability. By combining automated exploration with periodic human confirmation, organizations reduce false positives and prevent misinterpretation from becoming institutionalized.

Reliability improves because validation occurs during discovery, not after deployment. This timing limits the spread of incorrect assumptions across teams and systems.

As a result, discovery outputs gain credibility and remain actionable over extended operational cycles.

Validation loops transform iteration into a controlled process rather than an uncontrolled acceleration.

Hybrid Intelligence Systems Architecture for Human AI Collaboration

Isolated intelligence systems reach structural limits when operating in complex enterprise environments where uncertainty, accountability, and scale intersect. Hybrid intelligence systems address these limits by combining human reasoning with computational inference inside a unified architecture, a design approach analyzed in institutional research on digital governance and decision systems by the Oxford Internet Institute. This section describes how hybrid architectures organize responsibility and execution across enterprise AI systems.

Hybrid intelligence systems combine human reasoning with computational inference to produce jointly validated outcomes.

Claim: Hybrid architectures outperform isolated systems.

Rationale: Each intelligence type offsets the limitations of the other when operating alone.

Mechanism: Cognitive roles are separated across architectural layers rather than duplicated within a single agent.

Counterargument: Integration complexity increases development and coordination costs.

Conclusion: Performance gains justify architectural complexity in decision-critical environments.

Cognitive Role Separation in Hybrid Architectures

Hybrid architectures rely on deliberate cognitive role separation rather than task-level automation. Humans and AI systems do not compete within the same function but instead operate at different levels of abstraction. This separation reduces ambiguity because each layer has a clearly defined authority scope.

At the architectural level, humans retain responsibility for intent, interpretation, and consequence. AI systems focus on scenario modeling, pattern detection, and execution at scale. This division prevents systems from drifting toward autonomous decision-making without contextual grounding.

Cognitive separation also improves explainability. When outcomes emerge from layered contributions, organizations can trace which reasoning process influenced each stage of discovery or decision.

Role separation creates clarity by design rather than through corrective oversight.

Hybrid Architecture Layers

| Layer | Human Role | AI Role |
|---|---|---|
| Strategic | Defines objectives | Models scenarios |
| Analytical | Frames hypotheses | Detects patterns |
| Operational | Makes final decisions | Executes at scale |

Hybrid systems allocate responsibility by cognitive function, not task volume.

Collaborative Intelligence at the System Level

Collaborative intelligence models emerge naturally from hybrid architectures because cooperation is embedded structurally. Humans and AI systems interact through defined interfaces rather than ad hoc intervention. This design ensures that collaboration remains consistent across teams and use cases.

At the system level, collaboration enables scalability without surrendering control. AI systems extend human capacity by exploring large information spaces, while humans constrain interpretation and action. The result is a stable balance between speed and judgment.

These models also support organizational learning. As systems evolve, humans adjust objectives and constraints while AI adapts execution strategies within those boundaries.

Collaboration becomes predictable because it is architected, not improvised.

Mixed Intelligence Systems in Enterprise Design

Mixed intelligence systems integrate different reasoning modes into a single operational framework. Instead of treating human and machine intelligence as interchangeable, these systems preserve their distinct strengths. This preservation prevents over-automation in areas that require judgment.

In enterprise design, mixed intelligence systems reduce failure modes associated with single-point reasoning. When one intelligence type encounters uncertainty, the other compensates through interpretation or computation. This redundancy improves resilience.

System designers therefore focus less on replacing human effort and more on aligning reasoning flows across layers.

Alignment ensures that intelligence remains complementary rather than conflicting.

Architectural Implications for Performance and Governance

Hybrid architectures influence both performance and governance. Performance improves because AI systems handle scale efficiently, while humans prevent misinterpretation. Governance improves because authority remains explicit and traceable across layers.

Integration complexity does increase initial cost. However, this cost reflects deliberate coordination rather than uncontrolled automation. Over time, hybrid systems reduce remediation effort by preventing large-scale errors.

As enterprises adopt more AI-driven discovery, architecture determines whether intelligence amplifies capability or risk.

Hybrid design ensures that intelligence scales without losing responsibility.

Expert-Driven Discovery Workflows

Enterprise discovery operates within governance constraints that demand alignment with strategy, risk tolerance, and accountability. In this environment, expert-driven discovery provides a workflow model that orchestrates how AI exploration begins, progresses, and concludes under human authority, as outlined in applied organizational research by the Harvard Data Science Initiative. This section explains how workflow design, rather than model capability alone, determines discovery relevance in organizational systems.

Expert-driven discovery is a workflow where experts initiate, constrain, and validate AI exploration.

Claim: Expert-driven workflows preserve discovery relevance.

Rationale: Experts align discovery objectives with strategic and institutional goals.

Mechanism: Experts define exploration parameters that govern how AI systems search and evaluate information.

Counterargument: Strict parameterization limits exploratory freedom and novelty.

Conclusion: Strategic alignment outweighs exploration breadth in enterprise discovery.

| Stage | Expert Action | AI Action |
|---|---|---|
| Initiation | Defines scope | Prepares data space |
| Exploration | Reviews findings | Generates candidates |
| Validation | Confirms outcomes | Logs rationale |

Workflow control ensures discovery outcomes remain decision-relevant.

Workflow Orchestration for Human AI Collaboration in Organizations

Workflow orchestration defines how discovery activities move from intent to outcome within an organization. In expert-driven models, orchestration begins with explicit scoping decisions that translate strategic questions into operational discovery tasks. This translation prevents AI systems from exploring tangential domains that dilute relevance.

During execution, orchestration maintains synchronization between expert intent and system behavior. Experts periodically assess whether exploration remains aligned with objectives, while AI systems continue generating candidates within defined boundaries. This coordination keeps discovery focused without micromanagement.

Orchestration also standardizes discovery across teams. When workflows follow shared patterns, organizations reduce variance in how results are generated and interpreted.

Standardized orchestration supports consistency across diverse discovery initiatives.

Parameter Definition and Constraint Design

Constraint design specifies how experts shape the search space before AI exploration begins. Parameters may include inclusion criteria, exclusion rules, confidence thresholds, and relevance signals tied to organizational priorities. These parameters translate abstract goals into executable system behavior.

By defining constraints upfront, experts reduce the need for corrective intervention later. AI systems operate efficiently because exploration remains bounded, and outputs arrive closer to decision-ready states. This approach shifts effort from remediation to planning.

Constraints also enable comparability. When similar discovery tasks use consistent parameters, organizations can evaluate outcomes across projects and time.

Constraint design transforms discovery from open-ended search into directed inquiry.
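To illustrate how consistent parameters enable comparability, the sketch below stores the constraints in an immutable object and derives a short fingerprint from them, so that two discovery runs can be checked for identical settings. The `DiscoveryParameters` class, its fields, and the fingerprint scheme are assumptions for illustration; the hashing and JSON calls are from the Python standard library.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class DiscoveryParameters:
    """Hypothetical expert-defined constraints, fixed before exploration."""
    include: tuple           # inclusion criteria
    exclude: tuple           # exclusion rules
    min_confidence: float    # confidence threshold
    priority_signals: tuple  # relevance signals tied to priorities

    def fingerprint(self) -> str:
        """Stable short hash so runs with identical constraints can be matched."""
        payload = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]


q1_run = DiscoveryParameters(
    include=("peer-reviewed",),
    exclude=("retracted",),
    min_confidence=0.75,
    priority_signals=("regulatory-impact",),
)
q2_run = DiscoveryParameters(
    include=("peer-reviewed",),
    exclude=("retracted",),
    min_confidence=0.75,
    priority_signals=("regulatory-impact",),
)
relaxed_run = DiscoveryParameters(
    include=("peer-reviewed",),
    exclude=("retracted",),
    min_confidence=0.60,
    priority_signals=("regulatory-impact",),
)

print(q1_run.fingerprint() == q2_run.fingerprint())       # same constraints
print(q1_run.fingerprint() == relaxed_run.fingerprint())  # different threshold
```

Storing the fingerprint alongside each result set would let an organization compare outcomes only across runs that actually shared the same constraints.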

Example: In expert-driven discovery workflows, AI systems generate candidate hypotheses within predefined constraints, while experts validate which outputs advance to decision-making, preventing exploratory noise from becoming institutional knowledge.

Validation as a Workflow Endpoint

Validation represents the point at which discovery outputs transition into organizational knowledge or action. In expert-driven workflows, validation is not optional and not deferred. Experts confirm whether outputs meet relevance, accuracy, and impact criteria before acceptance.

This endpoint ensures that discovery produces usable results rather than raw signals. AI systems document rationale and provenance, while experts apply judgment to finalize outcomes. Together, these steps preserve accountability.

Validation also creates feedback loops. Accepted or rejected outputs inform future parameter tuning and workflow refinement.

A clear validation endpoint stabilizes discovery across operational cycles.
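The feedback loop from validation to parameter tuning could look roughly like the following, where the share of expert-accepted outputs nudges a confidence threshold up or down. The target acceptance rate, step size, and bounds are arbitrary assumptions, and the function only proposes a value; whether to adopt it would remain an expert decision.

```python
def propose_threshold(current, decisions, target_acceptance=0.8,
                      step=0.05, lower=0.5, upper=0.95):
    """Suggest a new confidence threshold from expert accept/reject history.

    decisions: list of booleans, True where the expert accepted the output.
    """
    if not decisions:
        return current  # no feedback yet, keep the existing threshold
    acceptance_rate = sum(decisions) / len(decisions)
    if acceptance_rate < target_acceptance:
        current += step  # many rejections: require more confidence
    elif acceptance_rate > target_acceptance:
        current -= step  # few rejections: allow slightly broader output
    return round(min(upper, max(lower, current)), 2)


# Half of the reviewed outputs were rejected, so the proposal is stricter.
print(propose_threshold(0.70, [True, False, False, True]))
# Every reviewed output was accepted, so the proposal is slightly looser.
print(propose_threshold(0.70, [True, True, True, True, True]))
```

The clamping to `lower` and `upper` keeps repeated adjustments inside a range that experts have agreed to in advance.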

Human-Guided and Expert-Guided Systems

Human-guided discovery emphasizes continuous expert involvement without collapsing into manual control. Experts intervene at defined stages, while AI systems maintain autonomy within those bounds. This balance supports scale while preserving judgment.

Similarly, AI systems guided by experts operate under explicit authority structures. Guidance does not dictate every action but establishes the conditions under which actions are acceptable. This structure prevents systems from redefining goals implicitly.

Guided systems therefore remain adaptable without becoming unaccountable.

Guidance aligns system behavior with organizational intent rather than technical possibility.

Implications for Enterprise Governance

Expert-driven workflows directly influence governance outcomes. When discovery follows explicit workflows, organizations can explain how results were produced and why decisions were made. This transparency supports auditability and regulatory compliance.

Governance also benefits from predictability. Decision-makers know when and how expert input occurs, which reduces uncertainty around system behavior. As discovery scales, this predictability becomes essential.

Ultimately, workflow design determines whether AI discovery serves organizational goals or undermines them.

Expert-driven workflows ensure that discovery remains purposeful, accountable, and aligned.

Strategic Value of Human AI Collaboration in Enterprise Governance

Long-term AI investment decisions increasingly shape how organizations compete, comply, and innovate over time. Within this horizon, human–AI strategy determines whether AI capabilities compound value or introduce systemic fragility, a governance issue examined in policy and economic analysis by the OECD. This section focuses on how strategic design, rather than technical performance, defines the durable value of collaboration.

Human–AI strategy defines how decision authority is allocated between humans and AI systems.

Claim: Strategy determines collaboration success.

Rationale: Misaligned authority structures cause AI systems to optimize outcomes that conflict with organizational goals.

Mechanism: Clear governance models specify where human judgment overrides automated inference.

Counterargument: Strategic governance slows experimentation and reduces agility.

Conclusion: Strategy stabilizes innovation by preventing uncontrolled system behavior.

Authority Allocation in Human AI Collaboration Strategy

Authority allocation describes how organizations formalize decision boundaries within human–AI collaboration across enterprise systems. This choice affects outcomes, accountability, risk exposure, and the ability to sustain organizational learning at scale.

Strategic allocation formalizes responsibility across discovery, validation, and execution layers. Humans retain authority where consequences extend beyond measurable metrics, while AI systems operate where scale and consistency matter most. This structure prevents silent shifts in decision power.

Over time, clear allocation reduces friction between teams because expectations remain explicit. Strategic clarity replaces reactive governance.

Authority allocation determines how collaboration behaves under pressure.

Governance Models and Institutional Alignment

Governance models translate strategy into operational rules that guide system behavior. These models define escalation paths, approval requirements, and intervention thresholds that apply across enterprise human–AI systems. Without governance, collaboration degrades into ad hoc intervention.

Effective governance aligns AI activity with institutional objectives such as compliance, resilience, and long-term value creation. It also establishes continuity as personnel and technologies change. Systems remain interpretable because governance outlives individual implementations.

By embedding governance early, organizations avoid retrofitting control after failures occur.

Governance models make collaboration repeatable rather than situational.
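One way to make these rules repeatable is to express them as data instead of convention. The sketch below is illustrative only: `GovernancePolicy`, its field names, the role names, and the numeric values are assumptions, not part of any standard or of a specific governance product.

```python
from dataclasses import dataclass
from typing import Optional, Set, Tuple


@dataclass(frozen=True)
class GovernancePolicy:
    """Hypothetical governance rules for one discovery workflow."""
    intervention_threshold: float     # risk score at or above which a human must step in
    approvals_required: int           # distinct approvers needed before action
    escalation_path: Tuple[str, ...]  # roles ordered from first reviewer to final authority


def requires_intervention(policy: GovernancePolicy, risk_score: float) -> bool:
    """True when the risk score reaches the intervention threshold."""
    return risk_score >= policy.intervention_threshold


def is_approved(policy: GovernancePolicy, approvers: Set[str]) -> bool:
    """True when enough distinct approvers have signed off."""
    return len(approvers) >= policy.approvals_required


def next_escalation(policy: GovernancePolicy, current_role: str) -> Optional[str]:
    """Return the role after current_role on the path, or None at the top."""
    path = policy.escalation_path
    idx = path.index(current_role)  # raises ValueError for an unknown role
    return path[idx + 1] if idx + 1 < len(path) else None


# Example policy with assumed values and role names.
policy = GovernancePolicy(
    intervention_threshold=0.8,
    approvals_required=2,
    escalation_path=("analyst", "domain_lead", "risk_committee"),
)
```

Because the policy is a frozen value object, it can be versioned and reviewed independently of any model or pipeline, which is one way governance can outlive individual implementations.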

Strategic Trade-offs Between Speed and Stability

Strategic planning introduces trade-offs between rapid experimentation and systemic stability. While unrestricted AI deployment accelerates iteration, it also increases the probability of misalignment. Strategy introduces friction intentionally to protect long-term outcomes.

This friction does not eliminate innovation. Instead, it channels exploration into domains where failure remains tolerable and learning remains constructive. Stable boundaries enable safe experimentation.

As a result, organizations sustain innovation cycles without accumulating hidden risk.

Stability enables innovation to persist rather than collapse.

Scalability Through Strategic Design

Scalable human–AI systems depend on strategy more than on model performance. Without strategic structure, scaling amplifies errors as efficiently as it amplifies success. Strategy defines which processes scale automatically and which require continued human involvement.

Strategic design ensures that as systems expand, authority does not diffuse. Humans continue to guide intent, while AI systems extend execution capacity. This balance allows growth without eroding accountability.

Scalability therefore reflects governance maturity rather than technical sophistication.

Strategy determines whether scale increases value or risk.

Implications for Long-Term Value Creation

The strategic value of collaboration emerges over time through accumulated trust, reliability, and institutional learning. Organizations that invest in strategy early experience fewer disruptive corrections later. Their systems evolve predictably rather than reactively.

Long-term value also depends on adaptability. Strategic frameworks allow collaboration models to adjust as technologies and contexts change without losing coherence.

Ultimately, collaboration succeeds when strategy defines how intelligence serves purpose.

Human–AI collaboration creates value when strategy anchors authority, governance, and scale.

Future Operational Models of Human AI Collaboration and Validation

Next-generation discovery systems are evolving toward continuous operation, higher autonomy, and broader organizational reach. In AI-first enterprises, human-validated AI systems define how authority, accountability, and trust persist as systems become more capable, a direction reinforced by standards and guidance from the National Institute of Standards and Technology (NIST). This section projects how validation-centric operations will shape future enterprise discovery.

Human-validated AI systems grant authority to AI outputs only after expert confirmation.

Claim: Human validation will remain mandatory.

Rationale: Trust, accountability, and liability requirements persist regardless of model capability.

Mechanism: Validation-first pipelines require expert confirmation before outputs gain operational authority.

Counterargument: Increased autonomy and model maturity may reduce the frequency of validation.

Conclusion: Validation evolves in form and timing but does not disappear from enterprise systems.

Validation-First Pipelines in Human AI Collaboration Systems

Validation-first pipelines reorganize discovery so that confirmation precedes action rather than follows it. In these models, AI systems generate insights continuously, but outputs remain provisional until validated. This ordering prevents premature automation of decisions with material impact.

Operationally, pipelines integrate validation checkpoints directly into execution paths. Systems route outputs to experts based on predefined risk, novelty, or impact thresholds. Lower-risk signals may progress automatically, while higher-impact outputs require explicit confirmation.

This design aligns operational speed with responsibility. Automation accelerates exploration, while validation governs authority.

Pipelines structure autonomy without relinquishing control.
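The routing step described above can be sketched as a simple threshold check. This is an illustration under stated assumptions: the `Output` fields, the `Thresholds` defaults, and the `route` function are hypothetical, and the scores are assumed to be normalized to the range 0 to 1 by some upstream scoring step that is not shown.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO_PROCEED = "auto_proceed"    # lower-risk signal progresses automatically
    EXPERT_REVIEW = "expert_review"  # output stays provisional until confirmed


@dataclass(frozen=True)
class Output:
    summary: str
    risk: float     # assumed normalized score in [0, 1]
    novelty: float  # assumed normalized score in [0, 1]
    impact: float   # assumed normalized score in [0, 1]


@dataclass(frozen=True)
class Thresholds:
    # Assumed example values; real thresholds would come from governance policy.
    risk: float = 0.5
    novelty: float = 0.7
    impact: float = 0.6


def route(output: Output, thresholds: Thresholds = Thresholds()) -> Route:
    """Send an output to expert review if any score reaches its threshold."""
    needs_review = (
        output.risk >= thresholds.risk
        or output.novelty >= thresholds.novelty
        or output.impact >= thresholds.impact
    )
    return Route.EXPERT_REVIEW if needs_review else Route.AUTO_PROCEED


routine = Output("weekly usage pattern", risk=0.1, novelty=0.2, impact=0.1)
material = Output("pricing anomaly in a regulated market", risk=0.3, novelty=0.4, impact=0.9)
```

Here `route(routine)` yields `Route.AUTO_PROCEED`, while `route(material)` yields `Route.EXPERT_REVIEW` because its impact score alone reaches the threshold. Using "any threshold reached" rather than an averaged score is a deliberate choice in this sketch: a single high-impact dimension is enough to require confirmation.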

Shifting Validation Timing and Frequency

Future models adjust when and how often validation occurs rather than removing it entirely. Instead of validating every output, organizations validate representative samples, boundary cases, or outputs that trigger downstream actions. This shift preserves efficiency while maintaining oversight.

Adaptive validation schedules respond to system performance over time. As confidence increases, validation frequency may decrease within defined bounds. When context changes or anomalies emerge, validation intensity increases automatically.

Timing flexibility allows systems to scale without normalizing unchecked autonomy.

Validation adapts to context while remaining structurally embedded.
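An adaptive schedule of this kind might look like the following sketch. It assumes validation is applied to a random sample of outputs and that the sampling rate is the quantity being adapted. `AdaptiveSampler`, its parameters, and the multiplicative decay rule are illustrative assumptions, not a prescribed method.

```python
import random
from dataclasses import dataclass


@dataclass
class AdaptiveSampler:
    """Hypothetical sampler that adapts how often outputs are validated."""
    rate: float = 1.0      # current fraction of outputs sent to validation
    min_rate: float = 0.1  # lower bound: validation never drops to zero
    max_rate: float = 1.0  # upper bound: validate everything
    decay: float = 0.9     # multiplicative reduction after each passed check

    def record_validation(self, passed: bool) -> None:
        """Lower the rate after a pass, restore full validation after a failure."""
        if passed:
            self.rate = max(self.min_rate, self.rate * self.decay)
        else:
            self.rate = self.max_rate

    def signal_context_change(self) -> None:
        """Restore full validation when context shifts or anomalies emerge."""
        self.rate = self.max_rate

    def should_validate(self, rng: random.Random) -> bool:
        """Decide whether the next output is sampled for validation."""
        return rng.random() < self.rate


sampler = AdaptiveSampler()
for _ in range(50):
    sampler.record_validation(passed=True)
# After a long run of passes the rate sits at the lower bound, not at zero.
```

The `min_rate` floor is what keeps validation structurally embedded: confidence can reduce frequency, but only within the defined bounds, and a single failed check or context change returns the system to full validation.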

Operational Roles in the Future of Collaboration

As discovery systems mature, operational roles become more specialized. Humans focus on intent setting, exception handling, and interpretive judgment. AI systems focus on continuous sensing, pattern expansion, and execution.

These role distinctions define the future of human–AI operational models. Collaboration persists, but interaction points become fewer and more deliberate. Systems rely on human input at moments of consequence rather than during routine operation.

This specialization increases clarity across organizations. Teams understand when human involvement is required and why.

Operational clarity reduces friction as systems scale.

Trust, Accountability, and Institutional Adoption

Trust remains the limiting factor for adoption in high-stakes environments. Human validation provides an auditable record of decision readiness, which supports compliance, risk management, and stakeholder confidence. Institutions rely on this record to justify action.

Accountability frameworks depend on identifiable human responsibility. Even as AI systems recommend or execute actions, validation assigns ownership. This ownership anchors trust beyond technical performance.

As adoption expands, institutions favor systems that make accountability explicit rather than implicit.

Validation enables trust to persist as autonomy increases.
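The auditable record described above can be sketched as an append-only log in which every validation decision names a human owner. The class and field names (`ValidationRecord`, `AuditLog`, `owner_of`) are hypothetical, and a production system would persist entries to durable, tamper-evident storage; an in-memory list is used here only to keep the example self-contained.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class ValidationRecord:
    output_id: str
    validator: str   # identifiable human who owns the decision
    decision: str    # "approved" or "rejected"
    rationale: str
    timestamp: datetime


class AuditLog:
    """Append-only, in-memory log of validation decisions (illustrative)."""

    def __init__(self) -> None:
        self._records = []

    def record(self, output_id: str, validator: str,
               decision: str, rationale: str) -> ValidationRecord:
        """Append a decision; reject entries without a named human owner."""
        if not validator:
            raise ValueError("a validation decision must name a human owner")
        if decision not in {"approved", "rejected"}:
            raise ValueError("decision must be 'approved' or 'rejected'")
        entry = ValidationRecord(output_id, validator, decision,
                                 rationale, datetime.now(timezone.utc))
        self._records.append(entry)
        return entry

    def owner_of(self, output_id: str) -> Optional[str]:
        """Return the validator of the most recent decision, if any."""
        for entry in reversed(self._records):
            if entry.output_id == output_id:
                return entry.validator
        return None


log = AuditLog()
log.record("finding-001", "j.rivera", "approved", "consistent with Q3 audit data")
```

Refusing entries without a validator is the point of the sketch: ownership is enforced by the record structure itself, so accountability stays explicit instead of being inferred after the fact.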

Long-Term Implications for AI-First Enterprises

AI-first enterprises succeed when operational models balance autonomy with governance. Human-validated systems allow organizations to exploit advanced AI capabilities without eroding institutional control. This balance supports resilience over time.

Long-term success depends on adaptability. Validation frameworks that evolve with technology prevent abrupt governance failures. Organizations adjust validation depth and scope without redesigning systems entirely.

The future of enterprise AI depends less on eliminating humans and more on defining when human authority matters.

Human validation ensures that discovery remains actionable, trusted, and accountable as systems evolve.

Checklist:

- Are discovery roles between humans and AI systems explicitly separated?

- Do validation points exist before AI outputs gain decision authority?

- Is human judgment embedded at stages of high impact or uncertainty?

- Are governance boundaries stable across discovery workflows?

- Does the system prevent autonomous redefinition of intent?

- Is accountability traceable to human validation decisions?

Structural Interpretation of Hybrid Discovery Page Design

- Hierarchical reasoning segmentation. A stable H2→H3→H4 depth model allows AI systems to separate strategic claims, operational mechanisms, and contextual explanations into discrete reasoning layers.

- Authority distribution signaling. Structural placement of definitions, reasoning chains, and validation logic communicates where interpretive authority resides between human expertise and automated inference.

- Reasoning chain localization. Self-contained analytical blocks enable generative systems to reconstruct complete argument paths without merging unrelated contextual fragments.

- Semantic boundary enforcement. Consistent paragraph scoping and controlled transitions prevent cross-contamination of concepts during long-context processing.

- Interpretive role separation. Recurrent structural patterns distinguish conceptual framing, system behavior, and institutional implications as independent interpretive signals.

This structural layer functions as an interpretive map for generative systems, explaining how complex human–AI discovery logic is segmented, validated, and preserved during automated understanding.

FAQ: Human Expertise and AI Discovery Systems

What are AI discovery systems?

AI discovery systems are computational frameworks designed to surface patterns, relationships, or hypotheses from large information spaces beyond direct human analysis.

Why is human expertise required in AI discovery?

Human expertise provides contextual judgment, validation, and authority that AI systems cannot generate independently, especially in decision-critical environments.

What does human oversight mean in AI discovery?

Human oversight refers to structured evaluation and control mechanisms that guide how AI discovery outputs are interpreted and applied within institutional systems.

How do validation loops function in discovery systems?

Validation loops integrate expert review at defined stages of AI operation to correct drift, confirm relevance, and prevent unverified outputs from influencing decisions.

What are hybrid intelligence systems?

Hybrid intelligence systems combine human reasoning and computational inference by separating cognitive roles across strategic, analytical, and operational layers.

How do expert-driven workflows shape discovery outcomes?

Expert-driven workflows define how discovery begins, how exploration is constrained, and how outputs are validated to ensure alignment with organizational intent.

Why is governance important for AI discovery systems?

Governance establishes clear authority boundaries, accountability, and escalation paths that prevent uncontrolled automation in enterprise discovery environments.

Will human validation remain necessary as AI systems improve?

Human validation remains necessary because trust, accountability, and institutional responsibility persist even as AI systems increase in capability.

What defines future operational models of AI discovery?

Future models emphasize validation-first pipelines where AI outputs gain authority only after expert confirmation within structured operational frameworks.

Glossary: Key Terms in Human–AI Discovery Systems

This glossary defines the core terminology used throughout the article to ensure consistent interpretation of human–AI discovery concepts by both experts and AI systems.

AI Discovery Systems

Computational frameworks designed to surface patterns, relationships, or hypotheses from large information spaces that exceed direct human analytical capacity.

Human–AI Collaboration

A structured interaction model in which human judgment and AI systems jointly participate in discovery, validation, and decision formation.

Human Oversight

The institutional process of supervising AI discovery outputs to ensure contextual validity, relevance, and alignment with organizational intent.

Human-in-the-Loop Validation

A validation model where experts intervene at defined stages of AI operation to correct drift, confirm assumptions, and validate outputs.

Hybrid Intelligence Systems

Systems that combine human reasoning and computational inference by separating cognitive roles across strategic, analytical, and operational layers.

Expert-Driven Discovery

A discovery workflow where experts define scope, constrain exploration, and validate AI-generated outputs to preserve relevance and accountability.

Validation Loop

An iterative control mechanism that integrates expert feedback into AI execution cycles to prevent error amplification over time.

Discovery Governance

The framework of authority allocation, escalation paths, and validation rules that regulate how AI discovery systems operate within organizations.

Validation-First Pipeline

An operational model where AI outputs remain provisional until expert confirmation grants them decision or execution authority.

Decision Authority

The formally defined responsibility for accepting, rejecting, or acting upon AI-generated discovery outcomes within institutional systems.