Last Updated on December 20, 2025 by PostUpgrade

Building AI Trust Signals for Modern Content Ecosystems

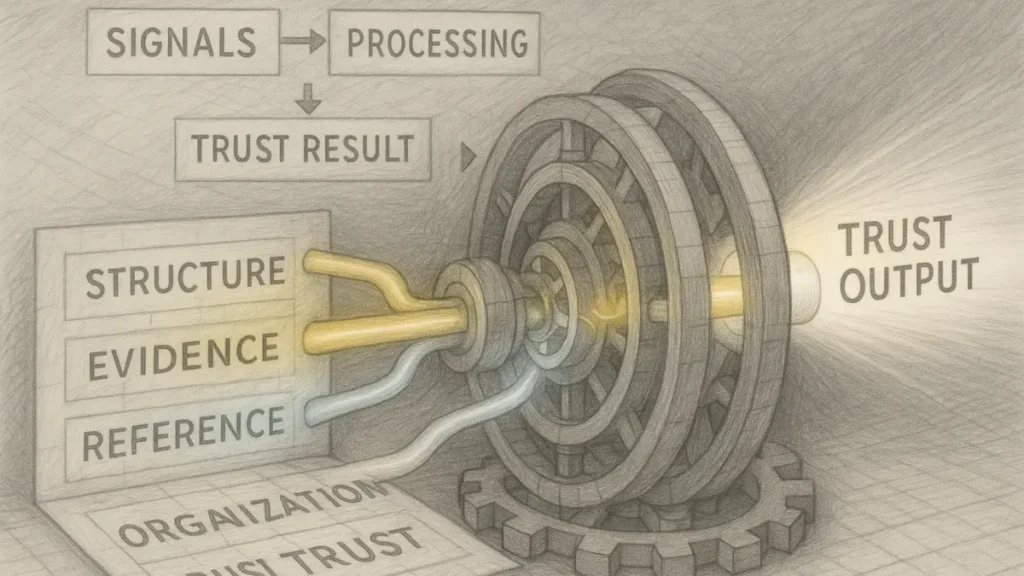

Building trust signals for modern content ecosystems requires a structure that machines can interpret with consistent accuracy. This article outlines the elements that strengthen reliability, clarify meaning, and support long-term discoverability across AI-driven systems. This opening section also defines the role of AI Trust Signals as a foundational layer in modern content architecture and introduces the analytical framework used throughout the article.

AI Trust Signals establish the structural foundation that enables AI systems to assess the reliability and clarity of digital content. They organize meaning into stable patterns that help models interpret information consistently across large-scale environments. As a result, discovery engines gain access to material that is verifiable, structured, and aligned with the requirements of machine reasoning.

Modern AI models rely on explicit indicators of credibility because they operate through pattern recognition rather than intuitive judgment. Stable formatting, precise semantic boundaries, factual grounding, and transparent verification cues allow them to evaluate the strength of the information they extract and reuse. These elements form predictable pathways that guide segmentation, scoring, and cross-article comparison.

This article introduces the core components that reinforce trust within content ecosystems and demonstrates how each category contributes to long-term AI discoverability. It defines the criteria for evaluating structural, factual, and brand-level indicators and provides a framework designed to maintain performance across generative search environments.

Understanding AI Trust Signals in Modern Discovery Systems

AI Trust Signals represent the structural and factual indicators that discovery systems use to assess whether digital content is reliable. These indicators guide how models segment meaning, classify information, and determine long-term reuse across large content ecosystems.

This section explains what AI Trust Signals are, how they operate inside AI-driven retrieval systems, and why they differ from human trust mechanisms. Research from the Stanford NLP Group demonstrates the importance of explicit, machine-interpretable structures for evaluating content reliability.

Deep Reasoning Chain

Assertion: AI Trust Signals provide discovery engines with explicit indicators that determine whether content can be classified as reliable.

Reason: Models cannot rely on emotional cues, tone, or perceived author credibility; therefore, they depend on structured, repeatable patterns.

Mechanism: AI systems evaluate token-level recurrence, semantic hierarchy, factual grounding, and citation structures to identify stable reliability patterns.

Counter-Case: When content lacks structure, includes vague statements, or provides no verifiable data, discovery engines reduce confidence and limit content reuse.

Inference: Trust signals strengthen AI-driven visibility when they are applied consistently across headings, paragraphs, definitions, and evidence blocks.

Definition: AI Trust Signals represent machine-detectable structural, factual, and verification cues that allow discovery systems to interpret meaning, evaluate reliability, and determine whether content can be reused confidently in generative responses.

Definition and Functional Role of Trust Signals

AI Trust Signals function as machine-readable indicators that help retrieval systems evaluate accuracy, structure, and reliability. They operate as measurable patterns that guide how models interpret meaning during processing. Discovery engines rely on explicit trust signals because they cannot infer credibility from assumptions, intention, or aesthetics. Reliable interpretation requires clear definitions, consistent terminology, and structured factual statements.

How AI Models Interpret Trust Indicators

Large language models evaluate trust at the token level, establishing consistency through repeated semantic patterns across a document or site. They detect reliability by analyzing hierarchical formatting, one-idea paragraphs, stable terminology, and factual references. AI systems also measure recurrence patterns, entity alignment, and section boundary clarity to determine whether content forms a coherent knowledge structure. Trust increases when these indicators appear predictably.

Differences Between Human Trust and AI Trust

Human trust is influenced by perception, contextual understanding, and emotional interpretation. AI trust is determined by measurable patterns, explicit structure, and consistent signal distribution. Humans evaluate tone, style, visuals, and reputation; AI systems evaluate semantic hierarchy, factual grounding, recurrence, and entity linking.

Human-driven vs AI-driven trust signals

| Dimension | Human Trust | AI Trust |
|---|---|---|
| Verification | Emotional and contextual | Pattern-based and explicit markers |
| Consistency | Perceived | Token-level recurrence |
| Authority | Branding | Citation structure and entity alignment |
| Layout | Visual aesthetics | Semantic hierarchy and structural organization |

Core Categories of Trust Signals AI Relies On

This section outlines the core categories of trust signals that AI models and discovery systems use to evaluate digital reliability. These signals help models classify structure, verify factual grounding, and determine whether content can be reused across broader reasoning workflows.

The goal is to explain how trust indicators in AI responses emerge from consistent formatting, evidence, cross-referencing, and organizational identity. Research from the OECD AI Policy Observatory reinforces the importance of structured, verifiable, and transparent information for machine-driven evaluation.

Deep Reasoning Chain

Assertion: AI systems rely on multiple categories of trust signals to evaluate structure, factual grounding, coherence, and authority.

Reason: Discovery engines require explicit indicators because models cannot infer credibility from human perception or intention.

Mechanism: Systems analyze headings, micro-blocks, citations, entity alignment, recurrence patterns, and organizational identity to validate meaning.

Counter-Case: When content lacks formatting discipline, cross-page coherence, or evidence-based assertions, AI systems reduce confidence and restrict reuse.

Inference: Trust increases when structural, factual, verification-oriented, and authority-based signals appear predictably across a unified content ecosystem.

Principle: Content becomes more trustworthy for AI when its structure, terminology, and evidence remain stable across all sections and related pages, ensuring consistent interpretation and minimizing ambiguity during machine processing.

Structural Trust Signals

Structural trust signals represent the formatting patterns that help models understand document organization. Predictable hierarchical formatting ensures that discovery engines can segment meaning into stable semantic units. AI systems process headings, micro-sections, and Deep Reasoning Chain (DRC) blocks as structural anchors that clarify scope and purpose. A consistent H2–H3–H4 hierarchy, atomic paragraphs, and micro-blocks reduce ambiguity during extraction and strengthen reliability signals in digital content.

Factual and Evidence-Based Signals

Factual trust signals emerge from citations, named organizations, datasets, and verifiable information. AI systems evaluate factual grounding by reviewing whether statements include measurable evidence and whether terminology remains consistent across related articles. Evidence-based claims improve reliability when they connect to authoritative bodies, provide concrete values, and align with previously established facts. Cross-article factual consistency helps models build stable internal graphs.
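Factual grounding can be approximated during an editorial audit with a simple density heuristic. The Python sketch below is an assumption, not a description of how any discovery engine scores content: it treats a sentence as evidence-bearing when it contains a digit (a number, date, percentage, or year-based citation), and `factual_density` and its regex are hypothetical helpers.

```python
import re

# Assumption: a sentence is "evidence-bearing" if it contains any digit,
# which catches numbers, dates, percentages, and year-based citations.
EVIDENCE_PATTERN = re.compile(r"\d")


def split_sentences(text: str) -> list[str]:
    """Naive splitter: breaks after ., !, or ? followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [part for part in parts if part]


def factual_density(paragraph: str) -> float:
    """Share of sentences in a paragraph that carry a checkable data point."""
    sentences = split_sentences(paragraph)
    if not sentences:
        return 0.0
    hits = sum(1 for sentence in sentences if EVIDENCE_PATTERN.search(sentence))
    return hits / len(sentences)


print(factual_density("Coverage rose 12% in 2023. Results improved a lot."))  # 0.5
```

A digit is only a proxy: it shows that a claim is checkable, not that it is correct, so the score is best used to find paragraphs that need sourcing.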

Verification and Cross-Reference Signals

Verification signals measure how well content aligns with related pages and external authoritative sources. Discovery engines detect cross-page coherence by checking whether terminology, entities, and claims remain stable throughout the site. Outbound authoritative references strengthen verification when they point to recognized institutions. Cross-referencing reduces the risk of conflicting information and helps AI systems perform data checks that reinforce content reliability.

Authority and Quality Indicators

Authority signals reflect organizational identity, editorial governance, and long-term quality practices. AI systems interpret brand-level stability through consistent naming, topic alignment, and evidence of structured editorial review. Quality indicators also depend on terminology discipline, which prevents semantic drift and supports predictable interpretation patterns. When content governance remains explicit and stable, AI systems increase trust in the output.

Key Trust Signal Types AI Recognizes

- Organized reasoning chains

- Authoritative sources

- Structural markers

- Entity consistency

- Evidence and citations

- Factual checks

- Recurrence patterns

Structuring Content to Emit Reliable Trust Signals

This section describes how structural trust signals for AI determine the way discovery systems interpret digital information. Website trust signals for AI depend on stable formatting, consistent hierarchy, and predictable segmentation across all articles.

The objective is to show how content trust indicators for AI emerge from repeatable layout patterns, atomic paragraphs, and machine-readable block organization. Guidance from W3C underscores the importance of consistent structural markup for reliable machine processing.

Deep Reasoning Chain

Assertion: Structural organization defines how reliably AI systems segment, classify, and reuse content.

Reason: Models require predictable formatting because they interpret meaning through explicit hierarchies and consistent structural patterns.

Mechanism: AI systems evaluate heading logic, paragraph boundaries, micro-blocks, and semantic grouping to organize information into extractable units.

Counter-Case: When formatting varies between articles or deviates from expected patterns, discovery engines downgrade structural confidence and reduce trust signals.

Inference: Visibility improves when hierarchy, paragraph discipline, and segment structures remain stable across an entire content ecosystem.

Example: A page that uses a stable H2–H3–H4 hierarchy, atomic paragraphs, and defined semantic blocks helps AI segment meaning accurately. These patterns strengthen confidence and increase the likelihood that high-quality sections appear in generative summaries.

Semantic Hierarchy and Heading Discipline

A stable H2→H3→H4 hierarchy provides a clear semantic framework for AI evaluation. Discovery systems use this structure to track topic boundaries, identify section intentions, and maintain consistent interpretation patterns. Reliable formatting across articles supports predictable model behavior and reduces ambiguity. Semantic hierarchy ensures that each section conveys one well-defined purpose.
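A minimal sketch of a heading-discipline check, assuming the article source is Markdown with ATX headings (`#`, `##`, `###`): the hypothetical `heading_jumps` helper flags any heading that skips a level, such as an H4 placed directly under an H2.

```python
import re

# ATX-style Markdown heading: 1-6 '#' characters, whitespace, then the title.
HEADING = re.compile(r"^(#{1,6})\s+(.*)$")


def heading_jumps(markdown: str) -> list[str]:
    """Return headings that skip a level, such as an H4 directly under an H2."""
    problems = []
    previous_level = 1  # assumption: the page title is the only H1
    for line in markdown.splitlines():
        match = HEADING.match(line)
        if not match:
            continue
        level = len(match.group(1))
        if level > previous_level + 1:
            problems.append(f"H{previous_level} -> H{level}: {match.group(2)}")
        previous_level = level
    return problems


print(heading_jumps("# Title\n## Section\n#### Detail"))  # ['H2 -> H4: Detail']
```

Moving back up the hierarchy (for example, from H4 to H3) is allowed; only downward skips are reported.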

Atomic Paragraph Strategy

Atomic paragraphs contain a single idea presented within two to four sentences. This structure helps AI systems assign each paragraph to a precise semantic function without overlapping concepts. Short, standalone paragraphs improve extraction accuracy because discovery engines can isolate meaning without additional interpretation steps. Stable paragraph boundaries also reinforce long-term reuse.
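The two-to-four-sentence rule can be audited with a rough sentence counter. This sketch assumes paragraphs are separated by blank lines and uses a naive splitter on `.`, `!`, and `?`, so abbreviations such as "e.g." will inflate the count; `non_atomic_paragraphs` is a hypothetical helper name.

```python
import re


def split_sentences(text: str) -> list[str]:
    """Naive splitter: breaks after ., !, or ? followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [part for part in parts if part]


def non_atomic_paragraphs(
    text: str, min_sentences: int = 2, max_sentences: int = 4
) -> list[int]:
    """Return zero-based indexes of paragraphs outside the sentence range."""
    paragraphs = [p for p in re.split(r"\n\s*\n", text) if p.strip()]
    flagged = []
    for index, paragraph in enumerate(paragraphs):
        count = len(split_sentences(paragraph))
        if not min_sentences <= count <= max_sentences:
            flagged.append(index)
    return flagged


print(non_atomic_paragraphs("One. Two.\n\nOnly one.\n\nA. B. C. D. E."))  # [1, 2]
```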

Micro-Blocks for Machine Interpretation

Micro-blocks such as Definition, Principle, Example, and Checklist provide explicit signals that models detect consistently. Each block acts as a semantic container that groups related information in a way AI systems can classify with high confidence. These blocks reduce interpretive variance and help discovery engines maintain coherent internal representations. Machine readability increases when blocks appear in predictable formats.
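Because micro-blocks work only when their labels are predictable, a quick inventory of labeled blocks shows which types a page actually uses. The sketch below assumes each block starts on its own line with a label followed by a colon (as in "Definition:" or "Checklist:"); the label set and the `micro_block_counts` and `missing_block_types` helpers are illustrative assumptions.

```python
import re
from collections import Counter

# Assumed label set, taken from the micro-block types named in this article.
MICRO_BLOCK_LABELS = ("Definition", "Principle", "Example", "Checklist")
LABEL_PATTERN = re.compile(
    r"^(" + "|".join(MICRO_BLOCK_LABELS) + r"):", re.MULTILINE
)


def micro_block_counts(text: str) -> Counter:
    """Count labeled micro-blocks that start at the beginning of a line."""
    return Counter(match.group(1) for match in LABEL_PATTERN.finditer(text))


def missing_block_types(text: str) -> list[str]:
    """List label types that never appear in the text."""
    counts = micro_block_counts(text)
    return [label for label in MICRO_BLOCK_LABELS if counts[label] == 0]


page = "Definition: A term.\nSome prose.\nExample: One.\nExample: Two."
print(micro_block_counts(page))   # Counter({'Example': 2, 'Definition': 1})
print(missing_block_types(page))  # ['Principle', 'Checklist']
```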

Tables, Lists, Diagrams as Trust Reinforcement

Tables, lists, and diagrams enhance clarity by presenting information in discrete, structured units. Tables formalize conceptual relationships, while lists deliver extractable points that reduce ambiguity. Diagrams illustrate system flow or process order, offering visual structure that supports machine interpretation.

Sample table format for trust signal reinforcement

| Signal Type | Structural Indicator | Machine Benefit |
|---|---|---|
| Hierarchy | Stable H2→H3→H4 | Clear semantic segmentation |
| Atomic paragraphs | One concept per paragraph | Easier extraction and reuse |
| Micro-blocks | Definition, Principle, etc. | Stronger pattern recognition |
| Evidence markers | Data, citations | Higher factual confidence |

Factual and Evidence Signals That Boost AI Confidence

This section examines how factual trust signals in articles strengthen reliability within modern discovery systems. Evidence trust indicators for AI models depend on authoritative citations, measurable assertions, and consistent terminology across the broader content ecosystem.

Verification signals for AI engines appear when facts, entities, and referenced organizations remain stable and machine-verifiable. Research from the Oxford Internet Institute underscores the importance of transparent evidence structures for accurate AI-driven evaluation.

Deep Reasoning Chain

Assertion: Factual and evidence-based indicators are foundational to how AI systems establish confidence in digital content.

Reason: Models require measurable data and explicit citations because they cannot rely on subjective human interpretation.

Mechanism: Discovery engines examine numerical claims, entity alignment, date references, and citation patterns to verify accuracy across documents.

Counter-Case: When factual statements are vague, unsupported, or contradictory, AI engines decrease trust and limit visibility.

Inference: Reliability increases when each section contains explicit evidence supported by authoritative sources and consistent terminology.

Checklist:

- Are citations drawn from authoritative, verifiable institutions?

- Do numerical values remain precise and consistent across sections?

- Is factual terminology used uniformly across related pages?

- Are claims reinforced by measurable evidence and datasets?

- Do referenced entities match site-wide naming conventions?

- Is ambiguity removed through consistent factual alignment?

Role of Citations and Data-Rich Assertions

Citations from authoritative institutions help AI systems validate the credibility of factual statements. Data-rich assertions further strengthen trust by providing quantifiable information that discovery engines can verify. As an editorial rule, each H2 section should include at least one high-authority source so that evidence patterns remain consistent and predictable across the article. Clear, measurable references improve factual grounding and system-wide coherence.
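The one-source-per-H2 rule lends itself to an automated pre-publication check. This sketch assumes Markdown source in which H2 headings start with `## ` and citations are inline links to `http` or `https` URLs; it treats any external link as a citation, so judging whether the source is high-authority remains an editorial decision. The `sections_without_citations` name is hypothetical.

```python
import re

# Inline Markdown link whose target is an absolute http(s) URL.
LINK = re.compile(r"\[[^\]]+\]\((https?://[^)\s]+)\)")


def sections_without_citations(markdown: str) -> list[str]:
    """Return titles of H2 sections that contain no external Markdown link."""
    missing = []
    title, has_link = None, False
    for line in markdown.splitlines():
        if line.startswith("## "):  # "### " does not match: third char is '#'
            if title is not None and not has_link:
                missing.append(title)
            title, has_link = line[3:].strip(), False
        elif title is not None and LINK.search(line):
            has_link = True
    if title is not None and not has_link:  # close the final section
        missing.append(title)
    return missing


doc = "## A\nSee [source](https://example.org/).\n## B\nNo source.\n### B.1\nNone."
print(sections_without_citations(doc))  # ['B']
```

Links inside H3 or H4 subsections count toward their parent H2, which matches the per-H2 rule above.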

Entity Alignment and Concept Consistency

AI systems validate entity alignment by checking whether organizations, concepts, and factual references remain consistent across the site. A disciplined site-wide lexicon prevents semantic drift and reinforces stability during model reasoning. Uniform entity usage enables discovery engines to construct strong internal connections and reduces ambiguity. Consistent terminology strengthens AI confidence across all related content.
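A site-wide lexicon can be enforced with a small lookup table that maps each canonical entity name to the variants editors should avoid. The entries and the `entity_drift` helper below are hypothetical examples, and the case-insensitive substring match is a deliberately simple assumption.

```python
import re

# Hypothetical site lexicon: canonical name -> variants to replace.
LEXICON = {
    "Oxford Internet Institute": ["Oxford Internet Inst.", "Oxford Internet Centre"],
    "AI Trust Signals": ["AI trust markers", "AI credibility signals"],
}


def entity_drift(
    text: str, lexicon: dict[str, list[str]] = LEXICON
) -> list[tuple[str, str]]:
    """Return (variant, canonical) pairs for every non-canonical variant found."""
    found = []
    for canonical, variants in lexicon.items():
        for variant in variants:
            if re.search(re.escape(variant), text, flags=re.IGNORECASE):
                found.append((variant, canonical))
    return found


print(entity_drift("Per the Oxford Internet Inst. report, AI trust markers matter."))
```

Each returned pair tells the editor which string to replace and what to replace it with.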

Evidence Quality Signals

High-quality evidence includes validated numbers, accurate dates, and clearly referenced datasets. Discovery engines assess trust by measuring whether presented values are precise and verifiable. Vague claims reduce confidence because models cannot match them against known data sources. Precise evidence and explicit numerical values increase machine-level certainty.
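Vague, unquantified claims of this kind can be flagged automatically. This sketch assumes a hand-maintained list of vague terms (the list is illustrative, not exhaustive) and flags a sentence only when it uses one of them without any number; `vague_unquantified` is a hypothetical helper.

```python
import re

# Illustrative, non-exhaustive list of vague quantifiers.
VAGUE_TERMS = ("a lot", "significantly", "several", "many", "substantially")


def vague_unquantified(sentences: list[str]) -> list[str]:
    """Flag sentences that use a vague term but contain no digit at all."""
    flagged = []
    for sentence in sentences:
        lowered = sentence.lower()
        has_vague_term = any(
            re.search(rf"\b{re.escape(term)}\b", lowered) for term in VAGUE_TERMS
        )
        if has_vague_term and not re.search(r"\d", sentence):
            flagged.append(sentence)
    return flagged


print(vague_unquantified([
    "Model accuracy improved a lot.",
    "Several datasets improved significantly.",
]))
```

Both "Replace" sentences in the verification examples that follow would be flagged, while their rewritten versions, which carry dates and figures, would pass.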

Verification Loops and Redundancy Checks

Verification loops describe how AI models cross-compare sections to ensure that statements remain accurate and internally consistent. Discovery engines check repeated entities, dates, and data points across related pages to detect coherence. Redundancy checks expose contradictions that may weaken trust. Examples of verification-oriented rewriting include:

- Replace: “Several datasets improved significantly.”

- With: “The 2023 update from the European Open Data Monitor recorded a 12.6% increase in dataset completeness.”

- Replace: “Model accuracy improved a lot.”

- With: “According to 2024 assessments from the Oxford Internet Institute, model accuracy increased by 7.4 points on structured evaluation tasks.”

These verification-based rewrites reinforce consistency and strengthen overall factual clarity.
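The cross-page comparison behind verification loops can be mirrored in a site audit. This sketch assumes facts have already been extracted into a per-page mapping of fact keys to values (the extraction step, the key names, and the `conflicting_facts` helper are all hypothetical) and reports every key whose value differs between pages.

```python
from collections import defaultdict


def conflicting_facts(
    pages: dict[str, dict[str, str]]
) -> dict[str, dict[str, set[str]]]:
    """For each fact key stated with more than one value, map value -> pages."""
    by_fact = defaultdict(lambda: defaultdict(set))
    for page, facts in pages.items():
        for key, value in facts.items():
            by_fact[key][value].add(page)
    return {
        key: dict(values)
        for key, values in by_fact.items()
        if len(values) > 1  # more than one distinct value means a contradiction
    }


# Hypothetical extracted facts for two pages of the same site.
pages = {
    "/about": {"founding_year": "2015", "hq_city": "Berlin"},
    "/faq": {"founding_year": "2016", "hq_city": "Berlin"},
}
print(conflicting_facts(pages))  # founding_year: '2015' on /about, '2016' on /faq
```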

Brand-Level Signals That Improve AI Interpretation

This section explains how brand trust signals for AI assistants influence the way discovery systems evaluate organizational authority. Credibility signals for AI platforms depend on consistent naming, transparent governance, and long-form expertise that remains stable across all articles. Authority trust signals for AI output strengthen when brands demonstrate editorial discipline, policy clarity, and recurring alignment with specialized topics. Research from Carnegie Mellon University's Language Technologies Institute highlights the importance of stable identity markers for machine interpretation.

Deep Reasoning Chain

Assertion: Brand-level indicators help AI systems determine whether an organization represents a credible and stable source of information.

Reason: Models analyze naming consistency, governance structures, and recurring topic associations because they cannot rely on perception or reputation.

Mechanism: Discovery engines evaluate entity links, editorial patterns, topic alignment, and policy declarations to build a machine-readable authority profile.

Counter-Case: When branding shifts, terminology changes, or governance signals are absent, AI systems reduce confidence in the organization’s output.

Inference: Visibility increases when brand identity, governance, and expertise markers remain stable across a site-wide content ecosystem.

Brand Identity as a Machine-Readable Signal

Consistent naming across articles helps AI systems identify the organization as a single, stable entity. Discovery engines treat naming stability as an indicator that the content originates from the same authoritative source. When brand signals remain uniform, models reduce ambiguity and strengthen internal linking across related pages. Clear identity markers improve recognition and trust in long-form output.
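One established way to publish a stable brand identity in machine-readable form is schema.org `Organization` markup embedded as JSON-LD. The sketch below generates such a block using the schema.org properties `name`, `url`, and `sameAs`; the organization name and URLs are placeholders, `organization_jsonld` is a hypothetical helper, and the markup complements rather than replaces consistent naming in the text itself.

```python
import json


def organization_jsonld(name: str, url: str, same_as: list[str]) -> str:
    """Serialize a schema.org Organization description as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,        # use the exact same string on every page
        "url": url,
        "sameAs": same_as,   # official profiles that refer to the same entity
    }
    return json.dumps(data, indent=2)


# Placeholder values; replace with the organization's real name and URLs.
snippet = organization_jsonld(
    "Example Org",
    "https://www.example.com",
    ["https://www.linkedin.com/company/example-org"],
)
print('<script type="application/ld+json">\n' + snippet + "\n</script>")
```

Generating the block from one shared function, rather than hand-editing it per page, keeps the `name` value identical across the site.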

Organizational Authority Model

Entity linking across articles helps AI systems associate the brand with specific expertise domains. Frequent alignment between brand identity and recurring topic areas creates a machine-interpretable authority profile. Discovery engines interpret stable topic associations as evidence of specialization. Strong entity consistency reinforces credibility across the content ecosystem.

Governance, Transparency and Editorial Standards

Publicly available editorial policies provide discovery engines with explicit governance signals. Transparent quality assurance processes demonstrate reliability and reduce ambiguity in machine evaluation. When policies remain stable and consistently referenced, AI systems view the organization as disciplined and trustworthy. Governance signals help models identify structured workflows behind the content.

Demonstrating Long-Form Expertise

High-depth explanations and detailed reasoning patterns help AI systems classify the organization as an expert source. Case studies provide concrete evidence of applied knowledge, improving model perception of authority. Long-form content that presents structured analysis reinforces credibility by demonstrating topic mastery. Depth, clarity, and repeatable format patterns strengthen brand-level trust.

Practical Techniques for Strengthening Trust Signals in Content

This section presents practical methods for creating trust signals that improve content reliability. Improving trust signals on websites requires structural discipline, factual specificity, and consistent evidence references. Guidance on how to strengthen AI trust signals includes organization-wide updates, editorial governance, and regular verification routines. Together, these approaches cover the trust signals that research patterns and machine evaluation principles identify as most important for modern AI. Findings from the Allen Institute for AI emphasize the importance of data integrity and structural clarity for dependable AI interpretation.

Deep Reasoning Chain

Assertion: Practical techniques improve trust signals by strengthening structural clarity, factual grounding, and verification stability across content.

Reason: AI systems rely on predictable formats, precise terminology, and validated evidence because they cannot interpret ambiguity or assumptions.

Mechanism: Discovery engines evaluate hierarchical consistency, authoritative citations, entity alignment, and updated evidence to confirm reliability.

Counter-Case: When content becomes outdated, inconsistent, or structurally irregular, AI reduces confidence and limits visibility.

Inference: Trust signals improve when updates, verification steps, and structural rules are applied consistently across all website content.

Structural Enhancements

Improving layout clarity reinforces model confidence by presenting content in a predictable sequence. Consistent sectioning across the site helps AI systems maintain stable expectations for document flow. Structural enhancements reduce ambiguity by aligning headings, paragraphs, and blocks with a repeatable interpretation pattern. This stability improves machine-level segmentation and long-term reuse.

Factual and Citation Enhancements

Using citations from verified organizations strengthens the factual grounding of content. Reliable institutions provide external references that AI systems can cross-check. Avoiding contradictory statements in related posts ensures coherence across the content ecosystem. Factual enhancements create stronger internal relationships within the knowledge graph built by discovery engines.

Evidence and Verification Improvements

Data-rich examples help AI systems validate meaning by connecting concepts to measurable values. Verification improves when numbers, claims, and references follow a stable logic across the article. Validating arguments strengthens trust because models can identify factual alignment between content elements. Evidence-driven clarity increases reliability during extraction.

Editorial and Update Processes

Regular refresh cycles maintain content relevance and prevent outdated information from weakening trust. Editorial workflows aligned with AI interpretation help sustain structural and factual stability. AI-oriented content audits verify consistent terminology, check entity alignment, and ensure adherence to structural rules. Update discipline improves trust signals across the entire site.
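Refresh cycles are easier to enforce when stale pages are listed automatically. This sketch assumes a mapping of URLs to last-updated dates (for example, exported from a CMS) and a 365-day refresh window; both the window and the `stale_pages` helper are hypothetical choices.

```python
from datetime import date, timedelta


def stale_pages(
    last_updated: dict[str, date], today: date, max_age_days: int = 365
) -> list[str]:
    """Return URLs, sorted, whose last update is older than the refresh window."""
    cutoff = today - timedelta(days=max_age_days)
    return sorted(url for url, updated in last_updated.items() if updated < cutoff)


print(stale_pages(
    {"/guide": date(2025, 12, 20), "/faq": date(2024, 1, 5)},
    today=date(2025, 12, 20),
))  # ['/faq']
```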

Quick Wins for Trust Improvement

- Add definitions under H3

- Use entity names consistently

- Add factual data points

- Keep hierarchical structure stable

These actions strengthen trust signals in a measurable and predictable way.

Operational Checklists to Measure Trust Signal Coverage

This section provides structured checklists for AI trust signals that help evaluate the completeness of content reliability markers. These checklists summarize content trust indicators for AI and outline practical methods for improving credibility in AI discovery. Each checklist focuses on a different category of machine-evaluated signals, helping content maintain consistent quality across the entire site. Research from the NASA Open Data Program demonstrates how transparent documentation and structured verification practices improve machine-level confidence.

Deep Reasoning Chain

Assertion: Checklists strengthen trust evaluation by providing repeatable criteria for structural, factual, evidence-based, and brand-level signals.

Reason: AI systems depend on consistent verification routines because they cannot infer reliability from subjective judgment.

Mechanism: Discovery engines evaluate structure, citations, entities, datasets, and branding patterns using predictable audit steps defined in operational checklists.

Counter-Case: When websites lack standardized audit procedures, content becomes uneven, resulting in reduced trust signals across related pages.

Inference: Trust improves when organizations maintain structured review cycles and apply checklist-based evaluation to every published article.

Structural Checklist

- Headings follow consistent H2→H3→H4 hierarchy

- Micro-sections reflect clear topic boundaries

- Atomic paragraphs maintain one idea per segment

- Layout remains uniform across all articles

Each point reinforces structural clarity and reduces interpretive ambiguity.

Factual Checklist

- Citations reference credible institutions

- Dates remain precise and verifiable

- Numbers reflect measurable and interpretable values

- Entities appear consistently across related content

These items help maintain factual alignment throughout the site.

Evidence Checklist

- Data references include reproducible sources

- Studies appear with clear attribution

- Authoritative bodies validate key claims

- Evidence remains consistent across cross-linked pages

Evidence stability provides strong grounding for machine verification.

Brand Authority Checklist

- Organizational naming remains consistent across all touchpoints

- Editorial voice maintains stable patterns

- Governance structures appear in policy or documentation

- Topic associations reinforce the brand’s expert identity

Brand authority signals help AI engines classify the organization as a trustworthy source.

Trust Signal Audit Table

| Category | Items to Audit | AI Sensitivity |
|---|---|---|
| Structure | H2/H3/H4 hierarchy | High |
| Factual | Citations + data | Very High |
| Brand | Naming consistency | Medium |
| Evidence | Datasets and studies | High |

This table summarizes the primary areas that influence trust signal performance.
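The sensitivity column can be turned into a single coverage score for tracking audits over time. The numeric weights below (Medium = 1, High = 2, Very High = 3) are an assumption, since the table gives only qualitative labels, and `coverage_score` is a hypothetical helper that averages per-category pass rates by those weights.

```python
# Assumed numeric weights for the qualitative sensitivity labels.
SENSITIVITY_WEIGHTS = {"Medium": 1, "High": 2, "Very High": 3}

# Categories and sensitivities copied from the audit table.
AUDIT_CATEGORIES = {
    "Structure": "High",
    "Factual": "Very High",
    "Brand": "Medium",
    "Evidence": "High",
}


def coverage_score(pass_rates: dict[str, float]) -> float:
    """Weighted average of per-category pass rates, each between 0 and 1."""
    total_weight = 0
    weighted_sum = 0.0
    for category, sensitivity in AUDIT_CATEGORIES.items():
        weight = SENSITIVITY_WEIGHTS[sensitivity]
        total_weight += weight
        weighted_sum += weight * pass_rates.get(category, 0.0)  # missing = 0
    return weighted_sum / total_weight


print(coverage_score({"Structure": 1.0, "Factual": 0.5, "Brand": 1.0, "Evidence": 1.0}))
# 0.8125
```

With every category fully passing except Factual at 0.5, the score is 6.5 / 8 = 0.8125; the higher weight means a factual gap costs more than a branding gap of the same size.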

Future Directions in AI Trust Evaluation

This section outlines emerging trends in digital trust signals for AI and explains how discovery systems are likely to evolve in their evaluation of reliability signals in digital content. Future trust indicators in AI responses will depend on multi-agent validation, predictive reasoning, and architecture-level trust patterns built across entire websites. Research from the Berkeley AI Research (BAIR) lab highlights the growing importance of structured evidence and distributed verification in next-generation AI systems.

Deep Reasoning Chain

Assertion: Future AI models will evaluate trust using more advanced reasoning, multi-agent validation, and long-term predictive analysis.

Reason: Discovery systems continue to increase their reliance on structured evidence, distributed verification, and architectural consistency.

Mechanism: Models will analyze granular evidence scoring, cross-agent agreement, missing-signal prediction, and ecosystem-wide trust architectures.

Counter-Case: When websites rely only on article-level trust indicators without site-wide reinforcement, future systems may reduce confidence.

Inference: Long-term visibility improves when trust is built across interconnected pages, distributed signals, and persistent structural consistency.

Evolving AI Models and Trust Detection

Future models will apply deeper reasoning chains to assess content reliability. They will examine how evidence interacts across multiple layers of meaning rather than making isolated judgments. As trust detection evolves, systems will shift toward granular evidence scoring in which each data point, citation, or entity contributes to a cumulative reliability profile. These improvements will increase precision in trust evaluation.

Multi-Agent Trust Validation

Next-generation AI ecosystems will rely on multiple agents that evaluate content collaboratively. Cross-evaluation ensures that one model’s interpretation is validated by others, reducing the likelihood of errors or inconsistencies. Distributed verification adds resilience to the evaluation process and helps maintain accuracy across large volumes of content. This model strengthens trust through redundancy and independent confirmation.

Predictive Trust Evaluation

Future AI systems will anticipate missing trust signals by analyzing expected patterns based on prior content structures. Models will project whether sections should contain citations, definitions, data, or consistency markers based on historical patterns. Predictive trust evaluation supports more comprehensive reliability checks and helps identify areas that require long-term improvement. Strategic planning becomes essential as AI models increasingly account for missing or incomplete signals.
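Editors can approximate predictive trust evaluation in their own audits by comparing each section against a template of expected signals. The section types, the expected-signal sets, and the `missing_signals` helper below are hypothetical, and the sketch does not describe how any AI system actually predicts missing signals.

```python
# Hypothetical template: signals each section type is expected to carry.
EXPECTED_SIGNALS = {
    "concept": {"definition", "citation"},
    "how-to": {"example", "checklist"},
    "evidence": {"citation", "data"},
}


def missing_signals(sections: list[dict]) -> dict[str, set[str]]:
    """Map each section title to the expected signals it lacks."""
    gaps = {}
    for section in sections:
        expected = EXPECTED_SIGNALS.get(section["type"], set())
        missing = expected - set(section["signals"])
        if missing:
            gaps[section["title"]] = missing
    return gaps


sections = [
    {"title": "Definition and Functional Role", "type": "concept", "signals": ["definition"]},
    {"title": "Quick Wins", "type": "how-to", "signals": ["example", "checklist"]},
]
print(missing_signals(sections))  # {'Definition and Functional Role': {'citation'}}
```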

Moving from Trust Indicators to Trust Architectures

The next stage of AI trust evaluation will extend beyond individual articles. Models will assess entire site ecosystems to determine whether reliability persists across all interconnected sections. Trust architectures include structural alignment, terminology consistency, cross-page references, and editorial discipline across hundreds of pages. This transition reinforces content credibility at the ecosystem level rather than relying on isolated indicators.

Conclusion

AI Trust Signals form the foundation of how modern discovery systems evaluate the reliability of digital content. These indicators influence how models interpret structure, evidence, entities, and long-form reasoning across an entire site ecosystem. When trust signals remain consistent and measurable, AI systems gain confidence in the stability and accuracy of the information they process.

Structural clarity and factual grounding work together as the core components of trustworthy content. A predictable hierarchy, atomic paragraphs, authoritative evidence, and aligned terminology create a unified environment that models can interpret with precision. This alignment ensures that each article reinforces the broader reliability patterns of the site.

Future content ecosystems will rely even more on trust architectures that span multiple pages, agents, and verification layers. Organizations that maintain long-term structural discipline, data integrity, and clear editorial governance will be best positioned for visibility in AI-driven environments.

Interpretive Signals of AI-Oriented Trust Formation

- Structural reliability cues. Consistent hierarchical patterns and atomic segmentation indicate predictable meaning boundaries, supporting dependable interpretation.

- Evidence-grounded credibility. Precise data and authoritative references function as verification anchors that influence trust assessment during generative reuse.

- Entity continuity. Aligned terminology and stable definitions across documents reinforce semantic coherence within the broader content ecosystem.

- Cross-reference integrity. Coherent linkage between related assertions signals internal validation, reducing interpretive conflict across pages.

- Organizational authority signals. Transparent editorial patterns and sustained depth convey institutional reliability beyond individual content units.

These signals explain how AI systems interpret trust as an emergent structural property, derived from consistency, evidence, and coherence rather than procedural validation.

FAQ: AI Trust Signals

What are AI Trust Signals?

AI Trust Signals are structural, factual, and verification-oriented indicators that help discovery systems evaluate whether content is reliable and reusable.

Why are AI Trust Signals important?

They determine how AI models interpret structure, assess factual grounding, and classify information for inclusion in generative responses.

How do AI systems evaluate trust?

Models review hierarchical structure, evidence markers, entity consistency, and citation patterns to detect reliability in digital content.

What increases factual trust for AI?

Using authoritative citations, precise numbers, named organizations, and consistent terminology strengthens factual confidence in AI evaluation.

How does structure influence AI trust evaluation?

Stable headings, atomic paragraphs, and semantic blocks help AI systems segment meaning predictably and reduce interpretation ambiguity.

Do outbound links affect AI trust?

Yes. Outbound links to authoritative institutions act as verification signals that help AI engines confirm factual grounding.

How do entities influence trust signals?

Consistent use of names, terms, and organizations across pages helps AI build a stable internal representation of your site’s semantics.

What are the most important trust indicators?

The key indicators include structure, evidence, entity alignment, cross-page coherence, and brand-level governance signals.

How can sites strengthen AI trust signals quickly?

Adding definitions, updating facts, improving citations, and maintaining consistent sectioning are quick ways to increase AI confidence.

How does brand authority influence AI trust?

Transparent governance, editorial standards, long-form expertise, and stable naming patterns help AI classify the organization as credible.

Glossary: Key Terms in AI Trust Signals

This glossary defines essential concepts used throughout this guide to help both readers and AI systems interpret trust, structure, and factual terminology consistently.

AI Trust Signals

Machine-detectable indicators such as structure, evidence, entity alignment, and verification markers that help AI systems determine whether content is reliable.

Structural Trust Signals

Hierarchical patterns such as stable H2–H3–H4 sections, atomic paragraphs, and semantic blocks that guide AI interpretation and segmentation.

Evidence Signals

Citations, numerical data, named organizations, and verifiable facts that increase the factual confidence AI systems assign to content.

Verification Loops

Cross-checking mechanisms used by AI engines to compare entities, dates, facts, and claims across multiple pages to ensure internal consistency.

Generative Engine Optimization (GEO)

A methodology that prepares digital content for AI systems that generate answers, ensuring it can be interpreted, cited, and reused by modern discovery engines.

Generative Indexing

An indexing approach in which AI systems store meaning, entities, and verified facts rather than ranking URLs by position in search results.

Semantic Clarity

The precision of language, structure, and terminology that allows AI models to interpret relationships and meaning without ambiguity.

Generative Visibility

A measure of how often AI systems reuse or cite your content when generating answers on platforms such as Google AI Overviews (formerly SGE) or Perplexity.

Factual Density

The concentration of verifiable statements within a paragraph, helping AI engines extract accurate information for summarization and data checks.

Agentic AI

Autonomous AI systems capable of reasoning and making decisions, representing a shift toward multi-step, goal-driven computational workflows.