Last Updated on December 20, 2025 by PostUpgrade

Autonomous Search Systems: When AI Finds Before You Ask

Search systems have historically responded to explicit user queries: a user formulates a request, submits it, and receives ranked results. This interaction model no longer fully describes how AI-driven environments operate in practice. Modern systems increasingly select and surface information without waiting for a direct request, and autonomous search systems are emerging as a distinct class of information infrastructure.

Autonomous search systems operate continuously across data streams and contextual signals. Instead of reacting to queries, they evaluate relevance in advance and determine which information should appear. In this context, the system performs selection as an internal process rather than a user-triggered action. Therefore, interaction shifts from explicit requests to system-driven exposure.

This article analyzes how these systems function at the architectural and logical levels. It examines how internal signals replace query-first assumptions. It also explains how non-query interaction becomes a system behavior, not a user feature. Consequently, the focus moves from interfaces to the underlying mechanisms that govern information availability before demand appears.

Defining Autonomous Search Systems

Autonomous search systems appear as AI environments move beyond query-driven interaction toward continuous information evaluation. This section establishes a precise definition and clear conceptual boundaries for autonomous search systems and separates them from adjacent but distinct approaches. The definition draws on research in computational language understanding, including work associated with Stanford NLP.

Definition: AI understanding is the system’s ability to interpret contextual signals, structural boundaries, and decision logic in order to evaluate relevance and surface information without relying on explicit user queries.

Claim: Autonomous search systems operate without requiring explicit user queries.

Rationale: Traditional search relies on user-initiated requests, which restricts information exposure to articulated intent.

Mechanism: These systems apply autonomous information selection and relevance detection to determine what content should appear.

Counterargument: Performance degrades when contextual signals are sparse, unstable, or contradictory.

Conclusion: Autonomous search requires strict signal governance to maintain reliability and consistency.

Autonomous search behavior

Autonomous search behavior describes how a system continuously evaluates information relevance in the absence of direct requests. Instead of waiting for a trigger, the system monitors contextual inputs, historical interactions, and environmental signals to guide selection. As a result, retrieval becomes a persistent process rather than a reactive event.

At the system level, this behavior shifts responsibility from the user to the infrastructure itself. Therefore, relevance assessment occurs before interaction, not after it. In this way, the system defines exposure logic independently of explicit intent.

Put simply, autonomous search behavior means the system decides when information matters, even if no one asks for it.

Autonomous information systems

Autonomous information systems extend beyond retrieval and include prioritization, filtering, and sequencing of content. These systems treat information as a continuous flow that requires ordering and evaluation at all times. Consequently, search becomes one function within a broader decision framework.

Such systems separate data ingestion from exposure logic. As a result, they can suppress, delay, or elevate information based on contextual relevance rather than query frequency. This separation enables more adaptive information handling.

In simple terms, autonomous information systems manage information like an ongoing process, not a response to a command.

| Traditional Search | Autonomous Search |
|---|---|
| Triggered by explicit queries | Operates without explicit queries |
| User defines timing | System defines timing |
| Reactive retrieval | Continuous evaluation |
| Query-based relevance | Context-based relevance |

Autonomous Retrieval Logic Without Explicit Queries

Autonomous retrieval logic emerges as AI systems move away from query-first dependency and toward continuous evaluation models. This section explains how retrieval functions when no direct request initiates the process, focusing on internal logic rather than user-facing interfaces. The shift aligns with research on inference and representation learning at MIT CSAIL, where non-query-driven information access has been studied as a system property rather than a UI feature.

Definition: Autonomous retrieval logic is the internal decision framework that allows a system to select and surface information without receiving an explicit query. It governs how relevance is inferred, evaluated, and acted upon before user intent becomes observable.

Claim: Search without explicit queries relies on internal intent modeling.

Rationale: Users often possess informational needs that remain unarticulated or only partially formed.

Mechanism: Systems infer needs through pre-request information selection combined with ongoing context evaluation.

Counterargument: Inference errors increase noise when contextual signals lack stability or coherence.

Conclusion: Reliable autonomous retrieval depends on bounded inference models that constrain speculative selection.

Principle: In autonomous search environments, information becomes accessible to AI systems when its structure, definitions, and contextual signals remain stable enough to support reliable non-query interpretation.

Internal intent modeling in retrieval systems

Internal intent modeling refers to the system’s ability to approximate informational need without relying on a direct request. Instead of processing explicit input, the system evaluates behavioral patterns, situational context, and environmental cues to estimate relevance. As a result, retrieval becomes anticipatory rather than reactive.

At the same time, this logic shifts uncertainty from the user to the system. Therefore, the quality of retrieval depends on how accurately internal models represent intent under incomplete information. In practice, this requires conservative assumptions and controlled inference depth.

Put simply, the system tries to understand what may be useful before anyone asks for it.

Context evaluation before request formation

Context evaluation occurs continuously and independently of user interaction. The system analyzes temporal signals, historical usage, and surrounding conditions to assess whether information should surface. Consequently, retrieval logic operates even when no visible interaction takes place.

However, context alone does not guarantee accuracy. Therefore, systems must balance sensitivity to change with resistance to noise. This balance determines whether retrieval remains relevant or becomes intrusive.

In simple terms, the system watches the situation and decides if information fits the moment.

Signal categories used before queries

- Behavioral signals derived from past interactions and usage patterns

- Temporal signals related to timing, sequence, and recency

- Environmental signals reflecting device state, location context, or operational conditions

- Content signals based on semantic proximity and informational density

Together, these signal categories allow autonomous retrieval logic to function without relying on explicit queries, while still maintaining controlled relevance.
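
The four signal categories above can be sketched as a small typed structure. This is a minimal illustration, not a reference implementation: the names `SignalCategory`, `Signal`, and `group_by_category`, and the choice to normalize values to the range 0 to 1, are assumptions made for the example.

```python
from dataclasses import dataclass
from enum import Enum


class SignalCategory(Enum):
    """The four pre-query signal categories listed above."""
    BEHAVIORAL = "behavioral"
    TEMPORAL = "temporal"
    ENVIRONMENTAL = "environmental"
    CONTENT = "content"


@dataclass(frozen=True)
class Signal:
    category: SignalCategory
    name: str     # hypothetical identifier, e.g. "recent_opens"
    value: float  # assumed to be normalized to [0, 1]

    def __post_init__(self) -> None:
        # Reject values outside the assumed normalized range.
        if not 0.0 <= self.value <= 1.0:
            raise ValueError(f"{self.name}: value must be in [0, 1]")


def group_by_category(signals: list[Signal]) -> dict[SignalCategory, list[Signal]]:
    """Bucket signals so each category can be evaluated separately."""
    grouped: dict[SignalCategory, list[Signal]] = {c: [] for c in SignalCategory}
    for signal in signals:
        grouped[signal.category].append(signal)
    return grouped
```

Keeping the category explicit on every signal lets later stages weight or audit each category independently instead of treating all inputs as one undifferentiated score.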

Autonomous Relevance Detection and Ranking

Relevance assessment becomes complex when systems operate without direct requests. This section explains how autonomous relevance detection functions as an internal ranking process rather than a response to expressed intent. Research from Berkeley BAIR provides foundational insight into how models evaluate relevance through signal composition instead of query matching.

Definition: Autonomous relevance detection is the process by which a system evaluates informational importance without relying on explicit user queries. It determines priority by interpreting contextual, behavioral, and environmental signals as substitutes for declared intent.

Claim: Relevance can be assessed without direct queries.

Rationale: Contextual and behavioral signals can replace explicit intent indicators.

Mechanism: Autonomous ranking logic evaluates confidence-weighted signals to establish ordering.

Counterargument: Ranking accuracy declines in environments with sparse or unstable signals.

Conclusion: Autonomous relevance depends on both signal diversity and calibrated weighting.

Signal-based relevance formation

Signal-based relevance formation relies on combining multiple inputs to approximate informational importance. Instead of matching keywords, the system evaluates how signals interact across time and context. As a result, relevance emerges from pattern alignment rather than direct instruction.

However, signal interpretation requires normalization to avoid bias. Therefore, systems assign weights based on reliability and historical performance. This approach reduces volatility while preserving responsiveness.

Put simply, the system decides what matters by comparing signals, not by reading a request.

Confidence weighting in ranking logic

Confidence weighting assigns relative importance to signals based on their predictive value. The system increases weight for signals with consistent outcomes and reduces weight for noisy inputs. Consequently, ranking stabilizes even when inputs fluctuate.

At the same time, weighting must adapt to change. Therefore, models recalibrate weights as environments evolve. This balance maintains accuracy without freezing relevance logic.

In simple terms, the system trusts some signals more than others and adjusts that trust over time.
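
One way to picture confidence weighting is a weighted average whose weights drift toward 1 when a signal proves useful and toward 0 when it does not. The sketch below is illustrative only: the function names, the update rule, and the default `rate` of 0.1 are assumptions for the example, not a description of any particular system.

```python
def weighted_relevance(values: dict[str, float], weights: dict[str, float]) -> float:
    """Confidence-weighted average of signal values.

    Weights do not need to sum to 1; the result is normalized by their total.
    """
    total = sum(weights[name] for name in values)
    if total == 0:
        return 0.0
    return sum(values[name] * weights[name] for name in values) / total


def recalibrate(weight: float, was_useful: bool, rate: float = 0.1) -> float:
    """Nudge a weight toward 1.0 after a useful outcome, toward 0.0 otherwise."""
    target = 1.0 if was_useful else 0.0
    return weight + rate * (target - weight)


# Example: a reliable signal (weight 0.75) dominates a noisy one (weight 0.25).
score = weighted_relevance(
    {"behavioral": 1.0, "environmental": 0.0},
    {"behavioral": 0.75, "environmental": 0.25},
)
```

A small `rate` keeps ranking stable when inputs fluctuate, while still letting weights follow gradual changes in the environment.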

Example: A system that organizes information into clearly defined relevance units with stable terminology enables autonomous ranking logic to compare signals consistently, increasing the probability that high-confidence information is surfaced without user initiation.

Ranking factors and signal types

| Ranking factor | Signal type |
|---|---|
| Temporal alignment | Time-based activity patterns |
| Behavioral consistency | Repeated interaction indicators |
| Contextual proximity | Environmental and situational cues |
| Semantic density | Informational richness of content |

Together, these factors allow relevance judgment without queries while supporting confidence-based information selection through structured ranking logic.

Context Analysis and Signal Interpretation

As AI systems move beyond keyword matching, they increasingly rely on environmental understanding rather than textual triggers. This section explains how autonomous context analysis enables systems to interpret situations and guide selection decisions, with a focus on signal interpretation. This perspective reflects research on digital context and information environments developed by the Oxford Internet Institute.

Definition: Autonomous context analysis is the process by which a system constructs and updates an internal representation of a situation using non-textual and contextual signals. It allows relevance decisions to reflect conditions surrounding information use rather than isolated content features.

Claim: Context determines autonomous information selection.

Rationale: Signals encode situational meaning that extends beyond text and keywords.

Mechanism: Behavioral signal interpretation feeds continuous context evaluation across time and conditions.

Counterargument: Context drift introduces misalignment as environments and behaviors change.

Conclusion: Stable context models require regular recalibration loops to preserve accuracy.

Behavioral signal interpretation

Behavioral signal interpretation focuses on how user actions, system interactions, and temporal patterns inform contextual understanding. The system observes sequences rather than isolated events to infer situational relevance. As a result, meaning emerges from behavior over time, not from single interactions.

However, behavior alone does not guarantee clarity. Therefore, systems must distinguish between routine actions and context-shifting events. This distinction prevents overreaction to noise while preserving sensitivity to change.

Put simply, the system reads patterns in behavior to understand what situation it is operating in.

Environment-aware information systems

Environment-aware information systems extend context modeling beyond user behavior. These systems incorporate device state, operational conditions, and situational constraints into relevance decisions. Consequently, information selection adapts to where and how interaction occurs.

At the same time, environmental signals vary in reliability. Therefore, systems assign differing importance based on stability and recurrence. This approach reduces false context assumptions.

In simple terms, the system considers surroundings and conditions, not just actions.

Continuous context evaluation

Continuous context evaluation ensures that contextual models remain current rather than static. The system updates its understanding as signals evolve, allowing relevance logic to adjust in near real time. As a result, selection decisions stay aligned with present conditions.

Nevertheless, constant updates can introduce instability. Therefore, evaluation cycles require smoothing and thresholds to prevent oscillation. These controls maintain coherence across time.

Put simply, the system keeps checking whether the situation has changed and adjusts its understanding carefully.
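
The smoothing and thresholds mentioned above can be illustrated with an exponential moving average combined with two thresholds (hysteresis). This is a sketch under stated assumptions: the class name `ContextTracker`, the parameters `alpha`, `enter_at`, and `exit_at`, and their default values are hypothetical.

```python
class ContextTracker:
    """Tracks whether a context is 'active' using smoothing plus hysteresis."""

    def __init__(self, alpha: float = 0.3, enter_at: float = 0.7, exit_at: float = 0.4):
        if not exit_at < enter_at:
            raise ValueError("exit_at must be lower than enter_at")
        self.alpha = alpha        # smoothing factor: higher reacts faster
        self.enter_at = enter_at  # level needed to switch the context on
        self.exit_at = exit_at    # level needed to switch the context off
        self.level = 0.0
        self.active = False

    def update(self, observation: float) -> bool:
        # Exponential moving average dampens single noisy observations.
        self.level = self.alpha * observation + (1 - self.alpha) * self.level
        # Two separate thresholds prevent flipping on small fluctuations.
        if not self.active and self.level >= self.enter_at:
            self.active = True
        elif self.active and self.level <= self.exit_at:
            self.active = False
        return self.active
```

With `alpha=0.5`, the observation sequence 1.0, 1.0, 0.5, 0.0, 1.0 yields the states False, True, True, False, False: the context stays active at a smoothed level of 0.625 because that is still above `exit_at`, and a single strong observation afterwards is not enough to reactivate it.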

Decision Routing and Information Prioritization

Decision-centric systems shift responsibility for exposure from the user to the system itself. This section explains how autonomous decision routing governs what information appears and when, focusing on routing logic rather than presentation. This approach aligns with formal decision and control principles defined by the National Institute of Standards and Technology in its work on trustworthy and adaptive information systems.

Definition: Autonomous decision routing is the internal process by which a system determines the order, timing, and priority of information exposure before user interaction occurs. It functions as a control layer that manages informational flow under limited attention conditions.

Claim: Autonomous systems prioritize information before exposure.

Rationale: Human and system attention capacity remains finite, even as information volume increases.

Mechanism: Decision-driven information access ranks outputs by confidence and urgency to regulate exposure.

Counterargument: Excessive prioritization can suppress exploratory information and reduce discovery breadth.

Conclusion: Balanced routing preserves informational diversity while maintaining relevance.

Priority assignment in routing logic

Priority assignment defines how a system decides which information should surface first. The system evaluates available content against internal thresholds for relevance, urgency, and expected utility. As a result, information prioritization by AI becomes a structured filtering process rather than a passive ranking outcome.

However, priority assignment must remain adaptive. Therefore, routing logic adjusts thresholds as context shifts. This adaptability prevents rigid ordering that ignores situational change.

Put simply, the system decides what deserves attention first and what can wait.

Confidence and urgency as routing criteria

Confidence reflects how strongly the system believes information matches the current context. Urgency reflects time sensitivity and potential impact if information is delayed. Together, these criteria shape decision-driven information access across system outputs.

At the same time, confidence and urgency must remain independent signals. Therefore, systems avoid collapsing them into a single score. This separation reduces bias and preserves control granularity.

In simple terms, the system weighs how sure it is against how quickly something matters.
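
Keeping confidence and urgency as separate inputs can be sketched as a routing function that checks each criterion against its own threshold. The names `Candidate` and `route`, the three outcome labels, and the threshold values are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    item_id: str
    confidence: float  # how well the item matches the current context, in [0, 1]
    urgency: float     # how time-sensitive the item is, in [0, 1]


def route(candidate: Candidate, min_confidence: float = 0.6, urgent_at: float = 0.8) -> str:
    """Route on two independent criteria instead of one blended score."""
    if candidate.confidence < min_confidence:
        # Low confidence is never overridden by urgency alone.
        return "hold"
    if candidate.urgency >= urgent_at:
        return "surface_now"
    return "queue"
```

Because the two values are never multiplied or summed, a highly urgent but poorly matched item is held back rather than pushed to the user, which is the kind of control granularity a single combined score would lose.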

Enterprise dashboard routing microcase

In enterprise dashboards, autonomous routing often determines which alerts, metrics, or reports appear without user selection. For example, compliance anomalies may surface automatically when confidence exceeds a defined threshold and urgency rises due to regulatory deadlines. Meanwhile, exploratory analytics remain available but deprioritized. As a result, decision-makers receive critical information first without losing access to broader context.

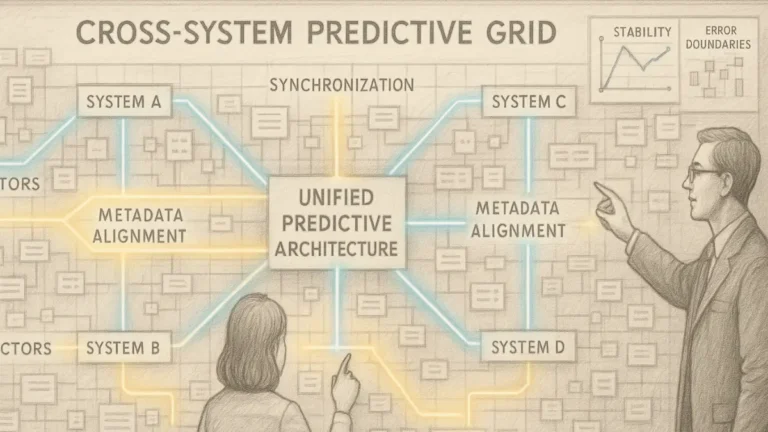

System Architecture of Autonomous Search

Autonomous search systems differ structurally from traditional search platforms because they must operate continuously rather than reactively. This section explains how autonomous search infrastructure organizes system layers to manage complexity, focusing on infrastructure and logic layers rather than deployment specifics. This architectural perspective aligns with structural principles defined by the World Wide Web Consortium in its work on layered web and data architectures.

Definition: Autonomous search infrastructure is the layered system architecture that supports continuous information evaluation, signal processing, and decision execution without relying on explicit user queries. It separates ingestion, interpretation, and exposure into distinct operational layers.

Claim: Autonomous search requires layered architecture.

Rationale: Single-layer systems cannot manage the volume, diversity, and volatility of contextual signals.

Mechanism: Signal-driven retrieval systems operate above data ingestion layers, translating raw inputs into actionable relevance signals.

Counterargument: Tight coupling between layers increases fragility and complicates system evolution.

Conclusion: Modular design improves autonomous resilience and long-term adaptability.

Layer separation and responsibility boundaries

Layer separation defines how responsibilities distribute across the system. Data ingestion layers collect raw inputs such as content streams, behavioral events, and environmental signals. Above them, interpretation layers normalize and contextualize these inputs before any retrieval decision occurs.

As a result, higher layers remain insulated from data volatility. Therefore, changes in input formats or sources do not directly disrupt decision logic. This separation stabilizes autonomous search workflows across evolving environments.

Put simply, each layer handles a specific job so that the whole system remains stable when parts change.

Signal-driven retrieval systems in practice

Signal-driven retrieval systems translate interpreted signals into selection decisions. These systems evaluate relevance, confidence, and timing before passing outputs to routing components. Consequently, retrieval becomes a controlled process rather than a direct reaction to incoming data.

At the same time, these systems must remain adaptable. Therefore, retrieval logic updates independently of ingestion mechanics. This independence allows autonomous systems to refine selection behavior without restructuring the entire stack.

In simple terms, the system decides what to retrieve based on signals, not raw data.

Architectural modularity and system resilience

Modularity ensures that components evolve without breaking system integrity. Each module communicates through defined interfaces, limiting unintended dependencies. As a result, failures remain localized rather than cascading across layers.

However, modularity requires disciplined design. Therefore, interfaces must remain explicit and stable. This discipline preserves resilience as autonomous capabilities expand.

Put simply, modular architecture helps autonomous systems survive change.

| Layer | Primary responsibility |
|---|---|
| Data ingestion | Collect raw content and signals |
| Signal interpretation | Normalize and contextualize inputs |
| Retrieval logic | Evaluate relevance and select information |
| Routing control | Manage timing and exposure priorities |
| Output interface | Deliver information to downstream systems |
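
The layer table can be read as a pipeline in which each layer is a function with one job and a shared, explicit interface. The sketch below is a toy illustration: the function names, the `clicks` field, and the thresholds are hypothetical, and a real system would replace each body with far richer logic.

```python
from typing import Callable

Layer = Callable[[dict], dict]


def ingest(event: dict) -> dict:
    """Data ingestion: wrap the raw event without interpreting it."""
    return {"raw": event}


def interpret(state: dict) -> dict:
    """Signal interpretation: normalize raw input into a signal in [0, 1]."""
    clicks = state["raw"].get("clicks", 0)
    return {**state, "signals": {"engagement": min(clicks / 10, 1.0)}}


def retrieve(state: dict) -> dict:
    """Retrieval logic: decide from signals, never from raw data."""
    return {**state, "selected": state["signals"]["engagement"] >= 0.5}


def route_exposure(state: dict) -> dict:
    """Routing control: assign an exposure priority to the selection."""
    return {**state, "priority": "high" if state["selected"] else "low"}


PIPELINE: list[Layer] = [ingest, interpret, retrieve, route_exposure]


def run_pipeline(event: dict, layers: list[Layer]) -> dict:
    state = event
    for layer in layers:
        state = layer(state)
    return state
```

Since every layer takes and returns a plain dictionary, one layer can be swapped or retuned (for example, a new `interpret` for a different input format) without touching the others, which is the modularity argument made above.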

Enterprise and Knowledge Work Applications

Autonomous search systems reach their practical value when deployed inside organizations that depend on timely, accurate information. This section explains where autonomous search use cases deliver measurable impact, focusing on enterprise environments and knowledge work scenarios. Evidence from policy and data-driven organizational research published by the OECD supports this shift toward system-led information delivery.

Definition: Autonomous search use cases describe operational scenarios in which systems surface relevant information inside organizational workflows without requiring explicit user queries. These use cases emphasize latency reduction, decision support, and workflow continuity.

Claim: Autonomous search improves decision latency.

Rationale: Information becomes available before the need to request it explicitly can delay action.

Mechanism: Autonomous search for knowledge work embeds retrieval and prioritization directly into workflows.

Counterargument: Blind automation reduces human oversight and increases error risk.

Conclusion: Human-in-the-loop design remains essential for enterprise reliability.

Autonomous search in enterprise systems

Autonomous search in enterprise systems integrates retrieval logic directly into internal platforms such as dashboards, document repositories, and monitoring tools. Instead of requiring manual lookup, the system evaluates context and pushes relevant information into active workspaces. As a result, employees receive inputs aligned with current tasks rather than abstract queries.

However, enterprise environments impose governance constraints. Therefore, autonomous systems must respect access controls, compliance boundaries, and role-specific relevance. This alignment ensures that automation supports organizational structure rather than bypassing it.

Put differently, enterprise autonomous search works best when it fits existing systems and rules.
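
Respecting access controls in a push-based system can be sketched as a permission filter applied before ranking, so restricted items never compete for exposure. The `Document` type, the role names, and `surface_for` are hypothetical and stand in for whatever access model an organization already uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    allowed_roles: frozenset[str]  # roles permitted to see this document
    relevance: float               # score from the retrieval layer, in [0, 1]


def surface_for(user_roles: set[str], candidates: list[Document], limit: int = 3) -> list[str]:
    """Return the most relevant document IDs the user is allowed to see."""
    # Filter on permissions first; only then rank what remains.
    permitted = [d for d in candidates if d.allowed_roles & user_roles]
    permitted.sort(key=lambda d: d.relevance, reverse=True)
    return [d.doc_id for d in permitted[:limit]]
```

Filtering before ranking matters here: the highest-scoring item overall may be one the current user must not see, and it should not occupy a slot or leak through a count of suppressed results.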

Autonomous search and decision support

Autonomous search and decision support operate together when systems prioritize information that influences operational or strategic choices. The system evaluates confidence, urgency, and contextual fit before surfacing content. Consequently, decision-makers spend less time searching and more time evaluating options.

At the same time, decision support requires transparency. Therefore, systems must expose reasoning signals or confidence indicators alongside outputs. This visibility allows users to assess reliability without reverting to manual search.

In practical terms, the system assists decisions by preparing relevant information ahead of time.
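
Exposing reasoning signals alongside an output might look like the following sketch, which returns a surfaced item together with its confidence and the signals that contributed most. Treating confidence as the sum of per-signal contributions is an assumption made to keep the example short, and the function and field names are hypothetical.

```python
def surface_with_explanation(
    item_id: str, contributions: dict[str, float], top_n: int = 2
) -> dict:
    """Attach a confidence value and the strongest contributing signals."""
    ranked = sorted(contributions.items(), key=lambda pair: pair[1], reverse=True)
    return {
        "item_id": item_id,
        # Assumption: contributions are additive and already scaled.
        "confidence": round(sum(contributions.values()), 3),
        "top_signals": [name for name, _ in ranked[:top_n]],
    }


result = surface_with_explanation(
    "policy-update",
    {"recency": 0.2, "role_match": 0.5, "topic_overlap": 0.1},
)
```

Here `result["top_signals"]` is `["role_match", "recency"]`, which gives a reader enough to judge why the item appeared without reverting to manual search.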

Microcase: legal research and compliance monitoring

In legal and compliance teams, autonomous search often monitors regulatory updates, case law changes, and internal policy deviations. When new guidance aligns with active matters, the system surfaces summaries directly within case management tools. Meanwhile, compliance risks trigger alerts based on confidence and urgency thresholds. As a result, teams respond faster without continuously scanning external sources.

Limitations, Risks, and Governance

As autonomous systems assume responsibility for selecting and prioritizing information, they introduce systemic risks that do not exist in query-driven models. This section defines the boundaries of the autonomous search paradigm, focusing on failure modes and governance constraints rather than operational benefits. Analysis and reporting by IEEE Spectrum document how autonomous decision systems increase oversight requirements when actions occur without direct user prompts.

Definition: The autonomous search paradigm is the system model in which information exposure results from internal decision logic rather than explicit user requests. It defines how authority, responsibility, and accountability shift from users to automated processes.

Claim: Autonomous search increases governance complexity.

Rationale: Decisions occur without user prompts, which reduces direct human control over exposure timing and content.

Mechanism: Autonomous search interpretation layers require auditability to trace how signals produce decisions.

Counterargument: Excessive governance slows system responsiveness and limits adaptability.

Conclusion: Governance must remain proportional and adaptive to preserve system effectiveness.

Failure modes in autonomous systems

Autonomous systems fail differently than reactive search tools. Errors often emerge from misinterpreted signals, outdated context models, or compounding inference assumptions. As a result, failures can persist unnoticed because no explicit request triggers validation.

Therefore, detection mechanisms must operate continuously rather than episodically. This requirement increases system complexity and monitoring cost. Consequently, organizations must plan for failure identification as a permanent process.

In other words, errors appear quietly and must be actively detected.
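
Continuous detection can be sketched as a monitor that compares a rolling average of some health metric against a baseline. The metric used here (the share of surfaced items that users accept), the class name `DriftMonitor`, and the default window and tolerance are all assumptions for illustration.

```python
from collections import deque


class DriftMonitor:
    """Flags drift when the recent average moves too far from a baseline."""

    def __init__(self, baseline: float, window: int = 5, tolerance: float = 0.2):
        self.baseline = baseline
        self.tolerance = tolerance
        self.recent: deque[float] = deque(maxlen=window)

    def observe(self, acceptance_rate: float) -> bool:
        """Record one measurement; return True if drift is detected."""
        self.recent.append(acceptance_rate)
        if len(self.recent) < self.recent.maxlen:
            # Not enough data yet to judge a trend.
            return False
        mean = sum(self.recent) / len(self.recent)
        return abs(mean - self.baseline) > self.tolerance
```

The monitor runs on every measurement rather than on demand, which reflects the point above: without an explicit request to trigger validation, the check itself has to be a permanent process.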

Governance requirements and control boundaries

Governance in autonomous environments focuses on controlling decision authority rather than content access. Policies define which decisions systems may take independently and which require escalation. As a result, governance frameworks resemble control systems rather than permission checklists.

At the same time, governance must integrate with technical architecture. Therefore, audit hooks and logging mechanisms embed directly into the autonomous search interpretation layer. This integration enables accountability without manual intervention.

Put differently, governance works only when it is built into the system.
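
An audit hook embedded in the decision path can be sketched as follows. The example is deliberately small: `AuditLog`, `decide_and_audit`, the averaging rule, and the in-memory list are hypothetical, and a production system would write to durable, append-only storage instead.

```python
import json
import time


class AuditLog:
    """Collects one serialized record per autonomous decision."""

    def __init__(self) -> None:
        self.records: list[str] = []

    def record(self, item_id: str, signals: dict[str, float], decision: str) -> None:
        entry = {
            "ts": time.time(),
            "item_id": item_id,
            "signals": dict(signals),  # copy so later mutation cannot alter the record
            "decision": decision,
        }
        self.records.append(json.dumps(entry, sort_keys=True))


def decide_and_audit(
    item_id: str, signals: dict[str, float], threshold: float, log: AuditLog
) -> str:
    """Make a surface/suppress decision and log the inputs that produced it."""
    score = sum(signals.values()) / len(signals) if signals else 0.0
    decision = "surface" if score >= threshold else "suppress"
    log.record(item_id, signals, decision)
    return decision
```

Logging happens inside the decision function rather than around it, so no decision can be made without leaving a trace of the signals behind it, including decisions to suppress.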

Core risk categories in autonomous search

- Signal misinterpretation due to sparse or noisy inputs

- Context drift caused by environmental or behavioral change

- Feedback loops that reinforce incorrect relevance assumptions

- Oversuppression of information through aggressive prioritization

- Reduced human oversight in automated decision flows

Together, these risks define the boundaries within which an autonomous search model can operate safely and effectively.

Checklist:

- Are core concepts defined in a way that supports non-query interpretation?

- Do sections follow stable H2–H4 boundaries that reflect system logic?

- Does each paragraph represent a single decision-relevant unit?

- Are contextual signals separated from presentation details?

- Is terminology consistent across relevance, routing, and governance layers?

- Does the structure allow autonomous systems to evaluate information step by step?

Strategic Implications for Content and Systems

Autonomous systems introduce long-term changes that extend beyond retrieval mechanics and into how information must be produced and maintained. This section defines the system-level shift created by autonomous search logic design, focusing on strategic implications rather than implementation detail. Research from the Allen Institute for AI highlights how structured representations enable machines to reuse and reason over information without direct user mediation.

Definition: Autonomous search logic design is the strategic approach to structuring information and systems so that relevance, priority, and exposure can be determined without explicit user selection. It governs how content and signals are prepared for machine-led interpretation.

Claim: Autonomous search changes how information must be structured.

Rationale: Content increasingly reaches users through system-driven selection rather than direct choice.

Mechanism: Systems favor structured, interpretable information units that support reliable evaluation and reuse.

Counterargument: Unstructured domains resist automation and limit autonomous effectiveness.

Conclusion: Structure becomes a prerequisite for visibility in autonomous environments.

Structural requirements for autonomous search behavior

Autonomous search behavior depends on predictable informational units that can be evaluated independently. Systems analyze content at the level of discrete statements, entities, and relationships rather than narrative flow. As a result, loosely organized material becomes less accessible to autonomous processes.

Therefore, content strategies must prioritize clarity, stable terminology, and explicit boundaries between ideas. This approach allows systems to extract meaning without inference-heavy interpretation. Consequently, structure directly influences whether information participates in autonomous selection.

In essence, autonomous behavior favors content that exposes its logic clearly.

Platform-level implications for digital environments

Autonomous search in digital platforms alters how visibility emerges across applications, feeds, and internal systems. Platforms increasingly rely on internal signals to surface information proactively rather than responding to navigation or search actions. As a result, exposure becomes a function of system logic rather than user exploration.

At the same time, platforms must balance automation with accountability. Therefore, strategic design must include auditability and control over how structure influences exposure. This balance ensures that autonomous systems remain aligned with platform goals.

In practical terms, platforms reward content that systems can interpret without ambiguity.

Strategic preparation of information units

Preparation of information units requires aligning content creation with system logic rather than presentation aesthetics. Each unit must express a single, verifiable idea that can stand alone. Consequently, systems can compare, rank, and reuse information without reconstructing meaning.

However, this preparation introduces constraints. Therefore, organizations must adapt workflows to support structured authoring and validation. These changes define long-term competitiveness in autonomous environments.

Put another way, strategy shifts from attracting attention to enabling interpretation.
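
A structured information unit could be modeled as a small record with a basic validation step at authoring time. The field names and the two checks below are illustrative heuristics chosen for this sketch, not an established standard for what makes a unit stand alone.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InformationUnit:
    unit_id: str
    term: str                            # the concept the unit is about
    statement: str                       # one self-contained claim about the term
    related_terms: tuple[str, ...] = ()  # explicit links to other concepts


def validate(unit: InformationUnit) -> list[str]:
    """Return a list of problems; an empty list means the unit passes."""
    problems = []
    if not unit.statement.strip():
        problems.append("empty statement")
    elif unit.term.lower() not in unit.statement.lower():
        # Heuristic: a unit that never names its term depends on outside context.
        problems.append("statement does not name its term")
    return problems


unit = InformationUnit(
    unit_id="u1",
    term="Decision latency",
    statement="Decision latency is the time between the emergence of "
              "informational relevance and the moment information becomes available.",
    related_terms=("Decision routing",),
)
```

Running checks like these in the authoring workflow is one way to catch units that rely on surrounding narrative, such as a statement that begins with "It is..." and cannot be interpreted once extracted.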

Interpretive Foundations of Autonomous Search Systems

- Information structure interpretability. Autonomous search systems depend on clearly segmented and well-scoped content units that minimize ambiguity during non-query-based interpretation.

- Structured interpretation layers. Machine-readable layers such as schema-defined entities and document boundaries enable autonomous systems to evaluate information independently of presentation interfaces.

- Factual statement stabilization. Informational units that express a single verifiable claim allow autonomous retrieval logic to maintain precision without speculative inference.

- Internal conceptual relationships. Explicit connections between related concepts support contextual evaluation and routing logic within autonomous information environments.

- Autonomous behavior visibility. How information surfaces across AI-driven systems reflects underlying interpretive alignment rather than direct user interaction.

These interpretive foundations explain how autonomous search systems prioritize, contextualize, and expose information without relying on explicit user queries.

FAQ: Autonomous Search Systems

What are autonomous search systems?

Autonomous search systems are information systems that select, prioritize, and surface content without relying on explicit user queries.

How do autonomous search systems differ from traditional search?

Traditional search responds to user queries, while autonomous search evaluates relevance continuously and determines exposure before a request occurs.

Why is autonomous search important for modern AI systems?

Autonomous search reduces decision latency by delivering relevant information before users articulate informational needs.

How do autonomous systems determine relevance without queries?

They rely on contextual, behavioral, and environmental signals to evaluate relevance in the absence of explicit intent.

What role does structure play in autonomous search?

Structured information allows systems to interpret, compare, and prioritize content without reconstructing meaning.

Why is governance critical in autonomous search?

Because decisions occur without user prompts, systems require auditability and control to maintain accountability.

Where are autonomous search systems commonly applied?

They are used in enterprise platforms, decision support tools, monitoring systems, and knowledge work environments.

What are the main risks of autonomous search?

Key risks include signal misinterpretation, context drift, feedback loops, and reduced human oversight.

How can organizations prepare for autonomous search?

Organizations must structure information into clear units, maintain stable terminology, and embed governance mechanisms.

What skills are required to design autonomous search systems?

Effective design requires expertise in system architecture, signal interpretation, and decision governance.

Glossary: Key Terms in Autonomous Search

This glossary defines the core terminology used throughout this article to ensure consistent interpretation by both readers and autonomous systems.

Autonomous Search Systems

Information systems that select, prioritize, and surface content through internal decision logic without relying on explicit user queries.

Autonomous Retrieval Logic

The internal mechanism that enables systems to infer relevance and retrieve information before a user expresses intent.

Autonomous Relevance Detection

A process by which systems evaluate informational importance using contextual and behavioral signals instead of query matching.

Context Analysis

The continuous construction and updating of situational models based on environmental, behavioral, and temporal signals.

Decision Routing

The system process that determines when and how information is exposed based on priority, confidence, and urgency.

Signal Interpretation

The evaluation of behavioral, contextual, and environmental signals to infer relevance in the absence of explicit intent.

Layered Architecture

A system design approach that separates data ingestion, interpretation, retrieval, and routing into independent layers.

Decision Latency

The time between the emergence of informational relevance and the moment information becomes available to a user or system.

Interpretation Layer

A system layer responsible for translating raw signals into structured representations used for relevance and routing decisions.

Governance Framework

A set of controls, audit mechanisms, and policies that regulate how autonomous systems make and expose decisions.