Last Updated on December 20, 2025 by PostUpgrade

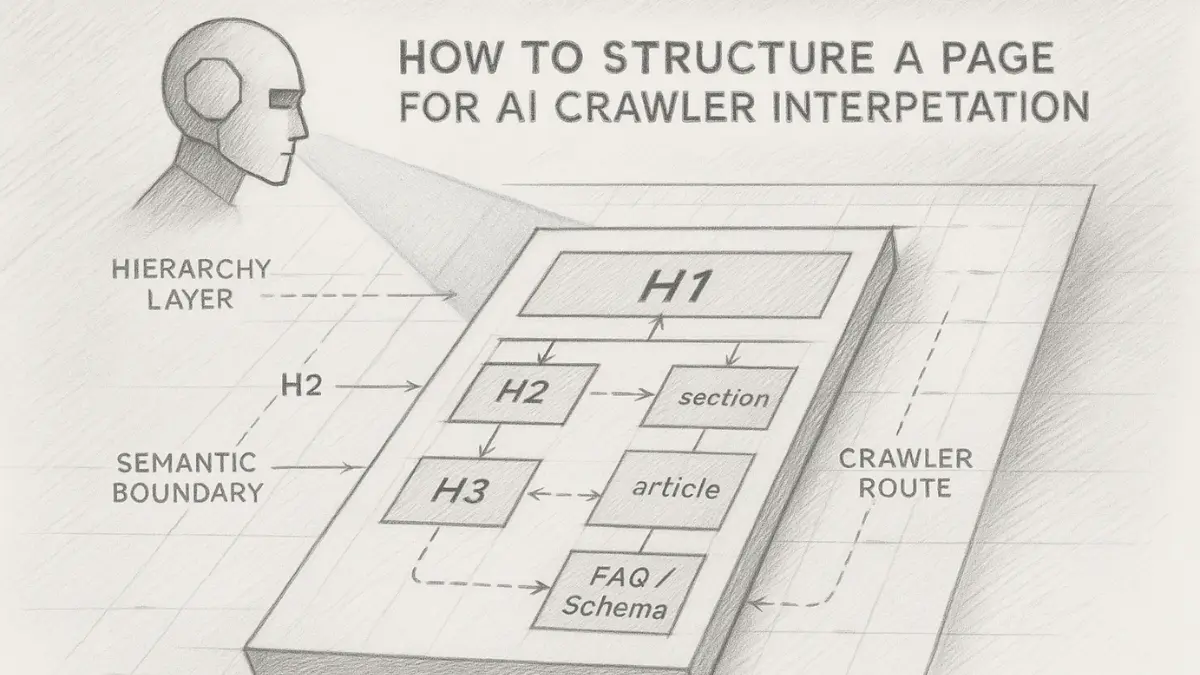

How AI Crawlers Navigate Structured Layouts

Definition: AI crawler site structure is the arrangement of headings, sections, navigation links, semantic tags and content blocks that enables crawlers to interpret meaning, classify concepts, and reuse content reliably across generative discovery systems.

Why Structured Layouts Matter for AI Crawlers

Modern AI systems evaluate site structure to understand how meaning is organized across a page. Structure-driven interpretation lets crawlers segment information into reusable units by following a stable arrangement of headings, sections, and semantic markers.

A predictable layout improves readability and keeps page structure consistent, which strengthens downstream visibility and aligns with the principles described in the WHATWG HTML Living Standard.

Deep Reasoning Chain

Assertion: Structured layouts determine how efficiently AI crawlers interpret and reuse page content.

Reason: Clear boundaries between sections reduce ambiguity during meaning extraction.

Mechanism: Crawlers follow hierarchical signals, semantic markers, and consistent formatting patterns to separate concepts, identify relationships, and assign contextual meaning.

Counter-Case: When layouts rely on irregular formatting or visually styled but semantically empty elements, crawlers fail to interpret relationships between concepts.

Inference: Pages with clean structural patterns achieve higher clarity because AI systems align content meaning with stable markup.

Principle: Pages structured with predictable hierarchy, stable semantic boundaries and clear layout patterns perform better in AI-driven discovery because crawlers interpret structure more effectively than unstructured formatting.

The Shift From Keyword Parsing to Layout-Driven Understanding

AI crawlers moved from keyword extraction toward layout-based interpretation because patterns in structure provide more reliable meaning cues. Systems prioritize organization, segmentation, and semantic alignment over keyword density or phrasing. Predictable layouts help crawlers map the boundaries between concepts, and semantic patterns reduce interpretive noise across complex pages.

Example: A blog post that uses an H1 for the title, sequential H2/H3 headings, clearly separated sectioning tags, and internal links to related topics allows crawlers to segment its meaning, which improves its visibility in AI-driven discovery.
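A minimal Python sketch of the segmentation in this example follows. It assumes that headings are the only chunk boundaries and uses the standard-library html.parser module; real crawlers combine many more signals, and the names HeadingChunker and chunk_by_headings are hypothetical.

```python
from html.parser import HTMLParser

HEADINGS = {"h1", "h2", "h3", "h4", "h5", "h6"}


class HeadingChunker(HTMLParser):
    """Split a page into chunks, each starting at a heading."""

    def __init__(self):
        super().__init__()
        self.chunks = []          # dicts with "level", "heading", "text"
        self._in_heading = None   # tag of the heading currently open, if any
        self._skip_depth = 0      # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1
        elif tag in HEADINGS:
            self._in_heading = tag
            self.chunks.append({"level": int(tag[1]), "heading": "", "text": ""})

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1
        elif tag == self._in_heading:
            self._in_heading = None

    def handle_data(self, data):
        text = data.strip()
        # Ignore whitespace, script/style bodies, and text before the first heading.
        if not text or self._skip_depth or not self.chunks:
            return
        key = "heading" if self._in_heading else "text"
        self.chunks[-1][key] = (self.chunks[-1][key] + " " + text).strip()


def chunk_by_headings(html: str) -> list:
    parser = HeadingChunker()
    parser.feed(html)
    parser.close()
    return parser.chunks


page = """
<h1>How AI Crawlers Navigate Layouts</h1><p>Intro text.</p>
<h2>Why Structure Matters</h2><p>Clear boundaries reduce ambiguity.</p>
<h3>Headings</h3><p>Headings form the outline.</p>
"""
for chunk in chunk_by_headings(page):
    print(chunk["level"], chunk["heading"], "->", chunk["text"])
```

For the sample page, the loop prints three chunks at heading levels 1, 2, and 3, each paired with the text that follows its heading.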

How Structure Shapes Content Accessibility for AI Systems

Stable markup ensures that crawlers can map the internal logic of a page without depending on visual presentation. Consistent formatting removes ambiguity by giving each section a clear interpretive role. Pages that maintain structural repetition across templates allow AI systems to recognize meaning units and index them with higher precision.

Role of HTML Semantics in Machine Visibility

Semantic elements such as <header>, <nav>, <main>, <article>, <section>, and <footer> define the functional zones of a page. These components help AI crawlers understand where navigation, core content, and supporting information reside. Semantic elements convey meaning because they classify information into discrete interpretive roles rather than controlling appearance, which makes a page more visible and interpretable to AI crawlers.
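To illustrate how these elements partition a page into functional zones, the hypothetical LandmarkMapper below records which chain of landmark elements encloses each text fragment. It is a sketch built on Python's html.parser, not a description of any specific crawler, and it only recognizes the element names themselves.

```python
from html.parser import HTMLParser

LANDMARKS = {"header", "nav", "main", "article", "section", "aside", "footer"}


class LandmarkMapper(HTMLParser):
    """Group visible text by the chain of landmark elements that encloses it."""

    def __init__(self):
        super().__init__()
        self.stack = []      # open landmark elements, innermost last
        self.zones = {}      # "main > article" -> list of text fragments
        self._skip = 0       # > 0 inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1
        elif tag in LANDMARKS:
            self.stack.append(tag)

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1
        elif tag in LANDMARKS and tag in self.stack:
            # Pop back to the matching landmark, tolerating unclosed inner ones.
            while self.stack.pop() != tag:
                pass

    def handle_data(self, data):
        text = data.strip()
        if text and not self._skip:
            path = " > ".join(self.stack) or "(outside landmarks)"
            self.zones.setdefault(path, []).append(text)


mapper = LandmarkMapper()
mapper.feed(
    "<header><nav><a href='/'>Home</a></nav></header>"
    "<main><article><h1>Guide</h1><p>Core content.</p></article></main>"
    "<footer><p>Contact</p></footer>"
)
print(mapper.zones)
```

Here the navigation link lands in the "header > nav" zone, while the heading and paragraph land in "main > article", mirroring the separation between navigation and core content described above.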

Core Components AI Crawlers Analyze in a Structured Layout

AI crawlers evaluate structural elements to determine how meaning is organized throughout a page. Systems rely on clear segmentation, predictable boundaries, and stable formatting patterns to extract concepts efficiently. When HTML components follow consistent logic, crawlers parse the internal hierarchy with higher precision, which strengthens interpretation and reuse.

Deep Reasoning Chain

Assertion: Core structural components determine how accurately AI crawlers interpret the internal meaning of a page.

Reason: Each element provides a specific signal about topic scope, section relationships, and hierarchical boundaries.

Mechanism: Crawlers examine heading levels, sectioning tags, captions, and content boundaries to construct a layered representation of the page.

Counter-Case: When elements are missing, misused, or inconsistently ordered, crawlers cannot map meaning layers and fail to recover the intended structure.

Inference: Pages that apply systematic structural components produce clearer interpretive pathways because AI crawlers align meaning with stable and predictable markup.

Hierarchical Headings and Meaning Layers

Heading structures guide machine reasoning because they establish the internal map of topic relationships. AI crawlers parse the HTML heading hierarchy to understand how subjects and subtopics connect across multiple layers. Errors in heading order disrupt this interpretive flow, causing crawlers to misjudge the scope or relevance of content. A consistent multilevel heading strategy helps crawlers identify parent topics, subordinate explanations, and detailed segments.
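The ordering rules in this paragraph can be checked mechanically. The sketch below assumes the conventions used in this article (exactly one H1, the first heading is the H1, and no skipped levels when descending) and returns a list of problems; moving back up, for example from H3 to H2, is allowed. The function name heading_problems is hypothetical.

```python
from html.parser import HTMLParser


class HeadingOutline(HTMLParser):
    """Collect heading levels (1-6) in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_problems(html: str) -> list:
    parser = HeadingOutline()
    parser.feed(html)
    levels = parser.levels
    problems = []
    h1_count = levels.count(1)
    if h1_count != 1:
        problems.append(f"expected exactly one h1, found {h1_count}")
    if levels and levels[0] != 1:
        problems.append(f"first heading is h{levels[0]}, not h1")
    # Descending more than one level at a time breaks the outline.
    for prev, cur in zip(levels, levels[1:]):
        if cur > prev + 1:
            problems.append(f"skipped level: h{prev} followed by h{cur}")
    return problems


print(heading_problems("<h1>Guide</h1><h3>Details</h3>"))
```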

Content Sections as Interpretive Blocks

Content sections act as semantic containers that define how information is grouped. Elements such as <section>, <article>, <aside>, and <figure> give crawlers clear boundaries for separating meaning. Stable boundaries allow systems to extract each block without merging unrelated concepts. The semantic section structure of a page becomes more reliable for AI crawlers when each section has a defined purpose and minimal structural noise.

Semantic Micro-Patterns That Improve Clarity

Micro-patterns such as labels, captions, and structured annotations increase interpretive precision. Crawlers rely on these patterns to understand context, identify supporting details, and link descriptions to the elements they describe. Dividing topics into atomic blocks creates a consistent logic that strengthens crawler comprehension. Content sectioning tags communicate more clearly to AI crawlers when these micro-patterns are applied consistently.

Structural Elements and Their Crawlable Meaning

| Element | Purpose for AI Crawler | Errors to Avoid |
|---|---|---|
| H1 | Defines the topic | Multiple H1s |
| H2/H3 | Logical segmentation | Random order |
| Section tags | Boundary markers | Over-nesting |
| Figures | Visual meaning units | Missing alt text |

This guidance on sectioning and hierarchical patterns aligns with principles described in the W3C HTML5 Sectioning Model.

How AI Crawlers Interpret Metadata and Structured Enhancements

Structured markup functions as a secondary interpretive layer that guides AI crawlers beyond the visible layout. Systems use metadata to validate context, detect factual relationships, and assign meaning to entities that appear across the page. When structured annotations follow predictable patterns, the data becomes more accessible to AI crawlers, which can then interpret content with higher precision.

Deep Reasoning Chain

Assertion: Metadata and structured markup significantly influence how AI crawlers interpret meaning beyond the surface structure.

Reason: Markup provides explicit signals about entities, relationships, and factual attributes that are not always visible in plain HTML.

Mechanism: Crawlers evaluate schema markup, JSON-LD blocks, and microdata attributes to map entities into structured representations used for reasoning and reuse.

Counter-Case: If metadata is inconsistent, misplaced, or incomplete, crawlers cannot match page elements with their intended meaning, which weakens interpretive accuracy.

Inference: Pages that implement clean, well-organized metadata produce more reliable meaning extraction because structured annotations reinforce the logical structure defined by semantic HTML.

Schema Markup and Its Structural Role

Schema markup supplements semantic HTML by adding explicit meaning declarations that help AI crawlers identify entities, relationships, and descriptive attributes. It does not replace semantic structure but enhances it by providing standardized fields for concepts that require precise interpretation. Modern systems favor JSON-LD because it keeps structured data independent of the layout and offers a cleaner machine-readable format. This separation of meaning from presentation is a major reason schema markup matters to AI crawlers.
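As an illustration of JSON-LD keeping meaning separate from layout, the hypothetical helper below builds a Schema.org Article object and wraps it in a script element. The property names (headline, author, datePublished, mainEntityOfPage) are standard Schema.org vocabulary; the values in the usage example are placeholders.

```python
import json


def article_jsonld(headline: str, author: str, date_published: str, url: str) -> str:
    """Return a <script> element carrying Schema.org Article data as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date, e.g. "2025-12-20"
        "mainEntityOfPage": url,
    }
    payload = json.dumps(data, ensure_ascii=False, indent=2)
    # Escape "</" so a value such as "</script>" cannot end the element early;
    # "\/" is a valid JSON escape for "/", so parsers read the same string.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


print(article_jsonld(
    headline="How AI Crawlers Navigate Structured Layouts",
    author="Example Author",                 # hypothetical value
    date_published="2025-12-20",
    url="https://example.com/ai-crawlers",   # hypothetical URL
))
```

Because the block is self-contained JSON, it can sit in the document head without touching the visible markup.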

Microdata and Embedded Semantics

Microdata improves clarity when meaning must be attached directly to specific HTML elements, especially in contexts such as product attributes or localized metadata. AI crawlers use microdata to associate values with individual components when granularity is important. However, inline annotation can clutter the codebase and reduce maintainability. Understanding how AI crawlers read microdata helps determine when inline semantics provide more value than a separate JSON-LD block.
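For comparison, the sketch below reads microdata attached inline with itemscope, itemtype, and itemprop. It is deliberately limited to flat, non-nested items and takes a property's value from its content, href, or src attribute when present, otherwise from the element's text; the class name MicrodataReader and the sample product are hypothetical.

```python
from html.parser import HTMLParser


class MicrodataReader(HTMLParser):
    """Collect itemprop values for flat (non-nested) itemscope blocks."""

    def __init__(self):
        super().__init__()
        self.items = []          # one dict per itemscope element
        self._pending = None     # itemprop name waiting for its text content

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if "itemscope" in a:     # boolean attribute: present with value None
            self.items.append({"@type": a.get("itemtype", "")})
        prop = a.get("itemprop")
        if prop and self.items:
            # <meta>, <a>, and <img> carry their value in an attribute.
            value = a.get("content") or a.get("href") or a.get("src")
            if value is not None:
                self.items[-1][prop] = value
            else:
                self._pending = prop

    def handle_data(self, data):
        if self._pending and data.strip():
            self.items[-1][self._pending] = data.strip()
            self._pending = None


reader = MicrodataReader()
reader.feed(
    '<div itemscope itemtype="https://schema.org/Product">'
    '<h1 itemprop="name">Trail Shoe</h1>'
    '<meta itemprop="sku" content="TS-100">'
    '<span itemprop="color">Blue</span></div>'
)
print(reader.items)
```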

Metadata Placement and Accessibility Rules

Metadata should be placed consistently so that crawlers can interpret structural relationships without ambiguity. Clean separation between factual blocks prevents conflicts when multiple entities appear on the same page. Structured metadata becomes more predictable for AI crawlers when scripts, declarations, and annotations follow a uniform placement strategy, either within the document head or in clearly defined sections of the markup.
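A placement audit along these lines can be sketched with Python's html.parser and json modules. The hypothetical JsonLdLocator below records whether each application/ld+json block appears in the head or the body and whether its contents parse as JSON, so that malformed or scattered metadata can be caught before publication.

```python
import json
from html.parser import HTMLParser


class JsonLdLocator(HTMLParser):
    """Find JSON-LD blocks and note whether each sits in <head> or <body>."""

    def __init__(self):
        super().__init__()
        self.blocks = []            # (location, parsed data or None if invalid)
        self._location = "unknown"  # until a <head> or <body> tag is seen
        self._in_jsonld = False
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag in ("head", "body"):
            self._location = tag
        elif tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld, self._buffer = True, []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "head":
            self._location = "body"  # content after </head> belongs to the body
        elif tag == "script" and self._in_jsonld:
            self._in_jsonld = False
            try:
                parsed = json.loads("".join(self._buffer))
            except json.JSONDecodeError:
                parsed = None        # malformed JSON: flag it instead of crashing
            self.blocks.append((self._location, parsed))


locator = JsonLdLocator()
locator.feed(
    '<html><head><script type="application/ld+json">{"@type": "Article"}</script></head>'
    '<body><script type="application/ld+json">{bad json}</script></body></html>'
)
print(locator.blocks)
```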

JSON-LD vs Microdata vs RDFa

| Type | Strengths | Weaknesses | Best Use Case |
|---|---|---|---|
| JSON-LD | Highest clarity | Detached from HTML | Pages with rich entities |
| Microdata | Local meaning | Can clutter code | Product pages |
| RDFa | Flexible | Rarely used | Enterprise documentation |

Structured data practices described in the Schema.org Implementation Guide support these approaches and provide additional clarity for modern crawlers.

How Navigation, Internal Linking, and Hierarchy Shape Crawler Movement

Navigation structure determines how AI crawlers traverse a site and how efficiently they interpret relationships between pages. Systems depend on clear internal pathways to understand topical connections, segment content, and map the overall hierarchy. When navigation elements follow predictable patterns, internal links become easier for AI crawlers to discover and interpret across the entire site.

Deep Reasoning Chain

Assertion: Navigation and internal linking patterns directly influence how AI crawlers interpret the conceptual relationships between pages.

Reason: Internal pathways reveal which topics belong together and how information is structured across multiple levels.

Mechanism: Crawlers analyze menu hierarchy, link placement, anchor context, and cross-page connections to build an internal map of site meaning.

Counter-Case: When navigation is inconsistent or links are hidden behind script-driven elements, crawlers fail to construct accurate relationships, weakening hierarchical understanding.

Inference: Sites with clear navigational structure improve reasoning accuracy because crawlers align page-to-page connections with stable, machine-readable patterns.

Why Internal Links Act as Conceptual Pathways

Internal links signal conceptual relationships by connecting pages that share topics, entities, or functional roles. These connections help crawlers understand how subjects evolve across sections and how deeply a site covers a domain. When links follow logical patterns, crawlers interpret the hierarchy more effectively and identify the relative importance of each page in the broader structure.

Navigation Menu Structure and Crawl Efficiency

A clear navigation menu provides a structured overview of the site, allowing crawlers to enter major sections with minimal ambiguity. Navigation menus serve AI crawlers better when they are logically grouped and follow consistent naming patterns. Mega-menus and script-rendered navigation can reduce crawl efficiency because they obscure structural relationships or become inaccessible when JavaScript rendering is restricted.

Link Context and Interpretive Guidance

Anchor text provides context that helps crawlers determine the meaning and purpose of each link. When anchors accurately describe the destination page, crawlers integrate the link into their conceptual model of the site. Link grouping also shapes how crawlers interpret topic clusters, influencing how concepts are organized and aggregated during processing.
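The link-context rules above can be turned into a simple audit. This sketch assumes that links with javascript: or bare fragment (#) targets do not act as page-to-page pathways; it flags those links and any internal link whose anchor text is empty or generic. The GENERIC_ANCHORS list and the function names are illustrative, not a standard.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

# Illustrative list of anchor texts that say nothing about the destination.
GENERIC_ANCHORS = {"click here", "read more", "here", "more", "learn more"}


class LinkCollector(HTMLParser):
    """Collect [href, anchor text] pairs for every <a> element."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._current = None

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._current = [dict(attrs).get("href"), ""]
            self.links.append(self._current)

    def handle_data(self, data):
        if self._current is not None:
            self._current[1] += data

    def handle_endtag(self, tag):
        if tag == "a":
            self._current = None


def audit_links(html: str, page_url: str) -> list:
    collector = LinkCollector()
    collector.feed(html)
    site = urlparse(page_url).netloc
    findings = []
    for href, text in collector.links:
        text = " ".join(text.split())
        if not href or href.startswith(("javascript:", "#")):
            findings.append(f"not crawlable: {text!r} has href={href!r}")
            continue
        target = urljoin(page_url, href)
        if urlparse(target).netloc != site:
            continue  # external link: outside the internal-linking audit
        if not text or text.lower() in GENERIC_ANCHORS:
            findings.append(f"weak anchor text {text!r} -> {target}")
    return findings


html = ('<nav><a href="/guides/">Guides</a><a href="javascript:void(0)">Menu</a></nav>'
        '<p><a href="/guides/schema">click here</a> <a href="https://other.org/">Other</a></p>')
for finding in audit_links(html, "https://example.com/blog/post"):
    print(finding)
```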

Effective Internal Linking Patterns

- Thematic grouping

- Multi-level navigation

- Selective cross-section linking

Navigation and link modeling align with structural guidance described by the W3C Information Architecture Techniques, which emphasize clear pathways and logically organized menus.

How Page Templates and Layout Patterns Influence AI Crawler Recognition

AI crawlers depend on predictable layout templates because repeatable patterns allow them to recognize content types and interpret meaning consistently across similar pages. When templates follow stable structures, crawlers can identify which elements carry topical value and which represent navigational or auxiliary components.

This makes template-based layouts more reliable for AI crawlers and improves blog crawlability, product interpretation, and FAQ extraction across diverse page formats.

Deep Reasoning Chain

Assertion: Page templates and layout patterns determine how accurately crawlers identify content types and assign meaning to their internal components.

Reason: Predictable patterns help crawlers reuse structural expectations learned from similar templates across the site.

Mechanism: Systems evaluate block order, spacing, semantic grouping, and template-specific components to classify pages and extract information.

Counter-Case: When templates vary significantly or rely on visually styled but semantically inconsistent layouts, crawlers cannot reliably map meaning and fail to reuse structural logic.

Inference: Sites that maintain consistent templates across blogs, articles, products, and FAQs improve recognition accuracy because crawlers associate meaning with template-defined structures.

Blog Layouts and Section Reuse

Blog templates rely on repeatable structure to present titles, introductions, main sections, and concluding elements in a predictable order. Crawlers favor these consistent patterns because they simplify segmentation and reduce the interpretive variability associated with irregular layouts. Blog layouts become more crawlable when recurring blocks follow stable formatting and maintain uniform sectioning across all entries.
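One way to verify that blog entries reuse the same structure is to reduce each page to the ordered list of its structural tags and diff it against a reference template. The sketch below does this with Python's difflib; the tags chosen in STRUCTURAL and the function names are assumptions made for illustration.

```python
import difflib
from html.parser import HTMLParser

STRUCTURAL = {"header", "h1", "h2", "h3", "section", "article", "aside", "figure", "footer"}


class Skeleton(HTMLParser):
    """Reduce a page to the ordered list of its structural tags."""

    def __init__(self):
        super().__init__()
        self.tags = []

    def handle_starttag(self, tag, attrs):
        if tag in STRUCTURAL:
            self.tags.append(tag)


def skeleton(html: str) -> list:
    parser = Skeleton()
    parser.feed(html)
    return parser.tags


def template_drift(reference_html: str, page_html: str) -> list:
    """Tag-level differences: "- tag" missing from the page, "+ tag" extra."""
    diff = difflib.ndiff(skeleton(reference_html), skeleton(page_html))
    return [line for line in diff if line.startswith(("- ", "+ "))]


reference = "<article><h1>T</h1><section><h2>A</h2></section><footer></footer></article>"
post = "<article><h1>X</h1><h2>B</h2><footer></footer></article>"
print(template_drift(reference, post))
```

Comparing only tag order keeps the check independent of the wording of each post, so it reports structural deviations rather than content changes.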

Article Layout Requirements for AI Interpretation

Article templates require clear segmentation, optimal block spacing, and logical ordering to support crawler interpretation. Consistent transitions between introduction, core argument, supporting evidence, and summary sections improve meaning extraction across multiple articles. Article layouts become more reliable for AI crawlers when each article follows the same layout rules and maintains predictable content boundaries.

Product Layouts and Entity-Driven Information

Product pages rely on structured blocks containing features, specifications, pricing, availability, and related metadata. AI crawlers interpret these pages more accurately when product data is grouped into stable sections that highlight key attributes. Product page layouts benefit from a clear separation of entity-driven content, enabling more precise extraction and reuse across search interfaces and recommendation models.

FAQ Layouts and Fragment Extraction

FAQ templates use short factual blocks that align well with crawler expectations for concise information extraction. When questions and answers follow consistent patterns, systems can identify, isolate, and reuse fragments as independent meaning units. FAQ sections become easier for AI crawlers to parse when question–answer pairs maintain uniform formatting, predictable placement, and clear semantic boundaries.

Content-type template consistency reflects principles emphasized in the W3C Architecture of the World Wide Web, which underscores the importance of stable and predictable structures for machine interpretation.
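For the FAQ pattern described above, Schema.org defines the FAQPage, Question, and Answer types, which let each question–answer pair be declared as its own unit. The hypothetical helper below builds that structure from a list of pairs; whether a given search or AI system uses it is not guaranteed.

```python
import json


def faq_jsonld(pairs: list) -> dict:
    """Build a Schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


faq = faq_jsonld([
    ("How many H1 headings should a page use?", "One, defining the page topic."),
    ("Where should JSON-LD go?", "In a consistent location, such as the document head."),
])
print(json.dumps(faq, indent=2))
```

Each question keeps its answer nested directly beneath it, which matches the uniform, self-contained question–answer blocks recommended for FAQ templates.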

Handling Interactive Elements, Scripts, and Rendering Models

Interactive components influence how AI crawlers interpret a page, especially when functionality depends on script-driven behavior. Dynamic layers introduce uncertainty because rendering may differ between human browsers and machine agents. When interactive elements are not paired with stable fallbacks, systems struggle to keep the site's structure consistently visible.

Deep Reasoning Chain

Assertion: Script-dependent layouts reduce crawler reliability when key content requires dynamic rendering.

Reason: AI crawlers cannot consistently execute complex scripts or replicate full browser environments.

Mechanism: Systems evaluate static HTML first, apply limited JavaScript execution, and defer elements that fail to resolve within restricted rendering cycles.

Counter-Case: Pages that rely on reactive components without fallback markup cause metadata, sections, or content blocks to remain unreadable to crawlers.

Inference: Sites that provide predictable, script-independent alternatives improve visibility because crawlers interpret structure from stable, accessible HTML.

JavaScript and Rendering Challenges

JavaScript can cause partial rendering because not all crawlers support full script execution, many operate in throttled environments, and complex hydration may not complete. These rendering limits determine how much of the interface becomes visible to AI crawlers during processing. Modern systems degrade gracefully by interpreting static layers first, but dynamic components may be skipped entirely if they fail to load within restricted execution windows.
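A quick way to estimate what a non-rendering crawler sees is to read only the text present in the raw HTML response, skipping script and style bodies. The sketch below assumes you already have the unrendered HTML (for example, fetched without a browser) and reports which required phrases are missing from it; missing_without_js is a hypothetical name, and actual crawler rendering behavior varies.

```python
from html.parser import HTMLParser


class StaticText(HTMLParser):
    """Collect text present in the raw HTML, ignoring script/style/template bodies."""

    SKIP = {"script", "style", "template"}

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.parts.append(data)


def missing_without_js(raw_html: str, required: list) -> list:
    """Return the required phrases that a non-rendering crawler would not see."""
    parser = StaticText()
    parser.feed(raw_html)
    text = " ".join(" ".join(parser.parts).split())  # normalize whitespace
    return [phrase for phrase in required if phrase not in text]


raw = ('<main><h1>Pricing</h1><div id="app"></div>'
       '<script>document.getElementById("app").textContent = "Plans from $9";</script></main>')
print(missing_without_js(raw, ["Pricing", "Plans from $9"]))
```

Here the price text exists only inside a script, so it is reported as invisible to a crawler that does not execute JavaScript.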

Expandable Sections and Hidden Content

Expandable components create visibility issues because hidden content is often excluded from structural interpretation. Elements that depend on toggles, collapsible regions, or scroll-triggered displays cause collapse/expand layout issues for AI crawlers when no static equivalent exists. Structured summaries placed outside collapsed elements help crawlers understand context even when the interactive details remain inaccessible.

Overloaded Frameworks and Crawl Delays

Heavy frameworks increase load times and obstruct crawler access to underlying content. Framework-heavy layouts block AI crawlers when rendering pipelines exceed crawler time limits or when hydration fails. Mitigation requires reducing bundle size, limiting reactive dependencies, and ensuring that key structural elements appear in the raw HTML.

Techniques for Making Interactive Elements Crawl-Friendly

- Pre-rendering

- Static fallback

- Server-side rendering

- Clean hydration

These approaches align with render accessibility recommendations published in the W3C Client-Side Scripting and Accessibility Guidelines.
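Server-side rendering and static fallbacks share one idea: the complete content is present in the initial HTML response. The minimal Python sketch below renders sections into a full document with html.escape; in practice a web framework or template engine would do this, and render_page is a hypothetical name.

```python
from html import escape


def render_page(title: str, sections: list) -> str:
    """Server-side render: emit complete, script-independent HTML for a page.

    `sections` holds (heading, paragraph) pairs; every value is escaped so
    user-supplied text cannot inject markup.
    """
    body = "\n".join(
        f"  <section>\n    <h2>{escape(heading)}</h2>\n    <p>{escape(text)}</p>\n  </section>"
        for heading, text in sections
    )
    return (
        '<!DOCTYPE html>\n<html lang="en">\n<head><meta charset="utf-8">'
        f"<title>{escape(title)}</title></head>\n<body>\n<main>\n"
        f"  <h1>{escape(title)}</h1>\n{body}\n</main>\n</body>\n</html>"
    )


print(render_page("Layouts & Crawlers", [
    ("Headings", "Use one H1 per page."),
    ("Scripts", "Provide <noscript> fallbacks."),
]))
```

Because headings and sections arrive in the first response, a crawler that never runs JavaScript still receives the full hierarchy.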

Mobile and Responsive Layouts for AI Crawler Accessibility

Mobile-first design influences how crawlers interpret structure because most systems evaluate the mobile HTML as the primary version of a page. When the mobile template follows clean patterns, its structured HTML becomes easier for systems to segment and reuse. Responsiveness also affects the stability of the AI crawler site structure, which requires consistent patterns across breakpoints to maintain accurate interpretation.

Deep Reasoning Chain

Assertion: Mobile and responsive templates shape how crawlers interpret structural relationships across different display contexts.

Reason: AI systems analyze the mobile version first and rely on it to extract the primary meaning of each page.

Mechanism: Crawlers evaluate mobile layout hierarchy, responsive patterns, navigation visibility, and breakpoint behavior to determine how structure changes across devices.

Counter-Case: When mobile and desktop structures diverge significantly, crawlers misinterpret scope, omit sections, or incorrectly map relationships between elements.

Inference: Pages with stable mobile-first patterns improve visibility because AI systems interpret meaning from predictable, responsive structures.

Why AI Systems Prioritize Mobile Layout

AI systems prioritize mobile layout because mobile HTML often loads faster, maintains simpler hierarchy, and exposes critical elements without complex styling overhead. Structural differences between mobile and desktop versions influence how crawlers interpret relationships, and this affects the stability of the AI crawler site structure. When mobile layout is clean and semantically consistent, crawlers build more accurate representations of page meaning.
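When a site serves separate mobile and desktop HTML, the structural differences mentioned above can be detected by comparing heading outlines. This sketch assumes both versions have already been fetched; parity_gaps is a hypothetical helper that reports headings present in one version but not the other.

```python
from html.parser import HTMLParser


class Outline(HTMLParser):
    """Collect [level, text] for every heading in document order."""

    def __init__(self):
        super().__init__()
        self.headings = []
        self._level = None

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self._level = int(tag[1])
            self.headings.append([self._level, ""])

    def handle_endtag(self, tag):
        if self._level is not None and tag == f"h{self._level}":
            self._level = None

    def handle_data(self, data):
        if self._level is not None:
            self.headings[-1][1] += data


def outline(html: str) -> list:
    parser = Outline()
    parser.feed(html)
    return [(level, " ".join(text.split())) for level, text in parser.headings]


def parity_gaps(mobile_html: str, desktop_html: str) -> dict:
    """Headings present in one version but not the other."""
    mobile, desktop = set(outline(mobile_html)), set(outline(desktop_html))
    return {"missing_on_mobile": sorted(desktop - mobile),
            "missing_on_desktop": sorted(mobile - desktop)}


desktop = "<h1>Guide</h1><h2>Setup</h2><h2>Advanced options</h2>"
mobile = "<h1>Guide</h1><h2>Setup</h2>"
print(parity_gaps(mobile, desktop))
```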

Responsive Patterns and Breakpoint Stability

Responsive templates introduce layout shifts as screen width changes, and these shifts can disrupt interpretation if they alter the order or visibility of key components. A responsive layout becomes unreliable for AI crawlers when major blocks collapse or reorder across breakpoints. Stability requires maintaining a consistent hierarchy regardless of viewport size so that crawlers interpret meaning from the same structural cues.

Mobile Navigation and Collapsed Elements

Collapsed mobile navigation hides links that crawlers may not access if they require interaction or script execution. Hidden menu behaviors reduce hierarchical clarity and may prevent crawlers from identifying important pathways. Explicit links and stable markup allow crawlers to interpret navigation correctly and maintain complete visibility across mobile contexts.

These principles align with recommendations in the W3C Mobile Web Best Practices, which emphasize structural stability and predictable responsive behavior for machine accessibility.

Image Semantics, Alt Text, and Visual Structure Interpretation

Images influence how systems interpret meaning because visual components create additional semantic signals that complement textual structure. When images follow predictable patterns, crawlers identify how they relate to the surrounding blocks and integrate them into the AI crawler site structure. Effective placement and annotation strengthen the semantics of image layouts and help systems extract context from both visual and textual elements.

Deep Reasoning Chain

Assertion: Image semantics shape how AI crawlers interpret contextual meaning across visual and textual blocks.

Reason: Alt text, captions, and placement patterns provide additional cues that support meaning extraction beyond HTML structure.

Mechanism: Crawlers evaluate alt text, spatial grouping, and metadata to determine how images relate to nearby content and to classify the type of information each image conveys.

Counter-Case: When images lack descriptive metadata or appear in visually dense clusters, crawlers fail to map them to the intended content, resulting in incomplete interpretation.

Inference: Pages that establish consistent visual semantics improve interpretive accuracy because crawlers rely on predictable metadata and structured placement.

How AI Crawlers Read Alt Text as Semantic Clues

Alt text provides metadata that enables crawlers to interpret the purpose and context of an image. Systems use alt text as a semantic clue that complements the structural signals on the page, which makes it essential for content understanding. Precision is critical because crawlers extract meaning from clear, factual descriptions rather than decorative language or stylistic wording.
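The sketch below applies these expectations to img elements. Its heuristics for non-descriptive alt text (filename-like values, and generic words such as "image" optionally followed by a number) are illustrative assumptions, and an empty alt is only reported for review because it is the correct choice for purely decorative images.

```python
import re
from html.parser import HTMLParser

# Illustrative heuristics for alt text that describes nothing.
FILENAME_LIKE = re.compile(r"\.(jpe?g|png|gif|webp|svg)$", re.IGNORECASE)
PLACEHOLDERS = {"image", "img", "photo", "picture", "graphic"}


class AltAudit(HTMLParser):
    """Collect (src, problem) findings for <img> elements."""

    def __init__(self):
        super().__init__()
        self.findings = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        a = dict(attrs)
        src = a.get("src", "?")
        if "alt" not in a:
            self.findings.append((src, "missing alt attribute"))
            return
        alt = (a["alt"] or "").strip()
        if not alt:
            # Empty alt is correct for purely decorative images; flag for review.
            self.findings.append((src, "empty alt (acceptable only if decorative)"))
        elif FILENAME_LIKE.search(alt) or alt.lower().rstrip(" 0123456789") in PLACEHOLDERS:
            self.findings.append((src, f"non-descriptive alt {alt!r}"))


audit = AltAudit()
audit.feed('<img src="a.png" alt="Bar chart of crawl errors by template">'
           '<img src="b.png"><img src="c.png" alt="">'
           '<img src="d.png" alt="image 1"><img src="e.png" alt="IMG_2041.JPG">')
for src, problem in audit.findings:
    print(src, problem)
```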

Visual Layout and Content Grouping

Image placement affects how crawlers understand relationships between visual and textual elements. Images positioned close to relevant content blocks help systems associate them with the correct concepts, reinforcing image semantics within the broader structure. Pairing images with structured captions further clarifies their purpose and ensures that crawlers map the visual component to the appropriate section.
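Caption pairing can be checked in a similar way. The hypothetical FigureCheck parser below reports figure elements that contain an image but no figcaption; it assumes figures are not nested inside each other.

```python
from html.parser import HTMLParser


class FigureCheck(HTMLParser):
    """Report <figure> elements that contain an image but no <figcaption>."""

    def __init__(self):
        super().__init__()
        self.uncaptioned = []   # src of the first image in each uncaptioned figure
        self._figure = None     # state for the figure currently open

    def handle_starttag(self, tag, attrs):
        if tag == "figure":
            self._figure = {"src": None, "caption": False}
        elif self._figure is not None:
            if tag == "img" and self._figure["src"] is None:
                self._figure["src"] = dict(attrs).get("src")
            elif tag == "figcaption":
                self._figure["caption"] = True

    def handle_endtag(self, tag):
        if tag == "figure" and self._figure is not None:
            if self._figure["src"] and not self._figure["caption"]:
                self.uncaptioned.append(self._figure["src"])
            self._figure = None


check = FigureCheck()
check.feed('<figure><img src="a.png" alt="Crawl path diagram"><figcaption>Crawl paths</figcaption></figure>'
           '<figure><img src="b.png" alt="Template comparison"></figure>')
print(check.uncaptioned)
```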

Image-Heavy Layouts and Structural Noise

Pages with excessive or ungrouped images create structural noise that disrupts meaning extraction. Large galleries without semantic grouping prevent crawlers from understanding which images are relevant to the topic and which serve decorative roles. Maintaining structured placement and consistent grouping ensures that the AI crawler site structure remains clear and interpretable across visually complex layouts.

These practices follow visual accessibility expectations described in the W3C Alt Text and Image Description Guidelines, which emphasize clarity and semantic alignment.

Layout Validation, Diagnostics, and Structural Auditing

Validation and diagnostics performed before publication determine how effectively crawlers will interpret structural meaning. Clear verification processes ensure that each page maintains consistent patterns that support the AI crawler site structure and prevent structural drift across templates. When teams apply layout validation tools consistently, layout clarity improves and interpretation conflicts during machine processing decrease.

Deep Reasoning Chain

Assertion: Systematic layout validation ensures that crawlers interpret structure accurately across all published pages.

Reason: Validation exposes weaknesses that disrupt meaning extraction, such as missing headings, inconsistent hierarchy, or excessive script dependencies.

Mechanism: Teams use HTML validators, structured data analyzers, and template-level audits to detect errors that influence how crawlers process layout.

Counter-Case: When validation is skipped or inconsistent, structural issues accumulate and cause crawlers to misinterpret the site structure over time.

Inference: Consistent auditing produces predictable markup because each page aligns with stable patterns verified through automated and manual checks.

Tools for Layout Validation

Validation begins with automated tools that identify defects in markup and structural logic. HTML5 validators highlight syntax issues and missing attributes that influence crawler interpretation. Structured data analyzers evaluate metadata accuracy, and DOM structure checkers reveal problems that break layout consistency. Running these tools before publication ensures that structural errors are addressed early.

Structural Weaknesses Crawlers Commonly Flag

Crawlers frequently detect missing headings that break hierarchical continuity and reduce interpretive accuracy. Over-fragmentation creates noise by dividing content into excessively small blocks, while redundant div nesting increases complexity without improving clarity. These issues add layout ambiguity and undermine the layout stability that a markup audit is meant to protect.
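Redundant div nesting is easy to measure. The sketch below tracks the longest run of directly nested div elements; the class name is hypothetical, and any threshold you compare the result against (for example, more than four levels) is a team convention rather than a crawler rule.

```python
from html.parser import HTMLParser

# Void elements never get a closing tag, so they are not pushed on the stack.
VOID = {"area", "base", "br", "col", "embed", "hr", "img", "input",
        "link", "meta", "source", "track", "wbr"}


class DivDepth(HTMLParser):
    """Track the deepest run of directly nested <div> elements."""

    def __init__(self):
        super().__init__()
        self.stack = []
        self.max_div_run = 0

    def handle_starttag(self, tag, attrs):
        if tag in VOID:
            return
        self.stack.append(tag)
        run = 0
        for open_tag in reversed(self.stack):  # count consecutive divs from the top
            if open_tag != "div":
                break
            run += 1
        self.max_div_run = max(self.max_div_run, run)

    def handle_endtag(self, tag):
        if tag in self.stack:
            # Pop up to and including the matching tag (tolerates unclosed tags).
            while self.stack.pop() != tag:
                pass


depth = DivDepth()
depth.feed("<main><div><div><div><div><div><p>Text</p></div></div></div></div></div></main>")
print(depth.max_div_run)
```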

Pre-Publication Structural Checklist

A structured checklist improves layout consistency by enforcing essential rules before content goes live. Stable hierarchy ensures reliable segmentation, clean sectioning separates topics into meaningful units, and annotated metadata clarifies relationships between elements. Non-interactive priority content ensures that essential blocks remain accessible even when scripts fail or dynamic components are not rendered.

Structural Errors and Fixes

| Error | Impact | Fix |
|---|---|---|
| Broken heading order | Meaning confusion | Rebuild hierarchy |
| Excess JS | Rendering failure | Provide fallback |
| Hidden text | Incomplete indexing | Use visible anchors |

These validation principles align with the markup review processes described in the W3C Markup Validation Service, which emphasizes structural precision and consistent page logic.

Templates and Repeatable Patterns That Improve Machine Interpretation

Predictable, modular templates help systems interpret meaning consistently because repeated structures let crawlers recognize patterns across the AI crawler site structure. When templates follow stable logic, crawlers map topics, segments, and relationships with higher accuracy. Consistent patterns also improve how layouts divide content into chunks by aligning meaning with clearly defined structural boundaries.

Deep Reasoning Chain

Assertion: Repeatable templates improve crawler interpretation by creating predictable pathways for meaning extraction.

Reason: Crawlers rely on structural repetition to identify which elements carry semantic weight and which represent auxiliary or decorative components.

Mechanism: Systems evaluate chunk boundaries, template consistency, and block-level organization to map content into reusable meaning units.

Counter-Case: Without repeatable patterns, crawlers misinterpret relationships between blocks and fail to distinguish meaningful content from unstructured noise.

Inference: Pages that follow modular templates support stable interpretation because crawlers use template patterns to analyze and reuse content predictably.

Chunk-Based Content Architecture

Chunk-based architecture improves reuse by dividing information into coherent meaning units that support segmentation. Atomic blocks give crawlers precise boundaries that enable efficient extraction. When each block focuses on a single concept, crawlers interpret structure with greater confidence and reuse content across multiple contexts.

Structured vs Unstructured Layout Differences

Structured layouts provide clear boundaries that separate topics into logically organized segments. Crawlers interpret these segments by following predictable patterns that highlight the hierarchy and internal logic of the page. Unstructured content disrupts interpretation because it lacks consistent grouping, creating ambiguity that makes it less reliable for AI crawlers than a structured layout.

Removing Redundant or Decorative Layout Elements

Pages containing unnecessary visual components create noise that distracts crawlers from essential meaning. Removing redundant layout elements improves interpretability by reducing complexity and highlighting core structural components. Clean interfaces keep redundant elements to a minimum and clarify the AI crawler site structure, ensuring that crawlers focus on content rather than decorative blocks.

These principles align with modular design guidance described in the W3C Design Principles, which emphasize predictable structure and reusable patterns for consistent interpretation.

Checklist:

- Does the page use a single H1 and a logical H2–H4 sequence?

- Are sections defined using semantic tags like <section>, <article>, and <aside>, each with a clear purpose?

- Does each paragraph focus on a single idea to support AI extraction?

- Are key terms defined locally to build a reusable knowledge graph?

- Is the internal linking structure clear and contextual to support crawler pathways?

- Is the mobile layout consistent, and does it maintain semantic structure across breakpoints?

Best Practices for Maintaining Layout Stability Over Time

Long-term stability ensures that crawlers interpret structure consistently as a site evolves. Sustainable design practices prevent structural drift and preserve the integrity of the AI crawler site structure across updates. When teams keep structured layouts crawlable for AI bots and apply page layout best practices over time, systems interpret meaning predictably even as the site expands.

Deep Reasoning Chain

Assertion: Maintaining stable layouts over time preserves crawler understanding and prevents structural degradation.

Reason: Crawlers build internal expectations about how a site organizes meaning, and these expectations depend on consistent templates and predictable updates.

Mechanism: Teams apply governance rules, validate templates, and review structural behavior after each release to confirm that changes do not disrupt visibility.

Counter-Case: Frequent redesigns, unplanned template variations, or inconsistent markup introduce drift that weakens structured layout crawlability for AI bots and breaks established interpretive patterns.

Inference: Stable, methodical updates support long-term clarity because crawlers rely on persistent layout logic when mapping meaning across a growing site.

Version-Safe Layout Updates

Maintaining version-safe updates requires avoiding changes that disrupt the hierarchy or break established patterns. Templates should evolve gradually to preserve structural continuity and ensure AI crawler page layout best practices remain intact. Stable design reduces the risk of unexpected segmentation issues when crawlers process new or updated pages.
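One way to catch hierarchy-breaking changes before release is to extract the heading outline from the old and new versions of a template and diff them. This sketch assumes both versions are available as HTML strings; it uses html.parser and difflib.ndiff from the Python standard library, and the helper names are illustrative.

```python
import difflib
from html.parser import HTMLParser


class OutlineExtractor(HTMLParser):
    """Collects 'hN text' entries for h1-h6 elements in document order."""

    def __init__(self):
        super().__init__()
        self.outline = []
        self._level = None  # level of the heading currently open, if any
        self._text = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self._level = int(tag[1])
            self._text = []

    def handle_data(self, data):
        if self._level is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if self._level is not None and tag == f"h{self._level}":
            # Collapse internal whitespace so formatting-only edits are ignored.
            text = " ".join("".join(self._text).split())
            self.outline.append(f"h{self._level} {text}")
            self._level = None


def heading_outline(html):
    parser = OutlineExtractor()
    parser.feed(html)
    parser.close()
    return parser.outline


def outline_changes(old_html, new_html):
    """Return only the removed ('- ') and added ('+ ') outline entries."""
    diff = difflib.ndiff(heading_outline(old_html), heading_outline(new_html))
    return [line for line in diff if line.startswith(("- ", "+ "))]


if __name__ == "__main__":
    old = "<h1>Guide</h1><h2>Setup</h2><h2>Usage</h2>"
    new = "<h1>Guide</h1><h2>Setup</h2><h3>Usage</h3>"
    for change in outline_changes(old, new):
        print(change)
```

An empty result means the heading outline is unchanged; a pair such as "- h2 Usage" and "+ h3 Usage" flags a demoted section for review before the template ships.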

Long-Term Structural Governance

Governance practices maintain structural consistency across large content ecosystems. Documentation ensures that teams follow uniform rules when creating or updating templates, and template libraries reduce variability by providing predefined patterns. Reusable components further strengthen the AI crawler site structure by keeping recurring blocks consistent across hundreds of pages.

Monitoring Crawlability Through Change Cycles

Monitoring ensures that structural performance remains stable after each deployment. Teams track crawler behavior to identify regressions, confirm that layout changes remain accessible, and validate hierarchical integrity. Running diagnostics after updates verifies that structured layout crawlability for AI bots remains consistent and prevents unnoticed structural drift.
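For post-deployment diagnostics, one lightweight approach is to fingerprint each page's structural skeleton (the ordered sequence of landmark, sectioning, and heading tags) and compare it with a baseline recorded before the release. This is a sketch under assumptions: the SKELETON_TAGS set, the baseline dictionary keyed by URL, and the function names are hypothetical choices, and a changed fingerprint signals only that structure moved, not that any crawler will interpret the page worse.

```python
import hashlib
from html.parser import HTMLParser

# Hypothetical choice of tags whose order defines a page's structural skeleton.
SKELETON_TAGS = {"header", "nav", "main", "article", "section", "aside",
                 "footer", "h1", "h2", "h3", "h4", "h5", "h6"}


class SkeletonExtractor(HTMLParser):
    """Records skeleton tags in document order, ignoring all text content."""

    def __init__(self):
        super().__init__()
        self.sequence = []

    def handle_starttag(self, tag, attrs):
        if tag in SKELETON_TAGS:
            self.sequence.append(tag)


def skeleton_fingerprint(html):
    parser = SkeletonExtractor()
    parser.feed(html)
    parser.close()
    return hashlib.sha256(">".join(parser.sequence).encode("utf-8")).hexdigest()


def find_regressions(baseline, deployed_pages):
    """Return URLs whose skeleton differs from the baseline fingerprint.

    Pages missing from the baseline are reported as well, because
    baseline.get() returns None for them.
    """
    return sorted(url for url, html in deployed_pages.items()
                  if skeleton_fingerprint(html) != baseline.get(url))


if __name__ == "__main__":
    before = ("<header></header><main><h1>T</h1><section><h2>S</h2>"
              "</section></main><footer></footer>")
    text_edit = before.replace("<h1>T</h1>", "<h1>New title</h1>")
    lost_main = before.replace("<main>", "<div>").replace("</main>", "</div>")
    baseline = {"/a": skeleton_fingerprint(before),
                "/b": skeleton_fingerprint(before)}
    print(find_regressions(baseline, {"/a": text_edit, "/b": lost_main}))  # ['/b']
```

Hashing only the tag sequence means routine text edits do not trigger alerts, while a template change that swaps <main> for a generic <div> does.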

These maintenance practices align with recommendations described in the W3C Quality Assurance Framework, which emphasizes consistency, validation, and long-term structural governance.

Final Recommendations and Operational Summary

Maintaining a stable structure ensures that crawlers interpret meaning accurately across expanding content ecosystems. A clear page hierarchy for AI engines strengthens segmentation, while semantic page architecture and an AI-focused content layout support consistent interpretation regardless of template or content type.

Deep Reasoning Chain

Assertion: Operational consistency ensures that crawlers interpret structure and meaning reliably across all pages.

Reason: Stable hierarchy, predictable segmentation, and clear metadata patterns help systems extract meaning with minimal ambiguity.

Mechanism: Governance rules, template standardization, and periodic audits keep structural components aligned across updates and new content.

Counter-Case: Without long-term operational discipline, layouts drift, metadata becomes inconsistent, and crawlers lose the ability to map relationships correctly.

Inference: Pages remain aligned with crawler expectations when teams apply structured governance and maintain semantic stability over time.

Key Patterns to Maintain

Predictable hierarchy improves interpretive clarity by giving crawlers a consistent pathway through each page. Meaning-driven segmentation ensures that each block represents a single concept, and metadata consistency supports accurate contextual mapping across the site.
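Metadata consistency can be audited in a similar way. The sketch below collects the top-level @type values declared in each page's JSON-LD blocks and reports which required types are missing. The required set, the page dictionary, and the function names are assumptions for illustration; the sketch also skips invalid JSON and does not follow @graph containers or nested entities, which a fuller audit would need to handle.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collects the raw text of <script type="application/ld+json"> blocks."""

    def __init__(self):
        super().__init__()
        self.blocks = []
        self._capturing = False
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._capturing = True
            self._buffer = []

    def handle_data(self, data):
        if self._capturing:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._capturing:
            self.blocks.append("".join(self._buffer))
            self._capturing = False


def jsonld_types(html):
    """Return the set of top-level @type values declared in a page's JSON-LD."""
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    types = set()
    for block in parser.blocks:
        try:
            data = json.loads(block)
        except json.JSONDecodeError:
            continue  # invalid JSON-LD is skipped in this sketch
        for item in data if isinstance(data, list) else [data]:
            if isinstance(item, dict) and "@type" in item:
                declared = item["@type"]
                types.update(declared if isinstance(declared, list) else [declared])
    return types


def metadata_gaps(pages, required):
    """Map each URL to the required schema types its JSON-LD does not declare."""
    gaps = {}
    for url, html in pages.items():
        missing = required - jsonld_types(html)
        if missing:
            gaps[url] = sorted(missing)
    return gaps
```

Running metadata_gaps over every page built from the same template gives a quick view of where metadata patterns have drifted apart.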

Structural Governance Framework

Governance relies on structured checklists that define essential requirements for each page. Templates maintain uniform layouts that reduce variability, and layout audits ensure that structural patterns remain stable as the site evolves.

Long-Term Alignment With AI Crawlers

Long-term stability requires keeping pages aligned with emerging crawler capabilities. This includes reviewing structural behavior, updating patterns when necessary, and ensuring that semantic architecture remains clear as crawler models become more capable.

Interpretive Patterns of AI Crawler Layout Parsing

- Layout boundary recognition. Crawlers interpret pages through detected zones such as headings, navigation areas, and content regions that define semantic responsibility.

- Hierarchical regularity. Predictable heading depth and semantic HTML elements act as primary signals for scope resolution and content prioritization.

- Metadata-assisted context framing. Descriptive metadata layers provide auxiliary context that supports layout interpretation without overriding on-page structure.

- Pathway continuity. Internal navigation and contextual links are read as semantic pathways that connect related meaning clusters across the site.

- Template-level interpretive stability. Consistent crawler behavior across page templates indicates durable structural logic rather than page-specific optimization.

These patterns describe how AI crawlers interpret structured layouts as coherent semantic maps, where hierarchy, boundaries, and pathways guide understanding independently of procedural optimization.
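The layout boundary recognition pattern can be approximated with a simple segmenter that assigns each text fragment to its innermost HTML landmark element. This is an illustrative model, not a description of how any particular crawler works: it recognizes only the <header>, <nav>, <main>, <aside>, and <footer> elements, ignores ARIA role attributes, and the class name is hypothetical.

```python
from html.parser import HTMLParser

# Landmark elements treated as zone boundaries in this sketch.
LANDMARKS = {"header", "nav", "main", "aside", "footer"}


class ZoneSegmenter(HTMLParser):
    """Assigns each text fragment to its innermost enclosing landmark."""

    def __init__(self):
        super().__init__()
        self.landmark_stack = []
        self.zones = {}

    def handle_starttag(self, tag, attrs):
        if tag in LANDMARKS:
            self.landmark_stack.append(tag)

    def handle_endtag(self, tag):
        # Only pop when the closing tag matches the innermost open landmark.
        if self.landmark_stack and self.landmark_stack[-1] == tag:
            self.landmark_stack.pop()

    def handle_data(self, data):
        text = data.strip()
        if text:
            zone = self.landmark_stack[-1] if self.landmark_stack else "unassigned"
            self.zones.setdefault(zone, []).append(text)


if __name__ == "__main__":
    segmenter = ZoneSegmenter()
    segmenter.feed("<header><nav><a href='/'>Home</a></nav><p>Site name</p></header>"
                   "<main><h1>Topic</h1><p>Body text.</p></main>"
                   "<aside>Related</aside><footer>Contact</footer>")
    segmenter.close()
    print(segmenter.zones)
```

Text that lands in "unassigned" is a hint that content sits outside any semantic region, which is exactly the kind of boundary ambiguity the patterns above describe.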

FAQ: AI Crawlers and Structured Layouts

How do AI crawlers understand page structure?

AI crawlers interpret structure by analyzing headings, semantic tags, section boundaries, and internal patterns that reveal the hierarchy of ideas.

Why is semantic HTML important for AI crawlers?

Semantic HTML provides explicit interpretive roles for content zones, helping crawlers classify navigation, core topics, and supporting information.

What layout signals improve crawler accuracy?

Clean heading order, consistent sectioning, predictable block templates, and minimal noise improve segmentation and meaning extraction.

How do AI crawlers treat internal links?

Internal links act as conceptual pathways that reveal relationships between pages, helping systems map topical structure across a site.

Can AI crawlers parse interactive elements?

Crawlers handle static HTML reliably but may skip script-dependent or hidden content unless fallback markup is available.

How does mobile layout affect crawler interpretation?

Many crawlers evaluate the mobile version of a page first, so structural clarity on mobile directly influences meaning extraction and visibility.

What metadata helps AI crawlers the most?

WebPage, Article, BreadcrumbList, and Organization schema strengthen contextual understanding and reinforce semantic boundaries.

Do images influence structural interpretation?

Images contribute meaning when paired with precise alt text, captions, and consistent placement within related content blocks.

What causes crawlers to misinterpret layout?

Irregular heading order, heavy reliance on JavaScript rendering, hidden text, over-nesting, and inconsistent template patterns reduce structural clarity.

How do templates improve crawler reliability?

Predictable templates create stable interpretive patterns, enabling crawlers to reuse structural expectations across similar pages.

Glossary: Key Terms for AI Crawler Interpretation

This glossary defines essential structural and semantic terms that improve machine interpretation across AI crawlers and generative indexing systems.

AI Crawler Site Structure

The hierarchical arrangement of headings, semantic tags, navigation elements, and content sections that determines how AI crawlers interpret meaning across a page.

Semantic HTML

HTML elements that provide functional meaning rather than visual styling, helping crawlers classify content zones such as header, navigation, main content, and supporting blocks.

Structural Hierarchy

The ordered sequence of H1–H2–H3 headings that defines how ideas and subtopics relate, enabling crawlers to reconstruct conceptual layers accurately.
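Reconstructing conceptual layers from a heading sequence amounts to building a tree: each heading becomes a child of the nearest preceding heading with a shallower level. A minimal sketch, assuming the headings have already been extracted as (level, title) pairs; the Node type and the function names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    level: int
    title: str
    children: list = field(default_factory=list)


def build_outline(headings):
    """Nest (level, title) pairs into a tree rooted at a synthetic level-0 node."""
    root = Node(0, "root")
    stack = [root]
    for level, title in headings:
        node = Node(level, title)
        # Climb until the top of the stack is a shallower heading; the
        # level-0 root is never popped because real levels start at 1.
        while stack[-1].level >= level:
            stack.pop()
        stack[-1].children.append(node)
        stack.append(node)
    return root


def render(node, indent=0):
    """Flatten the tree into indented 'Hn title' lines."""
    lines = []
    for child in node.children:
        lines.append("  " * indent + f"H{child.level} {child.title}")
        lines.extend(render(child, indent + 1))
    return lines


if __name__ == "__main__":
    tree = build_outline([(1, "Guide"), (2, "Setup"), (3, "Install"), (2, "Usage")])
    print("\n".join(render(tree)))
```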

Sectioning Tags

HTML elements such as <section>, <article>, <aside>, and <figure> that divide content into discrete meaning units recognizable by AI crawlers.

Layout Predictability

A consistency property of templates that allows crawlers to reuse structural expectations across similar pages, improving segmentation and meaning extraction.

Metadata Layer

Structured annotations, typically schema.org vocabulary expressed as JSON-LD or microdata, that provide explicit factual and contextual signals beyond visible HTML.
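As an example of a metadata layer, the sketch below builds a schema.org BreadcrumbList as JSON-LD and wraps it in a <script type="application/ld+json"> element. The BreadcrumbList, ListItem, position, name, and item terms come from the schema.org vocabulary; the function names and example.com URLs are placeholders.

```python
import json


def breadcrumb_jsonld(trail):
    """Build a schema.org BreadcrumbList from (name, url) pairs, outermost first."""
    return {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": position, "name": name, "item": url}
            for position, (name, url) in enumerate(trail, start=1)
        ],
    }


def as_script_tag(data):
    """Serialize JSON-LD into the script element that carries it in HTML."""
    return ('<script type="application/ld+json">\n'
            + json.dumps(data, indent=2)
            + "\n</script>")


if __name__ == "__main__":
    trail = [("Home", "https://example.com/"),
             ("Guides", "https://example.com/guides/"),
             ("AI Crawlers", "https://example.com/guides/ai-crawlers/")]
    print(as_script_tag(breadcrumb_jsonld(trail)))
```

Generating the breadcrumb from the same data that drives the visible navigation keeps the metadata layer and the on-page hierarchy from drifting apart.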