Last Updated on December 20, 2025 by PostUpgrade

From Layout to Logic: Structuring for AI Interpretation

Modern AI systems derive meaning from explicit structural signals rather than visual layout. This shift highlights AI Content Structuring as a core requirement for accurate interpretation. The article introduces the principles and mechanisms that support high-fidelity understanding across generative engines.

The Foundations of Machine-Readable Structuring

AI Content Structuring defines how information is organized for consistent interpretation across retrieval and generative systems. This section introduces the core patterns that support machine-readable clarity. Its purpose is to establish the foundation for conceptual, operational, and architectural consistency, drawing on interpretability principles demonstrated by research groups such as Stanford NLP.

Machine-readable structuring is the deliberate organization of content using stable labels, predictable hierarchy, and explicit boundaries that allow AI systems to build accurate internal meaning graphs.

Claim: Structured content produces more consistent and accurate AI interpretations.

Rationale: Predictable hierarchies allow models to reduce ambiguity and align meaning across tokens.

Mechanism: AI systems use explicit section markers, sentence boundaries, and semantic anchors to map relationships.

Counterargument: Unstructured text may still be parsed, but interpretation becomes unstable and may degrade over time.

Conclusion: Stable structure is a prerequisite for scalable AI comprehension.

Definition: AI understanding refers to a model’s capacity to interpret structured meaning, hierarchical signals, and conceptual boundaries in a way that enables accurate reasoning, stable summarization, and consistent reuse of content across generative systems.

Concept Structure in Machine Interpretation

Concept structure defines the categorical boundaries used by AI systems to classify meaning, and it operates as a foundational layer within AI Content Structuring. This subsection explains how structured reasoning layout supports reliable classification. Its purpose is to provide a conceptual framework for later mechanistic sections.

Effective concept organization begins with establishing discrete categories that reflect stable semantic units. AI models interpret these units as nodes within a larger meaning graph, enabling consistent recognition across contexts and reinforcing the internal logic of AI Content Structuring.

Clear categorization reduces noise and supports high-resolution interpretation when documents scale.

Concept structure also determines how systems generalize meaning. When categories are presented with precision, AI engines avoid blending unrelated ideas and maintain coherent segmentation during extraction and summarization. This stability strengthens all downstream interpretive tasks and aligns directly with the goals of AI Content Structuring.

Hierarchical Concept Placement

Hierarchical concept placement determines how models align concepts with contextual depth. This layer supports consistent interpretation across document sections. The goal is to establish positional clarity.

Hierarchies enable engines to differentiate between primary and secondary information, allowing them to structure terms, definitions, and mechanisms according to their conceptual importance. This reduces interpretive ambiguity by guiding models through predictable levels of detail.

When concepts consistently appear at the correct structural depth, engines develop stable internal mappings that contribute to higher accuracy in reasoning and retrieval. These mappings form persistent interpretive anchors that models reuse across document layers.

Concept Block — Core Elements of Conceptual Structuring

- Definition of conceptual nodes that establish discrete meaning units.

- Purpose of category precision to ensure unambiguous classification.

- Dependency relations that indicate how concepts link across hierarchical segments.

These elements support interpretable meaning graphs that models can consistently reuse during reasoning.
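The three elements above can be modeled as a small dependency graph. The sketch below is a hypothetical illustration, not a description of how any AI system stores concepts. It assumes each concept is recorded as a `ConceptNode` (an invented type) with a name, a category, and the names of the concepts it depends on. It rejects dependencies that point at undefined concepts or that form a cycle. Otherwise it returns an order in which every concept is introduced after the concepts it relies on, using the standard-library `graphlib` module (Python 3.9+).

```python
from dataclasses import dataclass
from graphlib import CycleError, TopologicalSorter  # standard library, Python 3.9+


@dataclass(frozen=True)
class ConceptNode:
    """Hypothetical record for one discrete meaning unit."""
    name: str
    category: str
    depends_on: tuple[str, ...] = ()


def introduction_order(nodes: list[ConceptNode]) -> list[str]:
    """Order concept names so each one follows the concepts it depends on.

    Raises ValueError for dependencies on undefined concepts or for cycles.
    """
    known = {node.name for node in nodes}
    graph: dict[str, set[str]] = {}
    for node in nodes:
        missing = [dep for dep in node.depends_on if dep not in known]
        if missing:
            raise ValueError(f"{node.name!r} depends on undefined concepts: {missing}")
        graph[node.name] = set(node.depends_on)  # node -> its prerequisites
    try:
        return list(TopologicalSorter(graph).static_order())
    except CycleError as err:
        # err.args[1] holds the nodes that form the cycle.
        raise ValueError(f"circular concept dependency: {err.args[1]}") from err


nodes = [
    ConceptNode("boundary signal", "structure"),
    ConceptNode("hierarchical depth", "structure", ("boundary signal",)),
    ConceptNode("semantic scaling", "mechanism", ("hierarchical depth",)),
]
order = introduction_order(nodes)  # prerequisites come first
```

A topological sort is used here because it directly expresses "define before use". Concepts with no ordering constraint between them may appear in either order.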

Boundary Signals in Machine-Readable Layouts

Boundary signals are structural markers used by models to segment meaning. This subsection describes how layout logic for AI influences interpretation accuracy. Its purpose is to define segmentation rules for AI systems that depend on predictable boundaries.

Boundary signals help models establish the limits of each semantic unit, preventing unintended blending of adjacent content. They define where interpretive focus should shift, enabling models to segment text into coherent, retrievable components. This segmentation ensures that each unit can be independently classified, summarized, or reused.

Models rely on boundary regularity to maintain stability during long-context processing. When boundaries are predictable, models spend less effort resolving where one unit ends and the next begins, which supports more accurate reasoning and structured extraction.
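The sketch below shows one way an authoring pipeline might turn boundary signals into independently retrievable units. It assumes the document uses Markdown ATX headings (`## Title`) as its section delimiters. The `split_sections` helper and the `Section` type are illustrative names, and real extraction systems may segment text differently.

```python
import re
from typing import NamedTuple

# Assumption: headings use Markdown ATX syntax ("## Title").
HEADING = re.compile(r"^(#{1,6})\s+(.+?)\s*#*\s*$")


class Section(NamedTuple):
    level: int   # 0 for text that precedes the first heading
    title: str
    body: str


def split_sections(text: str) -> list[Section]:
    """Split text into sections, starting a new one at every heading line."""
    sections: list[Section] = []
    level, title, lines = 0, "", []

    def flush() -> None:
        body = "\n".join(lines).strip()
        if title or body:  # skip an empty preamble
            sections.append(Section(level, title, body))

    for line in text.splitlines():
        match = HEADING.match(line)
        if match:
            flush()  # close the unit that the new heading ends
            level, title, lines = len(match.group(1)), match.group(2), []
        else:
            lines.append(line)
    flush()
    return sections


doc = """Intro line
## Boundary Signals
Text A
### Section Logic
Text B
## Hierarchical Depth
Text C"""
secs = split_sections(doc)
```

Each resulting `Section` can then be classified, summarized, or indexed on its own, which is the independence property the paragraphs above describe.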

Structural Boundaries and Section Logic

This subsection explains how structural expression rules reinforce segmentation reliability. It focuses on predictable positioning that enables stable extraction across engines.

Section logic ensures that each segment expresses a single purpose and contains coherent informational units. When authors apply section logic consistently, engines treat these segments as self-contained meaning clusters, reducing interpretive variance across models.

Positioning strategy also influences interpretability. Placing definitions, mechanisms, and implications in predictable locations improves an engine’s ability to reconstruct meaning during inference and retrieval processes.

Mechanism Block — Boundary Reinforcement Processes

| Boundary Type | Functional Role | Influence on AI Interpretation |
|---|---|---|
| Hierarchical markers | Identify segment position | Improve relational clarity between sections |
| Sentence-level cues | Indicate meaning transitions | Support deterministic contextual segmentation |
| Section delimiters | Establish macro boundaries | Increase extraction precision across models |

These boundaries improve interpretive stability by enabling systems to consistently isolate meaning segments.

Hierarchical Depth as a Signal for Generative Systems

Hierarchical depth provides generative engines with explicit cues for distributing meaning across multiple layers. This section defines depth as an interpretative signal and describes how multi-level organization guides model behavior, following principles demonstrated in structural analysis research from MIT CSAIL. Its purpose is to detail how multi-level structuring affects reasoning, segmentation, and context reconstruction across generative systems.

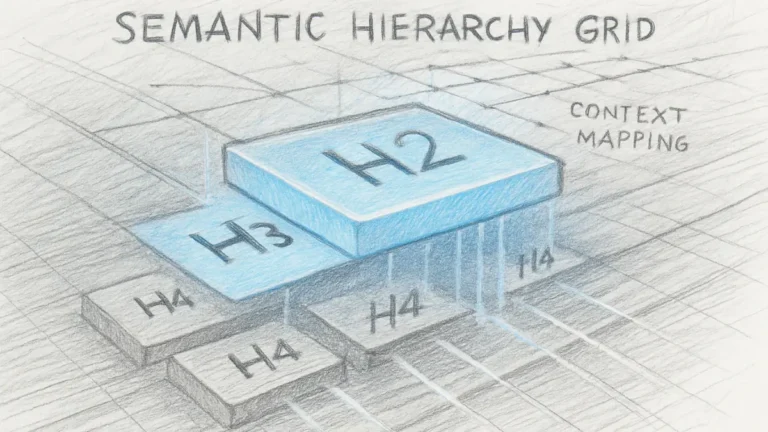

Hierarchical depth is the layered organization of content into H2→H3→H4 units that encode semantic proximity and contextual relevance by establishing stable interpretative boundaries.

Claim: Hierarchical depth enables more accurate multi-layer reasoning.

Rationale: Depth boundaries clarify relational dependencies within content sequences and reduce semantic drift.

Mechanism: Generative models map hierarchical depth to internal attention hierarchies that govern token relationships.

Counterargument: Flat content may appear simpler, but the absence of structural layers limits inferential resolution and decreases interpretive precision.

Conclusion: Structured depth improves interpretability and long-term reusability by guiding engines through predictable meaning levels.
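The H2→H3→H4 layering described above can be checked mechanically. This minimal sketch assumes headings have already been extracted as `(level, title)` pairs and that the document title is the only H1. The hypothetical `depth_violations` function flags any heading that skips a level, such as an H4 placed directly under an H2.

```python
def depth_violations(headings: list[tuple[int, str]], root_level: int = 1) -> list[str]:
    """Return titles of headings that skip a level (for example H2 followed by H4).

    `headings` holds (level, title) pairs in document order; `root_level` is the
    level of the document title, assumed here to be H1.
    """
    problems: list[str] = []
    previous = root_level
    for level, title in headings:
        if level > previous + 1:  # deeper than one step below the previous heading
            problems.append(title)
        previous = level
    return problems


headings = [
    (2, "Hierarchical Depth"),
    (3, "Depth-Controlled Meaning Distribution"),
    (4, "Depth Signals for Model Reasoning"),
    (2, "Predictability"),
    (4, "Pattern Stability"),
]
print(depth_violations(headings))  # ['Pattern Stability']
```

Moving back up any number of levels is allowed. Only downward jumps of more than one level are flagged, because those leave a layer of the hierarchy undefined.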

Depth-Controlled Meaning Distribution

Depth controls how meaning flows across sections by influencing the interpretive span that models allocate to different content layers. This subsection explains distribution rules for meaning-driven layout and clarifies how depth defines the relationship between conceptual, mechanistic, and contextual units. Its purpose is to outline how hierarchical separation supports consistent meaning reconstruction.

Depth-based distribution enables models to process complex information through structured interpretive windows. Each level of depth introduces a confined semantic unit that models can evaluate independently before integrating it into broader meaning sequences. This reduces overlap between unrelated concepts and supports higher-resolution inference across long-form documents.

Depth structuring also stabilizes interpretive priority. High-level segments establish conceptual scope, while nested units introduce functional details or domain-specific constraints. Models rely on this vertical organization to determine which statements carry structural authority and which details serve as subordinate refinements.
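A common retrieval practice that mirrors this vertical organization is to prefix each chunk with the path of headings above it. A nested unit then carries its conceptual scope with it when it is evaluated on its own. The sketch below assumes `(level, title)` pairs as input. `heading_paths` and `contextualize` are illustrative helpers, and the ` > ` separator is an arbitrary choice.

```python
def heading_paths(headings: list[tuple[int, str]]) -> list[str]:
    """Return, for each heading, the chain of ancestor headings ending with it."""
    stack: list[tuple[int, str]] = []
    paths: list[str] = []
    for level, title in headings:
        # Close every open heading at the same or a deeper level.
        while stack and stack[-1][0] >= level:
            stack.pop()
        stack.append((level, title))
        paths.append(" > ".join(t for _, t in stack))
    return paths


def contextualize(path: str, body: str) -> str:
    """Prefix a chunk with its heading path so it can be read on its own."""
    return f"{path}\n\n{body}"


headings = [
    (2, "Hierarchical Depth"),
    (3, "Depth-Controlled Meaning Distribution"),
    (4, "Depth Signals for Model Reasoning"),
    (3, "Semantic Scaling Through Nested Sections"),
]
paths = heading_paths(headings)
chunk = contextualize(paths[2], "Depth signals help models assign relational weights.")
```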

Depth Signals for Model Reasoning

Depth signals help models assign relational weights to concepts by distinguishing structural prominence and contextual specificity. This subsection describes how structured logic signals reinforce logical boundaries and maintain stable meaning across nested units. Its goal is to ensure that models interpret depth as a consistent and predictable semantic cue.

Depth signals strengthen interpretive clarity by mapping conceptual importance directly onto structural positioning. Models use these positional cues to regulate attention allocation, preventing overemphasis on secondary elements and preserving the intended reasoning path. This improves reliability in tasks such as summarization, segmentation, and structured retrieval.

Depth cues also enhance long-context reasoning by reducing ambiguity in hierarchical transitions. When engines detect stable depth-layer patterns, they reconstruct meaning with fewer interpretive errors, which increases resilience across diverse generative architectures.

Semantic Scaling Through Nested Sections

Nested sections expand a document’s capacity for layered reasoning by distributing content across progressively granular structural units. This subsection introduces scaling logic within deep structural formats and explains how nested organization supports multi-phase interpretation. Its purpose is to clarify how depth increases semantic precision without increasing cognitive complexity.

Semantic scaling allows engines to interpret each nested layer as a functionally distinct meaning zone. High-level statements establish the conceptual baseline, intermediate layers present mechanisms or structured evidence, and lower layers refine, contextualize, or limit the interpretation. This tiered structure enables generative models to maintain coherence across expansions.

Nested structures also facilitate controlled redundancy. When a concept reappears in lower layers, it is interpreted as elaboration rather than repetition, because depth provides a clear functional signal. This enables models to unify dispersed information into a single, consistent semantic representation.

Precision Scaling in Deep Structures

Precision scaling ensures that meaning remains consistent across nested layers by defining parameters for hierarchical refinement, which functions as a critical component within AI Content Structuring. This subsection outlines how multi-tier layout planning increases structural coherence and improves interpretive uniformity. Its objective is to optimize the reliability of meaning across complex documents.

Precision scaling reinforces clarity by ensuring that each nested layer tightens, rather than broadens, interpretive scope. Engines rely on these narrowing patterns to regulate inferential steps and maintain alignment between conceptual claims and their operational expansions. This prevents reasoning drift and preserves logical sequencing across frameworks shaped by AI Content Structuring.

Precision parameters also stabilize interpretive reuse. When models encounter well-calibrated depth sequences, they generate more consistent outputs across summarization, transformation, and retrieval tasks. This makes structured documents more adaptable to future generative environments and strengthens the long-term value of AI Content Structuring.

Mechanism Block — Depth as a Functional Scaling System

| Depth Function | Structural Role | Effect on Interpretation |
|---|---|---|
| Concept anchoring | Establishes top-level meaning | Improves recognition of primary intent |
| Mechanism refinement | Adds operational detail | Enhances multi-step reasoning fidelity |
| Context narrowing | Defines interpretive limits | Reduces drift across nested layers |

These depth functions support consistent reasoning by aligning conceptual expansion with structural placement.

Principle: Content becomes more interpretable for generative engines when its hierarchical depth, segmentation patterns, and conceptual boundaries remain predictable enough for models to map meaning without ambiguity or structural drift.

Predictability as a Core Mechanism for AI Interpretation

Predictability improves AI accuracy by reducing structural variability and guiding engines toward stable interpretive pathways. This section defines predictable patterns and evaluates their interpretative value, drawing on consistency research published by groups such as Berkeley BAIR. Its purpose is to establish predictability as a primary design factor that influences reasoning, extraction, and long-context alignment.

Predictability is the controlled consistency of structural patterns that allow AI systems to infer meaning without ambiguity by reducing variance in syntactic and hierarchical forms.

Claim: Predictable structures improve interpretive precision.

Rationale: Models rely on structural regularity to stabilize parsing.

Mechanism: Predictable sentence architecture reduces interpretive variance.

Counterargument: Excessive predictability may restrict stylistic expression.

Conclusion: Structural predictability enhances comprehension reliability.

Example: A document with consistent hierarchical boundaries, clear definition placement, and stable terminology enables AI systems to segment meaning accurately, increasing the probability that high-confidence blocks appear in generative summaries and retrieval outputs.

Pattern Stability in Structural Design

Pattern stability defines how consistent formats support model comprehension by creating recurring interpretive cues. This subsection describes stable structural configurations and explains how predictable structure design supports reliable meaning extraction. Its purpose is to outline constraints that improve the consistency of model outputs.

Stable structural design allows AI systems to form expectations about how meaning will be distributed across a document. When models can anticipate where definitions, mechanisms, or implications appear, they encounter less structural ambiguity during parsing and align local and global meaning more closely. This leads to more consistent outcomes in summarization, retrieval, and reasoning tasks.

Pattern stability also supports multi-model transferability. Engines using different architectural approaches still benefit from predictable textual structures, because structural similarity reduces ambiguity in token-level relationships. This enables consistent behavior across generative systems with varied inference strategies.

Controlled Variability Within Predictable Formats

Controlled variability balances clarity with the flexibility required for expressive writing, functioning as a complementary layer within AI Content Structuring. This subsection defines acceptable variability thresholds and explains how the principles of a logical formatting framework maintain interpretability while still allowing stylistic refinement. The objective is to preserve structural predictability without eliminating authorial control.

Controlled variability enables writers to adjust syntactic and organizational patterns without disrupting the underlying interpretive cues that generative engines follow. When modifications occur within predictable limits, models retain their ability to map meaning accurately and identify the functional purpose of each structural unit, reinforcing the stability expected in AI Content Structuring.

Variability thresholds also determine how much deviation a document can tolerate before interpretability decreases. Small adjustments in tone or pacing may improve readability for humans while leaving model comprehension intact, provided the skeleton of structural consistency remains unchanged. This balance ensures that documents retain expressive range while still conforming to the structural expectations defined by AI Content Structuring.
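A variability threshold can be made concrete by measuring how far a section's sequence of labeled blocks departs from a template. This sketch uses the Claim, Rationale, Mechanism, Counterargument, Conclusion pattern from this article as the template, with an illustrative tolerance of one edit. The `max_edits` value and the function names are assumptions, and edit distance is only one possible measure of deviation.

```python
from collections.abc import Sequence

# Assumed template: the labeled block pattern used throughout this article.
TEMPLATE = ("Claim", "Rationale", "Mechanism", "Counterargument", "Conclusion")


def edit_distance(a: Sequence[str], b: Sequence[str]) -> int:
    """Levenshtein distance between two label sequences."""
    previous = list(range(len(b) + 1))
    for i, item_a in enumerate(a, start=1):
        current = [i]
        for j, item_b in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,                       # delete item_a
                current[j - 1] + 1,                    # insert item_b
                previous[j - 1] + (item_a != item_b),  # substitute, or keep a match
            ))
        previous = current
    return previous[-1]


def within_tolerance(labels: Sequence[str], template: Sequence[str] = TEMPLATE,
                     max_edits: int = 1) -> bool:
    """True when `labels` is at most `max_edits` edits away from `template`."""
    return edit_distance(labels, template) <= max_edits


print(within_tolerance(["Claim", "Rationale", "Mechanism", "Conclusion"]))  # True: one block omitted
print(within_tolerance(["Claim", "Mechanism", "Rationale", "Counterargument", "Conclusion"]))  # False: two positions differ
```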

Concept Block — Stability Principles for Structural Predictability

- Repetition of structural patterns reinforces model expectations.

- Clear functional labeling promotes stable meaning recognition across segments.

- Reduced syntactic variance decreases misinterpretation in long-context workflows.

These principles strengthen interpretive alignment by giving models predictable cues for mapping semantic intent.
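Stable terminology is one of the easier cues to audit. The sketch below counts surface forms of a canonical term that differ only in case, hyphenation, or spacing. `term_variants` is a hypothetical helper, and it deliberately does not catch synonyms or abbreviations.

```python
import re
from collections import Counter


def term_variants(text: str, canonical: str) -> Counter:
    """Count forms of `canonical` that differ only in case, hyphens, or spacing."""
    words = re.split(r"[\s-]+", canonical.strip())
    # Allow any run of whitespace or hyphens between the words of the term.
    pattern = re.compile(
        r"\b" + r"[\s-]+".join(map(re.escape, words)) + r"\b", re.IGNORECASE
    )
    forms = Counter(match.group(0) for match in pattern.finditer(text))
    forms.pop(canonical, None)  # the canonical form itself is not a variant
    return forms


sample = ("AI Content Structuring defines the approach. "
          "Teams applying ai content-structuring should standardize it. "
          "AI  Content Structuring appears here with a double space.")
variants = term_variants(sample, "AI Content Structuring")
```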

Mechanism Block — Predictability Controls and Their Functions

| Predictability Control | Structural Role | Impact on Interpretation |
|---|---|---|
| Fixed section ordering | Establishes hierarchical expectations | Improves model alignment during multi-step reasoning |
| Uniform sentence patterns | Reduce syntactic ambiguity | Enhance stability in parsing and inference |
| Consistent formatting cues | Maintain interpretive boundaries | Support accurate segmentation across engines |

These controls reinforce the stability of interpretive signals by constraining variability within predictable structural limits.
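A simple length check can approximate the uniform-sentence-pattern control. This sketch treats any sentence much longer than the document's median as a candidate for splitting. The factor of 2 and the naive split on terminal punctuation are illustrative assumptions, not thresholds taken from any engine.

```python
import re
from statistics import median


def long_sentences(text: str, factor: float = 2.0) -> list[str]:
    """Return sentences whose word count exceeds `factor` times the median."""
    # Naive split after ., ! or ? followed by whitespace; abbreviations will confuse it.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    if not sentences:
        return []
    typical = median([len(s.split()) for s in sentences])
    return [s for s in sentences if len(s.split()) > factor * typical]


text = ("Short one here. Another short one. "
        "This sentence is deliberately much longer than the others around it.")
print(long_sentences(text))
# ['This sentence is deliberately much longer than the others around it.']
```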

Structural Coherence as a Driver of Machine Accuracy

Structural coherence aligns meaning across hierarchical units and stabilizes interpretation within generative engines. This section describes how coherence links segments into consistent semantic pathways, drawing on interpretability work produced by the Allen Institute for AI. Its purpose is to define coherence as an operational requirement for machine comprehension.

Structural coherence is the logical alignment of adjacent sections, maintained by predictable sequencing and consistent semantic transitions.

Claim: Structural coherence increases model accuracy in complex reasoning tasks.

Rationale: Logical transitions reduce contextual drift across hierarchical layers.

Mechanism: Engines map coherence patterns onto attention pathways to maintain stable meaning.

Counterargument: Coherence demands strict discipline in structural planning, which may increase authoring constraints.

Conclusion: Coherence is a foundational factor in maintaining interpretive accuracy across generative systems.

Sequential Alignment of Meaning Blocks

Sequential alignment ensures that meaning progresses in controlled increments by organizing content into predictable relational steps, forming one of the operational foundations of AI Content Structuring. This subsection explains how structured idea mapping reinforces interpretability by giving engines stable cues for how one unit leads to the next. Its purpose is to maintain logical progression across sections and reduce ambiguity in transitional reasoning.

Sequential alignment strengthens model comprehension by establishing explicit dependencies between adjacent meaning blocks. When sections follow a consistent interpretive trajectory, engines can anticipate the semantic role of each unit and reconstruct the broader conceptual pathway with greater accuracy. This reduces the likelihood of misaligned inference during long-context processing and aligns directly with the sequencing principles used in AI Content Structuring.

Aligned sequences also help models differentiate between primary and secondary information. When transitions follow a stable directional flow, engines can allocate attention according to structural intent rather than recalculating meaning relationships. This improves the fidelity of reasoning tasks that depend on multi-step interpretation and increases the overall coherence expected in AI Content Structuring.

Alignment Techniques for Multi-Section Documents

Alignment techniques define how sections reinforce each other by controlling the relational spacing between conceptual, mechanistic, and contextual units. This subsection outlines alignment protocols that support reasoning-safe layout and strengthen the distribution of meaning across multi-section documents. Its goal is to maintain semantic uniformity throughout the structure and to meet the alignment standards expected in AI Content Structuring.

Effective alignment relies on the consistent placement of definitions, claims, mechanisms, and implications within predictable zones. This allows engines to associate specific structural functions with specific formatting cues, creating stable interpretive expectations. When structure aligns with function, engines minimize contextual misclassification across segments, reinforcing the organizational logic central to AI Content Structuring.
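Consistent placement can be verified by comparing the order of labeled blocks against an expected sequence. In this sketch the expected order follows the list in the paragraph above: definitions, claims, mechanisms, implications. The label names and the `out_of_place` helper are hypothetical, and labels outside the expected sequence are ignored.

```python
def out_of_place(labels: list[str], expected_order: list[str]) -> list[str]:
    """Return labels that appear after a label expected to come later."""
    rank = {label: i for i, label in enumerate(expected_order)}
    misplaced: list[str] = []
    highest = -1  # rank of the furthest-along label seen so far
    for label in labels:
        if label not in rank:
            continue  # labels outside the expected sequence are ignored
        if rank[label] < highest:
            misplaced.append(label)
        else:
            highest = rank[label]
    return misplaced


EXPECTED = ["Definition", "Claim", "Mechanism", "Implication"]
print(out_of_place(["Definition", "Mechanism", "Claim", "Implication"], EXPECTED))  # ['Claim']
```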

Alignment protocols also govern how cross-references and reiterations occur. When references are introduced at consistent depths and intervals, models interpret them as reinforcement rather than noise, sustaining clarity across extended reasoning sequences. These protocols help maintain the internal coherence that AI Content Structuring depends on for reliable multi-layer interpretation.

Concept Block — Coherence Principles in Sequential Structuring

- Logical transitions preserve meaning relationships between adjacent segments.

- Consistent sequencing reduces ambiguity across hierarchical layers.

- Aligned pathways reinforce model expectations for reasoning continuity.

These principles strengthen multi-step interpretation by anchoring conceptual flow to stable structural markers.

Continuity Signals in Hierarchical Structures

Continuity signals preserve relational meaning between sections by establishing persistent interpretive patterns across structural layers. This subsection describes placement and formatting strategies used to maintain continuity and increase the reliability of long-range reasoning. Its purpose is to support stable interpretation when models process extended documents and to reinforce the continuity standards required in AI Content Structuring.

Continuity is reinforced when related concepts recur at predictable intervals or appear within structurally consistent contexts. Engines identify these recurrence patterns as cohesion signals, allowing them to maintain semantic alignment even when topics expand into multiple segments. This patterned recurrence strengthens the interpretive scaffolding expected in AI Content Structuring.

Continuity signals also govern how transitions behave across structural depth. By controlling the consistency of headings, markers, and framing patterns, authors give engines the cues needed to maintain stable relationships between local and global meaning. These patterned transitions form a continuity layer that integrates cleanly into the broader framework of AI Content Structuring.
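Recurrence intervals can be measured directly. This minimal sketch assumes the sections are available as plain strings in document order, and it uses case-insensitive substring matching. `recurrence_gaps` is an illustrative helper. A large gap only suggests where a conceptual thread may have been dropped; it does not prove that it was.

```python
def recurrence_gaps(sections: list[str], term: str) -> tuple[list[int], list[int]]:
    """Return the indices of sections that mention `term` and the gaps between them."""
    needle = term.lower()
    hits = [i for i, body in enumerate(sections) if needle in body.lower()]
    gaps = [later - earlier for earlier, later in zip(hits, hits[1:])]
    return hits, gaps


sections = [
    "Hierarchical depth encodes semantic proximity.",
    "Boundary signals segment meaning.",
    "Depth signals assign relational weights.",
    "Predictability reduces variance.",
    "Coherence links segments.",
    "Depth-linked cues establish hierarchy.",
]
hits, gaps = recurrence_gaps(sections, "depth")
print(hits, gaps)  # [0, 2, 5] [2, 3]
```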

Continuity-Based Reinforcement in Structured Documents

Reinforcement techniques help systems interpret relationships across layers by standardizing structural depth cues within a document. This subsection discusses controlled structural depth mechanisms that support continuity and minimize interpretive drift. The objective is to ensure that models sustain strong semantic cohesion from top-level ideas to granular operational details.

Structured reinforcement enables models to detect which segments belong to the same conceptual thread, even when they appear at different hierarchical depths. Systems rely on these cues to track thematic continuity and ensure that meaning does not fragment across layers.

Depth-based reinforcement also improves reasoning precision, because engines can distinguish expansion from deviation. When structural depth is applied consistently, engines maintain stable interpretive pathways throughout generative processing.

Mechanism Block — Coherence Reinforcement Processes

| Coherence Factor | Structural Role | Impact on Interpretation |
|---|---|---|
| Transitional consistency | Aligns meaning flow | Reduces contextual drift across layers |
| Recurrent framing | Maintains thematic stability | Supports long-range semantic recall |
| Depth-linked cues | Establish hierarchical relationships | Strengthen multi-level reasoning accuracy |

These coherence mechanisms improve alignment and support reliable interpretation across generative systems.

The Function of Clarity in Generative Interpretation

Clarity reduces interpretive ambiguity and improves retrieval quality across generative models. This section examines clarity as a structural input and demonstrates how explicitness shapes interpretive fidelity, reflecting principles studied at the Carnegie Mellon LTI. Its purpose is to outline clarity rules that enhance meaning extraction and ensure deterministic interpretation across engines.

Clarity refers to the precision, explicitness, and consistency of content elements that enable deterministic interpretation.

Claim: Clarity increases deterministic interpretation across generative engines.

Rationale: Explicit meaning reduces reliance on probabilistic inference.

Mechanism: Generative systems align clear statements with direct semantic mappings.

Counterargument: Over-optimization for clarity may limit expressive range.

Conclusion: Clarity is a necessary input for consistent generative visibility.

Structural Markers That Improve Extraction

Structural markers guide AI extraction processes by providing explicit cues for segmenting meaning units. This subsection introduces placement strategies that enhance extraction reliability and demonstrates how clarity-aligned layout principles strengthen interpretive stability. Its purpose is to define markers that generate predictable extraction outcomes.

Markers such as consistent headings, explicit contextual statements, and stable formatting patterns allow engines to isolate relevant information without recalculating structural intent. When these markers appear predictably, retrieval workflows become more accurate, and models generate fewer false associations between unrelated segments.

Clarity-oriented markers also support multi-engine consistency. Since different generative systems use distinct attention mechanisms, cross-platform clarity emerges when content adheres to cues recognized by all major architectures. This improves the replicability of extraction results.

Extraction-Safe Formatting Controls

Extraction-safe formats ensure stable meaning outputs by organizing text into layered units that reflect semantic depth. This subsection outlines controls for structured topic layering that reinforce clarity and minimize interpretive variance. The goal is to produce consistent extraction patterns across models.

Formatting controls standardize the appearance and function of structural cues so that models consistently interpret them as segmentation boundaries. When engines identify headings, spacing conventions, or boundary markers as reliable signals, they reconstruct meaning more accurately during retrieval and summarization.

Layered formatting also strengthens internal content mapping. Topic layers that follow predictable depth patterns help engines differentiate high-level statements from detailed mechanisms or contextual qualifiers, ensuring that meaning remains internally coherent.

Concept Block — Clarity Markers for Reliable Extraction

- Explicit section labels define unambiguous functional roles.

- Stable formatting patterns prevent accidental interpretation drift.

- Clear contextual statements reduce reliance on probabilistic inference.

These clarity markers help engines assign meaning deterministically across varied interpretive tasks.
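Explicit labels like these are straightforward to extract. The sketch below assumes each label appears at the start of a line followed by a colon, as in this article's Claim, Rationale, and Mechanism lines. The label list and the `labeled_blocks` helper are assumptions for illustration.

```python
import re

# Assumed label set, taken from the labels this article uses.
KNOWN_LABELS = ("Definition", "Claim", "Rationale", "Mechanism",
                "Counterargument", "Conclusion", "Principle", "Example")
LABEL_LINE = re.compile(r"^(%s):\s*(.+)$" % "|".join(KNOWN_LABELS))


def labeled_blocks(text: str) -> list[tuple[str, str]]:
    """Return (label, statement) pairs for lines that open with a known label."""
    pairs = []
    for line in text.splitlines():
        match = LABEL_LINE.match(line.strip())
        if match:
            pairs.append((match.group(1), match.group(2)))
    return pairs


sample = """Claim: Clarity increases deterministic interpretation across generative engines.

Rationale: Explicit meaning reduces reliance on probabilistic inference.
This unlabeled line is ignored."""
blocks = labeled_blocks(sample)
```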

Reducing Ambiguity Through Explicit Framing

Explicit framing reduces ambiguity by defining contextual parameters that guide the interpretive scope. This subsection describes strategies for structuring meaning boundaries and demonstrates how stable content arrangement improves consistency across generative outputs. Its purpose is to stabilize contextual interpretation in complex documents.

Framing helps engines identify the thematic purpose of each structural unit before processing its details. When framing is precise, models can classify segments into conceptual, mechanistic, or contextual layers with greater accuracy, reducing cross-segment misalignment.

Explicit framing also mitigates errors in long-context reasoning. Models frequently rely on framing sentences to determine how new information should interact with previous content. Stable framing structures give engines the continuity needed to maintain coherence across large contexts.

Contextual Boundary Definition Techniques

Boundary definition improves interpretive consistency by establishing the limits of each conceptual or operational unit. This subsection outlines methods to frame context windows using layout coherence rules that reinforce structural clarity. Its objective is to support coherent extraction workflows that perform consistently across engines.

Context boundaries help models distinguish where a topic begins, evolves, or shifts. Engines use boundary cues to avoid blending adjacent meaning units, preventing unintended semantic overlap during retrieval and summarization tasks.

Boundary definition techniques also support precise context-windowing. When structural boundaries follow predictable patterns, models can compress or expand interpretive spans without distorting meaning, which improves downstream classification, reasoning, and search-based extraction.
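Context-windowing along structural boundaries can be sketched as greedy packing of whole paragraphs under a word budget. This sketch assumes that one section's paragraphs are passed in at a time, so windows never cross a section boundary. Word counts stand in for tokens, and `pack_windows` and `max_words` are illustrative names.

```python
def pack_windows(paragraphs: list[str], max_words: int) -> list[list[str]]:
    """Greedily group whole paragraphs into windows of at most `max_words` words.

    Paragraphs are never split; one longer than the budget gets a window of its own.
    """
    windows: list[list[str]] = []
    current: list[str] = []
    count = 0
    for para in paragraphs:
        words = len(para.split())
        if current and count + words > max_words:
            windows.append(current)  # close the window before it overflows
            current, count = [], 0
        current.append(para)
        count += words
    if current:
        windows.append(current)
    return windows


windows = pack_windows(["a b c", "d e", "f g h i", "j"], max_words=5)
print(windows)  # [['a b c', 'd e'], ['f g h i', 'j']]
```

Never splitting a paragraph trades some packing efficiency for the guarantee that each meaning unit stays intact inside one window.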

Mechanism Block — Clarity Reinforcement Strategies

| Clarity Factor | Structural Role | Impact on Interpretation |
|---|---|---|
| Explicit framing | Defines thematic boundaries | Improves contextual stability across segments |
| Structured markers | Guide segmentation | Enhance retrieval consistency |
| Layered formatting | Establishes interpretive depth | Increases precision in extraction workflows |

These clarity mechanisms reinforce deterministic interpretation and reduce ambiguity across generative engines.

Structural Logic as a Basis for Model Reasoning

Structural logic provides engines with explicit reasoning cues that support consistent interpretation. This section defines logical structuring principles and reflects analytical practices studied at the Oxford Internet Institute. Its purpose is to explain how structural logic influences internal model behavior and strengthens the reliability of meaning extraction.

Structural logic is the orderly organization of claims, evidence, and transitions that guides AI models through meaning sequences.

Claim: Structural logic improves reasoning fidelity across generative models.

Rationale: Logical relationships guide contextual mapping and reduce uncertainty.

Mechanism: Models interpret logical sequences through structural markers and content dependencies.

Counterargument: Poorly aligned logic can distort inference patterns.

Conclusion: Strong structural logic enhances model reasoning and inference accuracy.

Logic-Guided Structuring Patterns

Logic-guided structures provide predictable meaning flows by organizing content into coherent and sequentially aligned reasoning units. This subsection introduces alignment rules for logical consistency and explains how content logic alignment supports interpretive reliability. Its purpose is to ensure structural coherence across segments.

When logic is embedded in the structure rather than inferred from the text alone, models can interpret reasoning steps without reconstructing intent from ambiguous transitions. This reduces the interpretive burden placed on generative architectures and increases reproducibility across different models or retrieval environments.

Logic-guided patterns also help differentiate between explanatory content, evidential statements, and contextual qualifiers. When these functions appear in predictable configurations, engines consistently identify internal roles and maintain coherent reasoning across extended documents.

Logical Mapping in Multi-Layer Sections

Mapping techniques clarify internal relationships by organizing logical dependencies across hierarchical layers. This subsection explains how structured clarity mapping enhances engine interpretation by defining the pathways through which reasoning flows. Its goal is to improve logical transparency and stabilize interpretation.

When hierarchical layers map to consistent logical segments, engines identify how claims relate to supporting mechanisms, counterexamples, or implications. This mapping reduces semantic noise and allows models to classify meaning according to the functional role of each segment.

Logical mapping also strengthens cross-layer continuity. Models rely on these mapped connections to maintain a stable reasoning trajectory when transitioning from summaries to mechanisms, or from definitions to applications.
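One way to make cross-layer mapping explicit is to record, for every section, the chain of parent headings it sits under. The sketch below assumes headings are already available as `(depth, title)` pairs with unique titles. The depths assigned to this article's headings and the helper name `heading_paths` are assumptions for illustration.

```python
def heading_paths(headings: list[tuple[int, str]]) -> dict[str, list[str]]:
    """Return, for each heading title, the list of ancestor titles above it.

    Assumes titles are unique; a repeated title would overwrite its earlier entry.
    """
    stack: list[tuple[int, str]] = []  # currently open ancestors, shallowest first
    paths: dict[str, list[str]] = {}
    for depth, title in headings:
        # Close any open sections at the same or a deeper level.
        while stack and stack[-1][0] >= depth:
            stack.pop()
        paths[title] = [t for _, t in stack]
        stack.append((depth, title))
    return paths


# Assumed depths: 2 = H2, 3 = H3, 4 = H4.
outline = [
    (2, "Structural Logic as a Basis for Model Reasoning"),
    (3, "Logic-Guided Structuring Patterns"),
    (4, "Logical Mapping in Multi-Layer Sections"),
    (3, "Reasoning Chains in Multi-Section Documents"),
]
for title, ancestors in heading_paths(outline).items():
    print(" > ".join(ancestors + [title]))
```

Each printed path is the reasoning trajectory a reader, or a model, follows to reach that section from the top of the hierarchy.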

Concept Block — Logic-Driven Structural Principles

- Explicit ordering defines how reasoning progresses.

- Predictable transitions reduce interpretive variance.

- Mapped dependencies support multi-step inference.

These principles reinforce clarity in reasoning pathways recognized by generative engines.

Reasoning Chains in Multi-Section Documents

Reasoning chains describe how models process sequential logic across structural layers by following predictable interpretive cues. This subsection defines layout-driven reading flow and outlines how reasoning controls influence the stability of model inference. Its purpose is to structure inference pathways in a way that supports deterministic interpretation and to reflect the sequential logic standards established in AI Content Structuring.

Models interpret reasoning chains by following structural signals that mark claims, supporting evidence, and transitions. When these signals appear consistently, engines associate each structural element with a specific interpretive function, strengthening internal reasoning models.

Reasoning chains also improve the granularity of inference. Engines differentiate between primary argumentative steps and secondary elaborations when structural cues clearly indicate their relative importance. This supports more accurate reconstruction of the author’s intended logic.

Interpretation-Driven Layout Controls

Parsing-safe structure enhances reading stability by ensuring that models receive ordered interpretive cues throughout multi-layer documents. This subsection explains controls that support deterministic interpretation and describes how engines benefit from structural formats designed to guide reasoning. The objective is to reinforce structural guidance in complex contexts.

Interpretation-driven controls include consistent depth markers, explicit topic boundaries, and predictable placement of reasoning components. These controls minimize the risk of interpretive drift when models process long or information-dense documents.
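Consistent depth markers can be checked mechanically. This minimal sketch assumes Markdown ATX headings (`##`, `###`, `####`). The rule it enforces, that a heading may go at most one level deeper than the one before it, is an assumption that matches the H2, H3, and H4 depth pattern named in this article's checklist. `find_depth_jumps` is an illustrative name.

```python
import re

# Markdown ATX heading: 1-6 '#' characters, whitespace, then the title.
HEADING = re.compile(r"^(#{1,6})\s+(.+?)\s*$")


def find_depth_jumps(markdown: str) -> list[tuple[int, str]]:
    """Return (line_number, title) for headings that skip a level."""
    problems: list[tuple[int, str]] = []
    previous_depth = None
    for number, line in enumerate(markdown.splitlines(), start=1):
        match = HEADING.match(line)
        if not match:
            continue
        depth = len(match.group(1))
        # Going deeper by more than one level (e.g. H2 -> H4) breaks the hierarchy.
        if previous_depth is not None and depth > previous_depth + 1:
            problems.append((number, match.group(2)))
        previous_depth = depth
    return problems


doc = """## Structural Logic as a Basis for Model Reasoning
#### Logical Mapping in Multi-Layer Sections
### Reasoning Chains in Multi-Section Documents
"""
print(find_depth_jumps(doc))  # [(2, 'Logical Mapping in Multi-Layer Sections')]
```

Moving back up any number of levels is allowed; only skipped levels on the way down are reported.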

Stable controls also facilitate cross-model consistency. When layout rules follow predictable structures, engines with different architectures arrive at similar interpretations, which improves the robustness of retrieval, summarization, and reasoning tasks.

Mechanism Block — Structural Logic Mechanisms

| Logical Factor | Structural Role | Impact on Model Reasoning |
|---|---|---|
| Ordered reasoning flow | Defines sequence of inference | Improves fidelity of logical interpretation |
| Hierarchical mapping | Aligns layers with conceptual depth | Stabilizes multi-step reasoning |
| Defined transitions | Clarify relational pathways | Reduce uncertainty in contextual mapping |

These mechanisms strengthen the logical foundation that generative engines rely on during interpretation.

Operational Structure for Multi-Model Generative Visibility

Operational structure defines how content interacts with multiple generative engines that rely on different internal reasoning systems. This section describes multi-model compatibility factors and operational structuring rules, integrating principles grounded in research from the Harvard Data Science Initiative. Its purpose is to outline patterns that enhance performance across heterogeneous AI environments and ensure consistent interpretability.

Operational structure is the set of layout, hierarchy, and sequencing patterns that ensure uniform interpretability across different generative systems.

Claim: Multi-model operational structure increases cross-system interpretability.

Rationale: Engines with distinct architectures require consistent input structures to ensure unified interpretation.

Mechanism: Structured elements act as standard alignment signals across models with varied attention mechanisms.

Counterargument: Some engines may optimize for alternative structural assumptions.

Conclusion: Designing for operational compatibility improves generative visibility across platforms.

Cross-Engine Structure Normalization

Normalization aligns structural elements to reduce variability across generative outputs and ensures that meaning remains stable regardless of model architecture. This subsection defines normalization rules for structured format engineering and explains how stable structuring helps engines converge on consistent interpretations. Its purpose is to ensure reliable interpretation among systems with different processing strategies.

Normalization reduces the degree to which each engine must reinterpret structural intent. When headings, transitions, and content layers follow predictable conventions, engines interpret them as shared semantic signals, which reduces the inference needed to reconstruct meaning. This improves reliability in multi-model evaluation contexts.

Normalized structures also reduce discrepancies in downstream tasks. Retrieval-based engines, summarizers, and generative models often rely on distinct heuristics; normalized inputs allow them to reference the same structural anchors, producing more aligned outputs across platforms.
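Normalization can start with something as simple as rewriting inconsistent structural labels into one canonical spelling before publication. The sketch below assumes the five reasoning labels used in this article and a few invented variants (`CLAIM -`, `rationale :`). The helper `normalize_labels` is hypothetical.

```python
import re

# Assumed canonical labels, taken from this article's reasoning blocks.
CANONICAL = ("Claim", "Rationale", "Mechanism", "Counterargument", "Conclusion")
# A known label at line start, in any case, followed by ':' or by a spaced '-'.
# Requiring whitespace before '-' avoids rewriting words like "Conclusion-driven".
LABEL_LINE = re.compile(
    r"^\s*(" + "|".join(CANONICAL) + r")(?:\s*:|\s+-)\s*(.*)$",
    re.IGNORECASE,
)


def normalize_labels(text: str) -> str:
    """Rewrite label variants such as 'CLAIM -' or 'claim :' to 'Claim:'."""
    out = []
    for line in text.splitlines():
        match = LABEL_LINE.match(line)
        if match:
            label = match.group(1).capitalize()
            line = f"{label}: {match.group(2)}"
        out.append(line)
    return "\n".join(out)


messy = "CLAIM - Normalized headers reduce variance.\n  rationale : Engines share anchors."
print(normalize_labels(messy))
```

Applying one canonical form at authoring time means every engine sees the same anchor text, rather than each one having to tolerate variants.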

Structural Equivalence Patterns for Multi-Model Inputs

Structural equivalence ensures meaning stability by defining uniform patterns that lead engines toward consistent interpretive outcomes. This subsection introduces equivalence rules for meaning-stable layout and explains how consistent structural cues support shared inferential pathways. The goal is to keep semantic outputs consistent across varied systems and to reinforce the uniformity principles central to AI Content Structuring.

Equivalence patterns clarify how conceptual, mechanistic, and contextual layers should appear in consistent depth positions. When the hierarchical structure mirrors a fixed logical architecture, engines anchor meaning to the same structural markers, reducing divergence across models and strengthening the structural consistency expected in AI Content Structuring.

These patterns also support interpretive resilience. Engines with different tokenization, embedding, or attention strategies are more likely to align on meaning when structure remains predictably organized around standardized cues. This predictability enhances cross-model consistency and supports long-range interpretive reliability.

Concept Block — Operational Stability Factors

- Normalized headers reduce architectural variability.

- Equivalent depth patterns reinforce shared interpretive boundaries.

- Predictable sequencing improves cross-engine consistency.

These stability factors help engines interpret content uniformly even when their internal mechanisms differ.

Engine-Agnostic Sequencing Patterns

Engine-agnostic sequencing reduces dependence on engine-specific heuristics by defining ordering conventions that function reliably across architectures. This subsection outlines standard sequencing patterns that support tiered content architecture and prioritize interpretive stability. Its purpose is to produce structure that generalizes effectively across systems.

Sequencing that follows universal ordering principles helps engines construct meaning without relying on model-specific assumptions. When the sequence of claims, context, mechanisms, and implications appears consistently, engines can interpret reasoning chains regardless of their internal structure.

Engine-agnostic sequencing also improves reproducibility. Models trained on diverse datasets tend to develop different expectations about where meaning appears; standardized sequencing allows them to derive similar outcomes despite these differences.

Multi-Model Sequencing Controls

Sequencing controls define universal ordering principles that ensure structured narrative shaping remains stable across engines. This subsection details controls that support consistent narrative construction and reduce interpretive drift across diverse architectures. The objective is to enhance alignment among generative, retrieval, and hybrid systems.

Controls such as consistently placing definitions before mechanisms, mechanisms before implications, and implications before microcases strengthen interpretive alignment by creating predictable cognitive pathways for engines. This reduces variability in how models reconstruct reasoning from text.
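The ordering rule described above (definitions, then mechanisms, then implications, then microcases) can be expressed as a rank table and checked against a document's sequence of section roles. This is a sketch that assumes each section has already been tagged with one of these four roles. The role tags and the function `order_violations` are illustrative, and an unknown tag raises `KeyError`.

```python
# Assumed stage order, from the sequencing rule described in the text.
ROLE_ORDER = {"definition": 0, "mechanism": 1, "implication": 2, "microcase": 3}


def order_violations(roles: list[str]) -> list[tuple[int, str, str]]:
    """Return (index, role, latest_role_seen) for each role that appears
    after a later-stage role has already occurred."""
    violations = []
    latest = None  # the latest-stage role seen so far
    for index, role in enumerate(roles):
        if latest is not None and ROLE_ORDER[role] < ROLE_ORDER[latest]:
            violations.append((index, role, latest))
        elif latest is None or ROLE_ORDER[role] > ROLE_ORDER[latest]:
            latest = role
    return violations


print(order_violations(["definition", "mechanism", "implication", "microcase"]))  # []
print(order_violations(["definition", "implication", "mechanism"]))  # [(2, 'mechanism', 'implication')]
```

Repeating a role (for example, two mechanism sections in a row) is allowed. Only a step backward in the sequence is flagged.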

Universal sequencing also reinforces cross-system parity. When engines process content organized according to identical ordering logic, they converge on similar interpretive states even when their processing layers differ fundamentally.

Mechanism Block — Multi-Model Operational Processes

| Operational Factor | Structural Role | Cross-Model Impact |
|---|---|---|
| Normalization rules | Reduce structural variance | Improve interpretive alignment across engines |
| Equivalence patterns | Stabilize meaning boundaries | Support consistent semantic reconstruction |
| Universal sequencing | Standardizes reasoning order | Enhances reliability across heterogeneous systems |

These operational processes promote consistent interpretation and strengthen generative visibility across diverse AI architectures.

Extraction-Safe Structuring for Search and Retrieval Models

Extraction-safe structuring ensures that retrieval models can isolate, segment, and reuse content without loss of semantic fidelity. This section describes structural patterns that support indexing and key-point generation, grounded in insights from the Johns Hopkins CLSP Lab. Its purpose is to define extraction stability across systems and ensure that meaning remains consistent during retrieval operations.

Extraction-safe structuring is the design of content elements so they can be accurately segmented and retrieved by search or generative indexing systems.

Claim: Extraction-safe structuring improves the consistency of retrieval outputs.

Rationale: Systems depend on predictable structural boundaries when isolating meaning fragments.

Mechanism: Explicit segmentation enables accurate extraction of claims, definitions, and mechanisms.

Counterargument: Overly rigid structures may reduce narrative flexibility.

Conclusion: Extraction safety enhances retrieval scalability and semantic precision.

Layout Constraints for Extraction Accuracy

Layout constraints guide extraction workflows by defining how meaning is divided across structural units. This subsection introduces constraints that support precision in segmenting meaning and describes AI-focused layout rules that improve system reliability. Its purpose is to define protocols that enable retrieval models to extract content without structural misinterpretation and to align these constraints with the broader principles of AI Content Structuring.

Extraction accuracy depends on consistent section boundaries, predictable sentence lengths, and stable formatting patterns. When models can detect these structural markers, they extract meaning blocks with greater precision and avoid blending segments that serve different interpretive functions.
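Extraction that respects section boundaries can be approximated by splitting text only at headings, so that no chunk blends content from two sections. This minimal sketch assumes Markdown ATX headings mark the boundaries. `split_into_units` is an illustrative helper, not the segmentation logic of any particular retrieval system.

```python
import re

# Markdown ATX heading line; group 1 is the title.
HEADING = re.compile(r"^#{1,6}\s+(.+?)\s*$")


def split_into_units(markdown: str) -> list[dict[str, str]]:
    """Split a document into {'heading', 'body'} units at heading lines."""
    units: list[dict[str, str]] = []
    heading = ""
    body: list[str] = []
    for line in markdown.splitlines():
        match = HEADING.match(line)
        if match:
            # A new heading closes the current unit; never merge across it.
            if heading or any(b.strip() for b in body):
                units.append({"heading": heading, "body": "\n".join(body).strip()})
            heading, body = match.group(1), []
        else:
            body.append(line)
    # Flush the final unit.
    if heading or any(b.strip() for b in body):
        units.append({"heading": heading, "body": "\n".join(body).strip()})
    return units


doc = """## Boundary Patterns for Retrieval Systems
Boundary patterns define segmentation points.

## Structural Cues for Search Engines
Structural cues highlight semantic priority levels.
"""
for unit in split_into_units(doc):
    print(unit["heading"], "->", unit["body"])
```

Any text before the first heading becomes a unit with an empty heading, so nothing is silently dropped.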

Constraints also support multi-engine compatibility. Different retrieval systems use distinct heuristics for segmentation, but predictable layouts ensure that all engines interpret structural boundaries in consistent ways, reinforcing the stability that AI Content Structuring aims to standardize.

Boundary Patterns for Retrieval Systems

Boundary patterns define segmentation points that engines use to determine where one meaning unit ends and another begins. This subsection describes patterns that strengthen extraction fidelity through logic-aligned section design. The goal is to maintain stable unit boundaries that retrieval systems can process consistently.

Boundary patterns rely on clear placement of definitions, transitions, and reasoning components. When boundaries appear in predictable positions relative to these units, engines can isolate the intended segments without having to infer the underlying logic.

Consistent boundary patterns also reduce the risk of extraction drift during large-scale indexing operations. Search models rely on these structural anchors to maintain alignment between queries and content segments.

Concept Block — Extraction Stability Factors

- Predictable boundaries improve segmentation precision.

- Uniform layout patterns reduce interpretive divergence.

- Explicit markers enable multi-engine extraction consistency.

These factors ensure stable extraction workflows across search and retrieval models.

Structural Cues for Search Engines

Structural cues highlight semantic priority levels by signaling relationships between content elements. This subsection describes cue placement that supports efficient indexing and explains how structural depth planning enhances retrieval visibility. Its purpose is to improve discoverability and strengthen search alignment.

Search engines rely on structural cues to classify text into topical units. When cues such as headings, micro-intros, and depth markers appear consistently, models identify priority information without recalculating contextual intent.

Well-positioned cues also support ranking stability. Engines that use semantic scoring methods gain higher confidence when structural cues indicate the importance and relevance of each unit.

Priority Mapping in Retrieval-Oriented Structuring

Priority mapping reinforces extraction order by establishing a consistent hierarchy for how information should be interpreted. This subsection outlines structured clarity building strategies that strengthen retrieval system visibility. The objective is to support reliable priority assignment during indexing and semantic ranking.

Priority mapping helps search systems distinguish between critical claims, supporting mechanisms, and contextual qualifiers. Engines assign higher relevance to segments that follow consistent placement patterns, increasing retrieval accuracy for targeted queries.
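Priority mapping can be illustrated by attaching an explicit role to each segment and ordering on that role before any relevance score is applied. The weights below are invented for illustration. Search engines do not publish such a table, and `rank_segments` is a hypothetical helper.

```python
# Hypothetical role weights: higher means more central to the document.
ROLE_WEIGHT = {"claim": 3, "mechanism": 2, "qualifier": 1}


def rank_segments(segments: list[tuple[str, str]]) -> list[str]:
    """Order (role, text) segments so primary claims precede secondary detail.

    sorted() is stable even with reverse=True, so segments that share a role
    keep their original document order.
    """
    ordered = sorted(segments, key=lambda seg: ROLE_WEIGHT[seg[0]], reverse=True)
    return [text for _, text in ordered]


segments = [
    ("qualifier", "Applies only to indexed pages."),
    ("claim", "Priority mapping improves retrieval accuracy."),
    ("mechanism", "Segments are weighted by their structural role."),
]
print(rank_segments(segments))
```

Because the role comes from the document's own structure, a secondary qualifier cannot outrank the claim it qualifies, which is the noise reduction the next paragraph describes.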

Structured priority layers also reduce noise. When engines recognize the intended hierarchy through structural signals, they avoid elevating secondary details above primary content in search results.

Mechanism Block — Extraction-Safe Processes

| Extraction Factor | Structural Role | Retrieval Impact |
|---|---|---|
| Defined boundaries | Segment meaningful units | Increase extraction precision |
| Structural cues | Guide indexing workflows | Improve discoverability |
| Priority mapping | Aligns interpretive hierarchy | Strengthens semantic ranking |

These processes enhance extraction reliability across retrieval and generative indexing systems.

Progressive Structuring for High-Fidelity AI Reasoning

Progressive structuring increases the precision of generative inferences by controlling how meaning is layered across conceptual and operational levels. This section explains progressive layering and reasoning scaffolds, drawing on principles explored in research programs at the Vector Institute in Toronto. Its purpose is to define how structure supports reasoning depth and strengthens interpretive accuracy in generative systems.

Progressive structuring is the incremental layering of meaning in controlled segments to support multi-step reasoning.

Claim: Progressive structuring improves the depth and resolution of generative reasoning.

Rationale: Reasoning requires sequential layers of meaning calibrated to stable structural boundaries.

Mechanism: Models map progressive structure onto internal multi-step inference chains.

Counterargument: Incorrect progression sequences can distort reasoning quality.

Conclusion: Progressive structuring enhances reasoning precision and interpretability.

Segmented Reasoning Layers

Reasoning layers provide models with incremental logic steps that form a coherent progression across the structural hierarchy. This subsection explains segmentation strategies and demonstrates how interpretation-ready layout principles guide engines through controlled sequences of meaning. Its purpose is to construct reliable reasoning frameworks that support multi-step inference and align these frameworks with the broader objectives of AI Content Structuring.

Segmented reasoning layers function as interpretive intervals that divide complex concepts into consistent, digestible components. When each layer contains a clear logical role—definition, expansion, mechanism, implication—models identify the intended progression without reconstructing implicit logic from unstructured text.

These layers also support reasoning resilience. When generative systems process long documents, segmented structures prevent compression-related errors by preserving the logical chain across multiple reasoning steps, ensuring that inference quality remains stable across extended contexts.

Controlled Inference Sequencing

Inference sequencing defines the order of interpretive steps by arranging structural elements into a coherent reasoning pathway. This subsection describes structured page architecture rules that support controlled sequencing and ensure that engines move through content in a predictable, logically aligned manner. The goal is to enhance inference stability across complex generative tasks.

Controlled sequencing strengthens interpretive discipline by guiding the model from conceptual foundations to operational mechanics. When inference steps are consistently arranged, engines reliably differentiate introduction, analysis, demonstration, and conclusion segments, reducing the likelihood of misaligned reasoning jumps.

Stable sequencing also benefits cross-model consistency. Engines with different tokenization and attention mechanisms converge on similar readings when the structural order remains predictable and formally defined.

Concept Block — Progressive Layering Principles

- Each reasoning layer performs a single logical function.

- Sequential ordering reinforces interpretive clarity.

- Depth controls prevent drift in multi-step reasoning.

These principles form the foundation of progressive reasoning sequences recognized by generative engines.

Progressive Meaning Amplification

Meaning amplification reinforces hierarchical logic by increasing semantic precision as content transitions from high-level concepts to detailed mechanisms. This subsection introduces reinforcement techniques that operate across progressive layers and explains how content section alignment preserves meaning stability. Its purpose is to stabilize interpretation across branching reasoning structures.

Progressive amplification works by deepening meaning at each structural layer without introducing contextual noise. Engines detect these amplification cues and refine internal representations accordingly, improving the resolution of inferential tasks.

Amplification also supports interpretive scalability. When models process extended or technical documents, layered reinforcement helps retain coherence by guiding attention toward structurally emphasized meaning blocks.

Amplification Controls in Structural Layers

Amplification controls maintain clarity across expansions by defining how meaning scales relative to structural boundaries. This subsection outlines alignment-based content modeling strategies that ensure each expansion step enhances interpretive precision rather than dispersing semantic focus. The objective is to strengthen reasoning cohesion through stable layering methods.

Controlled scaling ensures that deeper sections expand upon, rather than diverge from, previous layers. Engines rely on these cues to maintain a coherent internal logic tree that mirrors the structure of the document.
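Whether a deeper section expands on its parent or drifts away from it can be roughly approximated with lexical overlap. The sketch below uses Jaccard similarity over lowercase word sets as a crude, assumed proxy. Engines rely on learned representations rather than word overlap. The 0.1 threshold and the name `diverges_from_parent` are arbitrary illustrative choices.

```python
import re


def word_set(text: str) -> set[str]:
    """Lowercase alphabetic tokens; a deliberately simple tokenizer."""
    return set(re.findall(r"[a-z]+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Size of the intersection divided by size of the union (0.0 to 1.0)."""
    return len(a & b) / len(a | b) if a | b else 0.0


def diverges_from_parent(parent: str, child: str, threshold: float = 0.1) -> bool:
    """Flag a child section whose vocabulary barely overlaps its parent's."""
    return jaccard(word_set(parent), word_set(child)) < threshold


parent = "Amplification controls maintain clarity across expansions."
child_ok = "Amplification controls keep each expansion tied to earlier layers."
child_off = "Our pricing page lists three subscription tiers."
print(diverges_from_parent(parent, child_ok), diverges_from_parent(parent, child_off))
```

A flag from a heuristic like this is a prompt for an editor to review the section, not proof that it misleads a model.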

These controls also mitigate interpretive fragmentation. When expansions follow predictable patterns, engines avoid misclassifying elaborations as new topics, preserving the integrity of the reasoning chain.

Mechanism Block — Progressive Structuring Processes

| Progressive Factor | Structural Role | Reasoning Impact |
|---|---|---|
| Layer segmentation | Defines reasoning intervals | Improves multi-step inference clarity |
| Inference sequencing | Orders conceptual flow | Stabilizes interpretive progression |
| Meaning amplification | Reinforces hierarchical depth | Enhances precision in reasoning tasks |

These processes form the operational basis for high-fidelity reasoning in generative systems.

Structural Interfaces That Enhance Generative Visibility

Structural interfaces determine how content becomes accessible to generative systems that rely on surface-level organization and semantic cues. This section outlines interface patterns that reinforce model visibility, reflecting principles examined in the interpretability work of DeepMind Research. Its purpose is to describe structural inputs that improve retrieval, summarization, and reasoning outputs by giving engines reliable access points for meaning extraction.

A structural interface is the combination of layout, hierarchy, and explicit formatting features that guide generative systems in interpreting surface-level meaning.

Claim: Structural interfaces increase visibility across generative engines.

Rationale: Models depend on interface cues to determine semantic salience and extraction priority.

Mechanism: Structured layouts expose consistent anchors that guide retrieval and summarization pathways.

Counterargument: Interface-focused optimization may overlook deeper reasoning requirements.

Conclusion: Well-formed interfaces enhance visibility while preserving interpretability.

Interface-Level Structuring Signals

Interface signals increase surface-level interpretability by providing models with cues about the relative importance and function of different content elements. This subsection describes signaling patterns used by generative engines and explains how structural relevance rules guide extraction at the interface layer. Its purpose is to define the signals that make content more interpretable during initial model pass-through.

Signals such as consistent heading depth, clear topic boundaries, and stable framing statements create recognizable patterns that engines treat as semantic indicators. These cues allow the model to prioritize certain sections for summarization, reasoning, or retrieval workflows without recalculating meaning relationships from scratch.

Interface-level signals also help models resolve ambiguity during the early stages of token processing. Engines rely on these predictable cues to assign preliminary structural roles before advancing into deeper semantic analysis, which increases overall interpretive stability.

Salience-Oriented Formatting Controls

Formatting controls emphasize content salience by assigning visual and structural prominence to key meaning units. This subsection outlines structured layout strategies that strengthen interface signal clarity. The objective is to enhance generative visibility by elevating essential elements through consistent formatting conventions.

Salience-oriented controls include stable heading spacing, predictable paragraph segmentation, and consistent alignment of definitions and claims. These patterns ensure that engines detect important components of the document immediately upon scanning its structure.

Such formatting controls also reduce interpretive friction. When models repeatedly encounter the same salience cues across documents, they build accurate expectations about where essential meaning units reside, increasing the reliability of extraction across diverse generative tasks.

Concept Block — Interface Visibility Factors

- Consistent interface cues identify high-salience units.

- Structured formatting improves surface-level comprehension.

- Predictable placement of anchors supports stable retrieval.

These factors create a robust interface layer that enhances visibility for generative engines.

Visibility Anchors for Model Extraction

Visibility anchors reinforce extraction consistency by providing models with stable positional references for meaning. This subsection introduces anchor placement techniques that strengthen content architecture signals and improve interface-level visibility. Its purpose is to increase the reliability of anchor-based extraction workflows.

Anchors function as structural checkpoints that models use to classify, reorder, or retrieve meaning units. When anchors appear in predictable section positions—such as near definitions, DRCs, or conceptual introductions—engines interpret them as focal points within the document hierarchy.
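Stable positional references are commonly implemented as heading anchors derived from the heading text. The following sketch generates lowercase, hyphenated IDs and adds a numeric suffix whenever an ID is already taken. It is similar in spirit to, but not a reimplementation of, the anchor rules of any specific Markdown renderer. `slugify` and `anchor_ids` are hypothetical names.

```python
import re


def slugify(title: str) -> str:
    """Lowercase the title, drop punctuation, and join words with hyphens."""
    return "-".join(re.findall(r"[a-z0-9]+", title.lower()))


def anchor_ids(titles: list[str]) -> list[str]:
    """Return one unique, deterministic anchor ID per heading, in order."""
    used: set[str] = set()
    ids = []
    for title in titles:
        base = slugify(title) or "section"
        candidate, n = base, 1
        # Keep incrementing until the ID collides with nothing issued so far.
        while candidate in used:
            candidate = f"{base}-{n}"
            n += 1
        used.add(candidate)
        ids.append(candidate)
    return ids


print(anchor_ids(["Visibility Anchors for Model Extraction", "Checklist:", "Checklist:"]))
```

Because the IDs depend only on heading text and order, they remain the same across re-renders as long as the outline is unchanged, which is what makes them usable as stable references.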

Visibility anchors also support long-range generative reasoning. When engines can reliably identify structural anchors across multiple layers, they maintain semantic coherence during extended inference sequences and reduce the risk of misclassification.

Generative Visibility Reinforcement Techniques

Reinforcement techniques stabilize visibility across multiple engines by strengthening the relationship between structural cues and meaning representation. This subsection defines structural design principles that reinforce generative visibility and support consistent recall across model architectures. The objective is to enhance cross-system presence in complex retrieval and summarization workflows.

Reinforcement includes repeating structural interfaces at consistent intervals, aligning anchor placement with conceptual depth, and maintaining predictable transitions across layered sections. These patterns help engines recognize recurring structural motifs and treat them as reliable extraction guides.

Visibility reinforcement also increases interpretive parity across models. Engines using different embedding strategies or attention mechanisms converge more effectively when structural features act as strong visibility signals.

Mechanism Block — Interface Structuring Processes

| Interface Factor | Structural Role | Visibility Impact |
|---|---|---|
| Structuring signals | Identify surface relevance | Improve model scanning and prioritization |
| Formatting controls | Emphasize salience | Increase extraction clarity |
| Visibility anchors | Provide positional meaning cues | Strengthen retrieval and summarization accuracy |

These interface processes improve generative visibility by ensuring that engines consistently identify, classify, and extract meaning from structured documents.

Consistency Engineering for Long-Term AI Interpretation

Consistency engineering ensures that content retains interpretability across multiple model generations and indexing cycles. This section explains engineering techniques that maintain structural stability over time, incorporating interpretability principles discussed in the context of OpenAI Research. Its purpose is to address long-term AI-driven accessibility and define practices that preserve semantic clarity as models evolve.

Consistency engineering is the practice of designing content structures that remain interpretable as models evolve and update.

Claim: Consistency engineering preserves interpretability across evolving models.

Rationale: Stable structures ensure meaning continuity as engines update their internal mappings.

Mechanism: Models reuse structural anchors and patterns that remain constant across versions.

Counterargument: Excessive structural rigidity may reduce adaptability to new systems.

Conclusion: Consistency engineering balances long-term stability with flexible interpretability.

Stability Rules for Persistent Interpretation

Stability rules ensure that structures remain readable across model generations by codifying patterns that withstand architectural updates. This subsection describes structural comprehension cues that support persistent interpretability and reduce the likelihood of meaning loss over time. Its purpose is to define guidelines that protect content stability across evolving systems.

Stability rules include maintaining consistent heading hierarchies, predictable sequencing formats, and clear conceptual boundaries. These cues allow newer engines to map content according to known structural expectations even when internal interpretive mechanisms have changed.
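Keeping heading hierarchies consistent across revisions is easier when changes to the outline are surfaced explicitly. The sketch below compares two versions of a heading outline with Python's standard `difflib.ndiff`. Representing an outline as a list of indented title strings and naming the helper `outline_changes` are assumptions made for the example.

```python
import difflib


def outline_changes(old: list[str], new: list[str]) -> list[str]:
    """List headings removed ('- ') or added ('+ ') between two outline versions."""
    changes = []
    for line in difflib.ndiff(old, new):
        # ndiff prefixes: '- ' removed, '+ ' added, '  ' unchanged, '? ' hint lines.
        if line.startswith(("- ", "+ ")):
            changes.append(line)
    return changes


# Indentation marks depth in this assumed outline representation.
v1 = ["Stability Rules", "  Persistence Controls", "Semantic Drift Prevention"]
v2 = ["Stability Rules", "  Persistence Controls", "Drift Prevention Techniques"]
print(outline_changes(v1, v2))
```

An empty result means the outline, and therefore the structural expectations built on it, carried over unchanged between versions.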

Such rules also reduce version-based interpretive drift. When engines process content using stable cues, they form internal alignments that remain compatible with future model variants, improving long-term reliability in retrieval and reasoning workflows.

Persistence Controls for Evolving Systems

Persistence controls preserve clarity under evolving interpretive conditions by reinforcing structural elements that models repeatedly reuse. This subsection explains clarity alignment methods that maintain durable comprehension across versions. The objective is to ensure structural continuity as interpretive algorithms advance.

These controls include aligning terminology with consistent structural placements, maintaining predictable sentence roles, and preserving the functional intent of each section. Engines rely on these cues to anchor meaning, preventing erosion during model updates.

Persistence controls also enhance robustness across indexing cycles. As search and generative systems evolve, structurally aligned content retains compatibility with new extraction methods through the consistency of its interpretive signals.

Concept Block — Long-Term Stability Factors

- Predictable structures maintain interpretability across updates.

- Persistent cues reduce version-based semantic drift.

- Aligned terminology reinforces clarity over time.

These stability factors ensure that content remains machine-readable over successive generations of AI systems.

Semantic Drift Prevention Techniques

Semantic drift weakens long-term interpretation by altering meaning boundaries or shifting conceptual associations across model versions. This subsection presents prevention strategies and contextualizes tone modeling for AI as a stability tool. Its purpose is to keep a document's meaning consistent across model versions and to protect its core semantic structures.

Prevention techniques include defining clear conceptual scopes, maintaining consistent tone across sections, and standardizing how operational and conceptual elements appear in the document. These constraints help engines preserve the intended meaning structure throughout updates.

Drift prevention also relies on reinforcing segmentation logic. When boundaries remain stable, engines maintain stronger connections between related elements even as their mapping functions change internally.

Controlled Terminology Stabilization

Terminology stabilization reinforces interpretive consistency by standardizing the vocabulary used to define conceptual and procedural elements. This subsection outlines predictable tone structure strategies that maintain semantic uniformity across updates. The goal is to prevent drift over time by giving engines fixed lexical anchors that support durable interpretation.

Controlled terminology keeps each concept in the same position and with the same functional meaning across document revisions. Models can then associate these stabilized terms with specific structural and conceptual roles, which strengthens consistency across generations.

Terminology stabilization also supports cross-system continuity. As engines adopt new embeddings or reasoning frameworks, stable terminology acts as a reference point, helping preserve meaning even when underlying model architectures change.
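Terminology stabilization can be checked mechanically before publication. The sketch below assumes a hypothetical controlled vocabulary that maps informal variants to canonical terms from this guide's glossary; the variant spellings, `CONTROLLED_TERMS`, and both helper functions are illustrative assumptions, not part of any published tool. It reports variants in order of appearance and can rewrite them to their canonical form.

```python
import re

# Hypothetical controlled vocabulary: informal variant -> canonical glossary term.
CONTROLLED_TERMS = {
    "ai content structure": "AI Content Structuring",
    "content structuring for ai": "AI Content Structuring",
    "atomic para": "Atomic Paragraph",
    "boundary marker": "Boundary Signal",
}


def _variant_pattern(variant: str) -> re.Pattern:
    # Word boundaries stop a variant from matching inside a longer word.
    return re.compile(r"\b" + re.escape(variant) + r"\b", re.IGNORECASE)


def find_variant_terms(text: str, vocabulary: dict[str, str]) -> list[tuple[str, str]]:
    """Return (variant as written, canonical term) pairs, ordered by first appearance."""
    hits = []
    for variant, canonical in vocabulary.items():
        match = _variant_pattern(variant).search(text)
        if match:
            hits.append((match.start(), match.group(0), canonical))
    hits.sort()
    return [(found, canonical) for _, found, canonical in hits]


def normalize_terms(text: str, vocabulary: dict[str, str]) -> str:
    """Replace every variant with its canonical term."""
    for variant, canonical in vocabulary.items():
        text = _variant_pattern(variant).sub(canonical, text)
    return text


draft = "Our ai content structure guide puts a boundary marker before each atomic para."
print(find_variant_terms(draft, CONTROLLED_TERMS))
print(normalize_terms(draft, CONTROLLED_TERMS))
```

Matching is case-insensitive and bounded by word edges, so a variant such as "atomic para" does not fire inside the canonical "Atomic Paragraph".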

Mechanism Block — Consistency Engineering Processes

| Consistency Factor | Structural Role | Long-Term Impact |
|---|---|---|
| Stability rules | Maintain structural predictability | Preserve interpretability across versions |
| Drift prevention | Protect semantic boundaries | Reduce degradation during model updates |
| Terminology stabilization | Anchor meaning consistently | Support cross-system clarity |

These engineering processes establish long-term interpretive resilience across evolving AI systems.

Checklist:

- Does the content define key concepts using stable terminology and explicit boundaries?

- Are sections organized with predictable H2–H3–H4 depth that signals hierarchical meaning?

- Does each paragraph express one clear reasoning unit with a discrete interpretive purpose?

- Are examples or micro-cases used to reinforce abstract structural principles?

- Is ambiguity reduced through consistent transitions and localized clarifications?

- Does the document’s structure support step-by-step interpretation across generative engines?
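Two checklist items, heading depth and one idea per paragraph, lend themselves to a rough automated pass. This is a minimal sketch assuming the content is authored in Markdown with blank lines between blocks; the 2–4 sentence range comes from the Atomic Paragraph definition in the glossary, while the function name and the punctuation-based sentence counter are illustrative assumptions. The counter is naive and will miscount abbreviations such as "e.g.".

```python
import re

HEADING = re.compile(r"^(#{1,6})\s+(.*)$")
# Naive sentence end: terminal punctuation followed by whitespace or end of block.
SENTENCE_END = re.compile(r"[.!?](?=\s|$)")


def check_structure(markdown: str, min_sentences: int = 2, max_sentences: int = 4) -> list[str]:
    """Warn about skipped heading levels and paragraphs outside the sentence range.

    Assumes blocks (headings, paragraphs, lists, tables) are separated by blank lines.
    """
    warnings = []
    previous_level = None
    for block in re.split(r"\n\s*\n", markdown.strip()):
        block = block.strip()
        if not block:
            continue
        heading = HEADING.match(block)
        if heading:
            level = len(heading.group(1))
            if previous_level is not None and level > previous_level + 1:
                warnings.append(
                    f"Heading '{heading.group(2)}' jumps from H{previous_level} to H{level}"
                )
            previous_level = level
            continue
        if block.startswith(("- ", "* ", "|")):
            continue  # lists and tables are not treated as paragraphs
        count = len(SENTENCE_END.findall(block))
        if not min_sentences <= count <= max_sentences:
            warnings.append(f"Paragraph starting '{block[:30]}' has {count} sentence(s)")
    return warnings


sample = """## Overview

Structure helps models. It also helps readers.

#### Details

One sentence only.
"""
for warning in check_structure(sample):
    print(warning)
```

For the sample, which jumps from an H2 directly to an H4 and ends with a one-sentence paragraph, the function returns one warning for each problem. The remaining checklist items, such as whether examples reinforce abstract principles, still require editorial judgment.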

Multi-Layer Structuring for Deterministic AI Output

Multi-layer structuring enables deterministic interpretation by organizing meaning across conceptual, operational, and inferential layers. This section explains multi-layer logic for predictable outputs and connects to principles explored in the interpretability and structured modeling research conducted at the Max Planck Institute for Intelligent Systems. Its purpose is to define deterministic structuring rules that reduce interpretive variance and increase stability across generative engines.

Multi-layer structuring is the arrangement of meaning into distinct conceptual, operational, and inferential layers, sequenced so that each layer guides interpretation in a predictable order.

Claim: Multi-layer structuring increases determinism in generative processing.

Rationale: Deterministic layouts reduce ambiguity in multi-step inference.

Mechanism: Models follow hierarchical signals to reconstruct meaning with minimal variance.

Counterargument: Deterministic design may reduce expressive range in narrative contexts.

Conclusion: Multi-layer structuring produces stable, predictable AI interpretations.
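Because the reasoning chain always uses the same five labels in the same order, it can be generated from a fixed template rather than typed by hand. This minimal sketch uses a hypothetical `ReasoningBlock` class that renders the labels used in this guide and rejects an empty step, so that no part of the chain is silently dropped. The sample content is the block above.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class ReasoningBlock:
    """One reasoning chain; field order defines the rendered order."""

    claim: str
    rationale: str
    mechanism: str
    counterargument: str
    conclusion: str

    def render(self) -> str:
        """Render each step as 'Label: text', separated by blank lines."""
        lines = []
        for step in fields(self):
            text = getattr(self, step.name).strip()
            if not text:
                raise ValueError(f"'{step.name}' must not be empty")
            lines.append(f"{step.name.capitalize()}: {text}")
        return "\n\n".join(lines)


block = ReasoningBlock(
    claim="Multi-layer structuring increases determinism in generative processing.",
    rationale="Deterministic layouts reduce ambiguity in multi-step inference.",
    mechanism="Models follow hierarchical signals to reconstruct meaning with minimal variance.",
    counterargument="Deterministic design may reduce expressive range in narrative contexts.",
    conclusion="Multi-layer structuring produces stable, predictable AI interpretations.",
)
print(block.render())
```

`dataclasses.fields` returns fields in definition order, so every rendered block follows the same Claim-to-Conclusion sequence without a separate ordering list.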

Deterministic Meaning Layers

Meaning layers define deterministic interpretive steps by assigning each structural depth a distinct semantic function. This subsection explains how layered meaning supports predictable outputs and demonstrates how tone quality indicators help engines distinguish between conceptual statements, mechanistic details, and contextual expansions. Its purpose is to define deterministic meaning organization across structural tiers.

Deterministic layers help prevent models from blending unrelated meaning units by giving each layer a fixed interpretive purpose. Engines can use these layered signals to direct attention toward the correct logical regions, so that inference chains are more likely to follow the intended path.

Layer differentiation also enhances interpretive resilience. As generative systems encounter increasingly complex documents, deterministic layering helps maintain coherence by signaling the functional role of each structural block.

Structured Determinism Reinforcement

Determinism reinforcement stabilizes meaning boundaries by strengthening the connection between structural form and semantic function. This subsection outlines structured tone guidelines that maintain consistent interpretive behavior across engines. The objective is to support outputs that remain stable regardless of variations in processing strategies.

Reinforcement techniques involve anchoring claims, definitions, and reasoning elements to particular structural depths so that engines repeatedly associate these roles with specific formatting signals. This reduces the likelihood of internal misclassification.

Consistent reinforcement also supports multi-model determinism. Engines using different tokenization schemes or attention mechanisms can still identify deterministic patterns when structural cues are uniform and repeated.
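Anchoring roles to depths can be expressed as a simple lookup that an editor runs against a document outline. The role names, the role-to-depth assignments in `ROLE_DEPTH`, and the sample outline are hypothetical; a real style guide would substitute its own mapping.

```python
# Hypothetical style-guide mapping from content role to heading depth (H2-H4).
ROLE_DEPTH = {"concept": 2, "mechanism": 3, "example": 4}


def misplaced_blocks(outline: list[tuple[str, str, int]]) -> list[str]:
    """Each outline entry is (title, role, depth). Report entries off their assigned depth."""
    problems = []
    for title, role, depth in outline:
        expected = ROLE_DEPTH.get(role)
        if expected is None:
            problems.append(f"{title}: unknown role '{role}'")
        elif depth != expected:
            problems.append(f"{title}: role '{role}' expected at H{expected}, found H{depth}")
    return problems


outline = [
    ("What segmentation means", "concept", 2),
    ("How boundary signals work", "mechanism", 3),
    ("A worked example", "example", 3),
]
print(misplaced_blocks(outline))
# ["A worked example: role 'example' expected at H4, found H3"]
```

Keeping the mapping in one table means a change to the style guide updates every check at once, which mirrors the idea of repeatedly associating each role with the same formatting signal.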

Concept Block — Deterministic Layering Principles

- Each layer performs an unambiguous semantic function.

- Reinforced boundaries reduce meaning overlap.

- Stable tone patterns improve deterministic reconstruction.

These principles establish the foundation for deterministic generative behavior.

Precision-Based Layer Calibration

Layer calibration ensures balanced meaning distribution by adjusting the density and placement of conceptual, mechanistic, and inferential elements. This subsection explains calibration methods that support comprehension-focused writing and reduce interpretive noise. Its purpose is to maintain precision across layered structures.

Calibration techniques include distributing high-level concepts across upper layers while constraining mechanistic expansions to controlled depths. This allows engines to reconstruct meaning using calibrated attention flows that mirror the structure of the document.

Calibration also reduces internal model variance. When the distribution of meaning is controlled and predictable, engines develop stable mapping patterns, increasing reliability across generative tasks such as summarization, reasoning, and classification.

Calibration Controls for Logical Flow

Calibration controls regulate logical sequencing by defining how meaning transitions occur from one layer to the next. This subsection introduces tone-aware content design principles that maintain interpretive order and prevent structural drift. The goal is to improve interpretive determinism by aligning sequencing with predictable tonal patterns.

Controls include placing transitional statements at fixed positions, standardizing the presentation of mechanisms, and using depth-linked framing to unify meaning connections. These strategies allow engines to anticipate transitions, reducing reliance on probabilistic interpretation.

Layer calibration controls also foster stronger cross-layer coherence. When transitions follow stable patterns, models maintain continuous reasoning flow across conceptual, operational, and inferential layers.

Mechanism Block — Multi-Layer Deterministic Processes

| Deterministic Factor | Structural Role | Generative Impact |
|---|---|---|
| Meaning layering | Define semantic boundaries | Increase interpretive predictability |
| Determinism reinforcement | Stabilize tone and structure | Reduce internal inference variance |
| Calibration controls | Maintain logical sequencing | Strengthen deterministic reasoning |

These processes support deterministic interpretation across diverse generative architectures.

Interpretive Logic of AI-Oriented Content Structures

- Hierarchical signal clarity. Depth-aligned heading layers establish explicit contextual boundaries, allowing generative systems to resolve relationships between concepts without inferential ambiguity.

- Pattern regularity. Recurrent structural templates create predictable segmentation cues, supporting stable interpretation across diverse extraction environments.

- Conceptual unit isolation. Discrete, single-focus paragraphs function as atomic meaning carriers, improving precision during retrieval and synthesis.

- Cross-layer coherence. Alignment between adjacent sections preserves semantic continuity, enabling long-context reasoning across nested structural levels.

- Model-agnostic interpretability. Structures that remain consistent under varying extraction strategies indicate robustness beyond individual generative systems.

Together, these elements describe how content structures are interpreted by AI systems as cohesive semantic frameworks rather than linear instructional sequences.

FAQ: AI Content Structuring

What is AI Content Structuring?

AI Content Structuring is the practice of organizing content into predictable hierarchies, boundaries, and semantic units so generative engines can interpret, segment, and reuse meaning with high accuracy.

How does AI Content Structuring differ from traditional SEO?

Traditional SEO focuses on rankings and link signals, while AI Content Structuring focuses on meaning clarity, structural logic, segmentation precision, and machine-readable patterns that improve generative interpretation.

Why is structuring important for AI interpretation?

Generative engines rely on stable hierarchy, explicit boundaries, and consistent formatting to reconstruct meaning. Well-structured content improves interpretability, summarization accuracy, and retrieval precision.

How do generative engines interpret structured documents?

Engines analyze hierarchical depth, segmentation markers, clarity of definitions, and logical transitions to map internal reasoning pathways, selecting the most coherent and contextually aligned sections.

What role does hierarchy play in AI interpretation?

Hierarchy organizes meaning across conceptual, mechanistic, and inferential layers, allowing models to differentiate core claims from expansions, examples, and implications with less ambiguity.

Why are clarity and explicit definitions essential?

AI models build meaning graphs from explicit definitions, stable terminology, and sentence-level clarity. Precise framing reduces probabilistic inference and supports deterministic interpretation.

How do I begin implementing AI-focused structuring?

Start by standardizing headings, adding consistent depth layers, defining boundaries, rewriting ambiguous segments, and ensuring that each paragraph contains one interpretable idea.

What are best practices for AI-ready content?

Use predictable hierarchy, single-purpose paragraphs, explicit definitions, coherent sequencing, stable terminology, and extraction-safe formatting that aligns with machine reasoning patterns.

How does structuring influence multi-model generative visibility?

Consistent structuring ensures that engines with different architectures interpret content in similar ways, strengthening visibility across ChatGPT, Gemini, Claude, Perplexity, and other systems.

What skills are essential for creating structurally aligned content?

Writers need precision, reasoning discipline, hierarchical planning, factual rigor, and the ability to express meaning in stable, machine-readable patterns.

Glossary: Key Terms in AI Content Structuring

This glossary defines the terminology used throughout this guide, supporting precise interpretation of concepts related to structure, hierarchy, clarity, and machine-readable organization for generative systems.

AI Content Structuring

The organization of content into predictable hierarchies, boundaries, and semantic layers that enable generative systems to interpret and reuse meaning with high fidelity.

Atomic Paragraph

A 2–4 sentence unit containing a single interpretable idea, designed to maintain strict semantic boundaries and prevent ambiguity during model parsing.

Semantic Structure

A hierarchical layout of concepts, mechanisms, and contextual layers that guides AI systems in mapping relationships and reconstructing logical flow across a document.

Structural Coherence

The logical alignment of adjacent sections that creates continuous meaning pathways and reduces contextual drift during generative interpretation.

Boundary Signal

A structural marker—such as a heading, segment break, or explicit framing cue—that helps AI systems separate and classify meaning units with precision.

Hierarchical Depth

A multi-layer structural system using H2–H3–H4 levels to encode semantic proximity, define contextual relevance, and support multi-step reasoning.

Reasoning Chain

A structured sequence of claim, rationale, mechanism, counterargument, and conclusion used to guide generative engines through consistent interpretive logic.

Extraction-Safe Layout

A formatting approach that ensures retrieval models can segment content accurately by using predictable boundaries, consistent patterns, and unambiguous structure.

Structural Predictability

A stability metric describing how consistently content follows fixed patterns, enabling AI systems to anticipate the function of each structural element.

Consistency Engineering

A long-term structuring practice focused on preventing semantic drift, preserving interpretability across model generations, and maintaining stable meaning boundaries as AI systems evolve.