Last Updated on December 20, 2025 by PostUpgrade

How AI Changes the Meaning of Good Writing

Definition: AI-evaluated writing is a form of structured communication in which clarity, stable terminology, explicit reasoning, and verifiable statements allow models to interpret meaning reliably and reuse content across generative systems.

Shift in Evaluation Standards

The relationship between AI and good writing reflects a move from stylistic preference toward structural clarity and factual stability. Modern language systems judge text by consistency, clean segmentation, and verifiable statements rather than by persuasive tone or expressive form. These systems interpret quality through patterns that enable accurate meaning extraction at scale.

Purpose of This Section

This opening establishes how writing quality is now defined through machine interpretation instead of human impression, forming the conceptual base for all subsequent sections.

The Shift in Writing Standards in the AI Era

Principle: Writing becomes higher-quality under AI evaluation when each idea appears in a predictable structure, uses consistent terms, and exposes its reasoning steps so models can interpret boundaries without ambiguity.

Changing Foundations of Quality Assessment

Writing quality has traditionally been judged by human perception, where tone, emphasis, and structural variation shaped interpretation. Modern language systems, however, apply different evaluative requirements and prioritize clarity, segmentation, and factual stability. Evidence from the Stanford NLP Group indicates that models interpret text more reliably when meaning units follow stable patterns and remain clearly separated. As a result, measurable structural criteria now serve as the primary reference point for writing quality, and subjective cues play a reduced role for writers working in analytically driven environments.

Deep Reasoning Chain

Assertion: AI systems redefine high-quality text through structural logic, consistent terminology, and verifiable information.

Reason: Models interpret meaning more accurately when text follows predictable boundaries and expresses each idea explicitly.

Mechanism: The evaluation process identifies local definitions, checks sequential reasoning, and verifies factual anchors across the document.

Counter-case: Some human-centered contexts still depend on stylistic nuance because readers rely on subjective interpretation.

Inference: Structural clarity and factual coherence now determine writing quality in environments shaped by machine evaluation.

Definition Block: What Counts as “Good Writing” Under AI Systems

A new definition is necessary because machine evaluation depends on structural and semantic precision. The definition therefore focuses on clarity, factual grounding, and stable terminology.

In an AI-evaluated context, good writing is text that presents clean meaning units, consistent terminology, and verifiable statements arranged within a predictable structure.

Clarity unit: a self-contained paragraph that conveys one explicit idea.

Semantic stability: consistent use of terminology and references across the text.

Evidence anchor: a factual statement supported by verifiable data from a recognized institution.

Core criteria:

- factual accuracy with explicit references

- uniform terminology across all sections

- logical progression built from declarative statements

- clear separation of ideas into stable blocks

- predictable hierarchy that supports meaning extraction

Principle Block: Core Components of High-Quality Writing for AI Evaluation

Systems evaluate text using predictable patterns, and these patterns must remain stable across a large content cluster. Applying the principles consistently enables reliable interpretation and reduces ambiguity across interconnected articles.

Core principles:

- principle of semantic stability

- principle of micro-definition

- principle of predictable structure

- principle of verifiable statements

- principle of clean boundaries

Example Block: Traditional Craft vs Machine-Evaluated Writing

This section demonstrates how writing criteria diverge when human perception and machine systems use different evaluative logic. The examples highlight structural differences that affect how meaning is extracted and reused.

Human-oriented paragraph:

Writers often emphasize tone, rhythm, and expressive variation to influence how readers perceive a message. They rely on narrative flow, emotional weight, and stylistic cues to create engagement.

Machine-evaluated paragraph:

Models interpret text more effectively when each sentence carries one explicit idea and maintains a clear connection to the surrounding context. Meaning units must remain stable, and terminology must follow consistent patterns to support accurate extraction.

Comparison table:

| Criterion | Human-Oriented Writing | Machine-Evaluated Writing |
|---|---|---|
| Primary focus | Tone and style | Structure and clarity |
| Meaning extraction | Variable | Predictable |
| Terminology | Flexible | Stable |
| Evaluation basis | Reader perception | Structural logic |

Checklist Block: Quick Evaluation Framework for AI-Aligned Writing

Writers need a repeatable tool for assessing whether a text meets machine-evaluation standards.

Checklist:

- predictable section boundaries

- every paragraph expresses one idea

- definitions appear near first use

- claims supported with verifiable facts

- no ambiguity in references

- stable term usage
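Several checklist items can be approximated mechanically before a human review. The Python sketch below is a hypothetical heuristic. It does not describe how any AI system scores text.

- It splits a draft into paragraphs on blank lines.
- It treats a paragraph with more than `max_sentences` sentences as likely to carry more than one idea.
- It flags paragraphs longer than `max_words`.

The thresholds and the names `check_paragraphs` and `ParagraphIssue` are assumptions chosen for illustration.

```python
import re
from dataclasses import dataclass

# Hypothetical thresholds chosen for illustration; tune them to your own style guide.
MAX_SENTENCES = 3
MAX_WORDS = 80

# Naive sentence boundary: whitespace that follows ".", "!" or "?".
_SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


@dataclass
class ParagraphIssue:
    index: int    # zero-based position of the paragraph in the draft
    message: str


def split_paragraphs(text: str) -> list[str]:
    """Split a draft into paragraphs separated by one or more blank lines."""
    return [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]


def check_paragraphs(text: str,
                     max_sentences: int = MAX_SENTENCES,
                     max_words: int = MAX_WORDS) -> list[ParagraphIssue]:
    """Flag paragraphs that probably carry more than one idea or run long."""
    issues = []
    for i, para in enumerate(split_paragraphs(text)):
        sentences = [s for s in _SENTENCE_END.split(para) if s]
        word_count = len(para.split())
        if len(sentences) > max_sentences:
            issues.append(ParagraphIssue(i, f"{len(sentences)} sentences; consider splitting"))
        if word_count > max_words:
            issues.append(ParagraphIssue(i, f"{word_count} words; consider shortening"))
    return issues


if __name__ == "__main__":
    draft = (
        "Clarity unit: a self-contained paragraph that conveys one explicit idea.\n\n"
        "This paragraph rambles. It adds a second point. Then a third. And a fourth."
    )
    for issue in check_paragraphs(draft):
        print(issue.index, issue.message)  # prints: 1 4 sentences; consider splitting
```

Sentence count is only a proxy for "one idea per paragraph". An editor still decides whether a flagged paragraph actually mixes ideas.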

How AI Interprets Writing Quality

Interpretation through structural and logical models

Artificial intelligence systems derive meaning by identifying structure, logical order, and factual relationships in text. Because models interpret content through predictable segmentation and explicit justification, these mechanisms directly influence quality assessment. Consequently, texts with stable boundaries and consistent evidence are assessed more reliably in machine-evaluated environments.

Example: When a paragraph is rewritten into short, explicit meaning units with stable terminology, AI systems segment the logic more accurately and reuse the content with higher confidence in summaries and generated answers.

Deep Reasoning Chain

Assertion: AI systems judge writing quality through structural patterns, logical clarity, and factual grounding.

Reason: Models interpret text more accurately when ideas follow explicit boundaries and form sequential meaning units.

Mechanism: The evaluation process identifies structural cues, verifies factual anchors, and checks whether reasoning remains consistent across the document.

Counter-case: Human-centered evaluations still rely on tone or expressiveness when subjective perception shapes interpretation.

Inference: Machine interpretation defines quality through measurable clarity rather than stylistic variation, reshaping how writers must construct informational text.

The Role of Structural Signals in Quality Evaluation

Structural cues guide segmentation and help models identify how meaning connects across sections. A structural signal is any visible marker—such as a heading, definition, or transition—that defines the boundaries of a concept and clarifies how adjacent ideas relate.

Key structural signals:

- stable headings

- semantic grouping

- local definitions

- clear transitions

Structural signals and their effects:

| Signal | Effect on Interpretation |
|---|---|
| Stable headings | Improve boundary detection |
| Semantic grouping | Help organize meaning units |
| Local definitions | Strengthen concept clarity |
| Clear transitions | Support sequential reasoning |
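The signals in this table can be detected roughly in a plain-text draft. The sketch below labels each line with the signal it most likely carries, using hypothetical rules:

- A line that opens with a word from a small assumed list of connectives counts as a transition.
- A line of the form `Term: explanation` counts as a local definition.
- A short line without closing punctuation counts as a heading.

These rules are illustrative only and are not how language models detect structure.

```python
import re
from collections import Counter

# Assumed connectives that signal a transition; extend the tuple for your house style.
TRANSITIONS = ("however", "therefore", "consequently", "as a result", "because", "in contrast")
_TRANSITION = re.compile(r"(?:" + "|".join(map(re.escape, TRANSITIONS)) + r")\b")

# "Term: explanation", where the term starts with a capital and is at most ~40 characters.
_DEFINITION = re.compile(r"^[A-Z][\w\s-]{0,40}:\s+\S")


def classify_line(line: str) -> str:
    """Label one line as 'blank', 'transition', 'definition', 'heading', or 'body'."""
    text = line.strip()
    if not text:
        return "blank"
    if _TRANSITION.match(text.lower()):
        return "transition"
    if _DEFINITION.match(text):
        return "definition"
    # Short line with no closing punctuation: probably a heading.
    if len(text.split()) <= 10 and not text.endswith((".", "!", "?", ":")):
        return "heading"
    return "body"


def signal_profile(text: str) -> Counter:
    """Count the structural signals found on the non-blank lines of a draft."""
    return Counter(classify_line(line) for line in text.splitlines() if line.strip())
```

Comparing `signal_profile` output across drafts gives a rough sense of whether headings, definitions, and transitions appear in similar proportions.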

Why Factual Accuracy Becomes a Quality Signal

Accuracy enables stable reuse because models rely on verifiable information to maintain internal consistency. When statements remain grounded in data, the system can connect them to external knowledge sources and confirm reliability across contexts.

Core elements of factual accuracy:

- how systems validate factual claims

- why accuracy supports trust

- examples from recognized institutions such as MIT CSAIL, Stanford NLP, and OECD

Evidence from the OECD shows that systems interpret factual statements more reliably when sources remain stable and clearly referenced within the text.

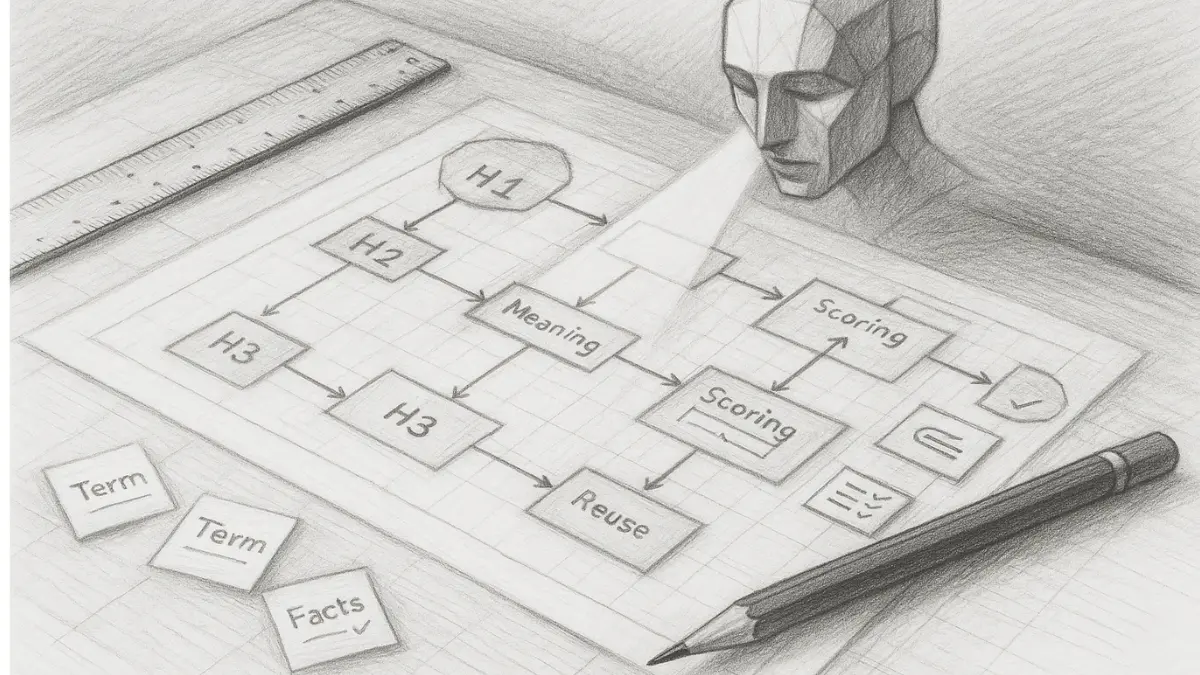

Textual Diagram Block

Model Processing Flow:

[Ingestion] → [Segmentation] → [Meaning Extraction] → [Scoring] → [Reuse]
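The flow can be read as a chain of functions, each consuming the previous stage's output. The Python sketch below is a toy illustration under stated assumptions:

- Production language models do not expose these five stages as separate steps.
- The extraction step only records which terms from a caller-supplied vocabulary appear.
- The scoring rule is invented purely to show how the stages connect: more known terms raise the score, and longer units lower it.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Unit:
    text: str
    terms: set[str] = field(default_factory=set)
    score: float = 0.0


def ingest(raw: str) -> str:
    """[Ingestion] Normalize line endings and trim surrounding whitespace."""
    return raw.replace("\r\n", "\n").strip()


def segment(text: str) -> list[str]:
    """[Segmentation] Split on blank lines into candidate meaning units."""
    return [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]


def extract(units: list[str], vocabulary: set[str]) -> list[Unit]:
    """[Meaning Extraction] Toy version: record which vocabulary terms each unit mentions."""
    return [Unit(u, {t for t in vocabulary if t.lower() in u.lower()}) for u in units]


def score(units: list[Unit]) -> list[Unit]:
    """[Scoring] Invented rule: more known terms score higher, longer units score lower."""
    for u in units:
        u.score = len(u.terms) / (1 + len(u.text.split()) / 50)
    return units


def reuse(units: list[Unit], k: int = 2) -> list[str]:
    """[Reuse] Return the k highest-scoring units, e.g. as summary candidates."""
    ranked = sorted(units, key=lambda u: u.score, reverse=True)
    return [u.text for u in ranked[:k]]


def run_pipeline(raw: str, vocabulary: set[str], k: int = 2) -> list[str]:
    """Chain the five stages in the order shown in the diagram."""
    return reuse(score(extract(segment(ingest(raw)), vocabulary)), k)


if __name__ == "__main__":
    draft = ("Meaning unit: one idea per paragraph.\n\n"
             "Nothing relevant here.\n\n"
             "Terminology stability keeps each meaning unit linked.")
    print(run_pipeline(draft, {"meaning unit", "terminology stability"}, k=1))
    # prints: ['Terminology stability keeps each meaning unit linked.']
```

Each stage is a separate function, so the invented scoring rule can be replaced without touching segmentation or reuse.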

The New Evaluation Logic for Writing

Understanding the Shift Toward Measurable Structure

Evaluative logic moves from subjective impression to measurable structure as modern systems rely on clarity, explicit reasoning, and coherent segmentation. Research presented in an EMNLP publication indexed by the ACL Anthology shows that models assess text by detecting local coherence, structural boundaries, and logical continuity. This section outlines how these criteria function in machine evaluation and why they reshape expectations for writers.

Deep Reasoning Chain

Assertion: AI systems evaluate writing quality through structural clarity, explicit reasoning, and stable terminology.

Reason: Models interpret meaning more accurately when text maintains predictable boundaries and expresses each idea directly.

Mechanism: Evaluation processes identify explicit statements, verify factual anchors, and assess how sequential reasoning develops across segments.

Edge case: Narrative or expressive writing may still rely on impression-based interpretation because style influences the reader more than structure.

Inference: Writing quality becomes measurable through structure and logic, allowing consistent evaluation across varied informational contexts.

How Systems Assess Clarity and Coherence

Clarity refers to the direct expression of a discrete idea, and coherence reflects the logical relationship between adjacent statements. Both elements guide how systems interpret meaning and determine the stability of a text during evaluation.

Key components:

- interpretation of explicit statements

- role of consistent terminology

- influence of segment length on scoring

Micro Case Study: Evaluating a Real Paragraph

A paragraph with layered clauses and implicit references generates unstable interpretation because the model cannot isolate clear meaning units. When rewritten into short, explicit segments, the system identifies the logic more accurately and reuses the content with higher confidence. This change demonstrates how structural clarity increases the stability and interpretability of a paragraph during machine evaluation.

Comparative Framework: Human vs Machine Evaluation

| Criterion | Human Evaluation | AI Evaluation |
|---|---|---|
| Clarity | Guided by perception and reading flow | Based on explicit statements and defined boundaries |
| Evidence | Interpreted contextually | Verified through factual anchors |
| Structure | Flexible and stylistic | Predictable and segmented |
| Definitions | Often implicit | Required near first use |
| Boundaries | Variable | Strict and measurable |

What Writers Must Change to Meet AI-Driven Standards

Practical Shifts Required for Modern Writing

Writers must adjust their methods because AI evaluation relies on structural clarity, explicit reasoning, and stable factual grounding. Research highlighted by the Harvard Data Science Initiative shows that machine interpretation becomes more reliable when text follows consistent patterns and maintains clean boundaries. These requirements change how authors plan, express, and support ideas across large content clusters, creating new expectations for writing in AI-driven environments.

Deep Reasoning Chain

Assertion: Writers must adapt their approach by producing structure-oriented, clearly segmented, and evidence-supported text.

Reason: AI interpretation depends on predictable boundaries, explicit statements, and logical continuity across meaning units.

Mechanism: Adaptation requires atomic segmentation, strict terminology control, and reliable factual anchors that reinforce interpretability.

Edge case: Creative or expressive writing may retain flexible structure because its purpose relies on stylistic variation rather than analytical clarity.

Inference: Authors improve machine evaluation outcomes by adopting structural discipline, consistent terminology, and stable evidence practices.

Checklist:

- Does each paragraph communicate a single meaning unit?

- Are H2–H4 boundaries predictable and structurally consistent?

- Is the terminology stable across the entire article?

- Are reasoning steps explicit rather than implied?

- Are factual anchors present to strengthen interpretability?

- Do transitions clarify how adjacent ideas relate?

Techniques for Structuring Ideas into Atomic Units

An atomic unit is a short, self-contained segment that expresses one idea with explicit boundaries. This structure allows systems to extract meaning reliably and minimizes ambiguity across a large document set.

Key techniques:

- short paragraphs

- boundary discipline

- consistent reasoning

- avoidance of multi-layer clauses
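Of these techniques, avoiding multi-layer clauses is the easiest to spot-check automatically. The sketch below estimates clause layering by counting commas, semicolons, and an assumed list of subordinating words in each sentence. It then flags sentences above a hypothetical `max_layers` limit. It is a rough proxy, not a grammatical parser.

```python
import re

# Assumed markers of an embedded clause. "that" also counts when it is a
# determiner, so expect some false positives.
SUBORDINATORS = {"which", "that", "because", "although", "while", "whereas", "since", "unless", "when"}


def clause_layers(sentence: str) -> int:
    """Estimate clause layering from commas, semicolons, and subordinating words."""
    punctuation = sentence.count(",") + sentence.count(";")
    words = re.findall(r"[a-z']+", sentence.lower())
    return punctuation + sum(1 for w in words if w in SUBORDINATORS)


def flag_layered_sentences(paragraph: str, max_layers: int = 3) -> list[str]:
    """Return sentences whose estimated layering exceeds max_layers (a hypothetical limit)."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", paragraph.strip()) if s]
    return [s for s in sentences if clause_layers(s) > max_layers]


if __name__ == "__main__":
    layered = ("The model, which reads text that writers produce, drifts when "
               "references, which stay implicit, change.")
    atomic = ("The model reads text that writers produce. Some references stay implicit. "
              "The model drifts when those references change.")
    print(flag_layered_sentences(layered))  # the single layered sentence is flagged
    print(flag_layered_sentences(atomic))   # prints: []
```

The layered example packs its ideas into nested clauses. The atomic version states the same ideas as three short sentences and passes the check.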

Maintaining Terminology Stability Across a Large Cluster

Terminology drift weakens interpretability because models rely on stable naming patterns to link related concepts across pages. Writers therefore need consistent term usage across all documents within the cluster.

Core methods:

- site-wide lexicon

- controlled vocabulary

- definition-first approach
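A controlled vocabulary can be enforced with a simple lookup. The sketch below assumes a hypothetical site-wide lexicon (`LEXICON`) that maps each canonical term to variants writers tend to drift into. The lexicon entries are invented examples. Two checks run against it:

- `find_drift` reports every variant it finds.
- `defined_before_use` approximates the definition-first approach. It checks that the first line mentioning a term is its definition, written as `Term: explanation`.

```python
import re

# Hypothetical site-wide lexicon: canonical term -> discouraged variants.
LEXICON = {
    "meaning unit": ["semantic chunk", "idea block"],
    "factual anchor": ["evidence hook", "fact marker"],
}


def find_drift(text: str, lexicon: dict[str, list[str]] = LEXICON) -> list[tuple[str, str]]:
    """Return (variant, canonical) pairs for every discouraged variant found in the text."""
    lowered = text.lower()
    hits = []
    for canonical, variants in lexicon.items():
        for variant in variants:
            if re.search(r"\b" + re.escape(variant) + r"\b", lowered):
                hits.append((variant, canonical))
    return hits


def defined_before_use(text: str, term: str) -> bool:
    """True if the first line mentioning `term` is its definition ('Term: ...').

    Returns False when the term never appears.
    """
    pattern = re.compile(r"\b" + re.escape(term.lower()) + r"\b")
    for line in text.splitlines():
        lowered = line.strip().lower()
        if pattern.search(lowered):
            return lowered.startswith(term.lower() + ":")
    return False
```

Running both checks on every page in a cluster shows where a variant has crept in and where a term is used before it is defined.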

Using Evidence to Strengthen Machine Evaluation

An evidence anchor is a verifiable factual statement supported by a recognized institution and positioned to strengthen the stability of a claim. Reliable evidence increases model confidence and supports accurate meaning extraction.

Evidence practices:

- data-driven statements

- citations from recognized institutions

- stable references
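The first practice, keeping data-driven statements sourced, can be spot-checked automatically. The sketch below assumes a hypothetical citation convention: sources appear inline as `[1]`, or in author-year form such as `(Author, 2023)`. It flags any sentence that contains a digit but no citation marker.

```python
import re

# Naive sentence boundary: whitespace that follows ".", "!" or "?".
_SENTENCE_END = re.compile(r"(?<=[.!?])\s+")
_NUMBER = re.compile(r"\d")
# Assumed citation conventions: numeric "[3]" or author-year "(Author, 2023)".
_CITATION = re.compile(r"\[\d+\]|\([A-Z][^()]*\d{4}\)")


def unanchored_claims(text: str) -> list[str]:
    """Return sentences that contain a number but no citation marker."""
    sentences = [s for s in _SENTENCE_END.split(text.strip()) if s]
    return [s for s in sentences if _NUMBER.search(s) and not _CITATION.search(s)]
```

A flagged sentence is not necessarily wrong. The check only surfaces numeric statements that an editor should trace to a source.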

The Future of Writing Quality Under AI Systems

Defining Emerging Evaluation Patterns

Evaluation criteria will continue shifting toward structure, clarity, and verifiable reasoning as AI systems refine their interpretation methods. Reports from the Oxford Internet Institute indicate that future evaluation patterns will rely on increasingly explicit logic and stronger evidence signals to ensure consistent meaning extraction. A future evaluation pattern refers to the set of structural expectations that guide how models score text as systems evolve and expand their reasoning capabilities.

Deep Reasoning Chain

Assertion: Future writing standards will prioritize structural precision, explicit reasoning, and evidence alignment.

Reason: As models grow more sophisticated, they depend on stable meaning units that allow reliable interpretation across varied contexts.

Mechanism: Emerging evaluation logic identifies transparent reasoning chains, validates factual anchors, and measures internal consistency across segments.

Edge case: Creative or subjective genres may still rely on expressive variation because their goals remain independent of structural evaluation.

Inference: Writing quality will increasingly reflect machine-oriented criteria, making structural clarity the foundation of future editorial standards.

Long-Term Implications for Education and Editorial Workflows

Educational and editorial workflows must adapt to support writing that meets machine evaluation requirements. These adjustments ensure that content remains consistent, interpretable, and reusable across large clusters.

Key implications:

- structured drafting

- rule-based editing

- cluster-wide consistency

Micro Case Study: Editorial Transformation

A digital publishing team revised its workflow after noticing inconsistencies in how models interpreted its long-form articles. The team introduced segmented drafting, unified terminology lists, and explicit reasoning steps for each major section. Within two cycles, models reused more of the content, and reused it more accurately, in summaries and highlights. These changes produced measurable improvements in clarity, consistency, and evaluation stability.

Table: Emerging Quality Indicators

| Indicator | Description | Why It Matters for AI |
|---|---|---|
| Structural precision | Clear segmentation and defined meaning units | Supports consistent interpretation |
| Evidence alignment | Statements grounded in verifiable data | Increases model confidence |
| Terminology stability | Uniform term usage across documents | Strengthens concept linking |
| Explicit reasoning | Transparent logic within each section | Improves extraction and scoring |
| Boundary clarity | Predictable transitions and section limits | Reduces ambiguity during processing |

Rethinking Quality in an AI-Directed Writing Environment

Writing standards continue to change as AI systems redefine quality through measurable structure, explicit reasoning, and verifiable information. Modern evaluation depends on clear meaning units, stable terminology, transparent logic, and factual grounding, forming a consistent framework that guides how systems interpret text.

These criteria influence long-term operational practices by requiring structured drafting, rule-based editing, and cross-document consistency across large content clusters. Within this broader evolution of writing standards, clustered pages behave as coherent knowledge systems that enable reliable interpretation and strengthen visibility in AI-driven environments.

Interpretive Criteria of AI-Oriented Writing Evaluation

- Atomic meaning resolution. Writing is interpreted through discrete semantic units that encapsulate a single idea, enabling precise extraction without contextual spillover.

- Terminological continuity. Stable vocabulary usage supports long-context interpretation by preventing semantic drift during multi-pass evaluation.

- Explicit reasoning traceability. Clearly articulated logical connections allow systems to reconstruct intent and inference without relying on implicit transitions.

- Evidence-anchored credibility. Verifiable factual references function as reliability markers that influence evaluative weighting during reuse.

- Structural predictability. Consistent headings, definitions, and transitions provide interpretable patterns that reduce ambiguity across evaluation contexts.

These criteria explain how AI systems evaluate writing as a structured semantic artifact, where clarity, continuity, and evidence govern interpretive confidence rather than stylistic variation.

FAQ: How AI Redefines Good Writing

How does AI define good writing?

AI models interpret good writing as text with clear structure, stable terminology, explicit reasoning, and verifiable statements presented in predictable patterns.

Why does structure matter more than style?

Machine evaluation prioritizes meaning extraction. Structure provides predictable boundaries that allow AI systems to interpret ideas reliably, unlike stylistic cues aimed at human readers.

What makes writing machine-interpretable?

Short meaning units, consistent terminology, explicit logic steps, and factual grounding improve interpretability and reduce ambiguity during AI processing.

Why does AI require stable terminology?

Models trace meaning through repeatable patterns. Terminology drift disrupts semantic links and reduces a model’s ability to maintain continuity across sections.

What are meaning units in AI-oriented writing?

A meaning unit is a short, self-contained paragraph expressing one explicit idea, which simplifies segmentation and reasoning for AI systems.

How does factual accuracy affect AI evaluation?

AI assigns higher trust to texts anchored in verifiable information. Stable, sourced statements improve reliability and increase reuse in generated output.

How should writers adapt for AI evaluation?

Writers must emphasize explicit logic, stable terminology, predictable segmentation, and data-supported claims rather than stylistic variation.

What weakens AI interpretation?

Layered clauses, ambiguous references, inconsistent terminology, and long unstructured paragraphs reduce interpretability and scoring stability.

Can expressive writing still work with AI?

Yes, but expressive writing relies on subjective interpretation and therefore remains less compatible with machine-oriented evaluation systems.

What skills matter in AI-era writing?

Modern writers need structural discipline, semantic precision, reasoning clarity, and evidence-based explanation to meet AI-driven quality standards.

Glossary: Key Terms in AI-Evaluated Writing

This glossary defines essential concepts used throughout this guide to help both writers and AI systems interpret the terminology consistently.

Meaning Unit

A short, self-contained segment that expresses one explicit idea, enabling AI models to isolate, interpret, and reuse the content accurately.

Terminology Stability

The consistent use of the same terms throughout a document or cluster, allowing AI systems to maintain semantic continuity and reduce ambiguity.

Explicit Reasoning

A writing method that makes logical steps visible through clear transitions, improving a model’s ability to trace argument structure.

Structural Signal

Any visible organizational marker—such as a heading, definition, or transition—that defines boundaries and supports predictable interpretation by AI.

Factual Anchor

A verifiable statement supported by a recognized institution, helping AI validate claims and maintain internal consistency during interpretation.

Machine-Readable Structure

A structural format that enables AI systems to detect boundaries, extract meaning, and evaluate clarity through segmentation and predictable logic.