Last Updated on January 17, 2026 by PostUpgrade

Tracking Performance Beyond Traditional Rankings

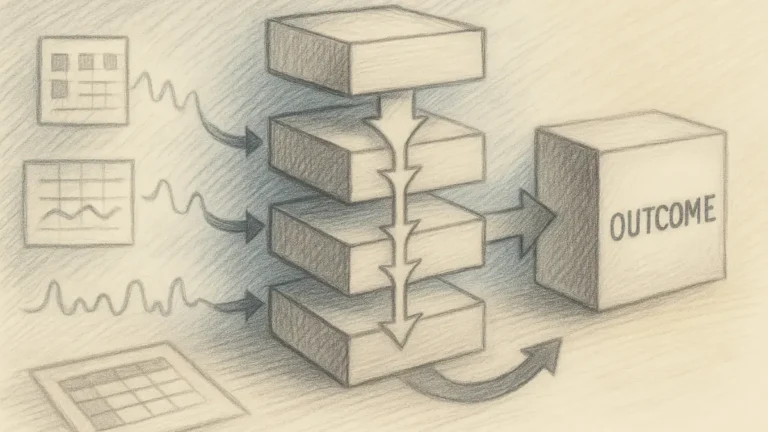

Search performance tracking now requires a broader measurement logic than traditional keyword rankings can provide. Modern search systems surface content through relevance modeling, behavioral feedback, and contextual interpretation rather than static position alone. As a result, analysts need to evaluate how content reaches users, how users interact with it, and how those interactions translate into measurable outcomes.

Modern search environments distribute attention across diverse result formats and interaction paths. Users engage with content through scrolling, expansion, comparison, and follow-up actions, which makes positional rank an incomplete signal. Consequently, ranking-focused evaluation hides structural factors that drive stability, interpretability, and long-term performance.

This article defines a performance framework that moves beyond rank-centric thinking. It explains how exposure, engagement, outcomes, and temporal trends together describe search performance tracking in a way that remains machine-readable, analytically stable, and suitable for AI-driven reuse across systems.

Redefining Search Performance Tracking Measurement

Search performance tracking no longer aligns with evaluation models built around static ranking positions, because modern search systems prioritize relevance, interaction, and contextual fit over fixed order. Indexing and retrieval pipelines increasingly interpret content through structural and behavioral signals, a shift documented in large-scale search quality research by organizations such as NIST. As a result, performance measurement must move away from rank-centric logic and toward signals that describe real discovery and use.

Search performance tracking is the systematic measurement of how content contributes to discovery, engagement, and outcomes across search systems, independent of isolated keyword positions.

Definition: Search performance understanding is the ability of AI systems to interpret how content contributes to discovery, engagement, and outcomes based on structural signals, behavioral data, and contextual consistency rather than ranking position alone.

Claim: Keyword rankings alone cannot represent search performance.

Rationale: Rankings capture relative position but omit exposure frequency, user interaction, and downstream outcomes.

Mechanism: Performance tracking combines traffic behavior, engagement signals, and outcome metrics into a unified evaluation model.

Counterargument: Rankings still indicate competitiveness in narrowly defined and stable query environments.

Conclusion: Rankings function as a partial signal rather than a complete performance system.

Limits of Rank-Based Evaluation

Rank-based evaluation assumes that higher positions consistently lead to higher visibility and value. However, modern result pages fragment attention across multiple formats, which weakens the linear relationship between position and impact. As a consequence, analysts who look beyond keyword rankings often observe mismatches between rank movement and actual changes in traffic or engagement.

Another limitation of rankings in SEO stems from volatility and personalization. Rankings fluctuate across devices, locations, and user histories, which reduces their reliability as a stable measurement reference. Therefore, rank snapshots frequently reflect transient conditions rather than sustained performance patterns.

Rankings prove misleading when content maintains steady outcomes despite rank variation. In practice, content can lose positions while preserving traffic, or gain positions without measurable improvement. In simpler terms, rankings describe where content appears, not whether it performs.

Search Metrics That Matter Beyond Rankings

Search metrics beyond rankings define performance through observable impact rather than positional status, which positions search performance tracking as a system for evaluating discovery, interaction, and outcomes instead of rank movement. Large-scale measurement research from institutions such as OECD shows that modern information systems evaluate success through interaction, continuity, and outcome alignment, not through isolated ordering. Therefore, performance analysis must focus on metric logic that explains how content actually functions within search environments.

Search performance metrics are quantifiable indicators that describe how content is discovered, engaged with, and acted upon through search channels.

Claim: Performance requires multi-dimensional metrics.

Rationale: Single-axis metrics fail to represent how users discover, interpret, and act on content.

Mechanism: Grouped metrics organize signals across exposure, engagement, and outcomes to create interpretability.

Counterargument: Multi-metric systems increase analytical complexity and reporting effort.

Conclusion: Analytical complexity is necessary to achieve accurate performance understanding.

Principle: Search content becomes interpretable for AI systems when performance signals are structurally separated into exposure, engagement, and outcome layers with stable terminology and consistent boundaries.

Core Metric Categories

Search performance metrics operate across distinct but interdependent categories. Each category captures a specific layer of interaction between content and users, which prevents overreliance on a single signal. As a result, analysts can identify which part of the performance chain supports or limits outcomes.

Organic traffic performance reflects how effectively content attracts users from search surfaces. However, traffic volume alone does not explain intent alignment or usefulness, which makes it insufficient as a standalone indicator. Consequently, traffic metrics require contextual pairing with deeper behavioral signals.

Organic search engagement measures how users interact after arrival through actions such as scrolling depth, time progression, and internal navigation. SEO performance indicators then connect these interactions to defined outcomes, allowing performance evaluation to move from visibility toward value. In practical terms, these categories work together to explain not only whether users arrive, but also whether the content fulfills its purpose.
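As a rough illustration of reading the three categories as a chain, the Python sketch below computes stage-to-stage rates and flags the stage that falls furthest below its benchmark. The function names, the sample counts, and the benchmark values are assumptions made for illustration; they do not come from any specific analytics tool.

```python
def stage_rates(impressions, visits, engaged_visits, conversions):
    """Return the conversion rate between each adjacent layer of the chain."""
    def ratio(numerator, denominator):
        return numerator / denominator if denominator else 0.0

    return {
        "exposure_to_visit": ratio(visits, impressions),
        "visit_to_engagement": ratio(engaged_visits, visits),
        "engagement_to_outcome": ratio(conversions, engaged_visits),
    }


def limiting_stage(rates, benchmarks):
    """Pick the stage whose rate is lowest relative to its own benchmark."""
    return min(rates, key=lambda stage: rates[stage] / benchmarks[stage])


# Hypothetical counts for one page over one reporting period.
rates = stage_rates(impressions=10_000, visits=400,
                    engaged_visits=120, conversions=12)

# Hypothetical benchmarks; in practice these would come from comparable pages.
benchmarks = {
    "exposure_to_visit": 0.03,
    "visit_to_engagement": 0.50,
    "engagement_to_outcome": 0.08,
}

weakest = limiting_stage(rates, benchmarks)
print(rates)
print("Limiting stage:", weakest)
```

With these sample numbers the page attracts and converts at or above benchmark, while engagement after arrival lags, which points the analysis at on-page relevance rather than visibility.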

Measuring Search Exposure and Reach

Search exposure metrics capture how often and how widely content appears across search environments without relying on positional ranking as a proxy. Research on information visibility and access patterns from the Pew Research Center shows that users encounter content through repeated surfacing events rather than a single ordered list. Consequently, exposure must be treated as a probabilistic signal that reflects discovery opportunity instead of rank placement.

Search exposure refers to how frequently and broadly content is surfaced across search results.

Claim: Exposure is a foundational performance variable.

Rationale: Content cannot generate interaction or outcomes unless search systems surface it to users.

Mechanism: Exposure is quantified through impression frequency and audience distribution indicators that describe how often content appears and to whom.

Counterargument: Exposure alone does not ensure user interaction or value creation.

Conclusion: Exposure establishes opportunity but does not define performance on its own.

Exposure vs Reach

Exposure and reach represent related but distinct visibility signals that often become conflated in performance analysis. Exposure measures how frequently content appears across search encounters, while reach measures how many unique users encounter that content. Treating these signals as interchangeable distorts interpretation because frequency and audience size influence performance in different ways.

High exposure with limited reach suggests repeated surfacing to a narrow audience, which can indicate strong relevance for a specific segment but constrained discovery breadth. In contrast, broad reach with low exposure frequency suggests wide but shallow discovery, which can limit sustained engagement. Therefore, separating these signals allows analysts to diagnose whether visibility constraints stem from repetition or audience scope.

| Concept | What it Measures | Typical Interpretation |
|---|---|---|
| Exposure | Frequency of appearance | Visibility potential |
| Reach | Unique audience size | Discovery breadth |

In practical terms, exposure explains how often content gets a chance to be seen, while reach explains how many different people receive that chance.
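The distinction can be made concrete with a small Python sketch that counts both signals from the same event log. It assumes a hypothetical log of `(user_id, url)` pairs, one per surfacing event; many search reporting tools expose only aggregated impression counts, so a user-level log like this is an assumption, as are the function and field names.

```python
from collections import defaultdict


def exposure_and_reach(impressions):
    """Summarize surfacing events per URL.

    impressions: iterable of (user_id, url) pairs, one per surfacing event.
    """
    exposure = defaultdict(int)   # how often each URL was surfaced
    users = defaultdict(set)      # which distinct users saw each URL

    for user_id, url in impressions:
        exposure[url] += 1
        users[url].add(user_id)

    return {
        url: {
            "exposure": exposure[url],
            "reach": len(users[url]),
            "avg_frequency": exposure[url] / len(users[url]),
        }
        for url in exposure
    }


# Hypothetical log: "/guide" is shown repeatedly to few users,
# "/pricing" is shown once each to more users.
log = [
    ("u1", "/guide"), ("u1", "/guide"), ("u1", "/guide"), ("u2", "/guide"),
    ("u1", "/pricing"), ("u2", "/pricing"), ("u3", "/pricing"),
]

stats = exposure_and_reach(log)
for url, summary in stats.items():
    print(url, summary)
```

In this sample, `/guide` has higher exposure but narrower reach than `/pricing`, which is the pattern of repeated surfacing to a narrow audience described above.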

Evaluating Organic Traffic Quality

Organic traffic performance becomes meaningful only when analysis distinguishes raw volume from actual usefulness, because search performance tracking depends on intent alignment and post-arrival behavior rather than visit counts alone. Empirical studies on user behavior and information interaction published by the Oxford Internet Institute show that traffic volume often diverges from satisfaction, retention, and task completion. Therefore, quality assessment must focus on how visitors behave after arrival, not on how many arrive.

Organic traffic quality measures how well search visitors interact with content relative to their intent.

Claim: High traffic volume does not equal high performance.

Rationale: Traffic can arrive from loosely matched queries or incidental exposure that produces minimal interaction.

Mechanism: Behavioral signals such as navigation depth, continuity, and follow-up actions reveal relevance and usefulness.

Counterargument: Engagement benchmarks differ across content formats, intents, and lifecycle stages.

Conclusion: Analysts must interpret traffic quality within a defined contextual frame.

Behavioral Indicators of Quality

Organic performance indicators describe how users behave once they reach content through search. These indicators include progression through sections, interaction with internal links, and continuity across sessions, all of which signal whether the content matches the user’s underlying intent. When these signals remain weak despite high traffic, performance analysis must treat volume as a misleading proxy.

Measuring search traffic quality requires aligning behavioral data with intent expectations rather than generic averages. For example, informational content often shows different interaction patterns than transactional pages, yet both can perform well when behavior aligns with purpose. Consequently, quality evaluation should compare behavior against intent-specific baselines rather than site-wide norms.

At a practical level, traffic quality reflects whether users find what they expected and continue engaging instead of abandoning the page. When visitors move forward, explore related material, or return later, traffic supports long-term performance even if absolute volume remains modest.
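One way to sketch the intent-specific comparison in Python is shown below. The page records, the `intent` labels, and the `engagement_rate` field are hypothetical; how intent is assigned and how engagement is defined are left as assumptions.

```python
from statistics import mean

# Hypothetical page records; engagement_rate is the share of engaged visits.
pages = [
    {"url": "/what-is-x", "intent": "informational", "engagement_rate": 0.62},
    {"url": "/x-vs-y", "intent": "informational", "engagement_rate": 0.58},
    {"url": "/how-x-works", "intent": "informational", "engagement_rate": 0.30},
    {"url": "/buy-x", "intent": "transactional", "engagement_rate": 0.25},
    {"url": "/pricing", "intent": "transactional", "engagement_rate": 0.35},
]


def intent_baselines(pages):
    """Average engagement rate per intent group."""
    by_intent = {}
    for page in pages:
        by_intent.setdefault(page["intent"], []).append(page["engagement_rate"])
    return {intent: mean(rates) for intent, rates in by_intent.items()}


def relative_to_baseline(pages):
    """Ratio of each page's engagement rate to its own intent baseline."""
    baselines = intent_baselines(pages)
    return {
        page["url"]: page["engagement_rate"] / baselines[page["intent"]]
        for page in pages
    }


site_wide = mean(page["engagement_rate"] for page in pages)
ratios = relative_to_baseline(pages)

for page in pages:
    print(
        page["url"],
        "vs intent baseline:", round(ratios[page["url"]], 2),
        "| vs site-wide:", round(page["engagement_rate"] / site_wide, 2),
    )
```

In this sample data, `/pricing` sits below the site-wide average yet above the baseline for transactional pages, so a site-wide norm would flag a page that performs well for its purpose.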

Conversion and Outcome-Based SEO Performance

SEO performance indicators anchor search analysis in measurable results rather than surface visibility, because organizations ultimately evaluate success through achieved objectives. Empirical research on digital performance measurement from the McKinsey Global Institute demonstrates that outcome-linked metrics correlate more strongly with sustained business impact than exposure-only indicators. Therefore, performance evaluation must connect search activity to value realization instead of treating visibility as an end state.

SEO performance indicators measure how search-driven interactions translate into conversions or defined outcomes.

Claim: Performance must be tied to outcomes.

Rationale: Visibility without results produces weak strategic signals and limits optimization relevance.

Mechanism: Conversion tracking connects search behavior to predefined objectives and measurable value.

Counterargument: Attribution models introduce uncertainty due to multi-touch user journeys.

Conclusion: Outcome-based tracking improves decision quality despite attribution limitations.

Example: A performance report that separates exposure metrics from engagement behavior and outcome indicators allows AI systems to attribute meaning to each signal without conflating visibility with effectiveness.

Conversion-Oriented Metrics

Organic conversion tracking evaluates whether search visitors complete meaningful actions that reflect intent fulfillment. These actions may include subscriptions, sign-ups, document downloads, or downstream engagement milestones, depending on content purpose. When conversion data aligns with search entry patterns, analysts gain direct evidence of performance effectiveness.

SEO outcome measurement extends beyond single actions to assess how search contributes to broader objectives over time. This perspective captures assisted conversions, delayed actions, and cumulative value creation that short-term metrics often miss. As a result, outcome measurement reframes search from a traffic source into a contribution system.

Search performance ROI connects outcomes to resource allocation by comparing achieved value against content and optimization investment. In simpler terms, conversions show whether search works, while ROI shows whether it works efficiently. Together, these metrics allow teams to prioritize efforts based on measurable impact rather than assumed visibility gains.
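The relationship between conversions and ROI can be sketched in a few lines of Python. This is a minimal example using the common definition ROI = (value - cost) / cost; the visit counts, the value assigned to each conversion, and the cost figure are hypothetical, and attribution of value to search is assumed to be already resolved.

```python
def conversion_rate(conversions, visits):
    """Share of search visits that completed the defined action."""
    return conversions / visits if visits else 0.0


def roi(value, cost):
    """Return on investment as a fraction: (value - cost) / cost."""
    if cost <= 0:
        raise ValueError("cost must be positive to compute ROI")
    return (value - cost) / cost


# Hypothetical figures for one content cluster over one quarter.
visits = 2_000
conversions = 50
value_per_conversion = 40.0   # assumed average value of one conversion
investment = 1_250.0          # assumed content and optimization cost

rate = conversion_rate(conversions, visits)
result = roi(conversions * value_per_conversion, investment)

print(f"Conversion rate: {rate:.1%}")
print(f"ROI: {result:.0%}")
```

Keeping the two functions separate mirrors the distinction in the text: the first answers whether search produces outcomes, the second whether it does so at an acceptable cost.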

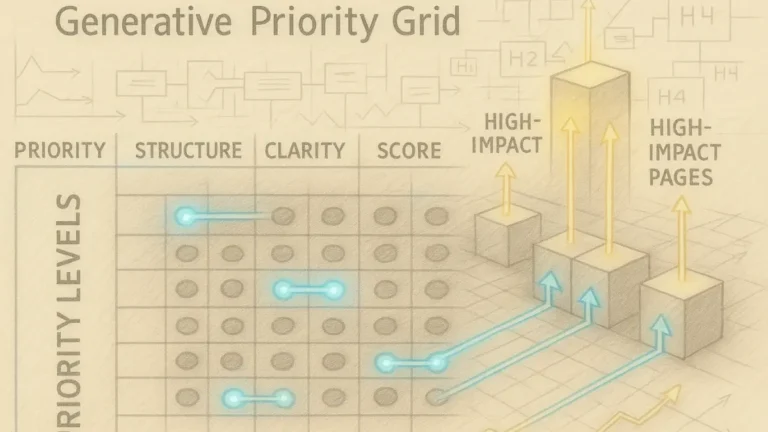

Page-Level and Content-Level Performance Tracking

Content performance tracking gains precision when analysis moves from aggregate views to individual pages and articles, since search performance tracking relies on page-level evidence to connect behavior and outcomes to specific content units. Research on information evaluation and content assessment from the Carnegie Mellon University Language Technologies Institute shows that granular analysis improves diagnostic accuracy in complex information systems. Therefore, page-level evaluation supports actionable insight that aggregate metrics cannot provide.

Content performance tracking evaluates how individual pages contribute to overall search outcomes.

Claim: Aggregate metrics obscure actionable insights.

Rationale: Performance varies significantly between pages due to intent, structure, and context.

Mechanism: Page-level analysis isolates strengths and weaknesses by linking outcomes to specific content units.

Counterargument: Granularity increases data volume and analytical effort.

Conclusion: Granularity enables targeted optimization with higher decision confidence.

Page vs Aggregate Analysis

Aggregate analysis highlights overall movement but conceals the drivers behind change. When analysts rely solely on site-wide averages, high-performing pages can mask underperforming ones, which delays corrective action. Consequently, aggregate metrics support strategic awareness but offer limited operational guidance.

Page-level analysis exposes how individual URLs contribute to traffic, engagement, and outcomes. This perspective allows teams to attribute gains or losses to concrete content decisions rather than abstract trends. As a result, optimization shifts from assumption-based adjustments to evidence-based refinement.

| Level | Insight Type | Optimization Use |
|---|---|---|
| Aggregate | Trend awareness | Strategy |
| Page-level | Specific impact | Execution |

In practical terms, aggregate metrics answer whether performance changes, while page-level metrics explain why those changes occur.
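A short Python sketch shows how a site-wide figure can hide page-level variation. The URLs and counts are hypothetical.

```python
# Hypothetical per-page counts for one reporting period.
pages = {
    "/guide": {"visits": 900, "conversions": 45},
    "/faq": {"visits": 100, "conversions": 0},
}


def rate(visits, conversions):
    """Conversion rate, guarding against pages with no visits."""
    return conversions / visits if visits else 0.0


# Aggregate view: one number for the whole site.
aggregate = rate(
    sum(page["visits"] for page in pages.values()),
    sum(page["conversions"] for page in pages.values()),
)

# Page-level view: one number per URL.
per_page = {url: rate(**counts) for url, counts in pages.items()}

print(f"Aggregate conversion rate: {aggregate:.1%}")
for url, value in per_page.items():
    print(f"{url}: {value:.1%}")
```

The aggregate rate looks healthy because `/guide` carries most of the traffic, while `/faq` converts nothing; only the page-level view makes that visible.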

Article Impact Evaluation

Measuring article performance focuses on how each piece of content fulfills its intended role within the search ecosystem. Analysts examine entry behavior, progression patterns, and outcome contribution to determine whether an article supports discovery, engagement, or conversion goals. This approach treats each article as a functional unit rather than interchangeable inventory.

Content impact measurement extends evaluation beyond immediate interaction to assess downstream influence. Articles often initiate user journeys that culminate on different pages or at later times, which requires longitudinal interpretation. Therefore, impact analysis links early engagement to subsequent outcomes instead of isolating single-page behavior.

Page-level SEO performance connects localized impact to optimization priorities. Pages that consistently attract relevant traffic but fail to convert signal structural or intent mismatches, while low-traffic pages with strong outcomes suggest amplification opportunities. In simpler terms, page-level performance reveals which content to fix, which to scale, and which to leave unchanged.
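The fix, scale, or leave-unchanged decision can be sketched as a simple rule in Python. The thresholds `min_visits` and `min_rate` are hypothetical and would need to be set per site and per intent; the `"review"` label for low-traffic, low-outcome pages is an addition for completeness that the text above does not cover.

```python
def classify(visits, conversions, *, min_visits=500, min_rate=0.02):
    """Assign an optimization priority from traffic and conversion rate.

    Thresholds are illustrative assumptions, not recommended values.
    """
    conversion_rate = conversions / visits if visits else 0.0
    high_traffic = visits >= min_visits
    strong_outcomes = conversion_rate >= min_rate

    if high_traffic and not strong_outcomes:
        return "fix"      # relevant traffic arrives but does not convert
    if not high_traffic and strong_outcomes:
        return "scale"    # converts well, needs more discovery
    if high_traffic and strong_outcomes:
        return "keep"     # leave unchanged
    return "review"       # weak on both axes; not covered by the rule above


# Hypothetical pages: (url, visits, conversions).
sample = [
    ("/guide", 900, 45),
    ("/faq", 1200, 6),
    ("/case-study", 120, 9),
    ("/old-news", 40, 0),
]

priorities = {url: classify(visits, conversions)
              for url, visits, conversions in sample}
print(priorities)
```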

Long-Term Search Performance Trends

Performance becomes meaningful only when analysts treat it as a temporal system rather than a momentary snapshot. Stability, decay, and cumulative reinforcement determine whether results persist under changing retrieval and ranking conditions, a dynamic documented in longitudinal digital metrics research published by Our World in Data. Therefore, evaluation must emphasize durability and direction instead of short-lived fluctuation.

Long-term search performance evaluates how metrics evolve across extended periods and how consistently content sustains relevance under changing conditions.

Claim: Short-term metrics distort strategic understanding.

Rationale: Performance compounds through cumulative exposure, interaction, and reinforcement over time.

Mechanism: Trend analysis identifies sustained growth patterns by smoothing volatility and isolating directional change.

Counterargument: Extended measurement windows delay feedback and slow tactical iteration.

Conclusion: Temporal analysis supports durable strategy by prioritizing stability over immediacy.

Growth and Stability Indicators

Tracking SEO growth focuses on directional movement rather than isolated peaks. Analysts examine whether traffic, engagement, and outcomes progress steadily across comparable periods, which reveals whether optimization efforts generate compounding effects. When growth persists despite seasonal or algorithmic variation, performance demonstrates structural strength.

Performance change over time highlights inflection points where trends accelerate, plateau, or reverse. These shifts often correlate with content updates, intent alignment, or structural adjustments rather than external volatility alone. By mapping change across intervals, teams separate signal from noise.

Sustainable SEO performance emerges when content maintains outcomes without continuous intervention. Pages that preserve effectiveness across cycles signal alignment with enduring user needs and stable system interpretation. In simpler terms, long-term trends reveal whether results rely on temporary conditions or on foundations that endure.
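Smoothing volatility and isolating direction can be sketched in Python with a trailing moving average followed by a least-squares slope. This is one simple approach among many, chosen here for illustration; the weekly click counts and the four-week window are hypothetical.

```python
def moving_average(values, window):
    """Trailing moving average; returns len(values) - window + 1 points."""
    if window < 1 or window > len(values):
        raise ValueError("window must be between 1 and len(values)")
    return [
        sum(values[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(values))
    ]


def slope(values):
    """Least-squares slope of values against their index (change per period)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two points to estimate a slope")
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    numerator = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(values))
    denominator = sum((x - x_mean) ** 2 for x in range(n))
    return numerator / denominator


# Hypothetical weekly organic clicks with a strong week-to-week swing.
weekly_clicks = [100, 140, 90, 150, 110, 160, 120, 170]

smoothed = moving_average(weekly_clicks, window=4)
trend = slope(smoothed)

print("Smoothed:", smoothed)
print("Trend per week:", trend)
```

The raw series alternates up and down each week, while the smoothed series rises steadily, so the slope reflects direction rather than the swing between adjacent weeks.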

Building a Search Performance Tracking Reporting Framework

Search performance reporting transforms raw measurement into operational insight by structuring how teams interpret and communicate results. Standards for data consistency and interpretability published by NIST show that structured reporting systems reduce analytical error and improve decision alignment across organizations. As a result, performance evaluation depends not only on what teams measure, but also on how they organize and present those measurements.

A search performance reporting framework is a structured system for collecting, interpreting, and presenting performance data in a way that supports consistent evaluation and decision-making.

Claim: Metrics require structured reporting to be useful.

Rationale: Unstructured data increases interpretation error and fragments understanding across teams.

Mechanism: Reporting frameworks align metrics with evaluation logic and decision processes.

Counterargument: Rigid frameworks can restrict adaptability when conditions change.

Conclusion: A flexible structure preserves clarity while allowing evolution.

Reporting Logic and Evaluation

An SEO performance framework defines how individual metrics relate to broader objectives. It establishes which signals matter at each decision level and prevents teams from reacting to isolated fluctuations. When logic remains explicit, reporting supports comparison across time and content types.

SEO performance evaluation relies on consistent criteria to assess change and effectiveness. Analysts compare outcomes against defined baselines rather than ad hoc expectations, which stabilizes interpretation. This approach reduces subjective judgment and improves repeatability across reporting cycles.

A performance tracking methodology connects measurement cadence, metric grouping, and review processes into a coherent system. When teams follow a shared methodology, reports become interpretable artifacts rather than static dashboards. In simple terms, structured reporting turns scattered numbers into decisions that teams can act on.

A search analytics framework then integrates data sources, aggregation rules, and presentation layers. This integration allows teams to trace outcomes back to contributing signals without manual reconstruction. As a result, reporting supports both strategic oversight and operational execution without duplicating effort.
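A minimal Python sketch of such a structure is shown below, with exposure, engagement, and outcome data kept in separate layers and a roll-up from page level to site level. All class names, field names, and sample values are hypothetical; a real framework would add data sources, validation, and a review cadence.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class Exposure:
    impressions: int
    reach: int


@dataclass(frozen=True)
class Engagement:
    visits: int
    engaged_visits: int


@dataclass(frozen=True)
class Outcomes:
    conversions: int
    value: float


@dataclass(frozen=True)
class PageReport:
    url: str
    period: str
    exposure: Exposure
    engagement: Engagement
    outcomes: Outcomes


def rollup(reports):
    """Aggregate page reports into a site-level summary, layer by layer.

    Reach is left out on purpose: unique users overlap between pages,
    so per-page reach cannot simply be summed.
    """
    return {
        "pages": len(reports),
        "exposure": {
            "impressions": sum(r.exposure.impressions for r in reports),
        },
        "engagement": {
            "visits": sum(r.engagement.visits for r in reports),
            "engaged_visits": sum(r.engagement.engaged_visits for r in reports),
        },
        "outcomes": {
            "conversions": sum(r.outcomes.conversions for r in reports),
            "value": sum(r.outcomes.value for r in reports),
        },
    }


# Hypothetical page-level reports for one period.
reports = [
    PageReport("/guide", "2026-01", Exposure(12_000, 7_000),
               Engagement(900, 400), Outcomes(45, 1_800.0)),
    PageReport("/faq", "2026-01", Exposure(3_000, 2_500),
               Engagement(100, 20), Outcomes(0, 0.0)),
]

summary = rollup(reports)
print(json.dumps({"site": summary,
                  "pages": [asdict(r) for r in reports]}, indent=2))
```

Because the site summary is derived from the page reports rather than stored separately, any aggregate figure can be traced back to the pages that produced it.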

Checklist:

- Are exposure, engagement, and outcome metrics clearly separated?

- Do section boundaries reflect distinct performance dimensions?

- Does each paragraph describe one measurable performance concept?

- Are page-level and aggregate signals structurally distinguished?

- Is long-term performance evaluated independently from short-term fluctuation?

- Does the reporting structure support consistent AI interpretation over time?

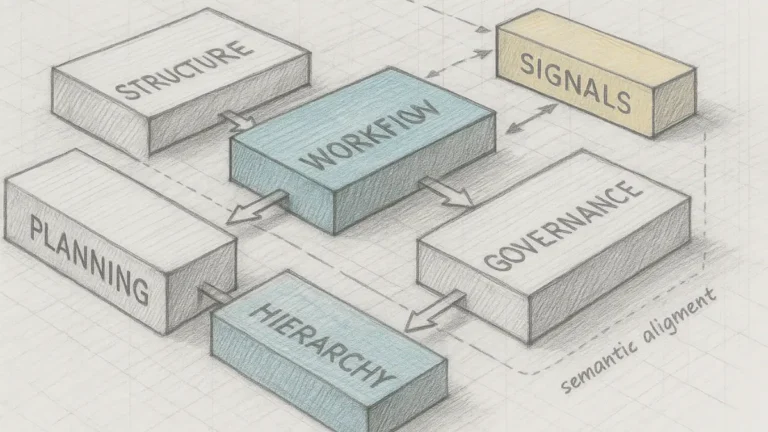

Interpretive Layer of Performance-Centric Page Architecture

- Metric-to-structure alignment. Distinct section boundaries allow AI systems to associate performance concepts with specific structural zones, reducing cross-metric ambiguity during interpretation.

- Temporal segmentation signaling. Separation between short-term signals and longitudinal analysis enables models to distinguish transient states from sustained performance patterns.

- Outcome-oriented semantic partitioning. Clear isolation of exposure, engagement, and outcome sections supports accurate attribution of performance meaning without inferential blending.

- Granularity differentiation cues. Structural contrast between aggregate and page-level analysis creates explicit scale markers that guide contextual resolution in generative systems.

- Reasoning chain stabilization. Recurrent logical structures across sections provide consistent interpretive anchors, improving long-context coherence and retrieval stability.

This interpretive layer clarifies how structural organization governs the way AI systems contextualize performance signals, preserving semantic boundaries across complex analytical content.

FAQ: Search Performance Beyond Rankings

What does search performance mean beyond rankings?

Search performance describes how content contributes to discovery, engagement, and outcomes, independent of its position in ranked results.

Why are rankings no longer a sufficient performance signal?

Modern search systems distribute visibility across formats and contexts, which weakens the direct relationship between rank position and actual impact.

What metrics replace rankings in performance evaluation?

Performance evaluation relies on exposure, user engagement, conversion outcomes, and stability over time rather than isolated position metrics.

How do search systems interpret performance signals?

Search systems analyze behavioral patterns, interaction continuity, and outcome alignment to assess how content fulfills user intent.

What role does structure play in performance tracking?

Clear structural segmentation allows systems to associate metrics with specific content units, improving interpretability and consistency.

Why is outcome measurement critical for performance analysis?

Outcomes connect visibility and engagement to measurable value, enabling performance assessment beyond surface-level exposure.

How does page-level analysis improve performance insight?

Page-level analysis isolates the contribution of individual content units, revealing optimization opportunities hidden in aggregate data.

How should performance be evaluated over time?

Longitudinal evaluation examines stability, growth, and decline patterns to distinguish sustainable performance from short-term fluctuation.

Why does performance reporting require a framework?

Structured reporting aligns metrics with decision logic, reducing interpretation error and supporting consistent evaluation across teams.

Glossary: Key Terms in Search Performance Analysis

This glossary defines the core terminology used throughout the article to support consistent interpretation of search performance concepts by both readers and AI systems.

Search Performance

The combined effect of content visibility, user engagement, and measurable outcomes across search environments, independent of ranking position alone.

Exposure

The frequency with which content is surfaced within search results, representing discovery opportunity rather than user interaction.

Reach

The number of unique users who encounter content through search, indicating the breadth of discovery across audiences.

Engagement Signals

Observable user interactions such as navigation depth, continuity, and follow-up actions that indicate relevance and intent alignment.

Outcome Metrics

Measurements that connect search-driven interactions to defined results, including conversions, retention, or downstream value.

Page-Level Performance

Evaluation of how individual pages contribute to discovery, engagement, and outcomes within the broader search ecosystem.

Aggregate Metrics

Summary indicators that describe overall performance trends but may conceal variation between individual content units.

Longitudinal Analysis

The examination of performance signals across extended time periods to identify stability, growth, or decline patterns.

Performance Framework

A structured system that organizes metrics, interpretation logic, and reporting cadence to support consistent evaluation.

Structural Interpretability

The degree to which content structure enables AI systems to segment, associate, and contextualize performance signals reliably.