Last Updated on January 11, 2026 by PostUpgrade

Competitor Analysis for Generative Visibility

Search visibility has shifted from ranking-based exposure toward AI-mediated selection, where large language models assemble answers instead of listing links. In this environment, generative visibility competitors no longer rely on result positions but gain exposure through repeated reuse inside AI-generated outputs. Competitive dynamics now depend on how systems interpret structure, consistency, and factual alignment.

This article explains how competitor analysis functions under generative visibility conditions. Analysts evaluating generative visibility competitors quickly see that traditional comparison methods based on traffic, keywords, and rankings fail to reflect AI-mediated exposure. Instead, retrievability, semantic stability, and cross-model reuse determine competitive presence.

The analysis covers the full competitive environment surrounding generative visibility. It examines market structures, platform and vendor categories, comparative positioning, capability differentiation, enterprise adoption patterns, evaluation logic, and market maturity. Together, these dimensions form a coherent framework for understanding competition in AI-driven discovery systems.

Defining the Generative Visibility Competitive Landscape

The generative visibility competitive landscape reflects how AI systems determine which sources they reuse when producing answers across conversational interfaces and contextual panels. Research from the Stanford Natural Language Processing Group (Stanford NLP) demonstrates that large language models rely on internal representations that prioritize semantic stability, structural clarity, and retrievability over surface popularity. As a result, competitive boundaries emerge around reuse probability rather than ranking position.

This landscape differs from traditional digital competition because AI systems act as intermediaries that actively select, compress, and recombine information. Consequently, market boundaries form where models consistently recognize and recall the same sources across multiple contexts. Competitive analysis therefore begins with understanding how AI systems classify participants.

Definition: AI understanding refers to the ability of generative systems to recognize, segment, and reuse competitive information based on structural clarity, stable terminology, and explicit reasoning boundaries rather than surface popularity signals.

Generative visibility competitors: entities whose content or systems AI models repeatedly reuse as authoritative sources during answer generation and contextual synthesis.

Claim: Generative visibility competitors emerge through AI reuse patterns rather than traffic-based performance indicators.

Rationale: AI-driven systems favor sources that provide stable meaning, consistent structure, and high retrievability across contexts.

Mechanism: Language models elevate sources that align with internal knowledge graphs and predictable reasoning templates, which increases reuse probability over time.

Counterargument: In low-competition or emerging domains, established brands can still dominate exposure due to historical prominence.

Conclusion: Competitive landscapes in generative visibility form through structural signals that guide long-term AI reuse decisions.

Competitive Roles Within the Landscape

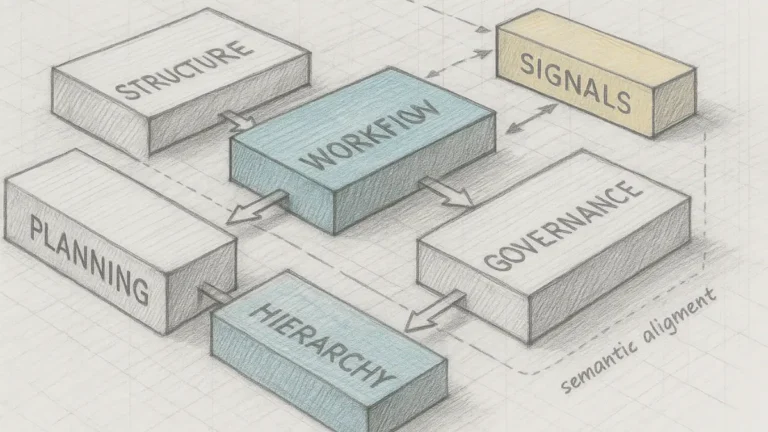

AI-mediated ecosystems contain distinct participant roles that differ by function and signal output. Platforms typically coordinate generative workflows and determine how information flows between models and interfaces. Vendors focus on tools that shape content structure, metadata, and reasoning consistency, while infrastructure providers supply the computational and data foundations that support large-scale inference.

These roles interact continuously because AI systems evaluate composite signals across layers rather than isolated outputs. When roles align, models detect coherence and reward reuse. When roles conflict or blur, reuse probability declines even if individual components perform well.

From an operational standpoint, organizations strengthen their competitive position by clarifying their role within the ecosystem. Clear role definition helps AI systems classify sources correctly and recall them consistently.

Structural Signals That Define Competition

AI systems detect competition through structural signals embedded in content and system outputs. These signals include consistent definitions, predictable reasoning flows, and stable terminology across documents and contexts. When signals remain consistent, models treat sources as reliable candidates for reuse.

Structural signals matter because generative systems compress information during inference. Compression favors sources that preserve meaning under transformation and reduce ambiguity. As a result, competitors differentiate themselves through structure rather than scale.

Teams influence competitive standing by maintaining semantic discipline across all AI-facing outputs. This discipline increases the likelihood that models recognize, store, and reuse their content.
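Semantic discipline can be approximated with a simple audit: for each preferred term, measure how often documents use it instead of a competing variant. The sketch below is a minimal illustration under stated assumptions: the glossary entries, the variant lists, and the `terminology_consistency` function are hypothetical, and whole-phrase regular-expression matching stands in for real term extraction.

```python
import re

# Hypothetical glossary: preferred term -> variants that dilute terminology.
GLOSSARY = {
    "generative visibility": ["ai visibility", "llm visibility"],
    "reuse probability": ["reuse likelihood", "citation chance"],
}


def count_phrase(text: str, phrase: str) -> int:
    """Count whole-phrase, case-insensitive occurrences of `phrase` in `text`."""
    pattern = r"\b" + re.escape(phrase) + r"\b"
    return len(re.findall(pattern, text, flags=re.IGNORECASE))


def terminology_consistency(documents: list[str], glossary: dict[str, list[str]]) -> dict[str, float]:
    """Return, per preferred term, the share of mentions that use the preferred form.

    1.0 means every mention used the preferred term; lower values indicate
    drift toward variants. Terms that are never mentioned score 1.0.
    """
    scores = {}
    for preferred, variants in glossary.items():
        preferred_hits = sum(count_phrase(doc, preferred) for doc in documents)
        variant_hits = sum(count_phrase(doc, v) for doc in documents for v in variants)
        total = preferred_hits + variant_hits
        scores[preferred] = preferred_hits / total if total else 1.0
    return scores
```

With the sample documents `"Generative visibility depends on reuse probability."` and `"Our AI visibility tooling raises reuse probability."`, the score for "generative visibility" is 0.5 because one of two mentions uses a variant, while "reuse probability" scores 1.0.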

| Competitor Type | Core Function | AI Exposure Role | Competitive Signal |
|---|---|---|---|
| Platform | Orchestrates generative workflows | Coordinates reuse across models | Structural consistency |
| Vendor | Provides AI-facing tools | Shapes content and metadata | Signal clarity |
| Infrastructure | Supplies foundational systems | Enables large-scale processing | Stability and reliability |
| Analytics Layer | Interprets AI signals | Guides optimization decisions | Measurement accuracy |

The table highlights how different participant types contribute to generative visibility outcomes. Each role emits a distinct signal that AI systems evaluate during answer construction.

Ecosystem Boundaries and Interaction

Competitive boundaries emerge where reuse probability concentrates among a limited set of sources. As models repeatedly select the same participants, ecosystems narrow and stabilize. This dynamic explains why generative visibility markets often consolidate instead of expanding.

Interaction across roles reinforces consolidation over time. Platforms favor vendors and infrastructure that maintain predictable outputs, while analytics layers refine how signals are interpreted. Together, these interactions shape durable competitive boundaries.

In practice, AI systems remember sources that behave consistently across contexts. Over time, those sources define the competitive landscape, while inconsistent participants gradually lose generative exposure.
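Consolidation of this kind becomes measurable if a team logs which sources appear in sampled AI answers. A minimal sketch, assuming such a citation log exists (the source names below are placeholders): it computes each source's reuse share and the Herfindahl-Hirschman index (HHI), a standard concentration measure equal to the sum of squared shares, which approaches 1.0 as reuse concentrates in a single source.

```python
from collections import Counter


def reuse_shares(citations: list[str]) -> dict[str, float]:
    """Share of all observed citations attributed to each source."""
    counts = Counter(citations)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {source: n / total for source, n in counts.items()}


def herfindahl_index(shares: dict[str, float]) -> float:
    """Sum of squared shares: 1/N for an even split across N sources, 1.0 for one source."""
    return sum(s * s for s in shares.values())


# Hypothetical sample: the source cited in each sampled AI answer.
observed = ["site-a", "site-a", "site-b", "site-a", "site-c", "site-a"]
print(round(herfindahl_index(reuse_shares(observed)), 3))  # → 0.5
```

Tracking the index across successive sampling periods, rather than reading a single snapshot, is what would reveal whether an ecosystem is narrowing.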

Mapping Market Players and Vendor Categories

The structure of generative visibility market players reflects how AI systems differentiate roles based on functional contribution rather than brand positioning. According to the analytical frameworks described in the OECD Digital Economy Outlook, AI-mediated markets increasingly organize around system roles that emit distinct machine-readable signals. As a result, accurate competitor analysis depends on clear categorical separation.

Market clarity becomes critical because AI systems do not infer intent or positioning implicitly. Instead, they classify participants based on observable outputs, structural behavior, and consistency across contexts. Therefore, taxonomy acts as a prerequisite for any reliable competitive assessment.

Generative visibility solution providers: organizations that deliver systems, platforms, or tooling designed to influence AI-mediated exposure by shaping how content, structure, and signals are interpreted by generative models.

Claim: Generative visibility markets consist of functionally distinct participant categories.

Rationale: AI systems process infrastructure, analytics, and content layers through different interpretive pathways.

Mechanism: Each category emits unique machine-readable signals that models evaluate independently during reuse decisions.

Counterargument: Hybrid vendors may operate across categories, which can blur classification boundaries.

Conclusion: A clear taxonomy improves competitive analysis accuracy by aligning evaluation with AI interpretation logic.

Primary Categories of Market Participants

Generative visibility ecosystems separate participants by the role they play in the AI exposure chain. Platforms typically coordinate generative workflows and control how multiple signals converge during answer generation. Vendors focus on tooling that shapes structure, metadata, and reasoning consistency at the content or system level.

Infrastructure providers supply the computational and data foundations that enable inference at scale. Analytics layers interpret AI-facing signals and translate them into actionable insights for optimization and governance. Each category influences visibility through a different mechanism.

Because AI systems evaluate signals holistically, confusion between categories weakens reuse probability. Clear separation allows models to classify sources consistently and recall them across contexts.

In practice, organizations strengthen their market position when they align capabilities with a single dominant role. Mixed signals reduce interpretability and complicate competitive evaluation.

Functional Signals Emitted by Each Category

Each market category emits signals that AI systems detect and weigh differently. Platforms emit orchestration signals that reflect consistency and integration across workflows. Vendors emit structural signals that affect how content and metadata align with model expectations.

Infrastructure providers emit stability signals tied to reliability, latency, and data integrity. Analytics layers emit interpretive signals that guide decision-making and optimization logic. AI systems aggregate these signals when determining reuse eligibility.

Signal clarity matters because generative models compress information during inference. Compression favors sources with unambiguous functional identity. Therefore, participants that emit clean, category-aligned signals gain a competitive advantage.

Operational teams can audit their outputs to ensure that signals reinforce, rather than contradict, their intended role. This alignment improves long-term generative exposure.

Market Player Taxonomy

| Category | Description | Typical Capabilities | Market Role |
|---|---|---|---|
| Platform | Orchestrates generative workflows | Workflow coordination, governance | Exposure control |
| Vendor | Delivers AI-facing tools | Structure, metadata, reasoning | Signal shaping |
| Infrastructure | Provides foundational systems | Compute, data pipelines | Inference enablement |
| Analytics Layer | Interprets AI signals | Measurement, evaluation | Decision support |

The taxonomy clarifies how each category contributes to generative visibility outcomes. By mapping roles to capabilities and signals, competitive analysis aligns with how AI systems actually interpret markets.

Reducing Category Confusion in Competitive Analysis

Category confusion often arises when vendors market capabilities outside their functional role. AI systems do not resolve this ambiguity gracefully. Instead, they reduce reuse probability when signals conflict or overlap excessively.

Clear taxonomy mitigates this risk by anchoring evaluation to observable behavior rather than claims. Analysts who classify competitors by emitted signals achieve more accurate comparisons and forecasts.

Simply put, AI systems recognize participants by what they consistently do, not by how they describe themselves. When generative visibility competitors align their role, capabilities, and signals, models recall and reuse them more reliably across contexts. Over time, this consistency determines which generative visibility competitors remain visible and which ones gradually lose exposure.
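Classifying competitors by observed behavior rather than self-description can be sketched as a rule: assign the category whose signals dominate a participant's observed outputs, and flag a hybrid when the runner-up category also carries a large share. The signal counts, the `classify_by_signals` function, and the 0.35 hybrid threshold are hypothetical choices for illustration, not an established method.

```python
from dataclasses import dataclass

CATEGORIES = ("platform", "vendor", "infrastructure", "analytics")


@dataclass
class Classification:
    category: str
    share: float
    hybrid: bool


def classify_by_signals(signal_counts: dict[str, int], hybrid_threshold: float = 0.35) -> Classification:
    """Pick the dominant signal category; flag hybrids whose runner-up share is large.

    `signal_counts` maps a category name to the number of observed outputs
    (pages, API responses, releases) that emit that category's signal.
    The threshold is a hypothetical tuning parameter.
    """
    total = sum(signal_counts.get(c, 0) for c in CATEGORIES)
    if total == 0:
        raise ValueError("no observed signals to classify")
    # Rank categories by observed signal volume (ties keep CATEGORIES order).
    ranked = sorted(CATEGORIES, key=lambda c: signal_counts.get(c, 0), reverse=True)
    top, runner_up = ranked[0], ranked[1]
    top_share = signal_counts.get(top, 0) / total
    runner_share = signal_counts.get(runner_up, 0) / total
    return Classification(top, top_share, runner_share >= hybrid_threshold)
```

For example, `{"vendor": 12, "analytics": 3}` classifies as a vendor with a 0.8 share and no hybrid flag, whereas `{"vendor": 6, "analytics": 5}` is flagged as hybrid because analytics accounts for roughly 45% of observed signals.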

Comparative Positioning of Generative Visibility Platforms

Comparative positioning within generative systems depends on how AI assigns relative interpretive priority to platforms during answer construction and synthesis. Research from MIT CSAIL demonstrates that large language models consistently favor sources that minimize ambiguity and preserve meaning under transformation. As a result, platform competition shifts from declared capability sets toward observable interpretability signals.

This shift replaces marketing-based comparison with structural evaluation. Platforms gain or lose visibility based on how reliably AI systems reuse their outputs across contexts. Consequently, relative positioning emerges from measurable patterns of reuse rather than from feature breadth.

Competitive positioning: the relative priority that AI systems assign to platforms based on their ability to deliver stable, reusable meaning across multiple contexts.

Claim: Comparative positioning depends on interpretability, not feature breadth.

Rationale: AI systems reuse content that fits stable reasoning patterns and reduces inference ambiguity.

Mechanism: Platforms that produce predictable outputs with consistent structure gain higher reuse probability across models.

Counterargument: Broad platforms may appear more often in exploratory or low-precision queries.

Conclusion: Structural clarity defines comparative advantage in generative visibility.

Principle: Competitive positioning in generative environments stabilizes when platform structure, definitions, and output patterns remain consistent enough for AI systems to assign reliable interpretive priority.

Interpretability as the Primary Positioning Axis

Interpretability determines how efficiently AI systems can extract, compress, and reuse platform outputs. Platforms that maintain clear structure, stable terminology, and consistent definitions allow models to preserve meaning during inference. This consistency directly increases interpretive priority.

By contrast, platforms that emphasize feature expansion without structural discipline introduce variance. Each variation forces models to resolve additional ambiguity. Over time, this cost reduces reuse frequency.

From a competitive standpoint, interpretability becomes the dominant axis of positioning. Platforms that compete effectively therefore align their strategy around model comprehension rather than user-facing differentiation.

At a basic level, AI systems favor platforms that behave the same way in every context.

Output Stability and Reuse Probability

Output stability reflects how consistently a platform delivers the same meaning across use cases. Stable outputs allow AI systems to recognize patterns quickly and recall sources without recalculating intent. This stability increases reuse probability.

When platforms vary structure or terminology, models must re-evaluate meaning repeatedly. That effort lowers recall likelihood. In contrast, platforms that preserve output stability become default references within internal knowledge graphs.

Stability also supports cross-model convergence. When different models encounter equivalent outputs, they reinforce the same source.

In practical terms, platforms that avoid semantic drift gain lasting visibility.
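Drift between successive versions of the same definition can be screened cheaply. A minimal sketch: it uses token-level Jaccard similarity as a crude proxy for meaning (an assumption; embedding-based similarity would handle paraphrase better), and the function names and the 0.6 alert threshold are hypothetical.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercased word tokens; a deliberately crude proxy for meaning."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: str, b: str) -> float:
    """Size of token intersection over size of token union; 1.0 means identical vocabulary."""
    ta, tb = tokens(a), tokens(b)
    if not ta and not tb:
        return 1.0
    return len(ta & tb) / len(ta | tb)


def drift_report(versions: list[str], threshold: float = 0.6) -> list[tuple[int, float, bool]]:
    """For each consecutive pair of versions, return (index, similarity, drifted?)."""
    report = []
    for i in range(1, len(versions)):
        sim = jaccard(versions[i - 1], versions[i])
        report.append((i, sim, sim < threshold))
    return report
```

A low score only signals that wording changed substantially; a reviewer still has to decide whether the meaning actually drifted.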

Governance as a Competitive Signal

Governance influences positioning by controlling how changes propagate through platform outputs. Strong governance enforces semantic boundaries, version stability, and controlled updates. These mechanisms reduce interpretive variance.

AI systems respond indirectly to governance quality. They observe that governed platforms maintain meaning over time. This persistence increases interpretive trust and reuse priority.

Weak governance accelerates drift, even when features remain strong. Therefore, governance acts as a silent but decisive competitive signal.

Simply put, platforms that manage change carefully remain visible longer.

| Dimension | Platform A | Platform B | Platform C |
|---|---|---|---|
| Structural Clarity | High structural consistency across outputs | Moderate structure with partial variance | Low consistency with frequent format shifts |
| Model Compatibility | Designed for cross-model reuse | Optimized for a limited model set | Tightly coupled to a single model |
| Output Stability | Stable meaning across contexts | Meaning varies by use case | Meaning drifts over time |
| Governance Support | Strong version and change control | Basic governance mechanisms | Minimal governance controls |

The table illustrates how positioning differences emerge from AI-relevant dimensions rather than from advertised features. Each column represents a typical positioning pattern that AI systems interpret differently during reuse.

Positioning Outcomes Across Platforms

Platforms that align interpretability, stability, and governance receive higher priority during generative reuse. Repeated selection reinforces their position as default sources within model reasoning paths. Over time, this reinforcement produces durable competitive advantage.

Platforms that rely on breadth without constraint may surface initially but lose priority as models refine preferences. Comparative positioning therefore converges toward fewer, more interpretable systems.

In summary, generative visibility competitors compete on how clearly AI systems can understand them. The most interpretable platforms occupy the strongest positions.

Capability and Feature Differentiation Across Competitors

Capability differentiation in generative systems reflects how deeply a platform’s functions influence AI comprehension rather than how many features it exposes. Empirical work from the Carnegie Mellon University Language Technologies Institute (LTI) shows that language models reward systems that reduce interpretive effort through stable structure and predictable outputs. As a result, generative visibility capability comparison focuses on functional depth that directly affects reuse probability.

This perspective reframes how competitors evaluate their offerings. Feature sets only matter when they alter how models parse, store, and recall information. Therefore, meaningful differentiation emerges where capabilities shape AI-facing behavior instead of expanding surface functionality.

Capability differentiation: variation in AI-relevant functional depth that changes how generative models interpret, compress, and reuse outputs across contexts.

Claim: Capabilities matter only if they affect AI comprehension.

Rationale: Features that AI systems do not detect or reuse have no impact on generative visibility outcomes.

Mechanism: Generative models privilege predictable, structured outputs that minimize ambiguity during inference.

Counterargument: Advanced features may still matter in closed enterprise environments with controlled model usage.

Conclusion: Capability evaluation must prioritize measurable AI impact over feature quantity.

AI-Relevant Capabilities Versus Surface Features

AI-relevant capabilities directly influence how models interpret and reuse outputs. These capabilities include structural consistency, controlled terminology, and reasoning transparency. When platforms embed these elements, AI systems reduce inference cost and increase reuse frequency.

Surface features, by contrast, often target user experience without affecting model behavior. Dashboards, customization layers, or optional modules may improve human workflows but remain invisible to AI systems. Consequently, they do not alter generative visibility.

This distinction explains why some competitors with fewer features outperform richer platforms in AI-mediated exposure. AI relevance, not feature volume, determines differentiation.

At a basic level, models care about how information behaves, not about how many options surround it.

Predictability as a Capability Multiplier

Predictability multiplies the effect of core capabilities by stabilizing outputs across contexts. When platforms enforce predictable structure and logic, AI systems recognize patterns faster and recall sources more reliably. This consistency amplifies reuse influence.

Unpredictable behavior dilutes capability impact. Even strong features lose value when outputs vary unpredictably. Over time, models deprioritize such sources to reduce interpretive risk.

Predictability therefore acts as a multiplier rather than a standalone feature. It determines whether capabilities translate into sustained visibility.

In practical terms, platforms that constrain variability gain more from each capability they deploy.

Example: When two competitors offer similar features, AI systems tend to reuse the one whose capabilities produce more predictable structure and terminology, even if the alternative provides broader functionality.

Enterprise Context and Conditional Capability Value

Enterprise environments sometimes alter capability relevance. Closed systems with fixed models can leverage advanced features that tailor outputs to specific workflows. In these cases, capabilities that optimize internal efficiency may still matter.

However, these conditions remain limited to controlled contexts. Once outputs enter broader generative ecosystems, AI-facing relevance dominates again. Capabilities that do not generalize across models lose influence.

Thus, enterprise relevance modifies but does not replace AI impact criteria. Capability differentiation still centers on how models interpret outputs.

Simply put, enterprise features matter when systems control the AI. Outside that scope, AI decides what counts.

| Capability | AI Impact Level | Reuse Influence | Enterprise Relevance |
|---|---|---|---|
| Structural consistency | High | Strong increase in reuse probability | Critical for governance |
| Terminology control | High | Improves recall accuracy | Essential for compliance |
| Reasoning transparency | Medium | Supports inference stability | Valuable for audits |
| User interface customization | Low | No direct reuse effect | Improves internal workflows |
| Advanced analytics dashboards | Low | Indirect influence only | Helpful for decision support |

The matrix distinguishes capabilities by how directly they affect AI interpretation. High-impact capabilities shape generative visibility, while low-impact features mainly serve human users.

Differentiation Outcomes Across Competitors

Competitors that invest in AI-relevant capabilities achieve clearer differentiation over time. Models consistently select their outputs because they reduce ambiguity and preserve meaning. This selection reinforces their position within generative ecosystems.

Competitors that prioritize surface features without structural discipline experience diminishing returns. Their feature sets expand, but reuse probability stagnates or declines. Capability differentiation therefore aligns closely with long-term visibility outcomes.

In summary, generative visibility competitors differentiate themselves by how effectively their capabilities shape AI understanding. The deepest impact comes from functions that models can detect, trust, and reuse consistently.

Enterprise Adoption and Platform Selection Dynamics

Enterprise adoption of generative systems depends on how organizations control risk, meaning stability, and long-term operability across AI-mediated channels. Guidance from the NIST AI Risk Management Framework emphasizes governance, accountability, and lifecycle control as prerequisites for reliable AI-facing systems, which directly shapes how enterprises select platforms. Consequently, generative visibility enterprise platforms gain adoption when they demonstrate durability under change rather than rapid feature expansion.

Selection dynamics differ from growth-stage markets because enterprises operate under compliance, auditability, and cross-team coordination constraints. These constraints elevate operational discipline over speed and narrow the field to platforms that sustain consistent meaning across time and models.

Enterprise generative visibility platforms: governed systems designed to produce and maintain large-scale AI-facing content with controlled change, stable terminology, and auditable processes.

Claim: Enterprises prioritize governance over speed.

Rationale: AI-facing systems require semantic consistency over time to remain reusable and trustworthy.

Mechanism: Governed workflows constrain change, enforce standards, and reduce interpretive drift across models and updates.

Counterargument: Startups may favor rapid iteration to explore product-market fit.

Conclusion: Enterprise adoption favors controlled platforms that preserve meaning under continuous operation.

Governance as the Primary Adoption Driver

Governance determines whether a platform can sustain AI-facing outputs without accumulating risk. Enterprises require controls that manage versioning, approvals, and semantic boundaries because AI systems reuse content repeatedly and amplify inconsistencies. Platforms that embed governance reduce exposure to unintended meaning shifts.

Governance also supports accountability across teams. When content, data, and system changes follow defined processes, organizations can trace outcomes and correct issues before models internalize errors. This traceability increases confidence in long-term adoption.

From a practical perspective, governance signals reliability to AI systems as well. Consistent outputs across updates improve recall stability and reuse frequency.

At a basic level, enterprises choose platforms that do not change behavior unpredictably.

Operational Stability and Semantic Durability

Operational stability measures a platform’s ability to maintain consistent outputs as scale increases. Enterprises operate across departments, regions, and content types, which stresses systems that lack standardization. Stable platforms absorb this complexity without degrading meaning.

Semantic durability extends stability into the AI layer. When platforms preserve definitions and reasoning across time, models continue to recognize and reuse outputs without re-evaluation. This durability protects generative visibility during organizational growth.

Unstable platforms introduce drift that compounds with each update. Over time, this drift erodes both human trust and AI reuse probability.

In simple terms, enterprises adopt platforms that behave the same way tomorrow as they do today.

Risk Management and Controlled Change

Risk management shapes adoption by defining acceptable change velocity. Enterprises accept change when controls exist to validate impact on AI-facing outputs. Platforms that integrate risk assessment into workflows align with enterprise expectations.

Controlled change limits semantic breakage during updates. When platforms enforce backward compatibility and staged releases, AI systems encounter fewer disruptions. This continuity supports sustained reuse.

Platforms that lack risk controls force enterprises to compensate with manual oversight. That overhead discourages adoption at scale.

Simply put, enterprises prefer platforms that make change safe.
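Controlled change can be enforced at the level of definitions: a proposed glossary update is compared with the current version, new terms pass, and edits or removals of existing terms are blocked unless explicitly approved. The glossary format, the approval set, and `review_glossary_change` are hypothetical stand-ins for whatever review workflow a platform actually uses.

```python
from dataclasses import dataclass, field


@dataclass
class ChangeReview:
    added: list[str] = field(default_factory=list)
    changed: list[str] = field(default_factory=list)
    removed: list[str] = field(default_factory=list)
    blocked: list[str] = field(default_factory=list)

    @property
    def allowed(self) -> bool:
        """The update may ship only if no breaking change lacks approval."""
        return not self.blocked


def review_glossary_change(current: dict[str, str], proposed: dict[str, str], approved: set[str]) -> ChangeReview:
    """Classify term-level changes and block changed or removed terms lacking approval.

    New terms pass freely; edits to or removals of existing definitions are
    treated as breaking changes that require explicit approval.
    """
    review = ChangeReview()
    review.added = sorted(proposed.keys() - current.keys())
    review.changed = sorted(t for t in current.keys() & proposed.keys() if current[t] != proposed[t])
    review.removed = sorted(current.keys() - proposed.keys())
    review.blocked = [t for t in review.changed + review.removed if t not in approved]
    return review
```

Treating edits to existing definitions as breaking changes mirrors backward compatibility in software versioning: additions are safe, while changes to meaning that AI systems may already have absorbed go through review.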

| Enterprise Criterion | Why It Matters | Generative Visibility Effect |
|---|---|---|
| Governance controls | Prevents uncontrolled semantic change | Preserves AI reuse stability |
| Version management | Enables rollback and auditability | Maintains recall consistency |
| Terminology standards | Reduces ambiguity across teams | Improves model interpretation |
| Approval workflows | Enforces accountability | Limits drift during updates |
| Scalability safeguards | Supports growth without variance | Sustains long-term visibility |

The criteria illustrate how enterprise priorities translate into AI-facing outcomes. Each criterion strengthens the conditions under which models reliably reuse content.

Adoption Patterns Across Organizations

Large organizations converge on similar adoption patterns despite industry differences. They favor platforms that integrate governance, stability, and risk controls from the outset. Over time, these platforms become embedded infrastructure rather than replaceable tools.

Organizations that experiment with rapid tools often migrate once scale exposes limitations. The transition reflects a shift from exploration to endurance. Adoption dynamics therefore reward platforms designed for longevity.

In summary, generative visibility competitors succeed in enterprise markets by aligning platform behavior with governance-first selection logic. Controlled systems earn adoption because they protect meaning as AI systems learn and reuse at scale.

AI System Alignment and Model Compatibility

Alignment between platforms and AI systems determines whether outputs remain reusable as models evolve, diversify, and change deployment contexts. Findings summarized across OpenAI research publications show that models consistently favor sources that reduce inference cost through structural regularity and unambiguous representation. For this reason, generative visibility AI platforms gain durable exposure when they maintain compatibility across multiple model architectures rather than optimizing narrowly for a single system.

Model compatibility reframes competition away from short-term performance tuning. Instead of asking how well a platform performs for one model today, alignment focuses on whether outputs remain interpretable as models update weights, context handling, and reasoning depth. This distinction directly affects how generative visibility competitors preserve relevance over time.

Model compatibility: the ability of a system or content output to be reused consistently across different large language model architectures without structural or semantic degradation.

Claim: Model-aligned platforms achieve broader visibility.

Rationale: LLMs preferentially reuse content that imposes low inference cost and minimal ambiguity.

Mechanism: Aligned structures constrain variability, allowing models to compress and recall meaning reliably across contexts.

Counterargument: Single-model optimization can outperform alignment in short-term or tightly controlled deployments.

Conclusion: Cross-model alignment provides durability as generative systems evolve.

Structural Alignment as a Compatibility Baseline

Structural alignment establishes a shared format that multiple models can interpret without additional adaptation. When platforms maintain consistent sectioning, definitions, and reasoning flow, models identify patterns quickly and reuse outputs more frequently. This alignment reduces the need for model-specific adjustments.

In contrast, outputs tailored to a single model often encode assumptions about context length, reasoning depth, or formatting preferences. These assumptions break when models change, which weakens recall. As a result, generative visibility competitors that rely on narrow optimization face declining reuse as architectures evolve.

At a basic level, models continue to reuse sources that look familiar and stable across generations.
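This article itself follows a repeatable structure: major sections pair a definition with a labeled Claim, Rationale, Mechanism, Counterargument, and Conclusion sequence. A minimal sketch of a check that keeps such a template intact, assuming plain-text sections where each block starts with its label followed by a colon; the `template_issues` function and the rule that every label appears exactly once, in order, are illustrative assumptions.

```python
# Labels from the article's reasoning template; treating them as a strict,
# ordered checklist is an illustrative assumption.
TEMPLATE = ("Claim", "Rationale", "Mechanism", "Counterargument", "Conclusion")


def template_issues(section_text: str, template: tuple[str, ...] = TEMPLATE) -> list[str]:
    """Report missing, duplicated, or out-of-order template labels in a section."""
    # Collect labels from lines shaped like "Label: text", ignoring other lines.
    found = []
    for line in section_text.splitlines():
        label, sep, _ = line.strip().partition(":")
        if sep and label in template:
            found.append(label)

    issues = []
    for label in template:
        count = found.count(label)
        if count == 0:
            issues.append(f"missing: {label}")
        elif count > 1:
            issues.append(f"duplicated: {label}")

    # Order is only checked when each label appears at most once.
    expected_order = [label for label in template if label in found]
    if len(found) == len(set(found)) and found != expected_order:
        issues.append("out of order")
    return issues
```

An empty result means the section preserves the template; any reported issue points to the block a writer should restore or reorder.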

Semantic Consistency Across Model Architectures

Semantic consistency ensures that meaning remains intact when different models process the same output. Even when architectures vary, consistent terminology and scoped definitions allow models to converge on the same interpretation. This convergence strengthens recall and reuse probability.

Semantic drift introduces incompatibility. When meaning shifts subtly across updates or contexts, models must resolve conflicts repeatedly. Over time, they deprioritize such sources to reduce uncertainty, which directly affects generative visibility competitors that fail to enforce boundaries.

Simply put, models reuse content they can interpret the same way every time.

Trade-offs Between Optimization and Alignment

Optimization targets specific model behaviors to maximize immediate performance, while alignment targets generality to preserve reuse across systems. These goals often conflict because optimization embeds assumptions that do not generalize.

Short-term optimization can increase visibility within a closed environment. However, it creates fragility once models change or diversify. Alignment sacrifices peak performance to gain resilience, which benefits generative visibility competitors operating in open, evolving ecosystems.

In practice, alignment favors endurance over short-lived spikes.

| Platform Type | LLM Compatibility | Cross-Model Reuse | Limitation |
|---|---|---|---|
| Structurally governed platform | High across major architectures | Strong and persistent | Slower adaptation to model-specific features |
| Lightly structured platform | Moderate with related models | Inconsistent reuse | Susceptible to semantic drift |
| Model-tuned system | High for one model | Low outside target model | Fragile under model change |
| Ad hoc content system | Low | Minimal reuse | Unpredictable interpretation |

The comparison summarizes how compatibility relates to reuse durability. Platforms aligned structurally and semantically tend to outperform narrowly optimized systems as models evolve.
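The "Cross-Model Reuse" column can be approximated by sampling answers from several models and counting how many of them cite each source. The sketch below assumes citations have already been extracted per model; the model names, domains, and the metric itself are hypothetical illustrations.

```python
def cross_model_reuse(citations_by_model: dict[str, set[str]]) -> dict[str, float]:
    """Share of sampled models that cited each source at least once.

    Hypothetical metric: 1.0 means every sampled model reused the source,
    while a value near 1 / len(models) suggests dependence on one model.
    """
    if not citations_by_model:
        return {}
    model_count = len(citations_by_model)
    all_sources = set().union(*citations_by_model.values())
    return {
        source: sum(source in cited for cited in citations_by_model.values()) / model_count
        for source in sorted(all_sources)
    }


sample = {
    "model_a": {"governed.example", "tuned.example"},
    "model_b": {"governed.example"},
    "model_c": {"governed.example", "adhoc.example"},
}
print(cross_model_reuse(sample))  # governed.example -> 1.0; the other two -> 1/3
```

A model-tuned source would score high in one model's sample and low in this cross-model ratio, which matches the fragility the table describes.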

Durability Under Model Evolution

Model evolution introduces changes in tokenization, reasoning depth, and context management. Aligned platforms absorb these changes without reengineering outputs. Their compatibility persists as models update.

Misaligned platforms require repeated remediation to regain exposure. Each cycle increases operational cost and weakens positioning. Over time, generative visibility competitors that invest in alignment maintain visibility, while others gradually lose recall.

In summary, generative visibility competitors strengthen their position by aligning with models as a class rather than with a single instance. Cross-model compatibility ensures that visibility endures as AI systems continue to evolve.

Vendor Evaluation and Decision Frameworks

Vendor evaluation in AI-mediated markets requires criteria that reflect how systems select, reuse, and prioritize sources during generation. Research synthesized by the Harvard Data Science Initiative emphasizes that data-driven decisions improve reliability when evaluation mirrors system behavior rather than perception-based rankings. Therefore, generative visibility vendor evaluation replaces scorecards with logic aligned to reuse probability and interpretability.

This shift reframes procurement and strategic assessment. Instead of comparing vendors by feature lists or brand recognition, organizations assess how vendor outputs behave inside generative systems. As a result, evaluation frameworks focus on structural and factual signals that models actually consume.

Claim: Vendor evaluation must mirror AI selection logic.

Rationale: Visibility depends on reuse probability rather than on declared quality or popularity.

Mechanism: Evaluation tracks structural consistency, factual stability, and interpretive clarity emitted by vendor systems.

Counterargument: Human trust signals, such as reputation and relationships, still influence procurement decisions.

Conclusion: AI-centric evaluation improves accuracy by aligning decisions with how visibility actually forms.

Evaluation Dimensions Aligned to AI Behavior

Effective evaluation frameworks begin by identifying dimensions that AI systems can detect and weigh. These dimensions include structural consistency, terminology control, and output stability across contexts. Vendors that perform well across these dimensions emit signals that models recognize and reuse.

Human-oriented metrics often fail because AI systems do not weigh intent, narrative, or reputation the way human evaluators do. They respond primarily to structure and factual consistency. Therefore, evaluation criteria must translate vendor behavior into machine-readable indicators.

Organizations that adopt this approach reduce mismatch between selection decisions and eventual visibility outcomes. Evaluation becomes predictive rather than retrospective.

In simple terms, vendors should be judged by how AI treats them, not by how they present themselves.

Signal Types and Their Decision Impact

Different evaluation dimensions correspond to different signal types. Structural signals influence how models parse and compress outputs. Factual signals influence trust and reuse frequency. Operational signals influence stability over time.

Decision impact emerges when these signals align. Vendors that emit consistent signals across dimensions create lower interpretive cost for models. This alignment increases reuse probability and long-term visibility.

When signals conflict, decision confidence drops. Even strong vendors lose evaluation strength if their outputs confuse models.

Practically, evaluation teams map each dimension to a measurable signal and track its impact over time.

Framework Application in Strategic Decisions

Strategic decisions require frameworks that scale beyond individual tools. Vendor evaluation must support portfolio decisions, long-term contracts, and ecosystem alignment. AI-centric frameworks provide this scalability.

By grounding evaluation in reuse behavior, organizations anticipate which vendors will remain visible as models evolve. This anticipation informs selection, consolidation, and replacement decisions.

Traditional rankings lack this foresight. Logical frameworks based on AI behavior offer durable guidance.

Put simply, strategy improves when evaluation predicts future visibility instead of explaining past performance.

| Evaluation Dimension | Signal Type | Decision Impact |
|---|---|---|
| Structural consistency | Machine-readable structure | Predicts reuse stability |
| Terminology control | Semantic signal | Improves recall accuracy |
| Factual stability | Knowledge reliability | Increases trust and reuse |
| Governance mechanisms | Operational signal | Reduces long-term drift |
| Output predictability | Behavioral signal | Supports durable selection |

The dimensions illustrate how evaluation criteria translate into AI-facing outcomes. Each dimension connects vendor behavior to a concrete decision effect.
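The mapping in the table can be sketched as a weighted score per vendor, plus a spread value that reflects the earlier point about conflicting signals lowering decision confidence. This is a hypothetical illustration: the 0 to 1 signal scale, equal default weights, and the use of population standard deviation as a "conflict" proxy are assumptions, not a prescribed method.

```python
from statistics import pstdev
from typing import Optional

# Dimension names mirror the evaluation table; the 0-1 signal scale is an assumption.
DIMENSIONS = (
    "structural_consistency",
    "terminology_control",
    "factual_stability",
    "governance_mechanisms",
    "output_predictability",
)


def evaluate_vendor(
    signals: dict[str, float], weights: Optional[dict[str, float]] = None
) -> tuple[float, float]:
    """Return (weighted score, signal spread) for one vendor.

    The spread (population standard deviation across dimensions) is a rough
    proxy for conflicting signals: a high spread means dimensions disagree.
    """
    weights = weights or {d: 1.0 for d in DIMENSIONS}
    missing = [d for d in DIMENSIONS if d not in signals]
    if missing:
        raise ValueError(f"missing signals: {missing}")
    total_weight = sum(weights[d] for d in DIMENSIONS)
    score = sum(weights[d] * signals[d] for d in DIMENSIONS) / total_weight
    spread = pstdev([signals[d] for d in DIMENSIONS])
    return score, spread


consistent = dict.fromkeys(DIMENSIONS, 0.8)
conflicting = {**consistent, "factual_stability": 0.2, "output_predictability": 1.0}
print(evaluate_vendor(consistent))
print(evaluate_vendor(conflicting))
```

Two vendors with similar average scores can then be separated by spread, which keeps the "signals conflict" case visible instead of averaging it away.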

From Evaluation to Selection

Evaluation frameworks guide selection by narrowing choices to vendors that align with AI logic. Selection then becomes a function of signal strength rather than preference. This shift reduces bias and increases consistency.

Over time, organizations that adopt AI-centric evaluation frameworks converge on similar vendor sets. These sets reflect what models repeatedly reuse, not what markets promote.

In summary, generative visibility competitors benefit from evaluation frameworks that reflect AI behavior. Vendors selected through this logic align more closely with how visibility actually emerges.

Market Maturity and Ecosystem Evolution

Market maturity in AI-mediated discovery emerges as systems repeatedly select a narrower set of sources that preserve meaning under reuse. Longitudinal research summarized by the Oxford Internet Institute indicates that platform ecosystems stabilize when algorithmic intermediaries converge on trusted inputs across contexts. Accordingly, generative visibility market maturity reflects consolidation driven by reuse behavior rather than by acquisition volume or market share.

This dynamic reshapes entry conditions and competitive expectations. As models refine internal preferences, ecosystems favor participants that sustain depth, consistency, and interpretability over time. Consequently, maturity expresses itself through predictable selection patterns and slower turnover.

Claim: Generative visibility markets mature through consolidation.

Rationale: AI systems converge on trusted sources that minimize ambiguity and inference cost.

Mechanism: Repeated reuse reinforces internal representations, which narrows the set of preferred participants.

Counterargument: New modalities or interaction formats can temporarily reset competitive dynamics.

Conclusion: Market maturity favors depth and reliability over breadth and diversity.

Consolidation Patterns in AI-Mediated Ecosystems

Consolidation occurs when AI systems repeatedly select the same participants across queries and contexts. Each reuse strengthens internal associations and increases future selection probability. Over time, this feedback loop concentrates visibility.
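The feedback loop can be illustrated with a toy model in which each round's selection share is proportional to the current share raised to a power `alpha`. This does not describe how any production model works; `alpha` is a hypothetical reinforcement strength, chosen only to show why repeated reuse concentrates visibility when it is self-reinforcing.

```python
def reinforce(shares: list[float], alpha: float = 1.5, rounds: int = 10) -> list[float]:
    """Toy feedback loop: next-round share is proportional to share ** alpha.

    alpha > 1 models self-reinforcing reuse (concentration grows);
    alpha == 1 leaves shares unchanged. Shares must be positive.
    """
    current = list(shares)
    for _ in range(rounds):
        weighted = [s ** alpha for s in current]
        total = sum(weighted)
        current = [w / total for w in weighted]  # renormalize to sum to 1
    return current


print([round(s, 3) for s in reinforce([0.40, 0.35, 0.25], alpha=1.5, rounds=3)])
```

With `alpha` above 1, a small initial lead compounds round after round, which is the concentration pattern described above. With `alpha` equal to 1, the initial distribution persists.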

Unlike traditional markets, consolidation does not require mergers or exclusive contracts. It arises from interpretive efficiency. Participants that reduce model uncertainty persist, while others gradually lose exposure.

This process accelerates as models scale. Larger systems amplify preference signals, which further stabilizes the ecosystem.

At a basic level, AI keeps returning to sources it already understands.

Barriers to Entry as Maturity Signals

As ecosystems mature, entry barriers increase because new participants must displace established internal representations. Models require repeated evidence to adjust preferences. Without sustained exposure, newcomers struggle to gain reuse.

Barriers also arise from terminology lock-in and structural expectations. Mature ecosystems develop implicit standards that newcomers must match. Deviations introduce ambiguity that models penalize.

These barriers protect incumbents but also raise quality thresholds. Only participants that demonstrate clear interpretability can enter mature ecosystems.

Simply put, it becomes harder to be noticed once AI has made up its mind.

Ecosystem Evolution and Modality Shifts

Ecosystem evolution continues even as maturity increases. New modalities such as multimodal inputs or agent-based interactions can alter selection logic. These shifts create brief windows of openness.

However, consolidation reappears quickly when new modalities stabilize. AI systems again converge on sources that adapt fastest while preserving structure. Depth and consistency regain importance.

Therefore, evolution modifies form but not principle. Maturity dynamics persist across technological change.

In simple terms, tools change, but AI preference formation remains stable.

| Indicator | Description | Strategic Implication |
|---|---|---|
| Source concentration | Reuse centers on fewer participants | Favor depth investment |
| Preference stability | Selection patterns change slowly | Plan long-term strategies |
| Entry resistance | New sources require sustained exposure | Raise quality thresholds |
| Structural standardization | Implicit norms emerge | Align outputs early |
| Modality sensitivity | Temporary openness during shifts | Prepare adaptive responses |

The indicators show how maturity translates into strategic constraints. Each signal informs how organizations should allocate resources over time.
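Two of these indicators lend themselves to simple measures. The sketch below assumes citation counts per source have already been collected for each period. It uses the Herfindahl-Hirschman index (sum of squared shares) for source concentration and the Jaccard overlap of top-cited sources for preference stability. Note that the antitrust convention expresses HHI on a 0 to 10,000 scale using percentage shares; this version uses fractions, so it runs from near 0 to 1.

```python
from collections import Counter


def concentration_hhi(citations: Counter) -> float:
    """Herfindahl-Hirschman index on a 0-1 scale: sum of squared citation shares.

    Values near 1.0 mean reuse centers on a single source.
    """
    total = sum(citations.values())
    if total == 0:
        return 0.0
    return sum((count / total) ** 2 for count in citations.values())


def top_k_stability(previous: Counter, current: Counter, k: int = 5) -> float:
    """Jaccard overlap of the top-k cited sources in two periods (1.0 = unchanged)."""
    before = {source for source, _ in previous.most_common(k)}
    after = {source for source, _ in current.most_common(k)}
    if not before and not after:
        return 1.0
    return len(before & after) / len(before | after)


q1 = Counter({"a.example": 40, "b.example": 30, "c.example": 20})
q2 = Counter({"a.example": 45, "b.example": 35, "d.example": 10})
print(concentration_hhi(q1), concentration_hhi(q2))
print(top_k_stability(q1, q2, k=3))  # 0.5: two of four distinct top sources persist
```

A rising HHI combined with high top-k stability over successive periods would be consistent with the consolidation pattern this section describes.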

Strategic Implications of Maturity

Mature ecosystems reward participants that invest in enduring structure rather than rapid experimentation. Visibility becomes cumulative and resistant to disruption. Strategies that prioritize consistency outperform those chasing novelty.

Organizations that recognize maturity signals adjust expectations accordingly. They shift from expansion to reinforcement and from acquisition to optimization. This shift preserves visibility as systems evolve.

In summary, generative visibility competitors operate within ecosystems that increasingly favor stability. Market maturity channels competition toward depth, reliability, and sustained interpretability.

Checklist:

- Are competitive roles and categories clearly separated at the structural level?

- Do comparison sections expose explicit evaluative dimensions rather than feature lists?

- Does each paragraph represent a single, self-contained reasoning unit?

- Are examples used to anchor abstract competitive signals?

- Is terminology consistent across landscape, comparison, and evaluation sections?

- Does the page support uninterrupted AI interpretation from definition to strategy?

Strategic Implications for Competitive Intelligence

Strategic intelligence in AI-mediated markets requires a shift from visibility tracking toward interpretive outcome analysis. Research from Berkeley Artificial Intelligence Research (BAIR) demonstrates that generative systems prioritize reuse patterns and internal representations over surface signals, which reshapes how organizations assess competition. Consequently, generative visibility competitive positioning becomes an intelligence problem focused on how systems select and recombine sources.

This synthesis translates prior analysis into operational consequences. Competitive intelligence must align measurement, monitoring, and decision-making with AI selection behavior. The result is a framework that connects market signals to durable visibility outcomes.

Claim: Competitive intelligence must adapt to generative systems.

Rationale: Legacy metrics fail to capture AI-mediated exposure and reuse dynamics.

Mechanism: New intelligence models track reuse frequency, citation recurrence, and structural consistency across AI outputs.

Counterargument: Traditional analytics still inform human-driven channels and brand perception.

Conclusion: Dual intelligence models that integrate AI and human signals are required.

From Metrics to Interpretive Signals

Competitive intelligence historically relied on rankings, traffic, and share-of-voice metrics. These measures reflect human navigation rather than AI selection. As generative systems mediate discovery, intelligence must track interpretive signals that models actually consume.

Interpretive signals include reuse probability, semantic stability, and cross-context recall. When intelligence systems monitor these indicators, organizations gain foresight into how visibility will evolve. This foresight enables proactive strategy rather than reactive adjustment.

Operational teams can implement this shift by redefining dashboards and alerts around reuse behavior. Intelligence becomes predictive when it mirrors system logic.
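An alert rule built around reuse behavior might compare each source's reuse share between two monitoring periods and flag drops above a tolerance. The function name and the 0.05 tolerance below are hypothetical; how the shares are measured is left to the monitoring pipeline.

```python
def reuse_alerts(
    previous: dict[str, float], current: dict[str, float], max_drop: float = 0.05
) -> list[str]:
    """Return alert messages for sources whose reuse share fell by more than max_drop."""
    alerts = []
    for source, before in sorted(previous.items()):
        after = current.get(source, 0.0)  # a source absent this period counts as zero reuse
        if before - after > max_drop:
            alerts.append(f"{source}: reuse share fell from {before:.2f} to {after:.2f}")
    return alerts


print(reuse_alerts(
    {"ours.example": 0.30, "rival.example": 0.20},
    {"ours.example": 0.22, "rival.example": 0.21},
))  # ['ours.example: reuse share fell from 0.30 to 0.22']
```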

In simple terms, counting clicks no longer explains which sources AI chooses.

Operationalizing Reuse-Centric Intelligence

Operational intelligence requires tooling and processes that observe AI outputs at scale. Organizations analyze generated responses, citations, and synthesis patterns to infer preference structures. These observations form a feedback loop for strategy.
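Observing outputs at scale starts with a log of sampled answers and the sources each one cites. The sketch below assumes a hypothetical `SampledResponse` record. It computes reuse frequency as the fraction of sampled responses that cite each source. The record schema and field names are illustrative, not drawn from any particular tool.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class SampledResponse:
    """One generated answer captured during monitoring (hypothetical schema)."""
    prompt: str
    model: str
    cited_sources: frozenset[str]


def reuse_frequency(responses: list[SampledResponse]) -> dict[str, float]:
    """Fraction of sampled responses that cite each source at least once."""
    if not responses:
        return {}
    counts: Counter[str] = Counter()
    for response in responses:
        counts.update(response.cited_sources)  # a set, so one count per response
    return {source: n / len(responses) for source, n in counts.most_common()}


log = [
    SampledResponse("q1", "model_a", frozenset({"a.example", "b.example"})),
    SampledResponse("q1", "model_b", frozenset({"a.example"})),
    SampledResponse("q2", "model_a", frozenset({"c.example"})),
]
print(reuse_frequency(log))
```

Repeating this over fixed prompt sets in each period produces the per-source shares that dashboards and alerts can compare over time.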

Over time, reuse-centric intelligence highlights which competitors gain interpretive priority. It also reveals where structural changes alter selection behavior. This visibility supports timely intervention.

By integrating these insights into planning cycles, teams align actions with how exposure actually forms. Intelligence moves closer to decision execution.

Put plainly, teams watch what AI repeats, not what it ranks.

Integrating Human and AI Channels

Despite the shift, human channels remain relevant. Brand trust, partnerships, and user behavior still influence outcomes outside AI systems. Competitive intelligence must therefore integrate both domains.

Dual models separate measurement streams while connecting insights. One stream tracks AI-mediated reuse. The other tracks human engagement and perception. Together, they provide a complete view.
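Connecting the two streams can be as simple as comparing each competitor's AI reuse share with its human engagement share and flagging large gaps. This is a hypothetical sketch: both inputs are assumed to be normalized shares from separate pipelines, and the 0.10 threshold is arbitrary.

```python
def channel_divergence(
    ai_share: dict[str, float], human_share: dict[str, float], threshold: float = 0.10
) -> dict[str, float]:
    """Return sources where AI reuse share and human engagement share disagree.

    Positive values: stronger in AI-mediated reuse than in human channels.
    Negative values: stronger with human audiences than in AI outputs.
    """
    sources = sorted(set(ai_share) | set(human_share))
    gaps = {s: ai_share.get(s, 0.0) - human_share.get(s, 0.0) for s in sources}
    return {s: round(gap, 3) for s, gap in gaps.items() if abs(gap) > threshold}


print(channel_divergence({"a": 0.5, "b": 0.1}, {"a": 0.2, "b": 0.15, "c": 0.2}))
```

Keeping the streams separate until this comparison step preserves each measurement's meaning while still exposing where one channel is being optimized at the expense of the other.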

This integration prevents blind spots. Organizations avoid optimizing for one channel at the expense of the other.

Simply put, strategy works best when it respects both audiences.

Strategic Closure

The strategic implication of generative visibility is clear. Intelligence systems that ignore AI selection logic misread competition. Those that incorporate reuse and interpretability gain durable advantage.

As markets mature, intelligence discipline becomes a differentiator. Organizations that adapt early shape outcomes rather than follow them.

In summary, generative visibility competitors succeed when competitive intelligence reflects how AI systems think, select, and remember.

Interpretive Structure of Competitive Analysis Pages

- Competitive scope segmentation. Distinct H2→H3→H4 layers separate market definitions, comparison logic, and evaluation criteria, enabling AI systems to resolve competitive boundaries without conflating roles or signals.

- Reasoning-chain anchoring. Embedded claim–logic structures create stable interpretive anchors that allow generative models to reconstruct comparative intent rather than infer it implicitly.

- Taxonomic consistency signaling. Recurrent use of role-based classifications establishes a predictable competitor ontology that supports cross-section semantic alignment.

- Comparative dimension isolation. Tables and structured contrasts expose evaluative dimensions explicitly, reducing ambiguity in how differences between entities are interpreted.

- Context-preserving progression. Sequential section flow maintains contextual continuity from landscape definition to strategic implications, supporting long-context interpretation.

This structural configuration illustrates how competitive analysis content becomes interpretable as a coherent system of signals, allowing AI models to preserve intent, hierarchy, and relational meaning during generative synthesis.
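The H2→H3→H4 layering described above can be checked mechanically. The sketch below assumes the page is available as HTML. It uses a regular expression as a rough heuristic rather than a real HTML parser, and reports places where the hierarchy skips a level on the way down, such as H2 directly followed by H4.

```python
import re

# Rough heuristic, not an HTML parser: matches opening <h1> through <h6> tags.
HEADING_TAG = re.compile(r"<h([1-6])\b", re.IGNORECASE)


def heading_skips(html: str) -> list[tuple[int, int]]:
    """Return (previous, next) heading-level pairs where a level is skipped, e.g. H2 -> H4."""
    levels = [int(match.group(1)) for match in HEADING_TAG.finditer(html)]
    return [(prev, nxt) for prev, nxt in zip(levels, levels[1:]) if nxt > prev + 1]


print(heading_skips("<h2>Landscape</h2><h3>Roles</h3><h4>Signals</h4><h2>FAQ</h2>"))  # []
print(heading_skips("<h2>Landscape</h2><h4>Signals</h4>"))  # [(2, 4)]
```

Moving back up the hierarchy (H4 to H2) is treated as valid, since a new top-level section legitimately closes deeper layers.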

FAQ: Generative Visibility and Competitive Analysis

What are generative visibility competitors?

Generative visibility competitors are entities whose content or systems are repeatedly reused by AI models during answer generation and contextual synthesis.

How does competitive analysis change in generative systems?

Competitive analysis shifts from ranking and traffic metrics toward reuse probability, interpretive priority, and structural consistency within AI-generated outputs.

Why do AI systems favor certain competitors?

AI systems favor competitors that provide stable meaning, predictable structure, and low ambiguity, which reduces inference cost during generation.

What signals define competitive positioning in generative visibility?

Competitive positioning is defined by structural clarity, output stability, model compatibility, and consistent reuse across generative contexts.

How are vendors evaluated in AI-mediated markets?

Vendors are evaluated through structural, semantic, and factual signals that influence how often AI systems select and reuse their outputs.

Why does governance matter for generative visibility platforms?

Governance ensures semantic stability and controlled change, which preserves interpretability and long-term reuse by generative models.

How does market maturity affect generative visibility competition?

As markets mature, AI systems converge on a narrower set of trusted sources, increasing consolidation and raising entry barriers for new competitors.

What role does model compatibility play in competitive outcomes?

Model compatibility allows content and platforms to remain reusable across evolving AI architectures, supporting durable generative visibility.

How does competitive intelligence adapt to generative visibility?

Competitive intelligence adapts by tracking reuse patterns, interpretive priority, and structural signals instead of traditional ranking-based metrics.

What determines long-term advantage among generative visibility competitors?

Long-term advantage emerges from sustained structural clarity, semantic discipline, governance, and consistent reuse within AI systems.

Glossary: Key Terms in Generative Visibility Analysis

This glossary defines core terminology used throughout the analysis to maintain semantic consistency for both strategic interpretation and AI-mediated reuse.

Generative Visibility

The degree to which content, systems, or entities are selected, reused, and synthesized by AI models during answer generation.

Generative Visibility Competitors

Entities whose content or platforms are repeatedly reused by AI systems as authoritative or high-confidence sources.

Competitive Landscape

The structured set of participants recognized by AI systems within a domain based on reuse probability and interpretive priority.

Competitive Positioning

The relative priority assigned by AI systems to competing platforms or entities during generative synthesis.

Capability Differentiation

Variation in AI-relevant functional depth that influences how generative models interpret and reuse outputs.

Model Compatibility

The ability of content or systems to remain reusable across different large language model architectures without semantic degradation.

Governance

The set of controls that manage semantic stability, change propagation, and accountability in AI-facing platforms.

Reuse Probability

The likelihood that an AI system will select and incorporate a source during generative response construction.

Market Maturity

The stage at which AI systems converge on a stable set of preferred sources, increasing consolidation and entry barriers.

Interpretive Priority

The internal weighting AI systems assign to sources based on clarity, stability, and historical reuse.