Last Updated on January 10, 2026 by PostUpgrade

Entity-Based Optimization: A New Framework

Search systems no longer rely on isolated keywords as their primary unit of understanding. Modern discovery engines interpret content through identifiable entities, their attributes, and their relationships within structured knowledge graphs. This shift changes how optimization works at a structural level, because relevance now emerges from entity recognition, contextual alignment, and authority propagation rather than from string matching. As a result, optimization decisions increasingly affect how machines model meaning, not just how pages rank for queries.

This article explains entity based optimization as a coherent structural framework rather than a collection of tactical adjustments. It defines how entities function as the core optimization unit and how content systems must adapt to this logic. The scope covers architectural design, recognition mechanisms, authority and trust signals, and implementation patterns suitable for enterprise-scale publishing. The goal is to provide a stable, machine-readable model that supports long-term AI comprehension and consistent generative visibility across evolving discovery systems.

Conceptual Foundations of Entity-Based Optimization

Entity based optimization framework defines optimization as a structural system rather than a tactical adjustment. Modern ranking and retrieval systems replaced keywords with entities because machines interpret meaning through identified concepts, not text patterns. This shift reflects how knowledge is modeled inside entity graphs and semantic networks, as described in foundational explanations of entities and knowledge graphs by Wikipedia.

Definition: Entity-based optimization is an AI-first content framework where meaning is organized around identifiable entities, their attributes, and relationships, enabling consistent interpretation, ranking, and reuse across search and generative systems.

Entity-Based Optimization: a structured optimization approach that aligns content, signals, and architecture around identifiable entities rather than isolated keywords, ensuring consistent interpretation across queries, languages, and interfaces.

Claim: Entity-based optimization framework enables stable interpretation across AI-driven systems.

Rationale: Entities provide persistent identifiers that survive query reformulation and linguistic variation.

Mechanism: Search systems map entities into internal graphs and evaluate relationships instead of relying on string-level matches.

Counterargument: This approach is less effective for ephemeral or non-canonical topics without stable identifiers.

Conclusion: Entity frameworks outperform keyword logic for long-term discoverability because they align with how AI systems store and reuse knowledge.

Entities Versus Strings as Meaning Carriers

Entities represent bounded concepts with defined identities, while strings represent surface text forms. A string changes with wording or language, but an entity remains stable once identified. Because of this difference, systems can track meaning across variations without reinterpreting each expression.

Entity optimization foundations therefore prioritize identity resolution and contextual clarity. Frequency and exact matching lose importance when systems rely on entity identity. As a result, optimization shifts from repetition to precision.

Put simply, keywords describe how something is written, while entities describe what something is.

Entity Graphs as the Structural Mechanism

Entity graphs organize entities through explicit relationships such as hierarchy and association. These structures allow systems to infer relevance through connections rather than text overlap. Meaning flows through relationships instead of isolated signals.

When entities are embedded in coherent graphs, systems can rank, retrieve, and synthesize information consistently. Graph structure replaces keyword proximity as the primary relevance driver.

In everyday terms, graphs help systems understand how concepts relate, not just where words appear.

Durability of Visibility Through Entity Alignment

Visibility becomes durable when meaning is anchored to stable entities. Entity-based optimization supports this durability by separating concept identity from phrasing. As interfaces and query behavior change, entities remain interpretable.

This durability explains why entity-centered content retains relevance longer than keyword-focused pages. Structure, not wording, determines persistence in AI-driven discovery systems.

Simply stated, entities last longer than keywords because systems remember concepts better than text.

Entity Recognition as the Core Optimization Unit

Entity recognition optimization establishes the point at which content becomes visible to ranking and retrieval systems. Before relevance or authority can be assessed, systems must first detect which real-world entities appear in the content and validate their identity with sufficient confidence. This process reflects how modern language models and search pipelines perform named entity recognition and linking, as formalized in research and tooling developed by the Stanford NLP Institute.

Entity recognition optimization: alignment of content signals to ensure accurate detection and classification of real-world entities across language, context, and format variations.

Claim: Accurate entity recognition determines whether content enters ranking consideration.

Rationale: Unrecognized entities cannot be weighted, compared, or contextualized within relevance models.

Mechanism: Models use contextual disambiguation, co-occurrence patterns, and authority signals to resolve entity identity.

Counterargument: Highly ambiguous entities reduce recognition confidence and weaken downstream signal reliability.

Conclusion: Recognition is a prerequisite for all downstream optimization because systems cannot reason about entities they fail to identify.

Principle: AI systems can only evaluate relevance, authority, and relationships after an entity is clearly recognized and contextually bounded within the content structure.

Entity Disambiguation Boundaries

Entity disambiguation boundaries define how systems separate one entity from similar or overlapping candidates. These boundaries rely on contextual precision, topical alignment, and consistency of references. When boundaries are clear, recognition confidence increases and classification stabilizes across models.

Scope-limiting techniques reduce ambiguity by narrowing the interpretation space. Explicit naming, stable attributes, and consistent contextual framing eliminate competing candidates early. As a result, systems converge faster on a single entity interpretation.

In simple terms, disambiguation works when content makes it clear which entity is meant and avoids mixing alternatives.

Contextual Signal Isolation

Contextual signal isolation ensures that each sentence contributes signals to one identifiable entity without semantic overlap. Sentence-level clarity prevents mixed references that confuse entity linking processes. This isolation improves alignment between text signals and entity representations.

Controlled references reinforce isolation by keeping terminology and attributes consistent throughout the text. When references remain stable, models rely less on probabilistic inference and more on direct alignment. This consistency reduces recognition errors and signal noise.

At a basic level, focusing each sentence on one clearly identifiable thing helps systems recognize entities faster and with higher confidence.

Entity Relationships and Graph-Based Optimization Models

Entity relationships optimization defines the structural layer through which relevance propagates across content systems. Modern ranking and retrieval models evaluate meaning by analyzing how entities connect rather than by inspecting isolated documents. This approach reflects graph-based evaluation logic used in semantic web standards and knowledge graph systems maintained by the W3C.

Entity relationship optimization: structuring content so that relationships between entities are explicit, consistent, and machine-readable, enabling systems to assess relevance through graph connectivity.

Claim: Entity relationships amplify relevance beyond individual pages.

Rationale: Graphs propagate trust and topical alignment across connected entities rather than confining relevance to a single document.

Mechanism: Systems score nodes based on relational density and authority transfer between connected entities.

Counterargument: Sparse graphs reduce propagation efficiency because limited connections restrict contextual inference.

Conclusion: Relationship density increases entity visibility by strengthening graph-level relevance signals.

Types of Entity Relationships

Hierarchical relationships organize entities into parent–child structures. These relationships clarify scope and establish authority boundaries within a topic domain. When hierarchy remains consistent, systems infer topical depth and categorical relevance with higher confidence.

Associative relationships connect entities that share functional or thematic relevance without hierarchical order. These links expand contextual reach and enable lateral discovery across related concepts. Stable associations support relevance transfer without forcing rigid classification.

Contextual relationships bind entities through shared situations, environments, or usage conditions. These connections refine interpretation when hierarchy and association alone cannot resolve meaning. Contextual links help systems interpret relevance within specific scenarios.

In simple terms, hierarchy defines structure, association defines relatedness, and context defines situational meaning.

| Relationship Type | Optimization Effect | Risk |

|---|---|---|

| Hierarchical | Establishes scope and authority signals | Overly rigid structure limits adaptability |

| Associative | Expands topical relevance laterally | Weak associations dilute meaning |

| Contextual | Refines situational interpretation | Context drift reduces clarity |

Entity mapping optimization depends on combining these relationship types coherently. When relationships remain explicit and balanced, systems propagate relevance efficiently across the graph. When links are sparse or inconsistent, visibility remains localized and unstable.

Entity Authority and Trust Signal Construction

Entity authority optimization explains how confidence is assigned at the entity level rather than inherited from individual pages. Modern AI-driven systems evaluate trust by aggregating evidence around identifiable entities, not by scoring documents in isolation. This approach aligns with formal trust and identity frameworks defined by the National Institute of Standards and Technology.

Entity authority optimization: increasing confidence scores assigned to entities through consistent evidence, validated references, and alignment with recognized institutions.

Claim: Entity authority determines reuse probability in AI answers.

Rationale: AI systems prioritize trusted nodes when selecting entities for ranking, synthesis, and generative responses.

Mechanism: Authority accumulates through citations, internal consistency, and institutional alignment across multiple sources.

Counterargument: New entities require temporal validation because systems need repeated evidence before assigning trust.

Conclusion: Authority is cumulative and non-linear, strengthening as consistent signals reinforce entity credibility over time.

Trust Signal Types

Institutional references function as high-confidence anchors for entity authority. When entities are consistently associated with recognized organizations, standards bodies, or research institutions, systems assign higher trust scores. These references reduce uncertainty because they link entities to established verification frameworks.

Dataset mentions provide empirical grounding for entity claims. References to structured datasets, benchmarks, or official statistics increase authority by demonstrating measurable backing. Systems treat data-aligned entities as more reliable because evidence can be cross-validated.

Cross-source consistency reinforces authority by confirming that entity attributes remain stable across independent sources. When multiple sources describe the same entity in compatible ways, confidence increases. Inconsistencies weaken authority signals and slow trust accumulation.

In simple terms, entities gain trust when reputable institutions mention them, data supports them, and sources agree about them.

Microcase: Institutional Alignment in Practice

An enterprise knowledge platform aligned its core entities with datasets published by the OECD. Each entity reference consistently pointed to the same statistical definitions and reporting standards. Over time, AI systems increased reuse of these entities in analytical summaries and comparative outputs. The alignment reduced ambiguity and accelerated trust accumulation without relying on page-level authority signals.

Entity trust optimization and entity credibility optimization operate through these mechanisms because authority emerges from evidence convergence. When signals remain consistent and verifiable, entities become preferred building blocks for AI-driven interpretation and reuse.

Entity Context and Semantic Boundary Control

Entity context optimization defines context as the constraint system that prevents semantic drift during interpretation and reuse. In AI-driven retrieval and synthesis, entities remain accurate only when systems can determine the intended scope of meaning without ambiguity. This requirement aligns with research on representation learning and contextual grounding developed by the MIT CSAIL.

Entity context optimization: restricting interpretation scope to preserve meaning stability by controlling how entities are framed, referenced, and bounded within content.

Claim: Controlled context improves entity reuse accuracy.

Rationale: Loose context leads to semantic collisions where multiple interpretations compete for the same entity.

Mechanism: Local definitions and scoped references limit interpretation by anchoring entities to precise conceptual boundaries.

Counterargument: Over-restriction reduces flexibility and may limit reuse across adjacent domains.

Conclusion: Balanced context maximizes precision while preserving adaptability for AI-driven systems.

Context Anchoring Techniques

Definitional anchoring establishes meaning by introducing a concise definition at the point where an entity first appears. This anchor signals to systems how the entity should be interpreted throughout the surrounding scope. When definitions remain stable, models maintain consistent internal representations across sections and documents.

Proximity rules reinforce anchoring by placing supporting attributes, constraints, and references close to the entity mention. Spatial proximity reduces the risk that distant or unrelated information alters interpretation. As a result, systems assign higher confidence to entity meaning because contextual signals remain tightly coupled.

In practice, anchoring works when entities are defined early and supported nearby, which keeps interpretation focused and predictable.

Entity alignment optimization depends on these techniques because alignment emerges from controlled boundaries rather than broad association. When context remains balanced, entities retain precision without becoming isolated. This balance enables reliable reuse across ranking, retrieval, and generative synthesis while minimizing semantic drift.

Entity-Based Content Architecture and Page Design

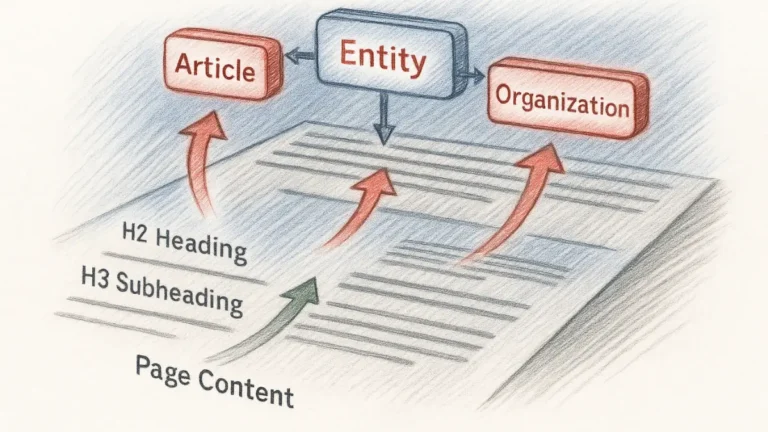

Entity based content optimization explains how page architecture enables machines to comprehend entities with precision. Modern ranking and retrieval systems interpret meaning through structure before content-level signals are applied, which makes architectural design a functional input to entity parsing. This approach aligns with semantic web and document structure standards maintained by the W3C.

Entity-based content optimization: designing pages around entity units rather than broad topics so that systems can isolate, interpret, and reuse meaning with minimal ambiguity.

Claim: Architecture determines how entities are parsed.

Rationale: Models rely on structural cues to segment content and assign meaning boundaries.

Mechanism: Hierarchical sections isolate entity meaning by separating definitions, attributes, and relationships into predictable containers.

Counterargument: Flat layouts reduce clarity because mixed signals blur entity boundaries.

Conclusion: Architecture acts as a ranking signal proxy by shaping how entities are interpreted before relevance scoring.

Structural Patterns

Entity-first sections place the entity at the center of each structural unit. Definitions, attributes, and constraints appear immediately after the entity is introduced, which allows systems to bind meaning early. This pattern reduces the need for inference because the entity context is explicit and localized.

Controlled hierarchy reinforces this pattern by enforcing clear boundaries between sections. When headings follow a strict hierarchy, models can distinguish primary entity information from secondary or supporting content. This separation improves parsing accuracy and reduces semantic overlap between entities.

Simply put, entity-first structure tells systems what the page is about before explaining details, while hierarchy keeps those details from mixing together.

Entity optimization architecture depends on these patterns because structure governs how signals are interpreted. When architecture aligns with entity boundaries, systems process content deterministically. When structure is loose, interpretation becomes probabilistic and less reliable.

Entity-Based Visibility in AI-Driven Discovery Systems

Entity based visibility optimization connects entity optimization logic with generative discovery surfaces where answers are synthesized rather than ranked as links. In these environments, systems assemble responses from reusable knowledge units, which shifts visibility from page exposure to entity reuse. This behavior reflects how large language models and retrieval-augmented systems construct answers from internal and external knowledge representations, as described in research published by OpenAI.

Entity-based visibility optimization: maximizing entity reuse across AI answers by ensuring entities meet confidence, clarity, and authority thresholds required for generative selection.

Claim: Entities, not pages, are reused in AI answers.

Rationale: AI systems extract entity-level facts and attributes rather than referencing entire documents.

Mechanism: High-confidence entities populate response graphs that models traverse during answer construction.

Counterargument: Low-authority or weakly defined entities are excluded from response generation.

Conclusion: Visibility depends on entity fitness rather than document-level optimization.

Example: An entity with a clear definition, stable context, and authoritative references is more likely to be reused in AI-generated answers, knowledge panels, and conversational summaries than a page optimized only for keyword ranking.

AI Surface Types

SGE-style panels assemble responses by aggregating facts tied to recognized entities. These surfaces prioritize concise, validated entity attributes that can be composed into a coherent answer. As a result, entities with stable definitions and clear relationships appear more frequently than pages optimized for keywords.

Chat-based retrieval systems operate through conversational synthesis. They rely on entity grounding to maintain coherence across turns and avoid contradiction. Entities that lack clear boundaries or sufficient authority are less likely to be reused because they introduce uncertainty into dialogue generation.

Knowledge panels present entities as standalone informational units. These panels draw from structured attributes, relationships, and authoritative references. Entity consistency directly determines whether a panel appears and how often it is updated.

In simple terms, AI surfaces select entities they can trust and recombine, not pages they can rank.

Entity based discovery optimization follows from this behavior because discovery occurs through entity selection rather than link traversal. When entities remain precise, authoritative, and well connected, systems reuse them across multiple AI-driven interfaces. When entities lack fitness, visibility declines regardless of page quality.

Implementation Model for Enterprise Entity Optimization

Entity optimization implementation provides an operational model for deploying entity logic at enterprise scale. In large content systems, optimization succeeds only when entities are managed as shared assets across editorial, structural, and data workflows. This requirement aligns with process standardization and data governance principles described in analytical frameworks published by the OECD.

Entity optimization implementation: systematic integration of entity logic into content operations so that identification, relationships, and authority signals remain consistent across production pipelines.

Claim: Entity optimization requires process-level integration.

Rationale: Ad-hoc changes fail at scale because isolated optimizations do not propagate across interconnected systems.

Mechanism: Editorial guidelines, structural templates, and data references align around shared entity definitions and rules.

Counterargument: Small sites may not require full implementation due to limited entity scope and lower coordination overhead.

Conclusion: Scalability depends on systemization rather than isolated optimization actions.

Step-by-Step Framework

Entity inventory establishes a controlled list of entities that the organization actively manages. This inventory defines canonical names, attributes, and scope boundaries. By formalizing entity ownership, teams prevent duplication and semantic drift across content.

Relationship mapping connects entities through predefined relationship types such as hierarchy, association, and context. Mapping ensures that relevance can propagate across the entity graph in predictable ways. Without mapping, entities remain isolated and underutilized.

Authority sourcing assigns evidence and references to each entity. These sources include institutional publications, datasets, and standards bodies. Consistent sourcing strengthens trust signals and supports long-term authority accumulation.

In simple terms, enterprises succeed when they know which entities they manage, how those entities connect, and which sources validate them.

| Step | Input | Output |

|---|---|---|

| Entity inventory | Content corpus and domain scope | Canonical entity list |

| Relationship mapping | Entity list and relationship rules | Structured entity graph |

| Authority sourcing | Institutional references and datasets | Trusted entity profiles |

Entity optimization methodology emerges from this sequence because each step builds on the previous one. Entity optimization best practices emphasize repeatability and governance rather than one-time execution. When implementation follows a structured model, entity logic scales reliably across teams, products, and AI-driven discovery systems.

Limitations, Exceptions, and Future Evolution

Entity optimization in modern search operates within defined boundaries that limit its universal applicability. While entity-centric systems improve stability and reuse, not all domains present conditions suitable for persistent entity modeling. Research on knowledge representation and reasoning at the Allen Institute for Artificial Intelligence highlights these constraints and outlines paths toward adaptive solutions.

Claim: Entity optimization is not universal.

Rationale: Some domains lack stable entities with persistent identifiers and shared references.

Mechanism: Temporal, emergent, or highly subjective topics resist modeling because entity attributes change faster than systems can validate them.

Counterargument: Hybrid models mitigate this issue by combining entity logic with contextual and statistical signals.

Conclusion: Entity optimization will evolve toward adaptive graphs that adjust to change while preserving structural clarity.

Entity optimization analysis shows clear limitations in domains driven by rapid novelty. News cycles, speculative topics, and early-stage technologies often lack canonical definitions or agreed attributes. In these cases, entity boundaries shift frequently, which reduces recognition confidence and delays authority assignment.

Exceptions also appear in domains with strong narrative or opinion-driven content. When meaning depends on perspective rather than shared facts, entity models struggle to converge. Systems compensate by relying more heavily on contextual similarity and behavioral signals instead of entity identity.

In practical terms, entity optimization works best where concepts remain stable and widely referenced, and it weakens where meaning changes faster than validation can occur.

Future evolution focuses on adaptive graph models that update relationships and attributes in near real time. These models integrate temporal signals, probabilistic confidence, and feedback loops to adjust entity fitness dynamically. Rather than abandoning entity logic, systems refine it to handle uncertainty and change.

From an implementation perspective, this evolution implies selective application. Enterprises apply entity optimization where stability exists and hybrid approaches where volatility dominates. This balance defines how entity-based systems will mature within modern search and AI-driven discovery environments.

Checklist:

- Are core entities explicitly defined and consistently referenced?

- Do H2–H4 sections isolate entity meaning without overlap?

- Does each paragraph represent a single reasoning unit?

- Are entity relationships made explicit and logically bounded?

- Is context controlled to prevent semantic drift?

- Can AI systems reuse entity knowledge without relying on full-page context?

Structural Interpretation of Entity-Centric Page Architecture

- Entity-aligned sectioning. Content is segmented around discrete entity concepts rather than thematic narratives, allowing AI systems to bind meaning to identifiable units instead of diffuse topics.

- Hierarchical boundary enforcement. The consistent H2→H3→H4 depth model establishes explicit semantic containment, which reduces cross-entity interference during long-context interpretation.

- Definition-first stabilization. Local micro-definitions placed at section entry points provide immediate semantic grounding, enabling models to resolve entity scope without extrapolation.

- Graph-compatible layout logic. Structural separation of concepts, mechanisms, and implications mirrors graph-based knowledge representations used in entity-centric reasoning systems.

- Interpretation-safe structural repetition. Recurrent structural patterns across sections reinforce predictable parsing behavior, supporting reliable extraction under generative indexing.

This structural configuration clarifies how entity-focused pages remain interpretable as composable knowledge units within AI-driven retrieval, synthesis, and long-context reasoning systems.

FAQ: Entity-Based Optimization

What is entity-based optimization?

Entity-based optimization is a structural approach where content is aligned around identifiable entities rather than isolated keywords, enabling stable interpretation by AI systems.

How does entity-based optimization differ from keyword optimization?

Keyword optimization targets text matching, while entity-based optimization focuses on concept identification, relationships, and contextual grounding used by modern AI systems.

Why do AI systems rely on entities instead of pages?

AI systems operate on reusable knowledge units, and entities provide stable identifiers that can be connected, validated, and reused across multiple responses.

How is entity authority established?

Entity authority emerges through consistent references, institutional alignment, and cross-source agreement rather than through page-level popularity signals.

What role do entity relationships play in optimization?

Relationships allow relevance and trust to propagate across entity graphs, enabling AI systems to evaluate meaning beyond isolated content blocks.

Why is context control important for entities?

Controlled context prevents semantic drift by limiting interpretation scope, ensuring that entities retain consistent meaning across AI-driven systems.

Can entity-based optimization work for all content types?

Entity-based optimization performs best in domains with stable concepts and shared references, while highly emergent topics may require hybrid approaches.

How does entity-based optimization affect AI-generated answers?

AI-generated answers preferentially reuse entities with clear definitions, strong authority signals, and well-structured relationships.

Why is structure critical in entity-based content?

Structural hierarchy helps AI systems isolate entity meaning, resolve boundaries, and maintain interpretation accuracy during retrieval and synthesis.

Glossary: Key Terms in Entity-Based Optimization

This glossary defines the core terminology used throughout the article to ensure consistent interpretation of entity-centric optimization concepts by both readers and AI systems.

Entity-Based Optimization

A structural optimization framework that aligns content, signals, and architecture around identifiable entities rather than isolated keywords.

Entity Recognition

The process by which AI systems detect, classify, and validate real-world entities within content before relevance evaluation.

Entity Graph

A structured representation where entities are modeled as nodes connected through defined relationships used for relevance propagation.

Entity Authority

A confidence score assigned to an entity based on consistency, institutional references, and cross-source agreement.

Contextual Boundary

A constraint that limits how an entity can be interpreted, preventing semantic drift across different contexts.

Relationship Density

The concentration of meaningful connections between entities within a graph, influencing relevance propagation strength.

Entity Context Optimization

The practice of controlling contextual signals to preserve stable entity interpretation across AI-driven systems.

Entity Visibility

The likelihood that an entity is reused within AI-generated answers, panels, or synthesized responses.

Authority Signal

A verifiable indicator such as institutional reference, dataset linkage, or consistent attribution that strengthens entity trust.

Structural Predictability

The consistency of content layout that enables AI systems to segment, interpret, and reuse entity information reliably.