Last Updated on December 26, 2025 by PostUpgrade

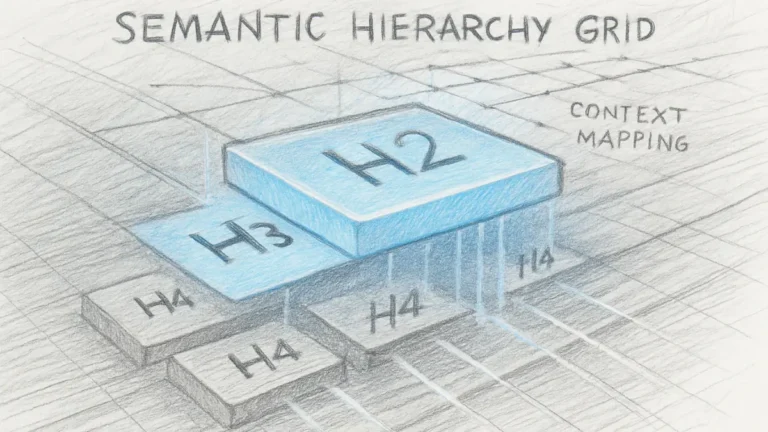

How Grid Systems Influence AI Comprehension

Artificial intelligence systems increasingly rely on structural signals to interpret, prioritize, and reuse digital content. As generative models move beyond keyword-based retrieval, content organization determines how models extract and preserve meaning. In this context, AI grid comprehension describes how models process ordered and repeatable structural patterns.

Definition: AI understanding refers to a model’s capacity to interpret meaning through structural signals such as alignment, positional order, and boundary consistency, enabling stable reasoning, accurate segmentation, and reliable reuse of content within generative systems.

Grid systems introduce predictable alignment, spacing, and sequencing into content environments. These properties reduce ambiguity during parsing, improve segmentation accuracy, and support stable reasoning across long contexts. As a result, grid logic operates as a machine-readable coordination layer rather than a visual design choice.

This article examines how grid systems shape AI understanding across reading, reasoning, and generative stages. It analyzes grid logic from a computational perspective, connects structural consistency to model behavior, and outlines strategic implications for AI-first content engineering.

Grid Systems as a Machine-Readable Ordering Layer

Grid systems define a formal ordering layer that directly affects how AI models parse, segment, and prioritize information, and this section places AI grid comprehension within that structural context. Rather than treating grids as visual aids, this section frames grid logic as a deterministic signal that models use during interpretation, a position supported by structural data standards published by NIST. The scope therefore focuses on grids as a prerequisite for stable and repeatable comprehension in high-density documents.

Definition:

A grid system is a rule-based spatial and logical alignment framework that constrains content placement into predictable coordinates and intervals, enabling consistent interpretation by machine readers.

Claim: Grid systems directly influence AI grid comprehension by enforcing predictable positional logic.

Rationale: AI models depend on consistent spatial and logical cues to minimize variance during parsing and inference.

Mechanism: Grid constraints generate repeatable alignment signals that models learn as ordering priors during training and then apply during inference.

Counterargument: Freeform layouts can still be interpreted when semantic cues remain explicit and locally complete.

Conclusion: Grid ordering increases comprehension stability when documents contain dense or layered information.

Grid systems as structural signals for AI models

AI comprehension of grid systems emerges from the way alignment and spacing create consistent reference points across a document. When headings, paragraphs, and data elements occupy predictable positions, models can infer relative importance and boundaries without re-evaluating structure at each step. As a result, the influence of grids on AI behavior appears most clearly in long-form analytical content, where structural repetition reduces contextual drift.

At the same time, grid logic operates independently of meaning itself. The grid does not define semantics, but it shapes how models encounter and sequence semantic units. Therefore, consistent placement supports faster segmentation and more reliable attention distribution across sections.

In simple words, grid systems help AI “know where it is” in a document. When every section follows the same alignment rules, the model spends less effort understanding structure and more effort understanding content.

Alignment regularity and spacing normalization

Alignment regularity ensures that similar content types appear in the same relative positions throughout a document. This regularity allows models to associate position with function, which simplifies parsing decisions during ingestion. Spacing normalization further reinforces these associations by creating uniform distances between related elements.

Positional repeatability strengthens this effect over longer contexts. When spacing and alignment remain stable, models reuse learned patterns instead of recalculating structural relationships, which reduces cumulative interpretation error across extended texts.

To put it simply, when spacing and alignment stay the same everywhere, AI stops guessing where one idea ends and another begins.
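To make spacing regularity concrete, the sketch below scores a run of element positions (for example, the vertical offsets of paragraphs on a page) by the coefficient of variation of the gaps between them: 0.0 for perfectly uniform spacing, higher values for ad hoc placement. The function name `spacing_irregularity` and the metric itself are illustrative assumptions, not a measure that models or crawlers are known to compute.

```python
from statistics import mean, pstdev


def spacing_irregularity(positions: list[float]) -> float:
    """Coefficient of variation of the gaps between consecutive positions.

    0.0 means perfectly uniform spacing; larger values mean less regular spacing.
    This is a hypothetical metric used only for illustration.
    """
    if len(positions) < 3:
        return 0.0  # fewer than two gaps: nothing to compare, treated as regular
    ordered = sorted(positions)
    gaps = [b - a for a, b in zip(ordered, ordered[1:])]
    avg = mean(gaps)
    if avg == 0:
        return 0.0  # all elements stacked at one position
    return pstdev(gaps) / avg


regular = [0, 24, 48, 72, 96]    # paragraphs on a hypothetical 24-unit baseline grid
irregular = [0, 10, 48, 55, 96]  # same span, ad hoc placement
print(spacing_irregularity(regular))              # 0.0
print(round(spacing_irregularity(irregular), 2))  # 0.65
```

Both layouts cover the same span, so the score isolates how evenly the space is divided rather than how much space is used.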

Example from enterprise documentation systems

Enterprise documentation portals often apply strict column grids to all technical pages. Internal evaluations of large-scale documentation systems report that such portals achieve higher extraction consistency in internal LLM tooling, particularly during summarization and cross-page synthesis, a pattern discussed in NIST research on structured digital content.

This pattern demonstrates that grid systems act as silent coordination mechanisms. They do not add meaning, but they stabilize how meaning travels through the model pipeline.

In simple words, companies that keep the same grid everywhere make it easier for AI tools to read and reuse their content.

Implications for long-context processing

Reduced ambiguity in long-context processing represents the primary implication of grid-based ordering. When structural signals remain stable, models preserve context boundaries more accurately across thousands of tokens. As a result, downstream reasoning and generation become more consistent and less prone to structural hallucination.

This effect becomes increasingly important as generative systems process longer documents. Grid systems therefore function as foundational infrastructure for AI-first content rather than optional layout choices.

How AI Models Interpret Grid-Based Signals

This section explains how AI models transform recurring grid patterns into interpretive signals, with AI interpretation of grids framed as a learned structural capability. The scope covers transformer-based models and layout-aware encoders, treating grids as implicit metadata rather than presentation artifacts, a position supported by research from the Stanford Natural Language Institute. The purpose is to clarify how positional regularity shapes interpretation during parsing and inference.

Definition:

A grid signal is a recurring positional pattern that functions as a non-verbal structural cue, allowing models to detect boundaries, priority, and grouping without introducing semantic meaning.

Claim: AI interpretation of grids relies on learned positional correlations acquired during training.

Rationale: Models repeatedly observe that specific positions correlate with consistent informational roles across documents.

Mechanism: Attention mechanisms amplify elements that occupy stable aligned positions, which reinforces predictable interpretation paths.

Counterargument: Models trained mainly on unstructured text may rely more heavily on semantics and ignore spatial regularities.

Conclusion: Grid signals improve interpretive reliability when structural regularity aligns with consistent semantic design.

Learned positional correlations in transformer models

Grid-based AI comprehension develops when transformer architectures treat position as a proxy for functional intent. During large-scale training, repeated alignment patterns co-occur with specific content roles, which enables models to anticipate structural function before resolving detailed meaning. As a result, AI perception of grids emerges as a learned bias that reduces uncertainty during early parsing stages.

At the same time, comprehension of grid structure improves because consistent alignment constrains interpretation choices. Models therefore distribute attention more efficiently across tokens, which stabilizes inference over long sequences and limits cumulative structural drift.

When similar content consistently appears in the same relative location, models learn to associate that location with a specific role. This association reduces the need to re-evaluate structural intent for each occurrence, which improves processing efficiency.

Positional embeddings and layout tokenization mechanisms

Positional embeddings encode both relative and absolute order within a sequence, enabling models to differentiate content not only by meaning but also by location. When grid patterns remain stable, these embeddings reinforce alignment expectations and support reliable boundary detection across sections and pages.
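As one concrete scheme, the sinusoidal encoding from the original Transformer paper (Vaswani et al., 2017) assigns each position a vector of sines and cosines at geometrically spaced frequencies. The minimal pure-Python sketch below also checks the property that makes relative order recoverable: the dot product of two encodings depends only on their distance, not on where they sit. Many current models use learned or rotary embeddings instead, so treat this as an illustration of the idea rather than the mechanism of any particular model.

```python
import math


def sinusoidal_positions(seq_len: int, d_model: int) -> list[list[float]]:
    """Sinusoidal positional encodings (Vaswani et al., 2017).

    PE[pos][2i]   = sin(pos / 10000 ** (2i / d_model))
    PE[pos][2i+1] = cos(pos / 10000 ** (2i / d_model))
    """
    table = []
    for pos in range(seq_len):
        row = []
        for j in range(d_model):
            i = j // 2  # each sine/cosine pair shares one frequency
            angle = pos / (10000 ** (2 * i / d_model))
            row.append(math.sin(angle) if j % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


pe = sinusoidal_positions(seq_len=16, d_model=8)
# Two pairs that are both 2 positions apart, at different absolute offsets:
print(abs(dot(pe[3], pe[5]) - dot(pe[10], pe[12])) < 1e-9)  # True
```

The identity behind the check is sin(a)sin(b) + cos(a)cos(b) = cos(a - b), so each frequency contributes a term that depends only on the gap between positions.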

Layout tokenization complements this process by converting spatial or structural coordinates into discrete signals processed alongside text. Consequently, alignment and spacing become machine-readable features that persist across contexts and guide attention allocation during inference.

Repeated positional signals allow models to treat location as a stable input feature. Over time, this stability increases confidence in boundary detection and grouping behavior.
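A minimal sketch of layout tokenization, modeled loosely on LayoutLM-style inputs, which pair each word with a bounding box normalized to a 0-1000 integer grid. The helper names and sample coordinates are hypothetical. The point it illustrates: elements that share a column on the page end up with identical discrete x-coordinates, so alignment becomes an exact, repeatable input feature.

```python
Box = tuple[int, int, int, int]


def normalize_bbox(bbox: tuple[float, float, float, float],
                   page_width: float, page_height: float, scale: int = 1000) -> Box:
    """Map an (x0, y0, x1, y1) box in page units onto a 0..scale integer grid."""
    x0, y0, x1, y1 = bbox
    return (
        int(scale * x0 / page_width),
        int(scale * y0 / page_height),
        int(scale * x1 / page_width),
        int(scale * y1 / page_height),
    )


def layout_tokens(words, page_width: float, page_height: float) -> list[tuple[str, Box]]:
    """Pair each word with its discretized box so position travels with the text."""
    return [(text, normalize_bbox(box, page_width, page_height)) for text, box in words]


# Hypothetical 500 x 800 page with two words left-aligned in the same column.
words = [("Definition:", (50, 80, 250, 160)), ("Claim:", (50, 400, 120, 420))]
for token in layout_tokens(words, page_width=500, page_height=800):
    print(token)
# ('Definition:', (100, 100, 500, 200))
# ('Claim:', (100, 500, 240, 525))
```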

Structural features and their interpretive effects

| Grid Feature | AI Signal Type | Interpretation Effect |
|---|---|---|
| Alignment | Positional | Priority weighting |
| Spacing | Boundary | Chunk separation |
| Repetition | Pattern | Semantic grouping |

These features show how structural regularities translate directly into model behavior, which explains why consistent grids improve segmentation accuracy and semantic grouping in complex documents.

Grid signals as implicit structural metadata

Grid signals operate as implicit metadata because they convey hierarchy and organization without explicit labels or markup. As a result, models infer relevance and structural boundaries directly from placement patterns, which reduces dependence on manually defined structure.

Stable grid usage allows models to maintain orientation across long contexts. This stability supports consistent interpretation even when explicit structural annotations remain limited or absent.
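One way to see placement acting as metadata: the sketch below recovers a parent-child outline purely from horizontal offsets, with no labels or markup. It assumes each item's indent stands in for its grid column and that labels are unique. `infer_parents` is a hypothetical helper for illustration, not a description of how any model represents hierarchy internally.

```python
from typing import Optional


def infer_parents(items: list[tuple[int, str]]) -> dict[str, Optional[str]]:
    """Map each label to the nearest preceding item with a smaller indent.

    items: (indent, label) pairs in reading order; indent stands in for a grid
    column. Labels are assumed unique because they are used as dict keys.
    """
    parents: dict[str, Optional[str]] = {}
    stack: list[tuple[int, str]] = []  # open ancestors, shallowest first
    for indent, label in items:
        # Close any item at the same or a deeper column: it cannot be a parent.
        while stack and stack[-1][0] >= indent:
            stack.pop()
        parents[label] = stack[-1][1] if stack else None
        stack.append((indent, label))
    return parents


outline = [
    (0, "Grid Consistency"),
    (2, "Definition"),
    (2, "Example"),
    (4, "Enterprise portal"),
    (0, "Grid Logic"),
]
print(infer_parents(outline))
# {'Grid Consistency': None, 'Definition': 'Grid Consistency', 'Example': 'Grid Consistency', 'Enterprise portal': 'Example', 'Grid Logic': None}
```

The reconstruction only works because the offsets are consistent; if sibling items drifted by a unit or two, the same procedure would invent spurious nesting.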

Grid Consistency and Comprehension Stability

This section examines consistency as the main variable influencing AI comprehension across long documents, treating grid consistency as a structural determinant of that comprehension. The scope focuses on multi-section analytical content and enterprise documentation, drawing on research and systems analysis associated with MIT CSAIL. The objective is to link stable grid application to reduced model uncertainty during long-context processing.

Definition:

Grid consistency is the uniform application of grid rules across all content sections, preserving alignment, spacing, and positional logic throughout a document.

Claim: Grid consistency improves the long-context reliability of AI comprehension.

Rationale: Structural drift increases model uncertainty during inference, so models interpret drifting layouts less reliably.

Mechanism: Consistent grids reduce re-mapping costs between sections by preserving stable positional expectations.

Counterargument: Short documents with limited scope may not benefit from strict consistency.

Conclusion: Consistency becomes critical when content scales across multiple sections and extended contexts.

Principle: AI systems interpret content more reliably when structural patterns such as grids, section boundaries, and positional hierarchy remain consistent enough to eliminate the need for repeated structural inference.

Consistency as a stabilizing factor in long documents

AI comprehension of grid hierarchy strengthens when models encounter the same structural patterns across consecutive sections. When headings, paragraphs, and data elements maintain identical alignment rules, models can preserve orientation without recalculating structural intent. As a result, interpretation remains stable even as context windows expand.

At the same time, repeated alignment makes grids clearer to AI systems because it reduces ambiguity in boundary detection. Models therefore maintain clearer distinctions between sections, which limits context blending and reduces inference noise across long sequences.

When structure stays the same from section to section, models do not need to relearn layout rules. This stability allows them to focus on meaning instead of structure.
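A rough way to audit grid consistency in the sense defined above is to reduce each section to its sequence of element types and measure how many sections share the most common sequence. The sketch below assumes sections have already been parsed into type labels; `consistency_ratio` and the labels are hypothetical.

```python
from collections import Counter


def consistency_ratio(sections: list[list[str]]) -> float:
    """Share of sections whose element-type sequence matches the most common one.

    1.0 means every section follows the same pattern; an empty document is
    treated as trivially consistent.
    """
    if not sections:
        return 1.0
    signatures = Counter(tuple(section) for section in sections)
    _, count = signatures.most_common(1)[0]
    return count / len(sections)


pattern = ["h2", "definition", "claim", "rationale", "mechanism", "counterargument", "conclusion"]
doc = [
    pattern,
    pattern,
    ["h2", "claim", "definition", "mechanism"],  # drifts from the shared pattern
]
print(consistency_ratio(doc))  # 0.6666666666666666
```

Comparing whole sequences is deliberately strict: a single reordered element counts as drift, which matches the idea that models must re-map structure whenever the pattern changes.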

Context window anchoring through consistent grids

Context window anchoring occurs when structural signals persist across large token spans. Consistent grids provide fixed reference points that models use to anchor attention and preserve internal state. Consequently, transitions between sections require fewer structural adjustments.

As a result, consistent grids lower the structural processing load on the model during inference. This reduction helps explain why comprehension stability increases as documents grow in length and complexity.

Stable grid usage gives models predictable checkpoints. These checkpoints help maintain orientation as content accumulates.

Implications for summarization and generative outputs

Improved summarization accuracy emerges as a direct implication of grid consistency. When models retain clear structural boundaries, they extract and compress information with fewer omissions or misplacements. This effect becomes visible in generative systems that produce overviews, highlights, or structured answers.

In environments such as SGE and Gemini-style summarization layers, consistent grids support cleaner aggregation across sections. As a result, summaries preserve logical order and maintain higher factual alignment with source material.

Consistent structure helps models decide what belongs together. This decision process leads to summaries that remain coherent and complete even for long documents.

Grid Logic as a Reasoning Constraint

This section treats grids as reasoning constraints rather than layout devices, framing grid logic as a mechanism that productively limits the interpretive freedom of AI models. The scope focuses on how constrained structure guides inference paths in analytical content, a pattern observed in policy and research outputs referenced by the OECD. The aim is to show grids as cognitive scaffolds that support clearer reasoning by reducing structural uncertainty.

Definition:

A reasoning constraint is a structural limitation that narrows interpretation paths by restricting how information can be sequenced, compared, and evaluated.

Claim: Grid logic reduces reasoning entropy in AI interpretation.

Rationale: Fewer structural permutations simplify inference paths and lower interpretive variance.

Mechanism: Models reuse learned grid-to-meaning mappings to resolve relationships between sections more efficiently.

Counterargument: Over-constrained grids may suppress nuance when content requires flexible ordering.

Conclusion: Balanced constraint improves reasoning clarity without eliminating expressive range.

Structural constraint as a guide for inference

AI interpretation of grid patterns takes shape when models encounter repeated structural limits that define how content can relate across sections. These limits reduce the number of plausible structural configurations the model must evaluate, which simplifies reasoning decisions during inference. Consequently, constrained structure channels attention toward meaning rather than layout resolution.

At the same time, constraint does not eliminate interpretation. Instead, it narrows the search space in which interpretation occurs. Therefore, models reach conclusions more consistently because fewer structural alternatives compete for attention.

When structure restricts how ideas can connect, models spend less effort deciding where to look next. This shift improves reasoning efficiency without altering semantic content.
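The notion of reasoning entropy can be made concrete with a toy model: if every ordering a structure permits is equally likely, the uncertainty about order is the base-2 logarithm of the number of permitted orderings. The sketch below compares five freely ordered sections, a grid that pins only the first and last section, and a grid that fixes the full sequence. This is an illustrative measure under that uniform-choice assumption, not a quantity models are known to compute.

```python
import math


def ordering_entropy_bits(n_allowed_orders: int) -> float:
    """Entropy in bits of a uniform choice among the orderings a structure permits."""
    return math.log2(n_allowed_orders)


k = 5  # number of sections
free = ordering_entropy_bits(math.factorial(k))             # any of 5! = 120 orders
pinned_ends = ordering_entropy_bits(math.factorial(k - 2))  # first/last fixed: 3! = 6 orders
fixed = ordering_entropy_bits(1)                            # grid fixes a single order
print(round(free, 2), round(pinned_ends, 2), fixed)  # 6.91 2.58 0.0
```

Even partial constraint removes most of the uncertainty here, which mirrors the section's point that balanced constraint can help without fixing everything.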

Ordering effects on understanding and evaluation

Grid ordering improves AI understanding because fixed ordering clarifies precedence and dependency between elements. When sections follow a predictable sequence, models infer which information provides context and which information extends or qualifies it. As a result, reasoning chains maintain direction and avoid circular interpretation.

Moreover, consistent ordering supports comparative reasoning. Models align similar elements across sections more easily when order remains stable, which improves evaluation accuracy in analytical and policy-oriented documents.

Predictable order helps models determine what comes first and what depends on it. This determination stabilizes reasoning across extended documents.

Example from policy document analysis

Policy documents produced within OECD frameworks often apply strict grid rules across reports and datasets. Internal evaluations of automated analysis systems show higher answer fidelity when such grid rules remain consistent across sections, particularly in comparative policy summaries and longitudinal analyses.

This pattern illustrates how structural constraint improves reasoning outcomes. By limiting interpretive paths, grid logic enables models to preserve analytical intent across complex documents.

Implications for reasoning-oriented content

Grid logic functions as a reasoning scaffold rather than a visual aid. When applied consistently, it reduces entropy in model inference and supports clearer analytical outcomes. However, designers must balance constraint with flexibility to preserve nuance where required.

This balance defines the practical value of grid logic in AI-first reasoning environments.

Grid Systems in AI Content Reading Pipelines

This section explains how grids affect AI reading flows from ingestion to summarization, positioning grid systems as a structural optimization layer for AI reading across the full pipeline. The scope includes crawlers, retrievers, and generative layers, reflecting system-level behaviors described in research synthesized by the Allen Institute for Artificial Intelligence. The goal is to establish a pipeline-level understanding of how grid logic shapes efficiency and reliability.

Definition:

A reading pipeline is the sequence of processes that transforms content from ingestion through retrieval into generative output.

Claim: Grid systems optimize pipeline segmentation in AI reading.

Rationale: Predictable segments improve retrieval alignment by reducing boundary ambiguity.

Mechanism: Grid boundaries align with chunking heuristics used during ingestion and retrieval.

Counterargument: Semantic chunking can operate without grids when content signals remain explicit.

Conclusion: Grids enhance efficiency and stability as pipelines scale in size and complexity.

Grid alignment across ingestion and retrieval stages

In AI reading pipelines, grid layout improves the ingestion stage because consistent boundaries produce clean chunking behavior. When crawlers encounter stable alignment and spacing, they detect content limits with fewer errors, which reduces fragment overlap. Consequently, ingestion outputs remain more uniform and easier to index.

During retrieval, grid structure supports alignment between stored chunks and query intent. Because chunk boundaries follow predictable patterns, retrievers match segments more accurately and reduce recall noise. As a result, retrieval latency decreases while precision improves.

When boundaries remain consistent, systems do not need to reinterpret structure at every step. This consistency accelerates early pipeline stages and improves downstream reliability.

Grid boundaries and chunking heuristics

Chunking heuristics rely on signals that indicate where information units begin and end. Grid boundaries provide these signals by enforcing uniform spacing and alignment across sections. Therefore, chunk size remains stable even as document length increases.

This stability reduces reprocessing overhead during retrieval and re-ranking. Models spend less effort reconciling mismatched chunks, which improves throughput under load.

Stable boundaries act as anchors during processing. These anchors help systems keep related information together as content flows through the pipeline.
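A minimal sketch of a boundary-respecting chunker, assuming grid spacing surfaces in extracted text as blank-line breaks between blocks. It packs whole blocks into chunks up to a character budget and never cuts a block in half, so chunk edges always coincide with grid boundaries. The function name and the 800-character default are illustrative assumptions, not settings from any particular retrieval system.

```python
import re


def chunk_at_boundaries(text: str, max_chars: int = 800) -> list[str]:
    """Split on blank-line boundaries, then pack whole blocks into chunks.

    Chunks stay within max_chars unless a single block is longer, in which
    case that block becomes its own chunk rather than being cut mid-unit.
    """
    blocks = [b.strip() for b in re.split(r"\n\s*\n", text) if b.strip()]
    chunks: list[str] = []
    current: list[str] = []
    size = 0
    for block in blocks:
        extra = len(block) + (2 if current else 0)  # 2 for the "\n\n" joiner
        if current and size + extra > max_chars:
            chunks.append("\n\n".join(current))  # close the chunk at a boundary
            current, size = [], 0
            extra = len(block)
        current.append(block)
        size += extra
    if current:
        chunks.append("\n\n".join(current))
    return chunks


text = "\n\n".join(["A" * 300, "B" * 300, "C" * 300])
print([len(c) for c in chunk_at_boundaries(text, max_chars=700)])  # [602, 300]
```

Because chunk size is driven by block boundaries rather than a fixed character window, a document with regular block lengths produces regular chunks, which is the stability the section describes.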

Pipeline effects on generative output

At the generation stage, ordering cues introduced by grids preserve logical sequence. Models reuse structural order learned during ingestion and retrieval, which produces more coherent outputs. Consequently, summaries and explanations follow the original document logic more closely.

This behavior becomes critical in long-form synthesis, where loss of order leads to fragmented answers. Grid-aligned pipelines maintain narrative and analytical continuity.

Predictable ordering helps models decide what to generate first and what to qualify later. This decision improves coherence without adding semantic rules.

Structural roles of grids across pipeline stages

| Pipeline Stage | Grid Role | Outcome |
|---|---|---|
| Ingestion | Boundary cues | Clean chunking |
| Retrieval | Alignment | Faster recall |
| Generation | Ordering | Coherent output |

These roles show that grid systems act as connective tissue across the pipeline. By aligning boundaries, retrieval, and ordering, grids reduce friction between stages and improve end-to-end performance.

Semantic Grids and Meaning Formation

This section explores how grids interact with semantic meaning, treating grid semantics as a stabilizing force in AI comprehension rather than a visual preference. The scope focuses on meaning predictability in analytical content, reflecting research on representation learning and semantic alignment discussed by the Vector Institute at the University of Toronto. The purpose is to connect grid structure to how models preserve and reuse meaning across long contexts.

Definition:

A semantic grid is a grid whose structural arrangement mirrors conceptual hierarchy, mapping positional regularity to relative meaning importance.

Claim: Grid semantics strengthen meaning retention in AI comprehension.

Rationale: Structural-semantic alignment reduces interpretation conflict during inference.

Mechanism: Models align grid position with concept weight, using placement as a proxy for semantic priority.

Counterargument: Poor semantic design negates grid benefits even when structural consistency remains high.

Conclusion: Semantics must co-evolve with grid rules to maintain stable meaning.

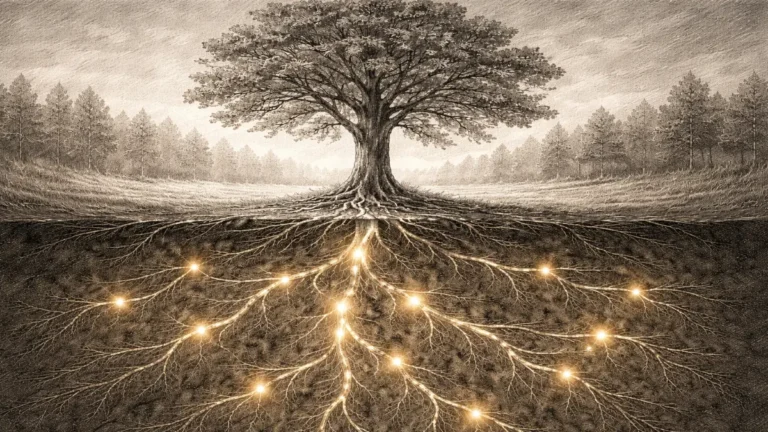

Structural alignment between grids and concepts

Semantic grids for AI emerge when structural placement reflects conceptual relationships rather than arbitrary layout decisions. When primary concepts consistently occupy dominant positions and supporting concepts follow predictable subordinate placement, models infer hierarchy without re-evaluating semantics at each step. As a result, meaning stabilizes across sections even as content density increases.

At the same time, this alignment limits semantic drift. When grids reinforce conceptual order, models avoid reinterpreting the same concept differently across contexts. Therefore, AI comprehension improves under semantic grids because structure continuously signals how meaning should be weighted and related.

Consistent placement teaches models which ideas matter most. Over time, this consistency reduces ambiguity in how concepts relate to one another.

Grid position as a proxy for semantic weight

Grid-based meaning formation in AI depends on the association between position and importance. During training, models observe that certain positions correlate with definitions, claims, or conclusions. Consequently, at inference time they treat these positions as indicators of semantic weight.

This mechanism does not replace meaning extraction. Instead, it accelerates it by narrowing the range of plausible interpretations. Models process high-weight positions first and contextualize lower-weight positions relative to them, which improves coherence in downstream reasoning.

Repeated positional cues reduce the need for constant semantic reassessment. This reduction supports faster and more reliable meaning formation across long documents.
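The position-as-weight idea can be sketched as a simple extractive step: score each segment by its structural role, keep the top k, and then restore document order so the output preserves the source sequence. The role names mirror this article's own Definition/Claim/Conclusion pattern, but the weights in `ROLE_WEIGHT` and the helper `extract_by_role` are hypothetical, chosen only for illustration.

```python
# Hypothetical weights: higher means the role is treated as more central.
ROLE_WEIGHT = {"definition": 3, "claim": 3, "conclusion": 2, "mechanism": 1, "example": 0}


def extract_by_role(segments: list[tuple[str, str]], k: int) -> list[str]:
    """Keep the k highest-weight segments, then return them in document order.

    segments: (role, text) pairs in document order. sorted() is stable, so
    segments with equal weight keep their original relative order.
    """
    ranked = sorted(range(len(segments)), key=lambda i: -ROLE_WEIGHT.get(segments[i][0], 0))
    keep = sorted(ranked[:k])  # restore the source sequence for the output
    return [segments[i][1] for i in keep]


segments = [
    ("example", "Enterprise portals use strict column grids."),
    ("definition", "A grid system constrains placement to fixed coordinates."),
    ("mechanism", "Repeated alignment becomes an ordering prior."),
    ("claim", "Grids stabilize comprehension."),
    ("conclusion", "Consistency matters most in long documents."),
]
print(extract_by_role(segments, k=3))
```

With `k=3` this keeps the definition, claim, and conclusion in their original order; the weighting narrows what is considered first, while the final sort keeps the output faithful to the source sequence.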

Interaction between semantic design and grid rules

Semantic grids require intentional design choices. When content creators align grid structure with conceptual flow, models benefit from both semantic clarity and structural predictability. However, when grids remain consistent but semantic ordering changes arbitrarily, models receive conflicting signals.

Such conflicts increase interpretation cost and reduce retention accuracy. Therefore, semantic design and grid rules must evolve together rather than independently.

Aligned structure and meaning allow models to preserve intent. Misalignment forces models to resolve contradictions that grids alone cannot fix.

Implications for semantic predictability

Semantic predictability improves when grids consistently reflect conceptual hierarchy. Models reuse learned associations between position and meaning across documents, which supports summarization, comparison, and synthesis tasks. This reuse becomes critical in enterprise environments where content spans multiple documents and timeframes.

By embedding semantics into grid structure, content remains interpretable even as scale increases. This integration defines the practical value of semantic grids for AI-first knowledge systems.

Grid-Based Reasoning in Generative Outputs

This section connects grid structure to downstream generative reasoning, framing grid-based reasoning in AI as a determinant of output coherence and logical order. The scope includes summaries, explanations, and analytical responses, reflecting patterns observed in large-scale synthesis systems documented in peer-reviewed studies available on arXiv. The purpose is to explain how structural alignment established during ingestion propagates into generated answers.

Definition:

Grid-based reasoning is an inference process in which structural alignment shapes how models sequence, prioritize, and relate generated statements.

Claim: Grid-based reasoning improves the coherence of AI answers.

Rationale: Models reuse structural order learned during ingestion and retrieval when producing outputs.

Mechanism: Attention follows grid-implied hierarchy, preserving relative importance and sequence during generation.

Counterargument: Creative or exploratory tasks may resist rigid structure and benefit from looser ordering.

Conclusion: Analytical and explanatory outputs benefit most from grid-aligned reasoning.

Structural propagation from input to output

Reasoning grids influence AI generation because models do not discard structural signals after ingestion. Instead, they carry learned ordering cues forward into the generation phase. When input content follows a consistent grid, models replicate that structure when composing summaries or explanations.

As a result, generated outputs maintain clearer progression from definitions to claims and conclusions. This progression reduces logical jumps and improves interpretability, particularly in long-form answers.

Consistent structure gives models a blueprint for how to respond. That blueprint guides generation without constraining meaning.

Example: When grid-aligned documents preserve consistent conceptual boundaries and hierarchy, generative models tend to reproduce that order in summaries and explanations, resulting in outputs with clearer sequencing and reduced structural distortion.

Hierarchical attention during generation

Grid logic in AI comprehension becomes visible when attention prioritizes content based on learned structural hierarchy. Elements placed in dominant grid positions receive earlier and stronger attention during generation. Consequently, models surface core ideas before elaborations or qualifiers.

This behavior supports explanatory clarity. Models align generated statements with the inferred hierarchy of the source, which reduces redundancy and misplaced emphasis.

When hierarchy remains clear, models decide what to say first and what to support later. This ordering stabilizes reasoning across generated text.
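One standard way to let position influence attention is an additive bias on the attention logits before the softmax; relative-position schemes such as ALiBi work this way. The sketch below applies a hypothetical bias to positions flagged as dominant in the grid and shows that, with otherwise equal scores, those positions receive more attention weight. The `bias` value and the dominance flags are assumptions for illustration, not parameters of any real model.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def biased_attention(scores: list[float], dominant: list[bool], bias: float = 1.0) -> list[float]:
    """Softmax over attention logits with an additive bias on dominant positions.

    scores: precomputed query-key logits (e.g. q.k / sqrt(d)) for one query.
    dominant: hypothetical flags marking tokens in dominant grid positions.
    """
    return softmax([s + (bias if d else 0.0) for s, d in zip(scores, dominant)])


scores = [0.5, 0.5, 0.5]  # equal content relevance
print([round(w, 3) for w in biased_attention(scores, [True, False, False])])   # [0.576, 0.212, 0.212]
print([round(w, 3) for w in biased_attention(scores, [False, False, False])])  # [0.333, 0.333, 0.333]
```

The bias does not change what the tokens mean; it only shifts how attention mass is distributed when content scores are otherwise tied, which is the sense in which hierarchy guides generation without constraining meaning.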

Example from academic synthesis systems

Academic synthesis tools that aggregate research papers often rely on structured inputs to generate sectioned outputs. Evaluations of systems processing arXiv papers show that grid-aligned inputs lead to clearer separation between background, methodology, and findings in generated summaries.

This pattern indicates that structural alignment directly affects reasoning flow. When grids encode hierarchy, models reuse that hierarchy during synthesis rather than reconstructing it heuristically.

Implications for generative reliability

Grid-based reasoning strengthens reliability by preserving logical sequence and emphasis. Generated outputs remain closer to source intent because structural cues constrain inference paths. This effect becomes increasingly important as models generate longer and more complex responses.

While creative tasks may tolerate structural looseness, analytical generation depends on predictable reasoning paths. Grid alignment therefore functions as a stabilizing layer for AI-generated explanations and summaries.

Strategic Implications for AI-First Content Design

This section translates technical insights into strategic guidance, focusing on how the impact of grid systems on AI shapes long-term visibility, reuse, and operational reliability. The scope addresses enterprise content that must remain interpretable across indexing, retrieval, and generation layers, aligning with architectural principles articulated by the World Wide Web Consortium. The aim is to provide operational clarity for teams designing content primarily for machine comprehension.

Definition:

AI-first content design refers to content engineered primarily for machine comprehension, prioritizing structural predictability, extraction stability, and reuse across AI systems.

Claim: The impact of grid systems on AI determines long-term content reuse.

Rationale: Reusable structure increases citation probability because models prefer stable, interpretable sources.

Mechanism: Models favor predictable extraction surfaces that reduce ambiguity during parsing and generation.

Counterargument: Over-standardization may reduce human readability in exploratory or narrative contexts.

Conclusion: Dual optimization is required to balance machine efficiency with human usability.

Structural strategy for long-term reuse

AI understanding of content grids becomes a strategic concern when content must persist across multiple AI-driven surfaces. When grid rules remain consistent, models learn to trust the structure and reuse content fragments with minimal reinterpretation. This trust increases the likelihood that content appears in summaries, highlights, and synthesized answers over time.

At the same time, predictable structure supports versioning and updates. Teams can modify semantic content without disrupting structural signals, which preserves continuity for AI systems that track changes across iterations.

Stable grids give models confidence in where information belongs. That confidence increases reuse without requiring repeated validation.

Interpretation consistency across AI surfaces

AI interpretation of grid systems affects how content travels between indexing, retrieval, and generation environments. When grids encode hierarchy and boundaries clearly, models reproduce that structure across different output formats. As a result, explanations, excerpts, and summaries remain aligned with original intent.

This consistency reduces fragmentation across AI surfaces. Content appears coherent whether accessed through search summaries, assistant responses, or internal knowledge tools.

Consistent interpretation reduces the risk of partial or distorted reuse. Structure acts as a safeguard against misalignment.

Balancing standardization and flexibility

Strategic grid design requires restraint. Excessive rigidity can constrain expression and reduce accessibility for human readers. However, insufficient structure undermines machine comprehension and reuse.

Effective AI-first design applies grid rules where stability matters most and allows flexibility where exploration or narrative flow is required. This balance preserves human engagement while maintaining machine reliability.

Balanced grids support both audiences. They guide AI systems without suppressing meaningful variation.

Operational implications for content teams

Grid strategy affects workflows, tooling, and governance. Teams that adopt consistent grids simplify quality control and reduce downstream correction costs. Over time, this approach lowers friction between content creation and AI consumption.

By treating grids as infrastructure rather than decoration, organizations align content operations with AI-driven discovery and reuse. This alignment defines the practical value of grid-aware strategy in AI-first environments.

Checklist:

- Are core concepts anchored through stable structural placement?

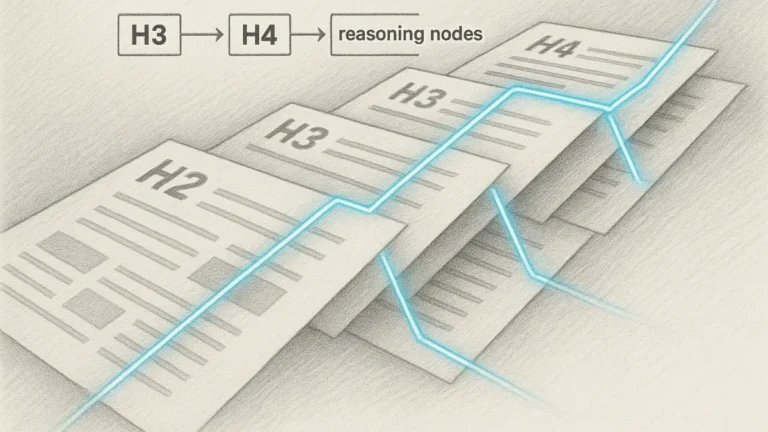

- Do H2–H4 layers preserve consistent positional hierarchy?

- Does each paragraph represent a single reasoning unit?

- Are abstract concepts reinforced through structurally aligned examples?

- Is interpretive ambiguity reduced through local definitions and transitions?

- Does the overall structure support progressive AI interpretation?
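Part of this checklist can be checked mechanically. The sketch below covers only the hierarchy question and assumes heading levels have already been extracted as integers. The function name and the H2 to H4 defaults are illustrative choices. The remaining questions, such as whether a paragraph holds a single reasoning unit, still call for editorial review.

```python
def check_hierarchy(levels: list[int], top: int = 2, deepest: int = 4) -> list[str]:
    """Report heading-level problems in document order.

    Flags (1) a document that does not open at the top level, (2) levels
    outside the allowed band, and (3) any step that descends more than one
    level at once, such as H2 followed directly by H4.
    """
    problems = []
    for index, level in enumerate(levels):
        if index == 0 and level != top:
            problems.append(f"heading 0: starts at H{level}, expected H{top}")
        if not top <= level <= deepest:
            problems.append(f"heading {index}: H{level} is outside H{top}-H{deepest}")
        if index > 0 and level > levels[index - 1] + 1:
            problems.append(f"heading {index}: jumps from H{levels[index - 1]} to H{level}")
    return problems


print(check_hierarchy([2, 3, 4, 3, 2, 3]))  # []
print(check_hierarchy([2, 3, 4, 2, 4]))     # ['heading 4: jumps from H2 to H4']
```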

Future Research Directions on Grid–AI Interaction

This section outlines unresolved research questions and emerging directions, positioning the impact of grid systems on AI as a growing determinant of model behavior. The scope includes multimodal and adaptive grids as models expand beyond text-only processing, reflecting ongoing advances reported by DeepMind Research. The purpose is to frame future investigation areas where grid logic intersects with layout awareness and learning dynamics.

Claim: The impact of grid systems on AI will increase as models gain layout awareness.

Rationale: Multimodal training regimes increasingly emphasize spatial logic alongside textual semantics.

Mechanism: Vision-language models internalize grid cues by jointly learning spatial arrangement and linguistic meaning.

Counterargument: Text-only systems may lag in exploiting grid signals due to limited spatial supervision.

Conclusion: Grid research remains strategically relevant as model architectures evolve.

Multimodal grids and spatial learning

The effect of grid alignment on AI models becomes more pronounced as multimodal systems integrate vision, layout, and language signals. When models process documents that combine text with visual structure, grids provide a common coordinate system linking spatial placement to semantic roles. As a result, models learn to associate position, grouping, and hierarchy across modalities.

This integration expands the role of grids beyond text organization. Grids begin to function as cross-modal alignment layers that synchronize how models interpret visual and linguistic inputs together.

Consistent alignment across modalities allows models to reuse spatial knowledge. This reuse accelerates learning and reduces ambiguity in multimodal reasoning.
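As a toy illustration of a shared coordinate system, the sketch below snaps element positions onto grid cells so that each placement can be paired with a semantic label. Every detail is assumed for the example: the `Box` type, a 600-unit page split into four equal columns, and a 50-unit row height. It does not show how any specific vision-language model encodes layout.

```python
from dataclasses import dataclass

@dataclass
class Box:
    """A detected page element (hypothetical type for this example)."""
    label: str  # semantic role, e.g. "heading" or "figure"
    x: float    # left edge, in page units
    y: float    # top edge, in page units

def to_cell(box: Box, page_width: float, columns: int, row_height: float) -> tuple[int, int]:
    """Map a box's top-left corner to a (row, column) cell on a uniform grid.

    Columns are equal-width slices of the page; positions at or beyond the
    right edge are clamped into the last column.
    """
    column_width = page_width / columns
    col = min(int(box.x // column_width), columns - 1)
    row = int(box.y // row_height)
    return row, col


boxes = [Box("heading", 40, 10), Box("text", 40, 60), Box("figure", 330, 60)]
cells = {b.label: to_cell(b, page_width=600, columns=4, row_height=50) for b in boxes}
print(cells)  # {'heading': (0, 0), 'text': (1, 0), 'figure': (1, 2)}
```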

Adaptive grids in dynamic content environments

Future systems increasingly encounter dynamic content where layout adapts to context, device, or user interaction. Grid-aware AI models must therefore generalize beyond fixed templates. Research directions focus on how models can recognize grid intent even when spacing, density, or orientation changes.

Adaptive grids challenge models to infer structural invariants rather than fixed coordinates. Success in this area depends on learning relative relationships instead of absolute positions.

Models that capture invariant grid principles remain robust across formats. This robustness supports interpretation in responsive and adaptive interfaces.
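A small example shows the difference between fixed coordinates and relative relationships. The sketch below uses hypothetical element names and coordinates and reduces each layout to reading order: top to bottom, then left to right. Two layouts with different spacing produce the same order, so a representation built on that order survives the change. The sketch is deliberately simple. It treats elements as sharing a row only when their vertical coordinates match exactly, and a real system would need a tolerance for near-alignment.

```python
Position = tuple[float, float]  # (x, y) with y increasing downward

def reading_order(layout: dict[str, Position]) -> list[str]:
    """Return element names sorted top-to-bottom, then left-to-right.

    Only the relative order of coordinates matters, so changing the
    spacing between elements leaves the result unchanged as long as
    no element moves past another.
    """
    return sorted(layout, key=lambda name: (layout[name][1], layout[name][0]))


# Hypothetical layouts: same arrangement, different spacing.
spacious = {"title": (0, 0), "summary": (0, 80), "aside": (480, 80), "body": (0, 240)}
compact = {"title": (0, 0), "summary": (0, 30), "aside": (260, 30), "body": (0, 90)}

print(reading_order(spacious))  # ['title', 'summary', 'aside', 'body']
print(reading_order(compact) == reading_order(spacious))  # True
```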

Implications for text-only and hybrid models

Text-only systems may underutilize grid signals because they lack explicit spatial inputs. However, hybrid approaches that encode inferred layout from markup or document structure can partially bridge this gap. Research explores how abstract grid representations can transfer to language-only models.

This line of inquiry will determine whether grid benefits remain exclusive to multimodal systems or become accessible across architectures. Outcomes will shape how broadly grid-aware design influences AI comprehension.

Hybrid representations allow models to approximate spatial reasoning. This approximation extends grid benefits without full visual input.
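One way to approximate this for a text-only model is to serialize the markup's nesting into the text itself. The sketch below uses Python's standard `html.parser` module. The `[tag@depth]` prefix is a hypothetical convention chosen for illustration, not an established input format for any model.

```python
from html.parser import HTMLParser

# Elements that never have a closing tag, so they must not affect depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img",
             "input", "link", "meta", "source", "track", "wbr"}

class LayoutSerializer(HTMLParser):
    """Flatten HTML into text lines tagged with element name and nesting depth."""

    def __init__(self):
        super().__init__()
        self.stack: list[str] = []
        self.lines: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag not in VOID_TAGS:
            self.stack.append(tag)

    def handle_endtag(self, tag):
        # Pop back to the matching open tag, tolerating unclosed children.
        if tag in self.stack:
            while self.stack and self.stack.pop() != tag:
                pass

    def handle_data(self, data):
        text = data.strip()
        if text and self.stack:
            self.lines.append(f"[{self.stack[-1]}@{len(self.stack)}] {text}")

def serialize(html: str) -> str:
    parser = LayoutSerializer()
    parser.feed(html)
    parser.close()
    return "\n".join(parser.lines)


html = "<section><h2>Adaptive grids</h2><p>Layouts change.</p><aside><p>Note</p></aside></section>"
print(serialize(html))
# [h2@2] Adaptive grids
# [p@2] Layouts change.
# [p@3] Note
```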

Strategic relevance of grid research

Grid research intersects with model architecture, training data, and deployment contexts. As AI systems increasingly operate on complex, structured content, understanding grid interaction becomes foundational rather than optional. Future work will likely formalize grid-aware objectives within training pipelines.

By treating grids as learnable structural signals, research aligns model development with real-world content organization. This alignment defines why grid–AI interaction remains a priority area for investigation.

Interpretive Logic of Grid-Oriented Page Structure

- Grid-aligned hierarchy resolution. Stable alignment between H2→H3→H4 layers allows AI systems to correlate positional order with semantic depth, supporting consistent boundary recognition.

- Positional regularity encoding. Recurrent grid placement establishes repeatable spatial signals that generative models interpret as structural continuity rather than decorative layout.

- Semantic weight distribution. Grid positioning implicitly communicates relative concept importance, enabling models to prioritize meaning without explicit ranking markers.

- Cross-section structural coherence. Uniform grid logic across sections reduces interpretive re-mapping and preserves contextual orientation during long-context processing.

- Inference stability through layout constraint. Constrained structural variation limits interpretive entropy, allowing reasoning processes to remain anchored to consistent structural cues.
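The cross-section coherence point can be expressed as a simple hypothetical metric: the share of sections whose block pattern matches the most common pattern. This is a toy measure for illustration, not an established score. The block labels below are assumptions modeled on this article's claim, rationale, mechanism, counterargument, and conclusion blocks.

```python
from collections import Counter

Shape = tuple[str, ...]  # ordered block labels within one section

def coherence(shapes: list[Shape]) -> float:
    """Fraction of sections that follow the most common block pattern.

    1.0 means every section uses the same template; lower values mean
    more structural variation between sections.
    """
    if not shapes:
        return 1.0
    _, count = Counter(shapes).most_common(1)[0]
    return count / len(shapes)


template = ("claim", "rationale", "mechanism", "counterargument", "conclusion")
sections = [template, template, template, ("claim", "conclusion")]
print(coherence(sections))  # 0.75
```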

This structural configuration explains how grid-oriented pages remain interpretable as coherent systems, enabling reliable parsing and reasoning within generative and AI-driven interpretation environments.

FAQ: Grid Systems and AI Comprehension

What is AI grid comprehension?

AI grid comprehension describes how artificial intelligence systems interpret content structure through consistent alignment, spacing, and positional order.

Why do grid systems matter for AI understanding?

Grid systems provide predictable structural signals that help AI models segment, prioritize, and relate information across long documents.

How do grid systems influence AI reasoning?

Grids constrain structural variation, which reduces interpretive ambiguity and supports more stable reasoning paths during inference and generation.

Do grid systems affect generative AI outputs?

Generative models reuse learned structural order from grid-aligned inputs, resulting in clearer sequencing and more coherent summaries and explanations.

How do grids support long-context processing?

Consistent grids anchor attention across extended contexts, helping models preserve boundaries and maintain orientation as content length increases.

Are grid systems relevant for multimodal AI models?

Multimodal models learn to associate spatial arrangement with semantic roles, making grid alignment increasingly important as layout awareness grows.

Can AI interpret content without grid systems?

AI can interpret unstructured content, but the absence of grid consistency increases uncertainty and raises interpretation cost in complex documents.

What role do grids play in content reuse?

Grid-aligned content remains easier for AI systems to extract, reference, and reuse across indexing, retrieval, and generation pipelines.

Do grid systems improve semantic stability?

When grid structure mirrors conceptual hierarchy, AI systems retain meaning more consistently and reduce semantic drift across sections.

Will grid importance increase in future AI systems?

As AI models gain stronger layout and spatial awareness, grid-based structural signals are expected to play a larger role in interpretation.

Glossary: Key Terms in Grid-Based AI Comprehension

This glossary defines core terminology used throughout the article to ensure consistent interpretation of grid logic, structural signals, and AI comprehension mechanisms.

AI Grid Comprehension

The capability of AI systems to interpret content meaning through consistent alignment, spacing, and positional order defined by grid systems.

Grid System

A rule-based spatial and logical alignment framework that constrains content placement into predictable coordinates and intervals.

Grid Consistency

The uniform application of grid rules across all content sections, preserving stable structural signals for AI interpretation.

Grid Signal

A recurring positional pattern that acts as a non-verbal structural cue, guiding segmentation and priority without adding semantic meaning.

Semantic Grid

A grid configuration whose structural hierarchy mirrors conceptual relationships, enabling stable meaning formation.

Reasoning Constraint

A structural limitation imposed by grid logic that narrows interpretive paths and reduces reasoning entropy during inference.

Grid-Based Reasoning

An inference process in which structural alignment guides how AI systems sequence, prioritize, and relate generated statements.

Reading Pipeline

The end-to-end sequence through which content moves from ingestion and retrieval to generative output in AI systems.

Structural Stability

The degree to which grid logic remains consistent across sections, enabling reliable long-context comprehension.

Positional Regularity

The repetition of alignment and spacing patterns that allows AI systems to associate position with structural function.