Last Updated on December 20, 2025 by PostUpgrade

Data-Driven Decisions in the AI Visibility Era

Decision-making increasingly occurs in environments where AI systems mediate visibility, prioritization, and interpretation of information. This article explains how data-driven AI decisions are formed, evaluated, and governed when organizational outcomes depend on machine-readable structure rather than human intuition.

The scope covers enterprise strategy, analytics practices, and governance frameworks that define how decisions remain reliable, auditable, and scalable under AI-driven visibility constraints.

Foundations of Data-Driven AI Decisions

Decision-making now operates in environments where AI systems actively filter, rank, and surface information for human and machine consumption, which makes data-driven AI decisions a structural requirement rather than a contextual choice. In this context, data-driven AI decisions form the structural basis for consistent outcomes, because they allow systems to apply the same logic across identical conditions, as demonstrated in applied work from the Stanford Natural Language Institute. This section establishes the conceptual foundation that enables enterprise decisions to remain reliable under AI-mediated visibility.

Definition: AI decision understanding is the ability of an AI system to interpret decision intent, input signals, and evaluation logic in a way that enables consistent outcomes, traceable reasoning, and reliable reuse of decisions across organizational contexts.

Claim: Data-driven AI decisions replace intuition-based judgment with formalized and machine-interpretable decision logic.

Rationale: AI systems cannot interpret implicit human reasoning; decision logic must therefore exist in explicit, structured form to remain reusable and verifiable.

Mechanism: Organizations encode inputs, thresholds, and rules into structured data pipelines that allow AI systems to evaluate options deterministically.

Counterargument: In fast-changing or low-impact situations, human intuition can react faster than structured decision logic.

Conclusion: However, at enterprise scale, only structured decisions sustain consistency, auditability, and long-term AI-driven accessibility.

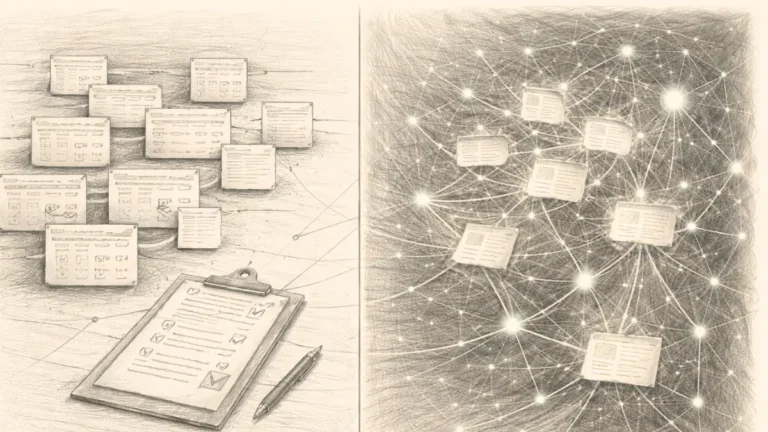

Conceptual Shift from Intuition to Structured Decisions

Traditional decision-making relies on human experience, contextual awareness, and informal reasoning. However, when AI systems mediate visibility, such reasoning loses transferability because other systems cannot read, reproduce, or validate it. As a result, intuition-driven decisions fail to scale across automated environments.

Therefore, structured decisions externalize reasoning into explicit criteria that AI systems can process repeatedly. This approach enables consistent outcomes across datasets and teams, while also allowing organizations to inspect and refine decision logic over time. Consequently, decision quality becomes a property of system design rather than individual judgment.

In simple terms, intuition operates inside human cognition, whereas structured decisions operate outside it, where AI systems can apply the same logic without reinterpretation.

Core Properties of Data-Driven AI Decisions

Data-driven AI decisions rely on a small set of defining properties that separate them from ad hoc or experience-based choices. These properties establish clear boundaries within which AI systems can interpret, evaluate, and reuse decision logic without ambiguity.

As a result, organizations can scale decision-making without losing control or accountability. Without these properties, even advanced AI models produce inconsistent or non-auditable outcomes.

- reproducible logic

- auditable inputs

- measurable outcomes

These properties define the minimum threshold for decisions to qualify as data-driven in AI systems.
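The three properties above can be illustrated with a minimal sketch. It assumes a single threshold rule; the names `DecisionRecord`, `decide`, `min_score`, and `rule_version` are hypothetical and chosen only for illustration, not taken from any specific system.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class DecisionRecord:
    inputs_digest: str  # auditable inputs: fingerprint of exactly what was evaluated
    rule_version: str   # reproducible logic: which rule set produced the outcome
    outcome: str        # measurable outcome: a value that can be counted later


def decide(inputs: dict, min_score: float = 0.7, rule_version: str = "v1") -> DecisionRecord:
    """Apply one fixed threshold rule (hypothetical) and record what was evaluated."""
    # Serialize with sorted keys so equal inputs always yield the same digest.
    canonical = json.dumps(inputs, sort_keys=True).encode("utf-8")
    digest = hashlib.sha256(canonical).hexdigest()
    outcome = "approve" if inputs["score"] >= min_score else "review"
    return DecisionRecord(digest, rule_version, outcome)


first = decide({"score": 0.82, "region": "EU"})
second = decide({"region": "EU", "score": 0.82})  # same inputs, different key order
```

Because the rule is explicit and the inputs are fingerprinted, `first` and `second` are equal records: identical conditions produce identical, traceable results.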

Decision Intelligence as an AI Capability

As organizations delegate more evaluative tasks to AI systems, decision logic evolves from a supporting function into a standalone capability. In this context, AI decision intelligence defines how systems assess alternatives, resolve trade-offs, and prioritize outcomes under structured constraints, a distinction explored in systems research from MIT CSAIL. This section explains why decision intelligence differs fundamentally from automation and why enterprises must treat it as a separate layer of capability.

Definition: AI decision intelligence refers to the capability of AI systems to evaluate options, apply explicit constraints, and select outcomes based on structured logic rather than executing predefined tasks.

Claim: AI decision intelligence represents a distinct capability that goes beyond task automation.

Rationale: Automation executes predefined actions, whereas decision intelligence evaluates competing options under variable conditions.

Mechanism: AI systems combine structured data, scoring logic, and constraint evaluation to determine optimal or acceptable outcomes.

Counterargument: In stable environments with fixed rules, traditional automation can deliver sufficient results without decision intelligence.

Conclusion: However, when conditions change or trade-offs emerge, only decision intelligence can maintain consistent and explainable outcomes.

Principle: AI-supported decisions remain reliable only when decision logic, data assumptions, and evaluation criteria stay structurally explicit and stable across time, systems, and use cases.

Decision Intelligence vs Task Automation

Task automation focuses on executing known procedures with minimal variation. It assumes that inputs follow predictable patterns and that predefined rules remain valid across situations. As a result, automation excels in repetitive workflows but struggles when context or priorities shift.

Decision intelligence, by contrast, operates at the level of evaluation rather than execution. It interprets inputs, weighs alternatives, and selects outcomes based on explicit logic models. Therefore, organizations rely on decision intelligence when decisions require comparison, prioritization, or justification rather than simple task completion.

In simpler terms, automation follows instructions, while decision intelligence decides which instruction matters under current conditions.
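The contrast can be sketched in a few lines. This is an illustrative example under stated assumptions: the `Option` fields, the budget constraint, and the `value - risk_weight * risk` scoring formula are all hypothetical, not a prescribed method.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Option:
    name: str
    value: float
    cost: float
    risk: float


def automate(option: Option) -> str:
    """Task automation: run the predefined action, with no comparison."""
    return f"executed {option.name}"


def choose(options: list[Option], budget: float, risk_weight: float) -> Optional[Option]:
    """Decision step: filter by an explicit constraint, score, then select."""
    feasible = [o for o in options if o.cost <= budget]
    if not feasible:
        return None  # no option satisfies the constraint
    return max(feasible, key=lambda o: o.value - risk_weight * o.risk)


options = [
    Option("expand", value=10.0, cost=8.0, risk=6.0),
    Option("maintain", value=6.0, cost=3.0, risk=1.0),
    Option("acquire", value=15.0, cost=20.0, risk=4.0),
]

cautious = choose(options, budget=10.0, risk_weight=1.0)  # selects "maintain"
bold = choose(options, budget=10.0, risk_weight=0.1)      # selects "expand"
```

The same options yield different selections when the risk weighting changes, while `automate` would run whichever action it was handed regardless of conditions.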

Where Decision Intelligence Is Applied

Decision intelligence appears most clearly in systems that must choose between competing options rather than perform a single action. These systems operate in environments where priorities change, resources remain limited, and outcomes require justification.

Accordingly, organizations deploy decision intelligence in contexts where logic must remain explicit and reviewable. These applications rely on structured evaluation rather than implicit procedural flow.

- prioritization engines

- resource allocation systems

- risk evaluation pipelines

These applications share a common requirement: explicit decision logic rather than implicit automation.

Data Quality as a Determinant of AI Decisions

AI systems evaluate options based on the signals they receive, so data quality in AI decisions directly determines whether outcomes remain reliable at scale. In enterprise environments, even advanced models fail when input data lacks accuracy, consistency, or temporal relevance, a dependency formalized in data governance standards from the National Institute of Standards and Technology. This section explains how data quality shapes decision behavior and why poor data propagates systemic risk.

Definition: Data quality describes the degree to which data remains accurate, complete, consistent, and timely enough to support correct interpretation and evaluation by AI systems.

Claim: Data quality acts as a primary determinant of AI decision outcomes rather than a secondary optimization factor.

Rationale: AI systems apply logic to available data without contextual correction, which means errors in inputs directly translate into errors in decisions.

Mechanism: Structured pipelines ingest, validate, and transform data before decision logic evaluates signals against defined thresholds and constraints.

Counterargument: Large datasets can sometimes compensate for individual data defects through statistical aggregation.

Conclusion: However, at the level of individual decisions, even small data defects can distort prioritization and undermine trust in AI-driven outcomes.

Why Data Quality Directly Shapes Decisions

AI decision logic operates on the assumption that incoming data reflects reality within defined tolerances. When data maintains internal consistency and temporal alignment, decision models can compare options and assign scores with predictable behavior. As a result, decision outcomes remain stable across repeated evaluations.

However, when data degrades, decision logic amplifies rather than corrects these defects. Inconsistent or incomplete inputs distort scoring functions and shift outcomes away from intended priorities. Therefore, data quality directly shapes not only accuracy but also the internal coherence of AI decisions.

Put simply, AI systems decide based on what data shows, not on what data should have shown.

When Data Quality Becomes a Systemic Risk

Data quality turns into a systemic risk when defects persist across pipelines and influence multiple decision layers. In such cases, AI systems propagate the same distorted signals into prioritization, allocation, and risk assessment workflows. Consequently, errors compound rather than remain isolated.

The following mapping illustrates how specific data issues translate into concrete decision failures across AI-driven environments.

| Data issue | Decision consequence |
|---|---|
| Incomplete data | Incorrect prioritization |
| Biased samples | Systemic skew |
| Delayed updates | Lagging decisions |

These failure patterns demonstrate that unmanaged data quality issues do not remain technical problems but evolve into organization-wide decision risks.
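One way to keep such defects from reaching decision logic is a validation gate placed ahead of scoring. The sketch below covers only two of the issues discussed (incomplete data and delayed updates); detecting biased samples needs population-level analysis and is out of scope here. The field names in `REQUIRED_FIELDS` and the one-day `MAX_AGE` tolerance are hypothetical.

```python
from datetime import datetime, timedelta, timezone

REQUIRED_FIELDS = ("customer_id", "score", "updated_at")  # hypothetical schema
MAX_AGE = timedelta(days=1)                                # hypothetical freshness tolerance


def quality_issues(record: dict, now: datetime) -> list[str]:
    """Return the quality problems that should block scoring for this record."""
    issues = []
    missing = [f for f in REQUIRED_FIELDS if record.get(f) is None]
    if missing:
        issues.append("incomplete: missing " + ", ".join(missing))
    updated_at = record.get("updated_at")
    if updated_at is not None and now - updated_at > MAX_AGE:
        issues.append("stale: last update older than tolerance")
    return issues


now = datetime(2025, 1, 10, tzinfo=timezone.utc)
fresh = {"customer_id": "c1", "score": 0.9, "updated_at": now - timedelta(hours=2)}
stale = {"customer_id": "c2", "score": None, "updated_at": now - timedelta(days=5)}
```

A record such as `fresh` passes with an empty issue list, while `stale` is flagged for both a missing score and an outdated timestamp before any prioritization logic sees it.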

Modeling Decision Logic in AI Systems

Enterprises formalize evaluation criteria when they scale decision-making across AI systems, which makes AI decision modeling a core requirement rather than a technical detail. In this context, organizations encode decision logic in explicit structures so that models can apply the same reasoning across datasets and scenarios, a principle documented in decision system research from Carnegie Mellon University’s Language Technologies Institute. This section explains how decision logic takes shape inside AI systems and why model choice directly affects control and interpretability.

Definition: AI decision modeling refers to the formal representation of decision rules, thresholds, and scoring logic that AI systems use to evaluate options and select outcomes.

Claim: AI decision modeling determines how reliably AI systems can apply decision logic under changing conditions.

Rationale: AI systems require explicit representations of logic to compare alternatives and justify outcomes consistently.

Mechanism: Organizations encode rules, thresholds, and scoring functions into structured models that transform input signals into ranked or classified decisions.

Counterargument: End-to-end learning models can infer decision patterns without explicit logic definitions.

Conclusion: However, explicit modeling remains necessary when organizations require transparency, governance, and controlled decision behavior.

How Decision Logic Is Represented

AI systems represent decision logic through layered structures that separate inputs, evaluation criteria, and outcome selection. At the base level, data pipelines deliver normalized signals, while intermediate layers apply scoring functions or thresholds that reflect organizational priorities. As a result, decision logic becomes inspectable and repeatable rather than implicit.

Moreover, structured representations allow organizations to update logic without retraining entire models. Teams can adjust thresholds, add constraints, or refine scoring rules while preserving overall system behavior. Therefore, decision logic evolves through configuration rather than opaque model changes.

In simple terms, AI systems decide by following explicit rules and scores that organizations design in advance.
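The idea that decision logic evolves through configuration can be shown with a small sketch. The weights, the threshold values, and the signal names are hypothetical; the point is only that the rule lives in data rather than inside a trained model.

```python
DECISION_CONFIG = {
    "weights": {"revenue": 0.6, "risk": -0.4},  # hypothetical scoring weights
    "approve_threshold": 0.25,                   # hypothetical cut-off
}


def score(signals: dict, config: dict) -> float:
    """Weighted sum of normalized input signals."""
    return sum(weight * signals[name] for name, weight in config["weights"].items())


def classify(signals: dict, config: dict) -> str:
    """Turn a score into a decision using the configured threshold."""
    return "approve" if score(signals, config) >= config["approve_threshold"] else "reject"


signals = {"revenue": 0.8, "risk": 0.5}  # assumed to be normalized upstream

# Tightening the policy is a configuration change, not a model change.
stricter = {**DECISION_CONFIG, "approve_threshold": 0.35}
```

With these numbers the weighted score is about 0.28, so the same signals are approved under `DECISION_CONFIG` and rejected under `stricter`, and the scoring code is unchanged in both cases.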

Trade-offs Between Model Types

Different model types trade flexibility against transparency and control. Rule-based systems expose decision logic directly, while probabilistic models balance flexibility with partial interpretability. Deep models, however, prioritize pattern recognition over explicit reasoning, which reduces direct control over individual decisions.

Consequently, organizations must align model choice with decision criticality and governance needs. When accountability matters, transparent models support review and correction. When adaptability dominates, less explicit models may perform better but limit explainability.

Put simply, greater model flexibility often reduces visibility into how decisions form.

| Model type | Transparency | Control |
|---|---|---|
| Rule-based | High | High |
| Probabilistic | Medium | Medium |
| Deep models | Low | Low |

Decision Support Systems in AI Environments

As organizations integrate AI into operational and strategic workflows, AI decision support systems emerge as a critical interface between automated evaluation and human judgment. These systems structure how recommendations appear, how alternatives compare, and how outcomes remain reviewable, a distinction examined in applied systems analysis published by IEEE Spectrum. This section explains how decision support differs from autonomous decision-making and why human oversight remains structurally embedded.

Definition: AI decision support systems are AI-enabled frameworks that assist human decision-makers by evaluating options, simulating outcomes, and presenting structured recommendations without executing final decisions.

Claim: AI decision support systems enhance decision quality by structuring evaluation rather than replacing human authority.

Rationale: Complex decisions require contextual judgment, accountability, and ethical oversight that fully autonomous systems cannot provide.

Mechanism: AI systems analyze inputs, apply decision logic, and surface ranked options while humans retain responsibility for final selection.

Counterargument: In highly standardized environments, autonomous decision execution can outperform human-in-the-loop systems.

Conclusion: However, when accountability and justification matter, decision support systems preserve control while improving analytical rigor.

Human–AI Interaction Model

Human–AI interaction in decision support systems centers on collaboration rather than delegation. AI systems process large volumes of data and apply consistent evaluation logic, while humans interpret results within organizational, ethical, and situational contexts. As a result, neither party operates independently.

Moreover, this interaction model prevents over-reliance on automated outputs. Humans review assumptions, challenge recommendations, and adjust decisions based on external knowledge that models cannot encode. Therefore, decision support systems function as analytical partners rather than decision authorities.

In simple terms, AI systems prepare decisions, and humans finalize them.

What AI Decision Support Actually Provides

AI decision support systems deliver value by structuring complex evaluations into manageable and comparable outputs. These systems do not act, but instead clarify options and consequences so that humans can decide with greater confidence.

Accordingly, organizations rely on decision support to reduce cognitive load while preserving responsibility. The following capabilities define what such systems consistently provide.

- comparative evaluations

- scenario simulations

- ranked recommendations

These functions support judgment without replacing accountability.
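A minimal sketch of this division of labor follows, assuming option scores already exist. The function names `recommend` and `finalize`, and the vendor names, are hypothetical. The system ranks and explains; the final selection requires an explicit human choice.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Recommendation:
    option: str
    score: float
    rationale: str


def recommend(scores: dict[str, float]) -> list[Recommendation]:
    """Rank options by score; this function never executes anything."""
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    return [Recommendation(name, s, f"score {s:.2f}") for name, s in ranked]


def finalize(recommendations: list[Recommendation], human_choice: Optional[str]) -> str:
    """The final selection requires an explicit human choice from the list."""
    valid = {r.option for r in recommendations}
    if human_choice not in valid:
        raise ValueError("a human must select one of the recommended options")
    return human_choice


ranked = recommend({"vendor_a": 0.71, "vendor_b": 0.64, "vendor_c": 0.80})
final = finalize(ranked, human_choice="vendor_a")  # reviewer overrides the top rank
```

The reviewer here picks the second-ranked option, which the system permits; what it does not permit is finalizing without any human selection.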

Evaluating and Validating AI Decisions

Organizations rely on AI decision evaluation methods to determine whether AI-supported outcomes remain correct, consistent, and aligned with business intent over time. In AI-mediated environments, evaluation moves beyond one-time testing and becomes an ongoing control function, as reflected in empirical assessment frameworks published by the OECD Data Explorer. This section explains how evaluation criteria and validation processes protect decision integrity at scale.

Definition: AI decision evaluation methods are structured approaches used to assess the correctness, reliability, and long-term performance of decisions produced or supported by AI systems.

Claim: Systematic evaluation is essential to maintain trust and reliability in AI-driven decisions.

Rationale: AI systems can continue producing decisions even when underlying assumptions drift, which makes unchecked outcomes increasingly unreliable.

Mechanism: Organizations apply predefined criteria and feedback loops to compare expected and actual decision outcomes over time.

Counterargument: In low-impact or experimental use cases, continuous evaluation may appear unnecessary.

Conclusion: However, at enterprise scale, evaluation and validation function as safeguards against silent decision degradation.

Evaluation Criteria for AI Decisions

Evaluation criteria define how organizations judge whether AI-supported decisions perform as intended. These criteria focus on observable outcomes rather than internal model behavior, which allows consistent assessment across systems and teams. As a result, evaluation remains comparable even when underlying models change.

Moreover, clearly defined criteria prevent subjective interpretation of decision quality. When organizations align on what success means, they can detect deviations early and correct decision logic before risks escalate.

- accuracy

- consistency

- outcome stability

Together, these criteria establish a shared baseline for determining whether AI decisions remain acceptable over time.

In simple terms, evaluation criteria answer whether decisions stay correct, repeatable, and reliable under real conditions.

Validation as a Continuous Process

Validation extends evaluation by embedding checks into the lifecycle of AI decisions rather than treating them as one-time events. Instead of validating only before deployment, organizations monitor outcomes as data, contexts, and priorities evolve. Therefore, validation becomes an ongoing mechanism rather than a static gate.

This continuous approach allows teams to detect drift, bias, or performance decline as soon as it appears. By linking validation to operational feedback, organizations preserve decision integrity without interrupting workflows or delaying outcomes.

Put simply, validation ensures that AI decisions remain trustworthy not only at launch but throughout their use.
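As a rough illustration of validation as an ongoing check, the sketch below tracks a rolling window of decision outcomes and flags when recent correctness falls below a baseline. The class name `OutcomeMonitor`, the baseline, the tolerance, and the window size are assumptions chosen for the example; production monitoring would typically use more robust statistical tests.

```python
from collections import deque


class OutcomeMonitor:
    """Track recent decision correctness and flag drift below a baseline."""

    def __init__(self, baseline: float, tolerance: float, window: int):
        self.baseline = baseline
        self.tolerance = tolerance
        self.results = deque(maxlen=window)  # keeps only the most recent outcomes

    def record(self, decision_was_correct: bool) -> None:
        self.results.append(decision_was_correct)

    def drifting(self) -> bool:
        if len(self.results) < self.results.maxlen:
            return False  # not enough evidence yet
        recent = sum(self.results) / len(self.results)
        return recent < self.baseline - self.tolerance


monitor = OutcomeMonitor(baseline=0.9, tolerance=0.05, window=10)
for correct in [True] * 10:
    monitor.record(correct)
stable = monitor.drifting()    # full window, all correct

for correct in [False] * 3:
    monitor.record(correct)
degraded = monitor.drifting()  # window now holds 7 correct and 3 incorrect
```

The check runs on every new outcome, so a decline surfaces during operation rather than at the next scheduled review.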

A large financial services firm implemented automated credit decisioning based on historical repayment data. Initially, evaluation showed high accuracy, but validation later revealed declining outcome stability as economic conditions shifted. By embedding continuous validation, the organization adjusted decision thresholds and prevented systemic misclassification before losses escalated.

Example: An organization that documents decision criteria, outcome metrics, and validation intervals allows AI systems to attribute results to specific decisions, which increases confidence in future evaluations and supports consistent governance across teams.

Transparency, Explainability, and Accountability

As AI systems influence prioritization and outcomes, AI decision transparency becomes a structural requirement rather than an ethical add-on. Organizations must ensure that decision logic remains inspectable and attributable across systems, a requirement formalized in policy and technical guidance from the European Commission Joint Research Centre. This section explains what transparency entails in practice and how accountability boundaries remain enforceable in AI-mediated decisions.

Definition: AI decision transparency is the degree to which stakeholders can identify the inputs, rules, and reasoning that lead an AI system to a specific decision outcome.

Claim: Transparency enables accountability by making AI decision logic observable and traceable.

Rationale: Without visibility into how decisions form, organizations cannot assign responsibility or correct errors reliably.

Mechanism: Transparent systems expose inputs, logic paths, and outcome explanations in forms that humans and auditors can review.

Counterargument: Full transparency may reduce performance or expose sensitive logic in competitive environments.

Conclusion: Nevertheless, controlled transparency remains essential wherever decisions affect rights, resources, or regulated outcomes.

What Must Be Explainable

Explainability focuses on specific elements of the decision process rather than on entire models. Organizations achieve practical explainability when stakeholders can understand why a particular outcome occurred, even if the underlying model remains complex. Therefore, explainability targets decision artifacts, not model internals.

Moreover, explainability supports error detection and governance. When teams can trace how inputs influence outcomes, they can identify flawed assumptions and adjust logic without dismantling systems. As a result, explainability stabilizes trust while allowing iterative improvement.

- input factors

- decision rules

- outcome reasoning

Together, these elements define the minimum scope required to explain AI-supported decisions in operational settings.

In simple terms, explainability clarifies what influenced a decision and how the system reached its conclusion.
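The three elements can be captured as a decision artifact returned alongside the outcome. The following sketch assumes a simple rule-based decision; the rule identifiers, factor names, and thresholds in `RULES` are hypothetical.

```python
RULES = [
    # (rule id, input factor, predicate, description); all values are hypothetical
    ("R1", "income", lambda v: v >= 30000, "income at least 30000"),
    ("R2", "missed_payments", lambda v: v <= 1, "at most one missed payment"),
]


def decide_with_explanation(inputs: dict) -> dict:
    """Evaluate every rule and return the outcome together with its trace."""
    checks = []
    for rule_id, factor, predicate, description in RULES:
        checks.append({
            "rule": rule_id,                      # decision rule
            "factor": factor,                     # input factor
            "value": inputs[factor],
            "passed": predicate(inputs[factor]),
            "description": description,
        })
    failed = [c["rule"] for c in checks if not c["passed"]]
    outcome = "approve" if not failed else "decline"
    # Outcome reasoning: a short statement derived from the rule results.
    reasoning = "all rules passed" if not failed else "failed: " + ", ".join(failed)
    return {"outcome": outcome, "checks": checks, "reasoning": reasoning}


result = decide_with_explanation({"income": 42000, "missed_payments": 3})
```

A reviewer reading `result` can see which input failed which rule without inspecting any model internals.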

Accountability Boundaries

Accountability boundaries define who holds responsibility at each stage of an AI-supported decision. Organizations retain accountability for decision design, data selection, and deployment, even when AI systems execute evaluations autonomously. Therefore, responsibility does not transfer to the system itself.

Regulatory frameworks reinforce this separation by requiring clear ownership of decisions that affect individuals or organizations. When accountability remains explicit, organizations can investigate failures, remediate harm, and demonstrate compliance without ambiguity.

Put simply, transparency shows how a decision happened, while accountability defines who answers for it.

Strategic and Organizational Implications

When organizations rely on AI to inform long-term direction, AI-assisted strategic decisions reshape how leadership evaluates options under uncertainty. These decisions operate at a different level than operational optimization because they influence capital allocation, structural priorities, and institutional risk, a distinction analyzed in longitudinal research by the McKinsey Global Institute. This section explains how AI-supported strategy changes decision scope and what organizational conditions enable effective use.

Definition: AI-assisted strategic decisions are high-level organizational choices informed by AI-driven evaluation of data, scenarios, and trade-offs, while final authority remains with human leadership.

Claim: AI-assisted strategic decisions improve strategic coherence by grounding long-term choices in structured evaluation rather than isolated judgment.

Rationale: Strategic decisions involve multiple competing objectives that exceed human capacity for consistent comparison across large datasets.

Mechanism: AI systems aggregate signals, model scenarios, and surface trade-offs that leaders evaluate against organizational goals and constraints.

Counterargument: Overreliance on AI analysis may narrow strategic vision or undervalue qualitative factors.

Conclusion: Therefore, AI strengthens strategy when it informs judgment without replacing executive responsibility.

Strategy-Level Decision Domains

Strategic decisions differ from operational choices because they define direction rather than execution. In AI-supported environments, these decisions rely on structured comparisons across time horizons, risk profiles, and resource constraints. As a result, AI contributes most value where complexity exceeds intuitive evaluation.

Accordingly, organizations apply AI-assisted analysis to domains where misalignment carries long-term consequences. These domains require explicit logic and evidence to justify choices to stakeholders and regulators alike.

- investment prioritization

- portfolio balancing

- long-term risk allocation

Together, these domains illustrate where AI-assisted strategic decisions provide clarity without dictating outcomes.

In simple terms, AI helps leaders compare strategic options, but leaders decide which path to follow.

Organizational Readiness Signals

Organizational readiness determines whether AI insights translate into effective strategic action. Even accurate analysis fails when governance structures, decision ownership, or data maturity remain unclear. Therefore, readiness functions as a prerequisite rather than a byproduct of AI adoption.

Clear decision accountability, aligned incentives, and established review processes signal that an organization can absorb AI-generated insights responsibly. When these signals exist, strategy evolves through informed deliberation instead of reactive adjustment.

Put simply, organizations must prepare decision structures before expecting AI to improve strategy.

A multinational manufacturing group deployed AI models to guide capital investment across regions. Early pilots produced strong analytical insights, but leadership initially struggled to act due to unclear ownership of strategic decisions. After redefining decision accountability and review cycles, the organization used AI-supported analysis to rebalance its portfolio and reduce long-term exposure to volatile markets.

Risk, Governance, and Decision Lifecycle

As AI-supported decisions persist over time, the AI decision lifecycle defines how organizations control risk from design through retirement. In enterprise and public-sector contexts, lifecycle governance determines whether decisions remain aligned with objectives as data, incentives, and social conditions evolve, a principle reflected in AI governance frameworks developed by UNESCO. This section explains why lifecycle management anchors decision governance and how unmanaged transitions create systemic exposure.

Definition: The AI decision lifecycle describes the end-to-end progression of an AI-supported decision from initial design and deployment through monitoring, revision, and eventual retirement.

Claim: Effective governance depends on managing AI decisions across their full lifecycle rather than at isolated checkpoints.

Rationale: Decisions that perform correctly at deployment can degrade as data distributions, assumptions, or operating contexts change.

Mechanism: Lifecycle governance assigns ownership, controls, and review points to each stage so organizations can detect drift and intervene early.

Counterargument: Short-lived or low-impact decisions may not justify comprehensive lifecycle oversight.

Conclusion: However, for material decisions, lifecycle management prevents accumulated risk and preserves long-term decision integrity.

Lifecycle Stages of AI Decisions

Lifecycle stages structure how organizations create, operate, and retire AI-supported decisions. Each stage introduces distinct risks, which requires governance to adapt as decisions move from conception to sustained use. As a result, lifecycle clarity enables consistent oversight without constraining execution.

Explicit stages also separate responsibilities across teams. Design defines intent and logic, deployment operationalizes decisions, monitoring evaluates outcomes, and revision or retirement addresses misalignment. This separation strengthens accountability and reduces unmanaged drift.

- design

- deployment

- monitoring

- revision or retirement

Together, these stages ensure that AI-supported decisions remain aligned with organizational objectives throughout their operational lifespan.

In simple terms, decisions require ongoing care, not one-time approval.
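The stages can be modeled as a small state machine in which every transition needs a named owner. This is a sketch under assumptions: the loop from revision back to deployment, the split of "revision or retirement" into two states, and the team names are illustrative choices rather than a prescribed governance model.

```python
from enum import Enum


class Stage(Enum):
    DESIGN = "design"
    DEPLOYMENT = "deployment"
    MONITORING = "monitoring"
    REVISION = "revision"
    RETIREMENT = "retirement"


# Allowed transitions; the revision loop back to deployment is an assumption.
TRANSITIONS = {
    Stage.DESIGN: {Stage.DEPLOYMENT},
    Stage.DEPLOYMENT: {Stage.MONITORING},
    Stage.MONITORING: {Stage.REVISION, Stage.RETIREMENT},
    Stage.REVISION: {Stage.DEPLOYMENT},
    Stage.RETIREMENT: set(),
}


def advance(current: Stage, target: Stage, owner: str) -> Stage:
    """Move to the next stage only if the transition is allowed and owned."""
    if not owner:
        raise ValueError("every transition needs a named owner")
    if target not in TRANSITIONS[current]:
        raise ValueError(f"cannot move from {current.value} to {target.value}")
    return target


stage = advance(Stage.DESIGN, Stage.DEPLOYMENT, owner="credit-policy-team")
stage = advance(stage, Stage.MONITORING, owner="model-risk-team")
```

Encoding the transitions explicitly makes skipped stages and ownerless handoffs fail loudly instead of passing unnoticed.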

Governance Failure Points

Governance failures often emerge at transitions between lifecycle stages rather than within a single stage. Organizations may invest heavily in design and deployment, yet weaken oversight once decisions operate at scale. Consequently, risks accumulate without visibility.

Typical failure points include unclear ownership after deployment, delayed reaction to performance drift, and reluctance to retire outdated decision logic. When governance does not extend across the full lifecycle, decisions continue operating beyond valid conditions, increasing systemic exposure.

Put simply, governance fails when decisions outlast the context they were built for.

Measuring Decision Outcomes in AI Systems

Organizations rely on AI decision measurement to understand whether AI-supported choices deliver intended results under real operating conditions. In AI-mediated environments, outcome measurement connects decision logic to observable impact, a focus emphasized in applied evaluation research from the Harvard Data Science Initiative. This section explains how outcome measurement works, what can be attributed to decisions, and why poorly chosen metrics distort understanding.

Definition: AI decision measurement refers to the systematic assessment of outcomes that result from decisions produced or supported by AI systems, with the goal of attributing effects to decision logic rather than model activity alone.

Claim: Measuring decision outcomes is necessary to determine whether AI-supported decisions create real-world value.

Rationale: Without outcome measurement, organizations cannot distinguish effective decision logic from coincidental results.

Mechanism: Measurement frameworks link decisions to downstream effects using defined indicators, time windows, and attribution rules.

Counterargument: In complex environments, isolating decision impact may appear impractical due to overlapping influences.

Conclusion: Nevertheless, structured measurement remains essential to maintain accountability and improve decision logic over time.

What Can Be Measured

Outcome measurement focuses on the effects of decisions rather than on internal model performance. Organizations measure whether a decision changed behavior, resource allocation, or risk exposure in a way that aligns with stated objectives. As a result, measurement centers on consequences that follow decision execution.

Attribution logic plays a central role in this process. Teams define which outcomes plausibly result from a specific decision and over what timeframe those effects should appear. By narrowing attribution scope, organizations avoid conflating decision impact with unrelated environmental changes.

In simple terms, measurement tracks what happened because a decision occurred, not how the model produced it.
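An attribution window is one simple way to narrow attribution scope. The sketch below counts only outcomes observed between the decision date and the end of a fixed window; the 30-day window and the sample values are hypothetical, and real attribution usually also needs a comparison baseline.

```python
from datetime import date, timedelta


def attributed_outcomes(decision_date: date, outcomes: list[tuple[date, float]],
                        window_days: int) -> float:
    """Sum outcome values observed within the attribution window after a decision."""
    window_end = decision_date + timedelta(days=window_days)
    return sum(value for observed, value in outcomes
               if decision_date <= observed <= window_end)


decision_date = date(2025, 3, 1)
outcomes = [
    (date(2025, 2, 20), 50.0),   # before the decision: not attributable
    (date(2025, 3, 5), 120.0),   # inside the window
    (date(2025, 3, 28), 80.0),   # inside the window
    (date(2025, 6, 1), 200.0),   # after the window: excluded
]
total = attributed_outcomes(decision_date, outcomes, window_days=30)  # 200.0
```

Changing `window_days` changes what counts as a consequence of the decision, which is why the window should be fixed before results are reviewed.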

When Metrics Mislead

Metrics mislead when they capture activity without reflecting decision effectiveness. For example, high throughput or model accuracy may improve while decision outcomes stagnate or worsen. Consequently, organizations may overestimate decision quality based on proxy indicators.

Misalignment also arises when metrics incentivize local optimization rather than systemic improvement. When teams optimize for easily measurable signals, they may neglect long-term effects or unintended consequences. Therefore, organizations must align metrics with decision intent rather than operational convenience.

Put simply, metrics mislead when they measure what is easy instead of what matters.

Interpretive Framework for AI Decision Outcomes

- Decision intent formalization. AI decision systems rely on clearly articulated intent boundaries that define objectives, constraints, and acceptable outcome ranges for consistent interpretation.

- Decision logic transparency. Explicit representation of data inputs, evaluation rules, and thresholds enables interpretive traceability across automated decision processes.

- Outcome relevance metrics. Meaningful assessment focuses on indicators that reflect real-world decision impact rather than isolated model performance signals.

- Temporal decision stability. Continuous observation of decision outputs reveals drift introduced by evolving data distributions, contextual change, or shifting assumptions.

- Accountability attribution. Clear ownership structures support governance by linking decision behavior to responsible roles across the decision lifecycle.

This interpretive framework explains how AI-supported decisions are evaluated, governed, and maintained over time to preserve accountability, relevance, and contextual alignment.
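As an illustration of temporal decision stability, the sketch below compares the share of positive decisions (for example, approvals) in a baseline window with the share in a recent window. It is a deliberately simple check under stated assumptions: decisions are binary, and the function names and the 0.10 tolerance are hypothetical choices for the example, not a recommended threshold.

```python
def positive_rate(outputs):
    """Share of positive decisions in a window of boolean decision outputs."""
    if not outputs:
        raise ValueError("window must contain at least one decision")
    return sum(outputs) / len(outputs)


def decision_drift(baseline, recent, tolerance=0.10):
    """Return (shift, drifted): the absolute rate change and whether it exceeds tolerance."""
    shift = abs(positive_rate(recent) - positive_rate(baseline))
    return shift, shift > tolerance


# Illustrative windows: 7 of 10 approvals at baseline, 3 of 10 recently.
baseline_window = [True, True, False, True, False, True, True, False, True, True]
recent_window = [False, False, True, False, True, False, False, False, True, False]

shift, drifted = decision_drift(baseline_window, recent_window)
print(drifted)  # True
```

A shift in the output rate does not identify its cause. It only indicates that the data distribution, the context, or the underlying assumptions should be reviewed.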

Checklist:

- Is the decision intent explicitly defined before evaluation?

- Are data inputs and assumptions documented and reviewable?

- Is decision logic separated from model implementation?

- Are outcome metrics aligned with decision objectives?

- Does validation occur continuously rather than only at deployment?

- Is ownership defined across the full decision lifecycle?
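The checklist can also be kept as a machine-readable record so that open items are easy to spot during review. The following is a rough sketch under stated assumptions: the `DecisionRecord` fields are hypothetical, and the checks only test whether each item has been filled in. Whether metrics are actually aligned with objectives still requires human judgment.

```python
from dataclasses import dataclass, field


@dataclass
class DecisionRecord:
    intent: str = ""                                        # stated decision intent
    documented_inputs: list = field(default_factory=list)   # data inputs and assumptions
    logic_version: str = ""        # decision rules, versioned apart from the model
    outcome_metrics: list = field(default_factory=list)     # metrics tied to objectives
    validation_schedule: str = ""  # e.g. "weekly"; empty means deployment-only checks
    owner: str = ""                # accountable role across the lifecycle


def open_checklist_items(record):
    """Return the checklist items that the record leaves unanswered."""
    checks = {
        "decision intent defined": bool(record.intent),
        "inputs and assumptions documented": bool(record.documented_inputs),
        "decision logic versioned separately": bool(record.logic_version),
        "outcome metrics defined": bool(record.outcome_metrics),
        "continuous validation scheduled": bool(record.validation_schedule),
        "ownership defined": bool(record.owner),
    }
    return [name for name, passed in checks.items() if not passed]


# Hypothetical record with only intent and owner filled in.
record = DecisionRecord(intent="Approve refunds under policy limits", owner="risk-team")
open_items = open_checklist_items(record)
print(len(open_items))  # 4
```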

FAQ: Data-Driven AI Decisions

What are data-driven AI decisions?

Data-driven AI decisions are decisions produced or supported by AI systems using structured data, explicit logic, and measurable evaluation criteria rather than intuition.

How do data-driven decisions differ from automated actions?

Automated actions execute predefined tasks, while data-driven AI decisions evaluate alternatives, apply constraints, and select outcomes based on structured logic.

Why is decision logic more important than model accuracy?

Model accuracy measures prediction quality, but decision logic determines how outcomes are selected, justified, and governed in real operational contexts.

What role does data quality play in AI decisions?

Data quality directly shapes AI decisions because systems evaluate available signals without contextual correction, which makes the accuracy and consistency of input data critical.

How can organizations evaluate AI-supported decisions?

Organizations evaluate AI-supported decisions by measuring decision accuracy, consistency, and outcome stability rather than relying solely on model performance metrics.

Why is transparency essential for AI decision-making?

Transparency allows organizations to trace inputs, rules, and reasoning, which enables accountability, error correction, and regulatory compliance.

Who is accountable for AI-supported decisions?

Organizations remain accountable for decision design, data selection, and governance, even when AI systems execute evaluations autonomously.

How does the AI decision lifecycle reduce risk?

The AI decision lifecycle reduces risk by ensuring that decisions are monitored, reviewed, and revised as data and conditions change over time.

What can be measured in AI decision outcomes?

Organizations can measure changes in behavior, resource allocation, and risk exposure that can plausibly be attributed to AI-supported decisions within a defined timeframe.

When do metrics fail to reflect decision quality?

Metrics fail when they capture activity or volume without reflecting whether decisions achieve their intended real-world impact.

Glossary: Key Terms in Data-Driven AI Decisions

This glossary defines the core terminology used throughout the article to ensure consistent interpretation of AI-supported decision logic by both humans and machine systems.

Data-Driven AI Decisions

Decisions produced or supported by AI systems using structured data, explicit evaluation logic, and measurable outcome criteria rather than intuition.

Decision Intelligence

The capability of AI systems to evaluate alternatives, apply constraints, and select outcomes based on structured decision logic.

AI Decision Modeling

The formal representation of rules, thresholds, and scoring functions that AI systems use to evaluate options and generate decisions.

Data Quality

The degree to which data remains accurate, complete, consistent, and timely enough to support reliable AI decision outcomes.

Decision Support System

An AI-enabled system that assists human decision-makers by structuring evaluations, simulating scenarios, and ranking options without executing final decisions.

Decision Evaluation

The systematic assessment of AI-supported decisions based on accuracy, consistency, and outcome stability over time.

Decision Transparency

The ability to trace inputs, logic, and reasoning paths that lead an AI system to a specific decision outcome.

AI Decision Lifecycle

The full progression of an AI-supported decision from design and deployment through monitoring, revision, and retirement.

Outcome Attribution

The process of linking observed changes in behavior, resources, or risk to a specific AI-supported decision using defined indicators, time windows, and attribution rules.

Decision Governance

The framework of ownership, controls, and review processes that ensures AI-supported decisions remain accountable and aligned with organizational intent.