Last Updated on December 20, 2025 by PostUpgrade

Schema Markup Essentials for Generative SEO

Schema markup seo has become a foundational requirement for publishing content that machines can reliably interpret and reuse across modern discovery systems. This article explains how structured data transforms web pages into machine-readable knowledge units that support consistent interpretation beyond traditional ranking-based search.

The scope covers conceptual foundations, implementation logic, governance practices, and long-term sustainability of schema markup in large-scale content systems. The purpose is to demonstrate why structured data is not an enhancement layer but a prerequisite for generative visibility, semantic stability, and long-term AI-driven accessibility.

Schema Markup as a Machine-Readable Layer

Schema markup basics define how modern web content becomes intelligible to automated systems operating beyond traditional keyword parsing, as formalized by standards maintained by the World Wide Web Consortium (W3C). In contemporary web infrastructure, content is consumed not only by users but also by multiple layers of machines that require explicit semantic instructions to process information reliably. This section explains schema markup as a semantic interface between content and machines, while defining its conceptual and technical boundaries within large-scale content systems.

Definition: Schema markup is a structured data vocabulary that enables machines to interpret content entities, attributes, and relationships consistently across systems.

Claim: Schema markup forms a dedicated machine-readable layer separate from human-facing content.

Rationale: Generative systems rely on explicit structural signals to resolve meaning deterministically and avoid probabilistic inference errors.

Mechanism: Schema vocabularies define entities and properties using standardized predicates that machines can parse without contextual guesswork.

Counterargument: Poorly implemented markup may be ignored or misinterpreted by parsers due to ambiguity or structural conflicts.

Conclusion: Correct schema usage increases semantic stability and enables reliable content reuse across machine-driven environments.

Definition: AI understanding is the ability of machine systems to interpret structured data, entity definitions, and semantic boundaries in a way that enables accurate extraction, reliable reasoning, and consistent reuse of content across AI-driven environments.

Structured Data Markup vs Visual HTML

Structured data markup represents semantic intent, whereas visual HTML primarily represents presentation and layout for human readers. While HTML elements such as headings and paragraphs convey hierarchical cues, they do not explicitly define what an entity is, how it relates to other entities, or which attributes are authoritative. As a result, machines must infer meaning indirectly when only visual markup is present.

In contrast, structured data markup provides explicit declarations that identify entities, assign properties, and define relationships in a standardized format. This separation allows machines to extract meaning without relying on visual patterns, language heuristics, or positional assumptions. Consequently, structured data functions as a parallel semantic layer that complements, rather than replaces, human-readable HTML.

Put simply, HTML shows how content looks, while structured data markup explains what the content actually is. Without this semantic layer, machines can see text but cannot reliably understand its role, importance, or relationships within a broader knowledge structure.

Schema.org Governance and Standardization

Schema.org operates as a collaborative vocabulary initiative governed by major web stakeholders and aligned with semantic web standards. Its governance model ensures that schema definitions remain interoperable across platforms while evolving in response to new content types and machine consumption patterns. This coordination reduces fragmentation and enables consistent interpretation across different systems.

Vocabulary evolution is managed through controlled extensions, deprecations, and community-reviewed proposals. This process balances innovation with backward compatibility, ensuring that existing implementations remain functional while new entity types and properties are introduced in a predictable manner. As a result, schema markup remains stable enough for long-term adoption while adapting to emerging semantic needs.

In practical terms, Schema.org provides a shared language that both publishers and machines can rely on. This shared vocabulary prevents semantic drift and ensures that content meaning remains consistent as it moves across platforms, tools, and automated interpretation layers.

How Search Systems Interpret Schema Markup

Structured data seo plays a central role in how modern interpretation pipelines convert web content into machine-consumable knowledge, as described in the technical guidance published by Google Search Central. Search and generative systems no longer rely solely on surface-level text analysis but instead process explicit semantic signals embedded within structured metadata. This section clarifies the logic behind these processing pipelines and defines how schema markup influences interpretation outcomes across both ranking-based and generative environments.

Principle: Content achieves stable visibility in AI-driven systems when schema markup, entity definitions, and structural boundaries remain consistent enough for models to interpret meaning without inference or ambiguity.

Definition: Structured data SEO refers to optimizing machine-understandable metadata to improve content interpretation accuracy.

Claim: Schema markup reduces ambiguity in content interpretation.

Rationale: Machines prioritize explicit metadata over inferred semantics when resolving meaning.

Mechanism: Parsers map schema entities and properties to internal knowledge graphs that support reasoning and retrieval.

Counterargument: Ambiguous or conflicting schemas reduce trust and may lead to partial or discarded extraction.

Conclusion: Deterministic markup improves extraction reliability across diverse interpretation systems.

Entity Resolution and Attribute Binding

Entity resolution is the process through which systems identify whether a referenced object corresponds to a known, distinct entity. Unique identifiers, such as consistent entity names and contextual properties, allow machines to distinguish between similarly named concepts without relying on probabilistic language cues. This step is critical for avoiding entity collisions that degrade interpretation quality.

Attribute binding connects entity identifiers to normalized properties that define their characteristics. By standardizing attributes such as type, location, or affiliation, systems can compare and aggregate information across multiple sources. In this context, schema markup seo provides a controlled mechanism for ensuring that attributes are interpreted consistently rather than inferred from surrounding text.

In simple terms, entity resolution answers what something is, while attribute binding explains its defining details. When both are explicitly defined, machines can interpret content with minimal uncertainty and higher confidence.

Rich Results vs Generative Summaries

Rich results represent enhanced visual elements within traditional search interfaces, while generative summaries synthesize information into narrative outputs. Although both rely on structured data, their interpretation goals differ significantly, leading to different processing behaviors.

| Feature | Rich Results | Generative Outputs |

|---|---|---|

| Primary function | Visual enhancement | Information synthesis |

| Output format | Structured UI elements | Natural language summaries |

| Dependency on schema | High | Very high |

| Tolerance for ambiguity | Moderate | Low |

Schema markup rich results depend on precise eligibility criteria, but generative systems apply stricter semantic validation before reuse. As a result, schema markup that is sufficient for rich results may still fail to meet the reliability thresholds required for generative summaries.

Put plainly, rich results display structured facts, while generative summaries reinterpret them. This difference explains why schema precision becomes increasingly critical as systems move from presentation toward reasoning and synthesis.

JSON-LD as the Preferred Schema Syntax

Json-ld schema markup has become the dominant syntax for implementing structured data in modern content systems, a position formalized through recommendations published by the W3C Structured Data guidelines. As websites scale and content architectures grow more complex, the choice of schema syntax directly affects stability, maintainability, and machine interpretation accuracy. This section explains why JSON-LD is preferred over alternative syntaxes and clarifies the practical implications of this choice for long-term implementation.

Definition: JSON-LD is a lightweight, script-based schema syntax that represents structured data in a format decoupled from the document object model and visual markup.

Claim: JSON-LD provides the highest implementation stability among schema syntaxes.

Rationale: It avoids tight coupling between semantic data and visual rendering layers.

Mechanism: Parsers extract JSON-LD blocks independently from page layout and HTML structure.

Counterargument: Incorrect nesting or malformed graphs can invalidate schema interpretation.

Conclusion: When implemented correctly, JSON-LD minimizes structural risk and maintenance overhead.

JSON-LD vs Microdata vs RDFa

Different schema syntaxes encode the same semantic information but impose different constraints on how content is authored and maintained. JSON-LD externalizes semantic definitions into a dedicated data block, while microdata and RDFa embed semantics directly within HTML elements. This distinction significantly affects scalability and long-term reliability.

Microdata and RDFa require developers to annotate individual HTML elements, which increases coupling between presentation and semantics. As layouts change, semantic annotations must be updated in parallel, raising the risk of inconsistencies. JSON-LD, by contrast, remains isolated from visual structure, allowing content teams to modify layouts without breaking semantic signals.

In practice, schema markup microdata and schema markup rdfa may be viable for small, static pages but introduce operational friction at scale. JSON-LD offers a cleaner separation of concerns, which is why it is favored in enterprise environments.

| Syntax | Maintenance effort | Scalability | Parsing reliability |

|---|---|---|---|

| JSON-LD | Low | High | High |

| Microdata | High | Medium | Medium |

| RDFa | High | Medium | Medium |

Simply stated, JSON-LD keeps meaning separate from layout. This separation makes it easier to maintain, safer to evolve, and more reliable for machines that need consistent semantic input over time.

Core Schema Types for Enterprise Content

Schema markup seo requires deliberate type selection because enterprise-scale content systems must balance semantic precision with long-term stability, as defined in the official documentation maintained by Schema.org. In complex publishing environments, teams face growing schema selection complexity as content formats expand and overlap. This section prioritizes the schema types that deliver the highest semantic value for content-focused systems while defining clear boundaries for their use.

Definition: Schema types define the semantic category of a content entity and determine how machines classify, interpret, and relate that entity within a knowledge graph.

Claim: Limited, consistent schema types outperform exhaustive coverage across enterprise content systems.

Rationale: Generative systems favor stable and repeatedly observed entity classes when building confidence in semantic interpretation.

Mechanism: Repeated use of the same schema types reinforces internal mappings between content patterns and entity categories.

Counterargument: Over-generalization can reduce specificity when content requires fine-grained distinctions.

Conclusion: Strategic schema type selection improves semantic reuse while preserving interpretive accuracy.

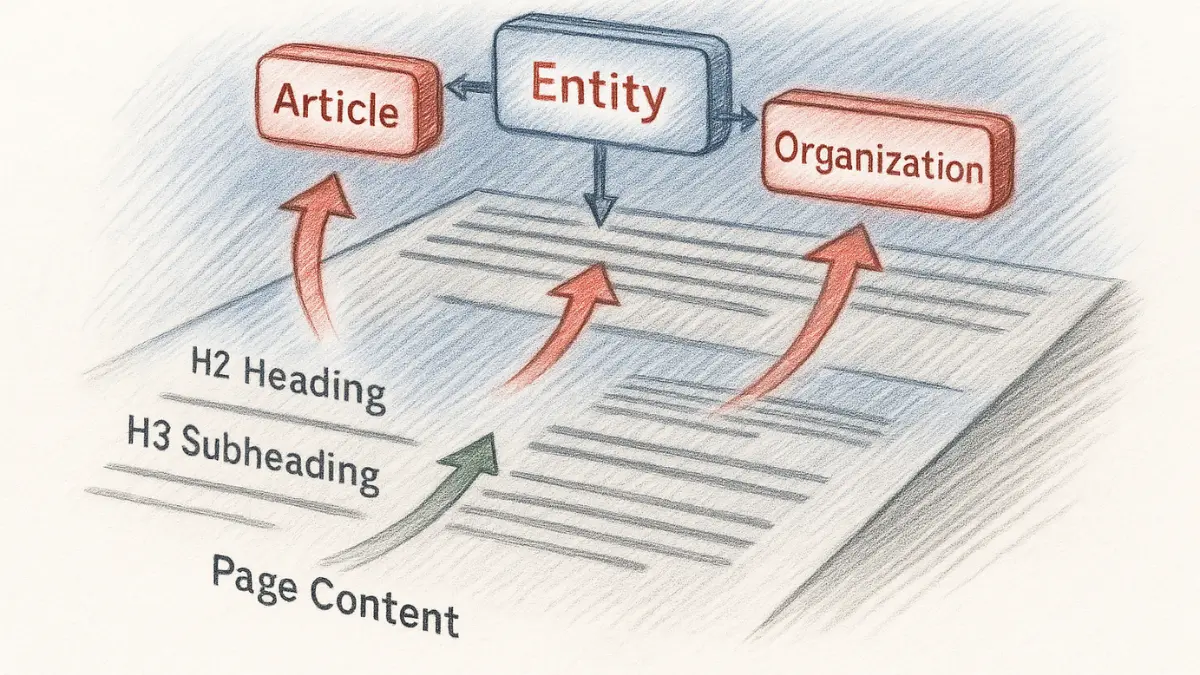

Article, Organization, BreadcrumbList

Article schema establishes a clear boundary between editorial content and other page elements, allowing systems to identify authoritative narrative units. When applied consistently, it signals that the page contains structured informational content rather than transactional or navigational material. This clarity improves how machines segment and summarize long-form text.

Organization schema defines the entity responsible for content creation, ownership, or publication. By associating content with a stable organizational entity, systems can assess provenance and contextual relevance more reliably. BreadcrumbList schema complements this structure by explicitly modeling navigational hierarchy, which helps machines understand page relationships within a site.

In simple terms, these schemas explain what the content is, who stands behind it, and where it sits within the site structure. Together, they create a foundational semantic layer that machines can interpret without relying on layout cues.

Product, Review, FAQ

Product schema represents commercial entities with clearly defined attributes such as identifiers, offers, and availability. This structure enables machines to distinguish transactional content from informational text and to bind product attributes consistently across pages. As a result, interpretation accuracy improves when content references the same product in multiple contexts.

Review and FAQ schemas model evaluative and question-driven content with explicit intent signals. Review schema captures opinions and ratings as structured attributes, while FAQ schema defines discrete question–answer pairs with clear boundaries. These schemas support precise extraction because machines can identify intent and response units without inference.

Put simply, these schemas tell machines what is being sold, how it is evaluated, and which questions the content directly answers. This explicit signaling reduces ambiguity and increases the likelihood of correct reuse in generative systems.

Schema Markup Implementation Workflow

Schema markup seo must move from theory to operational execution to deliver consistent machine interpretation, and production teams rely on guidance aligned with validation tooling documented by Google Rich Results Test. In production environments, schema markup implementation defines how semantic intent travels from content models into machine-readable graphs without manual drift. This section defines a repeatable workflow that fits content architecture constraints and supports long-term scalability.

Definition: Schema implementation is the controlled process of mapping content structures and attributes to machine-readable graphs that parsers can process deterministically.

Claim: Schema should be integrated at the content architecture level rather than applied after publication.

Rationale: Post-hoc markup introduces inconsistency because authors and developers interpret content boundaries differently over time.

Mechanism: Templates and data models enforce predictable schema injection at render time using predefined fields and rules.

Counterargument: Legacy systems resist refactoring due to technical debt and fragmented ownership.

Conclusion: Architectural integration yields stability by aligning content creation with semantic output from the start.

Example: A page that uses schema markup to define entities, properties, and relationships explicitly allows AI systems to segment facts accurately, increasing the likelihood that its structured statements are reused in generated summaries.

HTML Injection and CMS Integration

Schema markup html integration works best when schema data originates from structured fields rather than manual code insertion. In modern content management systems, developers map schema properties directly to content attributes such as titles, authors, dates, and categories. This approach ensures that semantic data updates automatically when content changes.

Schema markup wordpress implementations typically rely on custom fields, block attributes, or server-side rendering hooks that generate JSON-LD consistently. By centralizing schema generation at the CMS layer, teams reduce the risk of missing properties and conflicting definitions across templates. This consistency supports reliable parsing across large content libraries.

In simple terms, CMS-based schema integration removes guesswork. The system produces structured data automatically, which keeps semantic output aligned with published content.

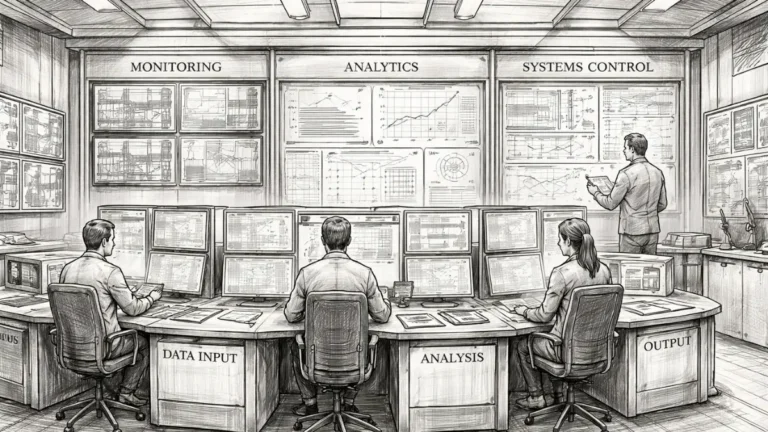

Validation and Testing Loop

Schema markup validation ensures that structured data conforms to expected formats and eligibility rules before systems attempt extraction. Automated testing tools detect syntax errors, missing required properties, and invalid relationships that reduce interpretability. Continuous validation prevents semantic decay as content evolves.

Schema markup testing should occur at multiple stages, including development, deployment, and post-publication monitoring. When errors appear, teams must trace them back to content fields or template logic rather than patching output manually. This workflow treats validation as a feedback loop, not a one-time check.

Put simply, validation confirms that machines can read what was intended. Regular testing catches errors early and keeps structured data reliable as content scales.

Schema Markup Optimization and Governance

Schema markup seo requires continuous optimization and governance to remain reliable as content systems scale, a challenge addressed in policy-oriented frameworks discussed in the OECD Digital Governance reports. In long-term publishing environments, schema markup optimization defines how semantic consistency survives frequent updates, new formats, and organizational changes. This section explains governance models that operate at enterprise scale and prevent gradual semantic erosion.

Definition: Schema governance is the structured process of maintaining semantic consistency, correctness, and continuity of schema implementations across multiple content systems and teams.

Claim: Governance prevents semantic degradation over time in large content ecosystems.

Rationale: High content velocity increases the risk of inconsistent schema usage and conflicting interpretations.

Mechanism: Centralized schema rulesets and approval workflows enforce alignment across templates, teams, and platforms.

Counterargument: Excessive control can slow experimentation and delay content delivery.

Conclusion: Balanced governance sustains semantic trust while preserving operational flexibility.

Schema Checklists and Audits

Schema markup checklist frameworks provide a standardized reference for validating required properties, supported types, and structural constraints before content reaches production. These checklists help teams verify that each schema instance adheres to predefined rules without relying on individual judgment. As a result, semantic output remains predictable even when multiple teams contribute content.

Schema markup tools support periodic audits by scanning published pages for inconsistencies, deprecated properties, or missing attributes. Regular audits surface systemic issues rather than isolated errors, allowing teams to correct root causes in templates or data models. This proactive approach reduces long-term maintenance costs and stabilizes machine interpretation.

In simple terms, checklists define what must be present, and audits confirm that it stays present. Together, they create a repeatable control loop that protects semantic quality at scale.

Measuring Impact Without Rankings

Schema markup benefits extend beyond traditional ranking signals, especially in environments where generative systems reuse content without direct traffic attribution. Instead of focusing on position metrics, teams measure impact through extractability, consistency of entity recognition, and reuse frequency across automated outputs. These indicators reflect whether machines can reliably consume and apply structured data.

Governance frameworks encourage teams to track schema coverage, error rates, and schema stability over time. When these indicators remain stable, content maintains semantic integrity even as formats evolve. This approach shifts optimization from short-term performance toward long-term interpretability.

Put simply, governance success appears when machines keep understanding content correctly. Stable interpretation becomes the primary metric when rankings no longer capture the full value of structured data.

Checklist:

- Does the page define entities and schema types with precise terminology?

- Are schema structures generated consistently across templates?

- Does each section maintain clear semantic boundaries?

- Are examples and definitions aligned with schema properties?

- Is semantic drift prevented through governance and validation?

- Does the structure support deterministic AI extraction?

Schema Markup in Generative SEO Systems

Schema markup seo has become a structural dependency for generative retrieval systems that assemble answers without relying on traditional ranking pipelines, a behavior described in research on knowledge-centric AI systems published by the Allen Institute for Artificial Intelligence (AI2). In non-ranking environments, schema markup structured data determines whether content can be safely extracted, validated, and reused by AI systems. This section connects schema markup to AI extraction logic and explains how structured signals influence reuse beyond classic search results.

Definition: Generative SEO leverages structured signals to enable controlled content reuse and factual grounding in AI-generated outputs.

Claim: Schema markup increases content extractability in generative systems.

Rationale: Generative models prioritize explicitly structured facts over inferred statements when assembling responses.

Mechanism: Schema anchors individual statements to defined entities and properties within internal knowledge representations.

Counterargument: Unverified or inconsistently defined structured data may be excluded from reuse pipelines.

Conclusion: Structured data enables safe and repeatable content reuse in AI-driven environments.

Schema Markup Snippets in AI Outputs

Schema markup snippets function as extraction-ready semantic units that AI systems can isolate without reconstructing meaning from surrounding context. When content defines entities, attributes, and relationships explicitly, generative systems can select and recombine these units with higher confidence. This reduces reliance on probabilistic language inference.

In operational terms, snippets derived from schema markup support factual grounding in AI outputs such as summaries, contextual explanations, and synthesized answers. Systems favor snippets that remain consistent across multiple documents because repetition strengthens semantic trust. Consequently, structured snippets appear more often in AI-generated responses than unstructured passages.

Put simply, schema markup snippets give AI systems clear, bounded facts they can reuse directly. This clarity increases accuracy and reduces semantic drift during generation.

An enterprise publisher standardized schema definitions across its editorial platform and enforced consistent entity usage. Within several months, AI-generated summaries began repeating the same structured facts across different prompts. This behavior reflected increased citation frequency driven by stable schema anchoring rather than surface-level text similarity.

Future-Proofing Schema Markup for AI Systems

Schema markup seo requires a long-term design perspective because AI architectures evolve faster than content standards, a challenge examined in research on machine knowledge representation by MIT CSAIL. As interpretation systems shift toward deeper reasoning and reuse, schema markup guide practices must prioritize durability over short-term optimization tactics. This section defines a long-term strategy for maintaining semantic continuity as AI systems, parsers, and knowledge graphs continue to change.

Definition: Future-proof schema design prioritizes semantic stability and backward compatibility over short-term implementation gains.

Claim: Stable schema vocabularies outlive algorithmic changes in AI systems.

Rationale: AI architectures and extraction models iterate more rapidly than standardized vocabularies.

Mechanism: Backward-compatible schemas preserve meaning by maintaining consistent entity definitions over time.

Counterargument: Emerging domains may require new entity types that existing schemas do not cover.

Conclusion: Conservative schema evolution ensures continuity while allowing controlled expansion.

Version Control and Deprecation Strategy

Schema version control establishes a formal record of changes applied to entity definitions, properties, and relationships. By tracking schema modifications over time, teams can evaluate how updates affect interpretation without disrupting existing content. This practice supports controlled evolution rather than reactive changes.

Deprecation strategies define how outdated properties or types are phased out without breaking downstream consumers. Instead of removing deprecated elements abruptly, systems maintain backward references and transition periods. In this context, schema markup guide practices emphasize predictability and documented change paths.

Put simply, version control and deprecation prevent semantic surprises. Machines continue to understand content even as schema definitions evolve gradually.

Schema as Knowledge Infrastructure

Schema functions not as a technical add-on but as a foundational layer of knowledge infrastructure. When schemas define entities consistently across content systems, they form a stable reference framework that AI systems can reuse across tasks and contexts. This consistency supports long-term reasoning and aggregation.

Treating schema as infrastructure shifts implementation decisions from individual pages to system-wide design. Schema markup seo guide approaches encourage aligning content models, editorial processes, and technical pipelines around shared semantic definitions. This alignment reduces fragmentation and strengthens interpretive trust.

In simple terms, schema becomes the backbone of machine understanding. When treated as infrastructure, it supports durable AI interpretation rather than isolated optimization efforts.

Structural Semantics of Machine-Readable Markup

- Semantic layer separation. A clear distinction between narrative content and formal semantic descriptors establishes parallel interpretive layers without mutual interference.

- Type coherence signaling. Stable semantic classifications act as long-lived reference points, enabling consistent interpretation across indexing and generative reuse cycles.

- Presentation-agnostic meaning. When semantic meaning is architecturally independent from layout, machine systems can resolve intent without reliance on visual structure.

- Entity definition consistency. Non-contradictory entity references reinforce internal semantic alignment, supporting reliable cross-document resolution.

- Interpretive durability. Structurally autonomous semantic layers remain intelligible under partial extraction, transformation, or long-context synthesis.

In this configuration, markup-related structures function as interpretive signals rather than procedural elements, preserving semantic continuity without altering the document’s primary narrative flow.

FAQ: Schema Markup SEO

What is schema markup SEO?

Schema markup SEO focuses on adding structured data that helps machines accurately interpret, classify, and reuse content across search and AI-driven systems.

How does schema markup differ from traditional SEO?

Traditional SEO emphasizes rankings and keywords, while schema markup SEO improves machine understanding by defining entities, attributes, and relationships explicitly.

Why is schema markup important for AI systems?

AI systems rely on structured signals to extract facts safely, so schema markup improves semantic clarity and reduces ambiguity during interpretation.

How do AI systems use schema markup?

AI systems map schema entities and properties into internal knowledge graphs that support extraction, reasoning, and content reuse.

What role does page structure play alongside schema markup?

Clear headings and semantic segmentation complement schema markup by defining content boundaries that machines can process consistently.

Why does structured data matter more than backlinks in AI outputs?

AI-generated answers prioritize verified facts and clear entity definitions, making structured data more influential than link-based authority signals.

How should schema markup be implemented?

Schema markup should be generated from content models using JSON-LD and validated regularly to maintain semantic consistency.

What are best practices for schema markup SEO?

Best practices include stable schema types, consistent terminology, validation workflows, and governance to prevent semantic drift.

How does schema markup affect generative visibility?

Schema markup increases the likelihood that content is extracted and reused accurately in AI-generated summaries and answers.

What skills are required to manage schema markup at scale?

Teams need content modeling, semantic reasoning, and technical validation skills to maintain reliable structured data.

Glossary: Key Terms in Schema Markup SEO

This glossary defines the core terminology used throughout this article to ensure consistent interpretation by both AI systems and human readers.

Schema Markup

A standardized structured data vocabulary that defines entities, attributes, and relationships to make content machine-readable.

Structured Data

Explicitly formatted metadata that enables machines to interpret content meaning without relying on language inference.

Entity

A distinct real-world or conceptual object defined in schema markup with a stable identity and specific properties.

Schema Type

A classification that specifies the semantic category of an entity, such as Article, Organization, Product, or FAQ.

JSON-LD

A script-based syntax for expressing schema markup that remains decoupled from HTML layout and visual structure.

Machine Interpretation

The process by which AI systems parse structured data to extract meaning, relationships, and factual assertions.

Semantic Consistency

The practice of using stable schema types and property definitions to prevent ambiguity and semantic drift.

Schema Validation

The process of verifying structured data syntax, required properties, and entity relationships for correctness.

Generative Visibility

The likelihood that structured content is extracted and reused accurately in AI-generated summaries and answers.

Schema Governance

A set of rules and processes that maintain schema consistency, stability, and correctness across content systems.