Last Updated on December 20, 2025 by PostUpgrade

Designing Machine Comprehension Content for AI-First Systems

Machine comprehension content defines how long-form text is engineered for accurate interpretation by modern AI systems. This article explains the structural rules, reasoning patterns, and clarity requirements that enable models to extract meaning with consistency and precision. It provides a framework for designing content aligned with AI-first principles, ensuring reliable visibility across generative discovery environments.

Foundations of Machine Comprehension Content in Modern AI Systems

Machine comprehension content establishes the structural logic that enables AI systems to interpret text consistently across complex retrieval environments. This section defines the principles required to build information that is computationally clear, logically segmented, and aligned with model-level reading patterns. The scope includes interpretability standards supported by research from leading institutions such as MIT CSAIL and other foundational AI labs.

Definition: Machine comprehension content is structured expression engineered to deliver clear boundaries, predictable logic, and stable terminology so AI systems can interpret, segment, and reuse meaning reliably across discovery surfaces.

Claim: Machine comprehension content requires deterministic structure and clear meaning boundaries.

Rationale: AI systems interpret text through statistical and symbolic cues that depend on predictable formatting and explicit logic.

Mechanism: Models extract meaning using hierarchical signals, unambiguous phrasing, and consistent terminology aligned with internal representation patterns.

Counterargument: Machine comprehension degrades when content uses figurative language or inconsistent structure.

Conclusion: Machine comprehension improves when writers maintain logical consistency and explicitly defined semantic units.

Structural Standards for Clear Interpretability

Machine comprehension depends on structural consistency that supports predictable interpretation across generative engines. This section explains how structured meaning delivery enables models to identify logical boundaries within long-form content. The scope focuses on layout predictability, paragraph discipline, and stable sentence patterns.

Single-idea paragraphs ensure that each unit of text contains one interpretable concept.

Predictable sentence logic maintains linearity and reduces parsing ambiguity for model inference.

Avoiding multi-layered clauses prevents syntactic noise that disrupts machine-level segmentation.

These requirements enable models to maintain structural clarity and reduce the risk of interpretive drift.
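The single-idea rule is hard to test directly, but paragraph length is a rough proxy for it. The following is a minimal sketch, assuming the 2–4 sentence bound from this article's glossary entry for an atomic paragraph and a naive sentence count based on terminal punctuation. The function name `paragraphs_outside_bounds` and its parameters are hypothetical, introduced only for illustration.

```python
import re

def paragraphs_outside_bounds(text: str, low: int = 2, high: int = 4) -> list[tuple[int, int]]:
    """Return (paragraph_index, sentence_count) for paragraphs outside [low, high]."""
    results = []
    # Paragraphs are assumed to be separated by one or more blank lines.
    paragraphs = [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]
    for index, paragraph in enumerate(paragraphs):
        # Count ., ! or ? followed by whitespace or end of paragraph.
        # Abbreviations such as "e.g." will be over-counted by this heuristic.
        count = len(re.findall(r"[.!?](?=\s|$)", paragraph))
        if not low <= count <= high:
            results.append((index, count))
    return results
```

A sentence count cannot confirm that a paragraph holds exactly one idea. It only flags paragraphs that are worth a manual look.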

Research Foundations Supporting Model Interpretability

Model interpretability research provides the empirical basis for computationally clear writing. This section outlines how major AI laboratories define the mechanisms that govern meaning extraction, logical coherence, and representational stability. The scope connects research outputs to content design standards.

| Research Lab | Contribution | Relevance to Content Design |
|---|---|---|
| Stanford NLP | Meaning extraction methods | Defines boundaries in structured text |
| MIT CSAIL | Model interpretability | Clarifies how models parse logic |
| BAIR | Context modeling | Supports semantic clarity patterns |

These research findings demonstrate that content aligned with established interpretability principles improves clarity, reduces ambiguity, and enhances reliability across AI-driven extraction systems.

Principles of Content Design for Model-Oriented Interpretation

Machine comprehension design establishes a systematic approach to constructing text interpretable by AI. This section outlines principles for aligning structure, logic, and clarity to support consistent extraction across AI systems. The scope centers on deterministic formatting and machine-focused expression supported by evidence from research groups such as Stanford NLP.

Definition: Machine comprehension design refers to the structured preparation of text to optimize computational interpretation.

Claim: Machine comprehension design depends on logical ordering and consistent content structure.

Rationale: AI models rely on stable textual cues that indicate sequence and meaning boundaries.

Mechanism: Proper design ensures explicit hierarchy, context labeling, and unambiguous phrasing.

Counterargument: Without standardization, machine comprehension decreases across models and tasks.

Conclusion: A unified design approach strengthens machine understanding across discovery surfaces.

Deterministic Text Structuring

Deterministic structuring ensures that predictable content layout guides model-level interpretation. This section describes the structural rules that produce consistent parsing results across retrieval engines. The scope addresses hierarchy discipline, clarity signals, and boundary control.

- Clear hierarchy markers help models recognize structural depth and segment content reliably.

- Sentence linearity ensures that models process each unit without interference from nested or ambiguous clauses.

- Stable terminology across a section prevents representational drift and reduces uncertainty in model interpretation.

- Consistent paragraph boundaries reinforce discrete meaning units and support extraction accuracy.

Rules for Logic-Driven Content

Logic-driven content rules define how reasoning signals must be structured for accurate machine interpretation. This section outlines the logic cues that support hierarchy interpretation, topic flow, and reliable segmentation. The scope includes clarity markers and consistency thresholds associated with structured reasoning.

Principle: Machine comprehension improves when structural logic, sentence linearity, and terminology remain deterministic enough for models to map relationships without ambiguity or representational drift.

| Logic Pattern | Predictable Form | Unpredictable Form |
|---|---|---|
| Logic Signals | Linear ordering | Non-linear or overlapping cues |
| Terminology | Stable across sections | Drifting or inconsistent terms |
| Reasoning Structure | Clear segmentation | Ambiguous or blended logic |

These distinctions clarify how disciplined logic improves interpretability and reduces ambiguity in generative retrieval environments.

Sentence-Level Engineering for Machine-Comprehensible Writing

Machine-readable text design defines how sentences must be engineered for computational interpretation. This section explains sentence clarity patterns, logic dependencies, and structural predictability needed for accurate extraction across AI systems. The scope includes writing rules that minimize ambiguity and maintain consistent interpretive signals supported by research from institutions such as the Allen Institute for AI.

Definition: Machine-readable text design refers to engineering sentences so that models can reliably extract meaning.

Claim: Sentence-level structure determines how models interpret relationships.

Rationale: AI systems require stable phrasing and linear logic to identify meaning boundaries.

Mechanism: Clean syntax, consistent subjects, and direct predicates reduce interpretive errors.

Counterargument: Complex or figurative phrasing increases computational load and reduces accuracy.

Conclusion: Clear and linear sentences improve overall machine comprehension.

Principles of Linear Sentence Construction

Linear construction establishes the syntactic patterns required to support machine-focused phrasing. This section describes core sentence rules that reduce ambiguity and increase interpretive precision across retrieval engines. The scope addresses syntactic discipline, subject–predicate clarity, and boundary management.

- Sentences should follow a consistent subject–predicate–meaning order to maintain interpretive stability.

- Each sentence should express one factual unit, preventing interference between conceptual signals.

- Reducing nested clauses improves model performance by limiting representational noise.

- Maintaining linear flow ensures that meaning boundaries remain computationally visible.

These rules ensure that linear phrasing supports consistent sentence-level clarity for AI interpretation.
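The sentence rules above can be approximated with a small lint pass. This is a sketch under stated assumptions: comma counts and a short list of subordinating words stand in for "nested clauses", and the thresholds are arbitrary defaults. `SUBORDINATORS`, `split_sentences`, and `flag_nonlinear_sentences` are hypothetical names, not part of any established tool.

```python
import re

# Hypothetical marker list: words that often introduce a subordinate clause.
SUBORDINATORS = {"which", "that", "although", "because", "whereas", "while", "unless"}

def split_sentences(text: str) -> list[str]:
    """Naive splitter: break after ., ! or ? when followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]

def flag_nonlinear_sentences(text: str, max_markers: int = 2, max_words: int = 30) -> list[str]:
    """Return sentences that exceed the assumed clause or length budget."""
    flagged = []
    for sentence in split_sentences(text):
        words = re.findall(r"[A-Za-z']+", sentence.lower())
        # Each comma and each subordinating word counts as one clause marker.
        markers = sentence.count(",") + sum(w in SUBORDINATORS for w in words)
        if markers > max_markers or len(words) > max_words:
            flagged.append(sentence)
    return flagged
```

The heuristic will produce false positives, for example on simple comma-separated lists, so its output is better treated as a review queue than as a verdict.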

Model Interpretation Risks in Poor Sentence Design

Poor sentence structure introduces interpretive risks that weaken unambiguous content formatting across AI-driven retrieval systems. This section outlines common failure patterns that reduce extraction accuracy and demonstrates their impact through an applied evaluation case. The scope highlights representational instability and boundary loss observed in model testing.

Evaluations from the Allen Institute for AI (AI2) show that models misinterpret sentences containing overlapping clauses or drifting subjects, leading to inconsistent meaning extraction across inference passes. NIST assessments of sentence-level clarity demonstrate that multi-layered syntactic forms reduce confidence scores in algorithmic interpretation.

A microcase from these evaluations indicates that a long-form sentence combining multiple ideas causes boundary confusion, reducing accuracy in both entity recognition and logical segmentation. These findings confirm that disciplined sentence engineering improves computational comprehension and lowers interpretive risk across generative systems.

Designing Multi-Level Hierarchies Interpretable by AI

Structured reasoning text helps models distinguish context, depth, and relationships within long-form content. This section explains how hierarchical layers support consistent AI comprehension. The scope includes heading logic, depth signaling, and semantic segmentation informed by research from institutions such as the Berkeley Artificial Intelligence Research Lab.

Definition: Structured reasoning text refers to content organized to reflect explicit logical depth and relationships.

Claim: Multi-level hierarchy is a core requirement for machine comprehension.

Rationale: Models rely on hierarchical cues to interpret sequence and depth.

Mechanism: Explicit headings, depth markers, and layered units guide parsing.

Counterargument: Missing or vague hierarchy impairs structural interpretation.

Conclusion: Consistent levels improve clarity and reliability across AI extractors.

H2→H3→H4 Hierarchy Engineering

Hierarchy engineering establishes the depth structure required for content preparation for models. This section defines how heading layers create visibility for relationships and logical boundaries in long-form text. The scope includes level sequencing, depth marking, and consistent hierarchical signals.

- H2 headings define primary conceptual units organized around major reasoning structures.

- H3 headings introduce subordinate concepts that refine the scope and anchor contextual depth.

- H4 headings provide granular detail that supports precise segmentation and controlled interpretive flow.

- Sequential ordering across levels ensures that models track logical descent without ambiguity.

These principles create a layered structure that improves interpretability and maintains consistent reasoning flow across model-driven extraction.
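Sequential ordering across levels can be checked mechanically once heading levels are known. The sketch below assumes the levels have already been extracted into a list of integers (2 for H2, 3 for H3, and so on). The function name `find_hierarchy_violations` is hypothetical.

```python
def find_hierarchy_violations(levels: list[int], top: int = 2, bottom: int = 4) -> list[tuple[int, str]]:
    """Return (index, message) pairs for headings that break sequential descent."""
    violations = []
    previous = None
    for index, level in enumerate(levels):
        if not top <= level <= bottom:
            violations.append((index, f"H{level} is outside H{top}-H{bottom}"))
        elif previous is None and level != top:
            violations.append((index, f"document starts at H{level}, expected H{top}"))
        elif previous is not None and level > previous + 1:
            # Descending by more than one level skips a layer, e.g. H2 directly to H4.
            violations.append((index, f"H{previous} jumps to H{level}"))
        previous = level
    return violations
```

For example, `find_hierarchy_violations([2, 3, 4, 3, 2, 4])` reports one violation at index 5, where an H2 is followed directly by an H4. Moving back up by any number of levels is allowed, since closing a subsection is not a skipped layer.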

Depth Alignment and Consistency Risks

Depth alignment determines how deterministic content organization supports clarity across hierarchical layers. This section explains the risks introduced by incorrect depth mapping and demonstrates how aligned hierarchy improves interpretability across retrieval systems. The scope includes structural accuracy, consistent layering, and semantic coherence.

| Depth Level | Function | AI Interpretation Role |
|---|---|---|
| H2 | Establishes core conceptual blocks | Defines primary segmentation boundaries |
| H3 | Expands or narrows conceptual focus | Clarifies contextual depth and meaning refinement |
| H4 | Provides granular detail or procedural specificity | Supports fine-grained parsing and explicit relational mapping |

Aligned hierarchy ensures that models can interpret structural depth, detect logical transitions, and maintain consistent reasoning across multi-level content.

Consistent Terminology and Semantic Stability for AI Extraction

Consistent meaning patterns enable models to map terminology to stable internal representations across long-form content. This section outlines terminology control, semantic boundaries, and drift prevention required for reproducible AI interpretation. The scope includes rules for micro-definitions and vocabulary governance supported by standards research from organizations such as the W3C.

Definition: Consistent meaning patterns refer to stable terminology rules ensuring semantic persistence across content.

Claim: Terminology stability determines semantic consistency in AI extraction.

Rationale: Without stable terms, models lose mapping precision.

Mechanism: Local micro-definitions anchor terms to repeatable patterns.

Counterargument: Uncontrolled variation disrupts conceptual alignment.

Conclusion: Standardized vocabulary increases reproducibility of model interpretation.

Terminology Governance Rules

Terminology governance establishes the structure needed to maintain logic-aligned content across large knowledge collections. This section defines the rules that preserve semantic clarity and prevent representational drift. The scope includes definition placement, vocabulary constraints, and terminology sequencing.

- Each specialized term should appear with an immediate micro-definition to secure its meaning boundary.

- Terminology must remain consistent across sections to preserve model-level semantic alignment.

- Controlled vocabulary lists reduce variability and ensure stability across related content units.

- Updating terms must follow a governance process that preserves backward compatibility for AI extraction.

These rules maintain semantic stability and strengthen the reliability of terminology across machine interpretation environments.
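A controlled vocabulary list can be enforced with a simple scan. The following sketch assumes the vocabulary is maintained as a mapping from each preferred term to its discouraged variants. The function name `find_term_variants` and the sample vocabulary are hypothetical.

```python
import re

def find_term_variants(text: str, vocabulary: dict[str, list[str]]) -> dict[str, list[str]]:
    """Map each preferred term to the discouraged variants found in the text."""
    found = {}
    for preferred, variants in vocabulary.items():
        # Whole-word, case-insensitive match for each discouraged variant.
        hits = [v for v in variants
                if re.search(rf"\b{re.escape(v)}\b", text, flags=re.IGNORECASE)]
        if hits:
            found[preferred] = hits
    return found

# Illustrative vocabulary: the variants are invented for this example.
vocabulary = {"semantic container": ["content module", "meaning block"]}
text = "Each semantic container holds one idea. A meaning block may also hold examples."
report = find_term_variants(text, vocabulary)
```

Here `report` maps "semantic container" to the single variant that appears in the text, "meaning block". The check only catches variants that are already listed, so the vocabulary itself still needs the governance process described above.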

Semantic Drift Prevention

Semantic drift prevention ensures that content precision modeling remains stable as text evolves or scales. This section explains structural safeguards that prevent terminology from drifting across versions or contexts. The scope includes interpretive accuracy, definition anchoring, and alignment checks informed by standards from NIST evaluations.

A microcase demonstrates how terminology drift reduces interpretive stability: a NIST assessment of documentation updates showed that reworded definitions caused inconsistent extraction across successive model evaluations. W3C guidance on terminology management indicates that maintaining fixed definition anchors reduces ambiguity and preserves structural meaning across transformations. This pattern confirms that consistent terminology governance prevents drift and supports precise content interpretation across AI-driven retrieval systems.
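Reworded definitions can be caught before publication by comparing definition text across versions. This is a minimal sketch, assuming definitions from each version have already been collected into term-to-definition mappings and that string similarity is an acceptable stand-in for semantic change. The 0.9 threshold and the names `normalize` and `detect_definition_drift` are hypothetical.

```python
from difflib import SequenceMatcher

def normalize(definition: str) -> str:
    """Lowercase and collapse whitespace so cosmetic edits are ignored."""
    return " ".join(definition.lower().split())

def detect_definition_drift(old: dict[str, str], new: dict[str, str],
                            threshold: float = 0.9) -> dict[str, float]:
    """Return terms whose definition similarity fell below the threshold."""
    drifted = {}
    for term, old_text in old.items():
        if term not in new:
            drifted[term] = 0.0  # definition removed entirely
            continue
        ratio = SequenceMatcher(None, normalize(old_text), normalize(new[term])).ratio()
        if ratio < threshold:
            drifted[term] = round(ratio, 2)
    return drifted
```

Character-level similarity misses a small edit that reverses the meaning, such as an inserted "not", so flagged and unflagged definitions alike may still need editorial review.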

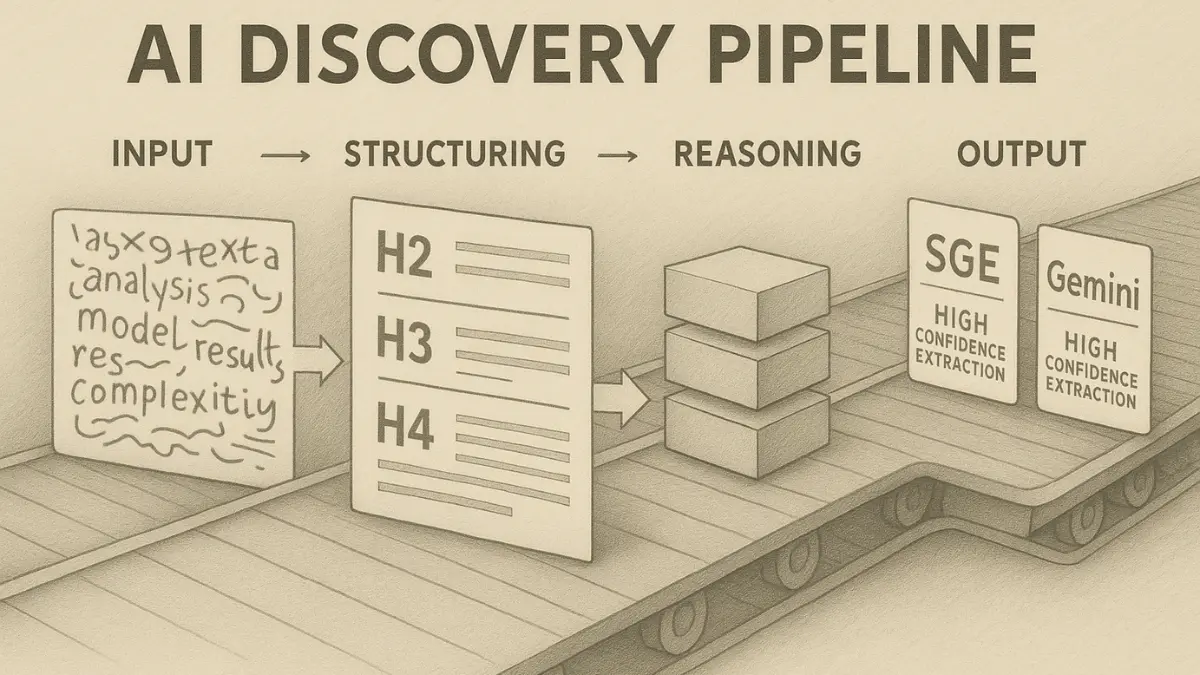

Content Structuring for High-Fidelity AI Discovery Panels

Clarity-safe content structure supports predictable extraction across Google's Search Generative Experience (SGE), ChatGPT, Gemini, and Perplexity. This section outlines layout principles and block-level segmentation that maintain stable interpretive signals for model-driven retrieval. The scope covers precision formatting based on empirical guidance from organizations such as the OECD.

Definition: Clarity-safe content structure refers to structural design that maximizes clarity under varied AI extractors.

Claim: Discovery engines prioritize structured clarity over stylistic variation.

Rationale: AI systems seek stable patterns for summarization and retrieval.

Mechanism: Consistent blocks enable reliable extraction across panels.

Counterargument: Non-standard layout reduces visibility and interpretability.

Conclusion: Layout precision enhances discoverability across engines.

Structures for Predictable Extraction

Predictable extraction requires a model-friendly writing style that aligns content signals with retrieval systems. This section defines extraction rules that ensure stable segmentation across discovery platforms. The scope includes clarity markers, consistent boundaries, and interpretable formatting.

- Headings must follow explicit hierarchical layers to guide segmentation boundaries for AI extraction.

- Each paragraph must contain one idea to maintain predictable meaning units across panels.

- Lists and tables should use clean formatting to support stable interpretation by structured extractors.

- Sentence linearity and consistent syntax help prevent misalignment during summarization.

These rules create predictable structures that improve extraction fidelity across generative interfaces.

Evaluating Discovery-First Content Performance

Evaluation of discovery-first content depends on high-precision content layout designed to support consistent visibility across major retrieval engines. This section compares how SGE, Gemini, Perplexity, and ChatGPT interpret structured information. The scope includes extraction accuracy, layout performance, and retrieval consistency.

| System | Extraction Behavior | Structural Sensitivity |
|---|---|---|
| SGE | Prefers consistent blocks and linear structure | High sensitivity to layout irregularities |
| Gemini | Prioritizes logical hierarchy | Medium sensitivity to formatting variations |
| Perplexity | Extracts segmented meaning units effectively | High sensitivity to multi-idea paragraphs |
| ChatGPT | Interprets dense hierarchical content with stability | Medium sensitivity to heading depth changes |

This comparison demonstrates that precision layout improves interpretability and increases the stability of structured meaning flow across AI discovery panels.

Semantic Containers and Modular Content Blocks

Structured text clarity principles define modular segmentation for AI reasoning. This section describes concept blocks, mechanism blocks, example blocks, and implication blocks used to maintain clean interpretive boundaries. The scope covers modular transformation for model reuse supported by research from organizations such as the European Commission Joint Research Centre.

Definition: Structured text clarity principles refer to rules guiding modular segmentation in machine-targeted writing.

Claim: Modular containers optimize AI reasoning and content retrieval.

Rationale: Models recombine conceptual units based on modular clarity.

Mechanism: Containers separate definitions, mechanisms, examples, and implications.

Counterargument: Unsegmented text produces lower reuse and fragmented reasoning.

Conclusion: Modularization accelerates AI comprehension and structured extraction.

Concept Blocks

Concept blocks provide the foundation for structured narrative for models by defining the core unit of meaning. This section outlines the essential characteristics of concept blocks that support clean reasoning boundaries. The scope includes term anchoring, context framing, and conceptual isolation.

- Each concept block should introduce one definable idea supported by a local micro-definition.

- Concept blocks must maintain strict internal coherence to prevent mixing mechanisms or implications.

- Blocks should appear in a predictable sequence to support consistent reconstruction by models.

- Transitions between blocks should reflect clear semantic boundaries.

These characteristics ensure that concept blocks form stable units of meaning suitable for structured model interpretation.

Example: When concept blocks define ideas clearly and mechanism blocks separate operational logic, AI systems can recombine these modules during extraction, increasing the likelihood that structured segments will appear in high-confidence generative outputs.
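The container idea can be made concrete as a small data model. The sketch below is an illustration of the separation described above, not a description of how any AI system stores or recombines content. The names `BlockType`, `Block`, and `recombine` are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum

class BlockType(Enum):
    CONCEPT = "concept"
    MECHANISM = "mechanism"
    EXAMPLE = "example"
    IMPLICATION = "implication"

@dataclass(frozen=True)
class Block:
    kind: BlockType
    text: str

def recombine(blocks: list[Block], wanted: list[BlockType]) -> str:
    """Select blocks of the wanted kinds, preserving document order."""
    return " ".join(b.text for b in blocks if b.kind in wanted)

# Illustrative content: one block per container type.
blocks = [
    Block(BlockType.CONCEPT, "An atomic paragraph holds one idea."),
    Block(BlockType.MECHANISM, "Each paragraph is limited to a few sentences."),
    Block(BlockType.EXAMPLE, "This paragraph is one such unit."),
    Block(BlockType.IMPLICATION, "Extraction boundaries become easier to detect."),
]
summary = recombine(blocks, [BlockType.CONCEPT, BlockType.IMPLICATION])
```

Because each block carries exactly one type, the selection step stays trivial. Mixed blocks would have to be split by hand before any such reuse.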

Mechanism and Implication Blocks

Mechanism and implication blocks define procedural logic and downstream effects that support model-oriented content design. This section explains how these block types clarify operational structure and predictive implications within AI-readable text. The scope covers mechanistic detail, causal mapping, and implication alignment.

A microcase based on European Commission Joint Research Centre (EC JRC) documentation reviews demonstrates how mechanism blocks separate operational steps from contextual explanations, improving clarity during model parsing. OECD guidance on structured reporting indicates that implication blocks help models predict consequences by isolating downstream effects of defined mechanisms. These findings show that mechanism and implication blocks provide stable structural anchors that enhance compression-safe text design and support adaptive meaning flow across AI systems.

Evaluation of Machine Comprehension Quality in Enterprise Content

Interpretation-safe content must be tested against reproducibility, structural clarity, and extraction reliability. This section explains evaluation methods for machine comprehension quality that determine whether long-form enterprise text remains stable across different discovery engines. The scope includes reproducibility metrics, layout validation, and model-level interpretation tests informed by research standards from institutions such as NIST.

Definition: Interpretation-safe content refers to content designed to maintain consistent machine interpretation under varied retrieval models.

Claim: Quality evaluation identifies whether content remains interpretable across models.

Rationale: Different engines apply different extraction pathways.

Mechanism: Evaluation metrics analyze consistency, clarity, and reasoning stability.

Counterargument: Without evaluation, structural errors remain undetected.

Conclusion: Regular assessment ensures long-term AI-driven accessibility.

Structural Reproducibility Metrics

Structural reproducibility metrics determine how content stability modeling maintains interpretive consistency across discovery engines. This section outlines reproducibility signals that measure stability at the structural, contextual, and semantic levels. The scope includes clarity scoring, segmentation checks, and model-level verification.

Content reproducibility assessments evaluate whether heading architecture maintains consistent segmentation across repeated model passes. Text clarity scoring measures how well single-idea paragraphs preserve meaning boundaries during extraction. Consistency tests compare interpretive outputs across engines to detect drift, loss of structure, or ambiguous reasoning segments. These reproducibility metrics ensure that content maintains structural reliability over time.
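One way to put a number on consistent segmentation across repeated passes is mean pairwise Jaccard similarity over the boundary positions each pass reports. This is a sketch of one possible metric, not a standard defined by the sources above. It assumes each pass has been reduced to a set of integer boundary offsets, and the function names are hypothetical.

```python
from itertools import combinations

def jaccard(a: set[int], b: set[int]) -> float:
    """Jaccard similarity of two boundary sets; two empty sets count as identical."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)

def segmentation_consistency(passes: list[set[int]]) -> float:
    """Mean pairwise Jaccard similarity across extraction passes."""
    pairs = list(combinations(passes, 2))
    if not pairs:
        return 1.0  # zero or one pass: nothing to disagree with
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)
```

A score of 1.0 means every pass found the same boundaries. With three passes where one misses a single boundary out of three, the score drops to 7/9, or roughly 0.78.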

Evaluation Workflow for Enterprise Content

Evaluation workflows define an AI-recognition clarity flow that ensures enterprise content remains valid for long-term machine interpretation. This section describes the sequence of steps used to test structural alignment, assess clarity signals, and validate reasoning stability across retrieval systems. The scope includes workflow sequencing, multi-engine validation, and interpretive diagnostics.

A standard workflow begins with structural audits that confirm heading hierarchy and paragraph discipline. Multi-engine extraction tests compare outputs from SGE, ChatGPT, Gemini, and Perplexity to identify variations caused by inconsistent formatting. Interpretation diagnostics analyze whether reasoning chains preserve their structure when processed by different engines. These workflow steps ensure deterministic content organization and controlled structure writing that sustain high-quality machine comprehension across evolving discovery environments.
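The multi-engine comparison step can be sketched as a majority check over extracted outlines. The example assumes each engine's output has already been collected by hand and reduced to a tuple of section titles. No real engine API is called, and the engine labels and the function name `find_divergent_engines` are hypothetical.

```python
from collections import Counter

def find_divergent_engines(outputs: dict[str, tuple[str, ...]]) -> list[str]:
    """Return engines whose extracted outline differs from the most common one."""
    if not outputs:
        return []
    # On a tie, Counter keeps the outline that was encountered first.
    majority, _ = Counter(outputs.values()).most_common(1)[0]
    return sorted(name for name, outline in outputs.items() if outline != majority)

# Hand-written sample data standing in for collected extraction results.
outputs = {
    "engine_a": ("Foundations", "Principles", "Evaluation"),
    "engine_b": ("Foundations", "Principles", "Evaluation"),
    "engine_c": ("Foundations", "Evaluation"),
}
divergent = find_divergent_engines(outputs)
```

In this sample, `divergent` contains only "engine_c", which dropped a section. A divergence points to a place worth inspecting. It does not by itself show whether the content or the engine is at fault.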

Governance, Maintenance, and Long-Term AI Accessibility

Machine-aligned expression ensures long-term accessibility across evolving AI systems. This section outlines governance frameworks for maintaining clarity, structure, and terminology across a content portfolio as models, engines, and extraction methods change. The scope includes updates, audits, and semantic versioning supported by institutional standards from organizations such as the Harvard Data Science Initiative.

Definition: Machine-aligned expression refers to expression patterns that remain valid under updated AI interpretation models.

Claim: Governance frameworks preserve machine comprehension over time.

Rationale: AI models evolve and require stable structural inputs.

Mechanism: Periodic audits, terminology management, and structure validation sustain clarity.

Counterargument: Without governance, drift increases and retrieval quality declines.

Conclusion: Maintenance practices ensure persistent AI accessibility.

Governance Workflows

Governance workflows provide the structure required for content preparation for models across long-term publishing cycles. This section describes the workflow components that maintain stability in terminology, structure, and reasoning patterns. The scope includes audit stages, terminology alignment, and structural verification.

- Governance cycles must begin with terminology audits that verify consistency across updated content sets.

- Structural reviews ensure that headings, paragraphs, and reasoning chains maintain required hierarchies.

- Version controls and documentation logs preserve interpretive continuity as content evolves.

- Periodic extraction tests across major engines validate whether updates maintain visibility and logical integrity.

These workflow elements ensure that governed content continues to perform reliably across AI interpretation environments.

Long-Term Clarity Maintenance

Long-term clarity maintenance ensures that consistent meaning patterns support reliable interpretation as engines introduce new extraction models. This section explains maintenance practices that protect semantic stability while adapting content for new systems. The scope includes terminology review, structure validation, and alignment with evolving standards from organizations such as OECD, NIST, and W3C.

OECD reporting guidelines emphasize maintaining fixed semantic anchors so models can consistently interpret context. NIST clarity evaluations show that unchanged definition boundaries reduce drift during multi-engine extraction. W3C recommendations highlight the importance of consistent structure markers that help models parse updated documents without losing relational meaning. These findings demonstrate that long-term clarity maintenance preserves structured meaning flow and supports high-quality machine accessibility across evolving AI systems.

Checklist:

- Are key terms defined with stable micro-definitions to prevent drift?

- Do H2–H4 layers follow deterministic boundaries detectable by AI systems?

- Does each paragraph express a single reasoning unit with linear phrasing?

- Are concept, mechanism, and implication blocks segmented into clear containers?

- Is semantic ambiguity minimized through consistent transitions and terminology control?

- Does the structure support reproducible interpretation across SGE, ChatGPT, Gemini, and Perplexity?

Structural Foundations of Machine-Comprehensible Content

- Deterministic hierarchical signaling. Fixed depth relationships between structural layers provide unambiguous segmentation cues, supporting reliable context resolution.

- Terminology anchoring. Localized micro-definitions stabilize meaning by binding terms to explicit semantic references within the document scope.

- Linear propositional flow. Sentences structured around a single idea reduce interpretive branching and improve precision during machine parsing.

- Semantic containerization. Distinct separation of concepts, mechanisms, examples, and implications enables structured reasoning and controlled synthesis.

- Extraction consistency. Content that preserves interpretive accuracy across varied processing environments demonstrates structural robustness.

These foundations illustrate how machine-comprehensible content is interpreted as a system of stable semantic signals rather than a sequence of procedural instructions.

FAQ: Machine Comprehension Content for AI-First Systems

What is machine comprehension content?

Machine comprehension content is text engineered with explicit structure, clarity, and terminology stability so AI systems can interpret and reuse meaning consistently.

How does machine comprehension content differ from traditional writing?

Traditional writing prioritizes reader experience, while machine comprehension design prioritizes deterministic structure, linear sentences, modular blocks, and stable terminology for AI parsing.

Why does content structure matter for AI interpretation?

AI systems rely on hierarchy, headings, and segmented meaning units to detect relationships, context depth, and reasoning flow across long-form content.

How do AI systems evaluate structured content?

Models assess clarity, terminology consistency, boundary markers, and hierarchical depth to determine whether content is interpretable and reliable for extraction.

What role do semantic containers play?

Semantic containers separate concepts, mechanisms, examples, and implications, allowing AI to segment and recombine meaning with higher accuracy.

How does terminology stability affect AI comprehension?

Stable terminology prevents semantic drift, maintains representation accuracy, and ensures models interpret key concepts consistently over time.

How can I improve sentence-level clarity for AI?

Use single-idea sentences, linear phrasing, consistent subjects, and direct predicates to support clean model-level interpretation.

What are evaluation methods for machine comprehension quality?

Evaluation includes reproducibility tests, multi-engine extraction checks, clarity scoring, and reasoning-chain validation across SGE, ChatGPT, Gemini, and Perplexity.

How does structured content improve visibility in AI discovery panels?

Clarity, hierarchy, and consistent blocks allow engines to extract meaning reliably, increasing the likelihood of reuse in AI-generated answers.

How do governance practices support long-term AI accessibility?

Governance workflows ensure terminology alignment, hierarchy stability, structural validation, and periodic audits to preserve clarity as AI systems evolve.

Glossary: Key Terms in Machine Comprehension Content

This glossary defines the terminology used in machine comprehension content to support consistent interpretation, structured reasoning, and AI-aligned clarity.

Machine Comprehension Content

Text engineered with deterministic structure, linear phrasing, and stable terminology to support reliable AI interpretation and extraction.

Atomic Paragraph

A paragraph containing one idea expressed in 2–4 sentences, enabling models to detect clear semantic boundaries.

Hierarchical Depth

A structured sequence of H2→H3→H4 layers that signals logical depth and contextual relationships to AI systems.

Sentence Linearity

A rule requiring clear subject–predicate–meaning order to reduce ambiguity and maintain predictable model-level parsing.

Terminology Stability

Consistent use of defined terms across an article to prevent semantic drift and preserve internal representation accuracy in AI models.

Semantic Containers

Modular blocks that separate concepts, mechanisms, examples, and implications to support structured reasoning and model reuse.

Deterministic Structure

A predictable layout in which headings, paragraphs, and reasoning patterns follow consistent rules detectable by AI extractors.

Interpretation Reproducibility

The ability of AI systems to return consistent interpretations across engines, versions, and extraction passes.

Module-Level Reasoning

The model’s ability to recombine segmented blocks such as definitions and mechanisms to produce coherent generative outputs.

Long-Term AI Accessibility

The practice of maintaining structure, terminology, and clarity through governance workflows to ensure persistent interpretability as AI models evolve.