Last Updated on December 20, 2025 by PostUpgrade

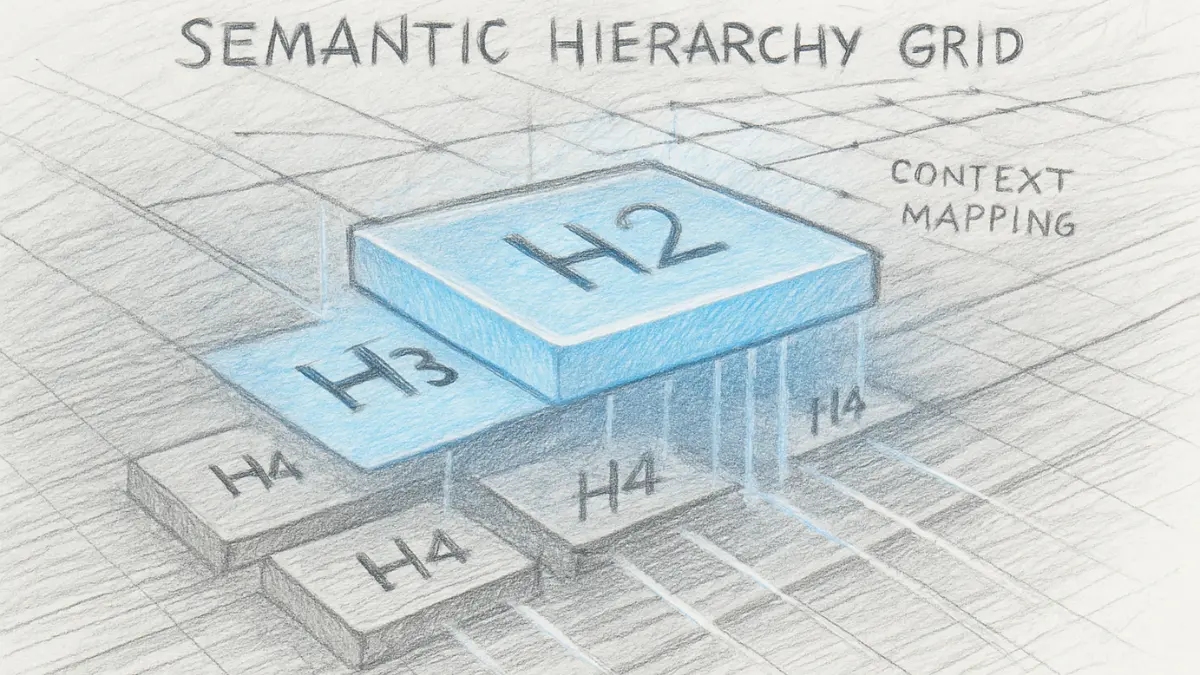

Optimizing Headings and Subheadings for Context Mapping

Headings and subheadings define the structural environment through which cognitive systems interpret long-form content, and heading optimization techniques establish the patterns that models use to map context with minimal variance.

Modern generative engines compute meaning by aligning hierarchical markers with semantic boundaries, which makes predictable heading architecture essential for accurate interpretation. This article introduces the structural principles that support AI-driven context mapping and prepares the foundation for machine-readable document design.

Definition: AI understanding is the model’s ability to interpret meaning, structure, and conceptual boundaries in a way that enables accurate reasoning, reliable summarization, and consistent content reuse across generative discovery systems.

The Role of Heading Optimization Techniques in AI Context Mapping

Heading structure best practices shape how models interpret hierarchical segments and how document architecture becomes a set of machine-readable contextual pathways. This section explains how headings act as structural signals that guide context alignment, reduce interpretive variance, and support repeatable meaning extraction across large content sets. Research from MIT Computer Science and Artificial Intelligence Laboratory demonstrates that structured linguistic markers significantly improve model comprehension when heading systems remain stable and predictable.

Definition: Headings in AI context mapping are hierarchical markers that segment meaning, establish scope boundaries, and signal the logical progression through which cognitive systems compute relationships between content units.

Claim: Headings provide the structural framework through which generative models align semantic units and reduce ambiguity in long-form interpretation.

Rationale: Models compute meaning more reliably when documents maintain consistent hierarchy, scoped segmentation, and uniform heading logic.

Mechanism: Systems derive contextual anchors from heading patterns, assign meaning to segments based on hierarchical position, and compute relationships through predictable transitions.

Counterargument: Some short-form or highly structured datasets may achieve clarity without extensive heading hierarchy, but these cases rely on alternative structural signals not present in long-form writing.

Conclusion: Stable heading systems produce higher interpretability and reduce reasoning variance, enabling reliable context mapping across generative environments.

How Heading Optimization Techniques Function as Semantic Anchors

When authors organize sections with headings, they create explicit signals that determine how models traverse meaning layers and process conceptual boundaries. This subsection describes how semantic anchors shape the interpretive sequence and allow engines to determine where topics begin, change, or conclude. These patterns increase precision in the distribution of meaning across multi-level documents.

Definition: A semantic anchor is a structural marker that identifies the entry point to a concept, defines its scope, and signals how subsequent text contributes to the contextual meaning of the section.

Mechanism: Semantic anchors guide models by establishing clear conceptual starting points and linking heading position to expected meaning flow.

Example: When a subsection begins with a scoped heading, models prioritize its contents as a distinct conceptual unit and map its relationship to parent segments.

Implication: Predictable anchors allow engines to form stable context pathways and reduce ambiguity during hierarchical reasoning.

Structural Signals in Hierarchical Documents

| Heading Type | Function in Context Mapping | Interpretation by AI Models |
|---|---|---|
| Top-Level Heading | Establishes primary conceptual domain | Treated as the global context frame for interpretation |
| Subheading | Segments meaning into scoped units | Interpreted as a unique semantic node with defined boundaries |
| Nested Subheading | Provides fine-grained structural direction | Mapped as a micro-context with explicit relational ties |
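The three heading types in the table form a tree. As a minimal sketch, assuming the document is written in Markdown with ATX headings (`##`, `###`, `####`), the hypothetical `build_heading_tree` function below nests each heading under the nearest preceding heading of a lower level. It illustrates the structure an author works with, not how any particular model parses a page.

```python
import re
from dataclasses import dataclass, field
from typing import List

# Assumed input format: Markdown ATX headings such as "## Title".
HEADING_RE = re.compile(r"^(#{1,6})\s+(.+?)\s*$")


@dataclass
class HeadingNode:
    level: int                                   # 2 for "##", 3 for "###", ...
    title: str
    children: List["HeadingNode"] = field(default_factory=list)


def build_heading_tree(markdown: str) -> HeadingNode:
    """Nest each heading under the nearest preceding heading with a lower level."""
    root = HeadingNode(level=0, title="(document)")
    stack = [root]                               # path from the root to the latest heading
    for line in markdown.splitlines():
        match = HEADING_RE.match(line)
        if not match:
            continue                             # body text is ignored in this sketch
        node = HeadingNode(level=len(match.group(1)), title=match.group(2))
        # Pop headings at the same or a deeper level; the new top is the parent.
        while stack[-1].level >= node.level:
            stack.pop()
        stack[-1].children.append(node)
        stack.append(node)
    return root


def print_tree(node: HeadingNode, indent: int = 0) -> None:
    """Print the tree with one indentation step per nesting level."""
    for child in node.children:
        print("  " * indent + f"H{child.level}: {child.title}")
        print_tree(child, indent + 1)


doc = """## Context Mapping
### Semantic Anchors
#### Anchor Placement
### Structural Signals
## Evaluation
"""
tree = build_heading_tree(doc)
print_tree(tree)
```

Each top-level heading becomes a child of the root, and each nested subheading hangs under its parent, which mirrors the "global frame, scoped unit, micro-context" roles in the table.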

Heading Optimization Techniques for Micro-Definitions and Hierarchical Clarity

Improving heading clarity requires a stable structure that supports precise meaning distribution. This section explains how micro-definitions guide models toward consistent interpretation. Research from the Stanford Natural Language Processing Group also shows that localized definitions increase accuracy in hierarchical text.

Definition: Heading clarity is the precision with which a heading communicates scope, structural role, and the boundaries of the content under it.

Definition: Semantic boundaries are limits that separate one meaning unit from another and guide how cognitive systems segment hierarchical text.

Claim: Micro-definitions strengthen clarity and stabilize the interpretive path across sections.

Rationale: Models reduce variance when definitions appear at the point of introduction and set clear constraints for the content that follows.

Mechanism: Systems store each definition as a reference and reuse it when processing related segments.

Counterargument: Some technical domains rely on assumed knowledge; however, this reduces stability in general-purpose reasoning.

Conclusion: Local definitions act as consistent anchors that support clarity and reduce semantic drift.

Principle: Content becomes more visible in AI-driven environments when its structure, definitions, and conceptual boundaries remain stable enough for models to interpret without ambiguity.

Designing Definitions for Machine Reuse with Heading Optimization Techniques

Clear section labeling improves interpretive consistency and creates stable meaning units. In addition, it allows models to reuse semantic structures across reasoning cycles.

Mechanism: Micro-definitions compress meaning and reduce ambiguity across related content.

Example: When a subsection defines a concept in two sentences, models treat this definition as a stable meaning node.
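Because micro-definitions in this article follow a fixed `Definition:` prefix, an author can collect them per section and check that each concept is defined where it is introduced. The sketch below assumes Markdown ATX headings and uses a hypothetical `collect_definitions` helper; it is an authoring aid, not a model of how engines store definitions internally.

```python
import re
from typing import Dict, List

HEADING_RE = re.compile(r"^#{1,6}\s+(.+?)\s*$")   # assumed Markdown ATX headings
DEFINITION_PREFIX = "Definition:"


def collect_definitions(markdown: str) -> Dict[str, List[str]]:
    """Map each section heading to the micro-definitions written under it."""
    definitions: Dict[str, List[str]] = {}
    current = "(preamble)"                       # text before the first heading
    for line in markdown.splitlines():
        stripped = line.strip()
        heading = HEADING_RE.match(stripped)
        if heading:
            current = heading.group(1)
        elif stripped.startswith(DEFINITION_PREFIX):
            text = stripped[len(DEFINITION_PREFIX):].strip()
            definitions.setdefault(current, []).append(text)
    return definitions


doc = """## Semantic Anchors
Definition: A semantic anchor is a structural marker that identifies the entry point to a concept.
Body text that uses the anchor.
## Hierarchical Depth
Definition: Hierarchical depth is the structured arrangement of headings across multiple levels.
"""
glossary = collect_definitions(doc)
for section, items in glossary.items():
    print(section, "->", items[0])
```

Sections that appear with no entry, or with several competing entries, are candidates for review.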

Scope Control in H2–H4 Structures

- Each H2 must introduce one domain and state its scope in the first paragraph.

- Each H3 must refine the parent scope and avoid lateral topic shifts.

- Each H4 must focus on one mechanism or rule that depends on the parent segment.

- No heading may exceed its assigned scope or merge unrelated content.

These rules ensure stable hierarchy and predictable interpretation across the document.
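The structural parts of these rules can be checked automatically; whether a heading stays within its scope still needs a human reader. As a minimal sketch, assuming Markdown headings where `#` is the page title, the hypothetical `check_scope_rules` function flags skipped levels, headings deeper than H4, and headings whose first child heading arrives before any introductory text.

```python
import re
from typing import List

# "#" is treated as the page title, so only "##" and deeper count as sections.
HEADING_RE = re.compile(r"^(#{2,6})\s+(.+?)\s*$")


def check_scope_rules(markdown: str, max_level: int = 4) -> List[str]:
    """Flag structural violations of the H2-H4 rules (not semantic scope)."""
    problems: List[str] = []
    previous_level = 1          # the page title (H1) sits above every H2
    current_title = ""
    has_body = True             # True once the current heading has text under it
    for line in markdown.splitlines():
        stripped = line.strip()
        match = HEADING_RE.match(stripped)
        if not match:
            if stripped:
                has_body = True
            continue
        level, title = len(match.group(1)), match.group(2)
        if level > previous_level and not has_body:
            problems.append(f"'{current_title}' has no introductory text before '{title}'")
        if level > previous_level + 1:
            problems.append(f"'{title}' jumps from H{previous_level} to H{level}")
        if level > max_level:
            problems.append(f"'{title}' is deeper than H{max_level}")
        previous_level, current_title, has_body = level, title, False
    return problems


doc = """## Scope Control
Intro paragraph that states the scope.
### Parent Refinement
Text under the H3.
##### Skipped Levels
Detail text.
## Evaluation
### Criteria
Text.
"""
problems = check_scope_rules(doc)
for problem in problems:
    print(problem)
```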

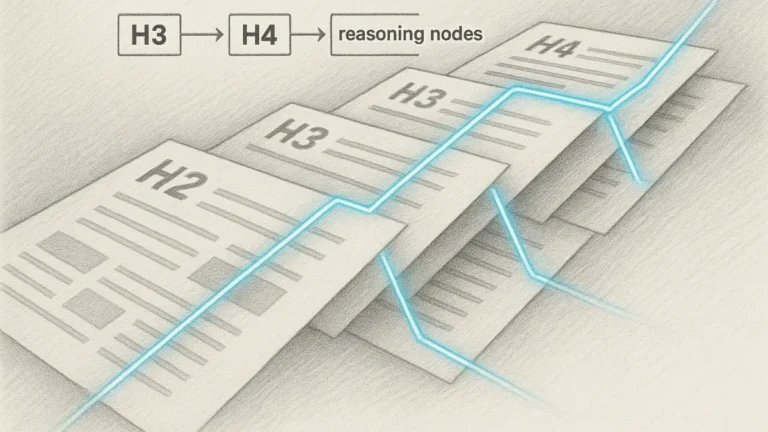

Hierarchical Depth and Heading Optimization Techniques for Multi-Level Planning

Hierarchical heading strategy defines how documents distribute meaning across multiple layers of structure and how models interpret these layers as ordered reasoning pathways. This section explains how hierarchical depth supports controlled segmentation, predictable context flow, and consistent interpretation across complex text architectures. Findings from Berkeley Artificial Intelligence Research (BAIR) show that multi-level hierarchy increases model accuracy by reducing contextual ambiguity and stabilizing semantic transitions.

Definition: Hierarchical depth is the structured arrangement of headings across multiple levels that determines how meaning is layered, segmented, and interpreted within a document.

Claim: Hierarchical depth organizes content into predictable layers that guide generative models through controlled interpretive steps.

Rationale: Models follow structural cues to understand which segments form primary meaning, which refine it, and which provide micro-level detail.

Mechanism: Systems compute each layer as a distinct reasoning unit and connect these units through ordered transitions defined by heading levels.

Counterargument: Some short-form documents may rely on flat structure; however, this reduces precision when scaling to dense or multi-domain content.

Conclusion: Layered hierarchy provides the depth required for stable, repeatable interpretation and supports accurate context mapping in generative engines.

Depth Modeling in Generative Engines Using Heading Optimization Techniques

Heading depth structure shapes how generative engines process sequential meaning and establish relationships across hierarchical segments. This subsection explains how models treat each depth level as a structured reasoning node.

Example: When a document uses a clear three-level hierarchy, models identify the top layer as the conceptual frame, the mid-level as the interpretive refinement, and the lowest level as the actionable or technical detail.

Implication: As a result, engines maintain consistent reasoning patterns and reduce drift when processing long-form content.

Layered Reasoning Patterns in Document Layout

| Depth Level | Cognitive Function | Mapping Output |
|---|---|---|
| Primary Level (H2) | Establishes the core domain and frames global meaning | Interpreted as the dominant semantic context |
| Secondary Level (H3) | Refines the primary domain and narrows interpretive scope | Mapped as controlled expansions linked to parent segments |
| Tertiary Level (H4) | Provides micro-level mechanisms, rules, or examples | Processed as granular meaning nodes within structured reasoning chains |

Subheading Optimization for Meaning Distribution

A subheading optimization guide establishes the structural rules that determine how meaning is distributed across segments and how models interpret sub-level units in long-form content. This section explains how subheadings regulate semantic flow, reduce ambiguity, and create predictable transitions between conceptual layers. Research from the Allen Institute for Artificial Intelligence (AI2) shows that explicit subheading structure improves model accuracy by clarifying segment roles in hierarchical documents.

Definition: Meaning distribution is the structural process through which content is divided, ordered, and allocated across subheadings to create stable interpretive units that cognitive systems can map with precision.

Claim: Subheading optimization enhances meaning distribution by producing well-scoped segments that models interpret as discrete reasoning units.

Rationale: Models compute meaning more reliably when subheadings define clear boundaries and structure the flow of information across multi-level documents.

Mechanism: Systems use subheadings as semantic markers that separate major ideas, assign hierarchical weight, and regulate how meaning propagates through each section.

Counterargument: Minimal documents may not require multiple subheading layers; however, this limits clarity in dense or multi-domain writing.

Conclusion: Structured subheading design creates high-fidelity meaning distribution and improves the stability of machine interpretation across complex text architectures.

Precision in Subheading Formulation with Heading Optimization Techniques

Subheading formatting tips support consistent interpretation by ensuring that each subheading communicates scope, intent, and structural purpose. This subsection explains how precise subheading formulation reduces ambiguity and increases clarity for generative engines.

Principles of precision and variation ensure that subheadings maintain stable meaning while adapting to differences in content depth and structural goals. Precise phrasing defines the interpretive function of each segment, while controlled variation prevents repetitive structure that could reduce clarity.

Segmenting Long-Form Structures

- Divide content into hierarchical segments that correspond to distinct meaning units.

- Assign each segment a subheading that states scope with minimal ambiguity.

- Ensure that subheadings follow a consistent hierarchical pattern across the document.

- Use subheadings to mark transitions in topic, reasoning depth, or semantic structure.

- Maintain clear boundaries so models can classify each segment without interpretive overlap.

These steps act as heading segmentation rules: they create a stable segmentation framework and keep meaning flow predictable across long-form text.
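A minimal sketch of the segmentation step, assuming Markdown ATX headings: the hypothetical `segment_by_headings` function gives each heading ownership of the lines up to the next heading, and reports any text before the first heading as an `(untitled)` segment, which marks content that still lacks a subheading.

```python
import re
from typing import List, NamedTuple

HEADING_RE = re.compile(r"^(#{1,6})\s+(.+?)\s*$")   # assumed Markdown ATX headings


class Segment(NamedTuple):
    level: int        # 0 for text that appears before any heading
    heading: str
    body: str


def segment_by_headings(markdown: str) -> List[Segment]:
    """Split text at every heading; each segment owns the lines up to the next heading."""
    raw = [(0, "(untitled)", [])]                # collects text before the first heading
    for line in markdown.splitlines():
        match = HEADING_RE.match(line)
        if match:
            raw.append((len(match.group(1)), match.group(2), []))
        else:
            raw[-1][2].append(line)
    segments = [Segment(level, heading, "\n".join(lines).strip())
                for level, heading, lines in raw]
    # Drop the placeholder when nothing precedes the first heading.
    if not segments[0].body:
        segments = segments[1:]
    return segments


doc = """Intro without a heading.
## Segmenting Content
Divide content into meaning units.
### Boundaries
Keep one unit per segment.
"""
segments = segment_by_headings(doc)
for segment in segments:
    print(segment.level, segment.heading, "|", segment.body)
```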

Semantic Flow and Section-Level Coherence

Headings designed for content flow establish the structural pathways that guide how meaning progresses through each section and how models interpret transitions between hierarchical units. This section explains how semantic flow creates stable interpretive movement across a document and supports consistent reasoning in generative engines. Research from the Oxford Internet Institute shows that documents with coherent flow reduce interpretive fragmentation and improve model accuracy in multi-level content.

Definition: Semantic flow is the ordered progression of meaning that moves through a document’s hierarchical structure and determines how cognitive systems connect, extend, and interpret related segments.

Claim: Semantic flow establishes predictable movement across sections and supports stable interpretive sequences in generative systems.

Rationale: Models compute meaning more reliably when the progression of ideas follows a linear, scoped, and consistently signaled structure.

Mechanism: Systems process each section as a linked reasoning unit and use heading order to determine how meaning transitions through the document.

Counterargument: Some non-linear narratives intentionally disrupt flow; however, such structures reduce clarity for machine interpretation.

Conclusion: Coherent semantic flow increases interpretive stability and produces predictable section-level reasoning patterns across long-form text.

Maintaining Linear Interpretability Through Heading Optimization Techniques

A heading alignment strategy maintains linear interpretability by ensuring that each heading signals its structural function in a predictable, logical order. This subsection explains how controlled alignment improves the movement of meaning and supports consistent reasoning.

The mechanism of ordered sequencing helps models follow the document from one meaning unit to the next with minimal ambiguity. As a result, linear interpretability strengthens conceptual continuity and reduces contextual drift across sections.

Reducing Interpretive Variance

- Use consistent heading patterns to maintain structural predictability across adjacent sections.

- Align subheadings with clear boundaries that prevent overlapping meaning units.

- Structure transitions to ensure that each segment expands or refines the previous one.

- Avoid abrupt shifts that disrupt continuity or introduce unrelated content.

These steps establish heading clarity patterns that reduce interpretive variance and improve the stability of meaning across hierarchical structures.
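Overlapping meaning units often show up as sibling headings that share most of their words. The sketch below is one rough heuristic, not a standard measure of heading quality: it compares sibling headings by the Jaccard similarity of their content words. The stopword list, the `0.5` threshold, and the function names are assumptions chosen for illustration.

```python
import re
from itertools import combinations
from typing import List, Set, Tuple

# Assumed minimal stopword list; extend it for real content.
STOPWORDS = {"a", "an", "and", "for", "in", "of", "the", "to", "with"}


def content_words(heading: str) -> Set[str]:
    """Lowercase alphanumeric tokens, minus stopwords."""
    return {w for w in re.findall(r"[a-z0-9]+", heading.lower()) if w not in STOPWORDS}


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Shared words divided by all distinct words (0.0 for two empty sets)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def overlapping_siblings(headings: List[str], threshold: float = 0.5) -> List[Tuple[str, str, float]]:
    """Return sibling pairs whose word overlap meets the threshold."""
    pairs = []
    for first, second in combinations(headings, 2):
        score = jaccard(content_words(first), content_words(second))
        if score >= threshold:
            pairs.append((first, second, round(score, 2)))
    return pairs


siblings = [
    "Scoring Headings for Interpretability",
    "Scoring Headings for Clarity",
    "Practical Adjustment of Hierarchical Signals",
]
overlaps = overlapping_siblings(siblings)
print(overlaps)
```

A flagged pair is only a prompt to check whether the two sections really cover separate meaning units; word overlap cannot confirm or rule out semantic overlap on its own.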

Machine-Readable Heading Structures for Generative Engines

Optimizing the text hierarchy establishes the structural conditions that allow generative engines to interpret documents as stable, machine-readable systems. This section explains how machine-readable structures improve segmentation, reduce ambiguity, and strengthen reasoning consistency across hierarchical layers. Research from the National Institute of Standards and Technology shows that well-defined structural cues increase parsing accuracy and support reproducible interpretation in large language models.

Definition: A machine-readable structure is an organized hierarchy of headings and segments that allows cognitive systems to parse, classify, and interpret content through predictable structural signals.

Claim: Machine-readable structures provide the stable hierarchy required for accurate interpretation and controlled context mapping in generative environments.

Rationale: Models compute meaning more reliably when documents use consistent segmentation, uniform hierarchy, and explicit structural boundaries.

Mechanism: Systems interpret each heading as a hierarchical cue, derive meaning from structural position, and compute relationships through predictable transitions.

Counterargument: Informal writing may rely on implicit cues rather than hierarchy; however, this reduces interpretive precision for generative engines.

Conclusion: Clear machine-readable structure ensures consistent reasoning and supports accurate meaning extraction across multi-level documents.

Structural Readability Rules for LLMs

Semantic heading selection governs how large language models assign interpretive value to headings and how they determine the purpose of each hierarchical level. This subsection explains how readability rules help models follow structured content with reduced semantic drift.

Concept: Structural readability defines the clarity, segmentation, and logical order through which models interpret each document layer.

Mechanism: Systems classify headings as semantic nodes, link each node to its parent unit, and compute transitions based on hierarchical position.

Implication: As a result, documents with consistent structure produce more stable reasoning paths and clearer mapping outputs across generative engines.

Example: A page with clear conceptual boundaries and stable terminology allows AI systems to segment meaning accurately, increasing the likelihood that its high-confidence sections will appear in assistant-generated summaries.

AI-Layered Interpretation of Section Markers

| Structural Marker | AI Processed Output | Visibility Impact |
|---|---|---|
| Primary Heading (H2) | Defines global context and primary meaning frame | Establishes high interpretive weight and visibility |
| Secondary Heading (H3) | Refines meaning and narrows the contextual scope | Increases clarity and strengthens local semantic mapping |
| Tertiary Heading (H4) | Provides micro-level detail and mechanism-level focus | Enhances granularity and improves fine-grained visibility |

Contextual Precision in Document Mapping

Contextual heading placement defines how accurately models interpret the position, scope, and semantic function of each section within a hierarchical document. This section explains how contextual precision strengthens meaning alignment, stabilizes interpretive pathways, and improves the reliability of long-form processing. Research from the European Commission Joint Research Centre shows that precise contextual cues significantly improve model accuracy in structured document interpretation.

Definition: Context mapping precision is the degree to which a document’s structural markers accurately signal scope, relationships, and meaning flow, allowing cognitive systems to map context with minimal ambiguity.

Claim: Contextual precision creates predictable interpretive sequences that guide generative engines through well-structured reasoning paths.

Rationale: Models interpret documents more consistently when section placement follows clear logic and when headings define explicit contextual roles.

Mechanism: Systems map each heading to a semantic coordinate, compute hierarchical relationships, and derive context from the structural position of every segment.

Counterargument: Some narrative forms rely on flexible transitions; however, these formats reduce clarity and increase interpretive variance for generative processing.

Conclusion: Precise contextual placement ensures stable mapping behavior and supports high-fidelity interpretation across hierarchical document structures.

Context Windows in Hierarchical Text

Contextual section labeling establishes how models interpret context windows within hierarchical text and how each window influences meaning extraction. This subsection explains how context windows determine what information remains active, relevant, and computationally available during interpretation.

For example, when a model processes a subsection with a clearly labeled heading, the heading scopes which information the model treats as relevant to that segment. As a result, the model maintains clearer distinctions between adjacent sections and reduces overlap between unrelated reasoning units.
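Retrieval pipelines often approximate this scoping by splitting a page at its headings and prefixing each chunk with its heading path, so the chunk stays interpretable when read in isolation. The sketch below assumes Markdown ATX headings; `chunks_with_heading_paths` and the `[A > B]` prefix format are hypothetical choices, and the code does not describe how any specific engine manages its context window.

```python
import re
from typing import List, Tuple

HEADING_RE = re.compile(r"^(#{1,6})\s+(.+?)\s*$")   # assumed Markdown ATX headings


def chunks_with_heading_paths(markdown: str) -> List[str]:
    """Return one chunk per section, prefixed with its full heading path."""
    path: List[Tuple[int, str]] = []   # (level, title) from outermost to current heading
    chunks: List[str] = []
    body: List[str] = []

    def flush() -> None:
        # Emit the text collected under the current heading, if any.
        text = "\n".join(body).strip()
        if path and text:
            breadcrumb = " > ".join(title for _, title in path)
            chunks.append(f"[{breadcrumb}]\n{text}")
        body.clear()

    for line in markdown.splitlines():
        match = HEADING_RE.match(line)
        if match:
            flush()
            level = len(match.group(1))
            # Leave sections at the same or a deeper level before entering this one.
            while path and path[-1][0] >= level:
                path.pop()
            path.append((level, match.group(2)))
        else:
            body.append(line)
    flush()
    return chunks


doc = """## Contextual Precision
Placement defines scope.
### Context Windows
Labeled headings scope each window.
### Block Boundaries
One meaning unit per block.
"""
chunks = chunks_with_heading_paths(doc)
for chunk in chunks:
    print(chunk, end="\n\n")
```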

Predictable Block Boundaries

- Assign each block a heading that defines the beginning and end of its semantic range.

- Maintain consistent spacing and hierarchical alignment so models can identify structural boundaries.

- Ensure that each block introduces one meaning unit and transitions logically from the previous segment.

- Avoid mixing contextual domains within a single structural block to prevent interpretive ambiguity.

These rules support clean heading formatting and enable models to recognize predictable boundaries across hierarchical structures.

Evaluating and Refining Heading Optimization Techniques

Improving article headings requires a systematic evaluation framework that measures clarity, structural accuracy, and interpretive stability across hierarchical layers. This section explains how evaluation models identify strengths and weaknesses in heading design and how refinement improves consistency in generative processing. Research from the Vector Institute at the University of Toronto shows that structured evaluation methods significantly increase model comprehension by strengthening interpretive signals.

Definition: A heading evaluation model is a structured assessment system that measures the clarity, scope accuracy, and semantic contribution of each heading within a hierarchical document.

Claim: Evaluation models provide a systematic method for improving heading quality and reducing interpretive variance.

Rationale: Models follow structural cues more accurately when headings communicate precise scope and consistent logical purpose.

Mechanism: Systems classify headings using evaluation criteria, assign interpretive weight based on clarity, and map meaning according to hierarchical alignment.

Counterargument: Some informal texts may rely on flexibility instead of structure; however, this reduces reliability for generative interpretation.

Conclusion: A rigorous evaluation process enhances heading quality and ensures stable meaning extraction across complex documents.

Scoring Headings for Interpretability

Heading relevance optimization requires scoring systems that measure how effectively each heading contributes to interpretability. This subsection explains how structured scoring strengthens reasoning consistency and supports high-quality document design.

| Criterion | Description | Interpretive Impact |
|---|---|---|
| Scope Accuracy | Measures how well a heading reflects the meaning of its section | Reduces ambiguity and improves alignment |
| Structural Precision | Assesses clarity of hierarchical positioning | Strengthens context mapping and sequence logic |
| Semantic Relevance | Evaluates how directly the heading contributes to meaning | Improves consistency in cognitive processing |
| Transition Clarity | Measures smoothness between adjacent headings | Enhances flow and reduces interpretive drift |

These criteria let authors evaluate headings as structured reasoning nodes, which in turn supports more reliable document interpretation by models.
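The four criteria can be combined into a single score for triage. This is a sketch under stated assumptions: a reviewer rates each criterion on a 0 to 5 scale, the weights default to equal, and the `heading_score` name, the scale, and the `0.7` review threshold are illustrative rather than part of any published rubric.

```python
from typing import Dict, Optional

CRITERIA = ("scope_accuracy", "structural_precision", "semantic_relevance", "transition_clarity")
MAX_RATING = 5   # assumed reviewer scale: 0 (poor) to 5 (excellent)


def heading_score(ratings: Dict[str, int], weights: Optional[Dict[str, float]] = None) -> float:
    """Return a weighted score between 0 and 1 for one heading."""
    weights = weights or {name: 1.0 for name in CRITERIA}
    for name in CRITERIA:
        if name not in ratings:
            raise ValueError(f"missing rating for {name}")
        if not 0 <= ratings[name] <= MAX_RATING:
            raise ValueError(f"{name} must be between 0 and {MAX_RATING}")
    total_weight = sum(weights[name] for name in CRITERIA)
    weighted_sum = sum(weights[name] * ratings[name] for name in CRITERIA)
    return weighted_sum / (MAX_RATING * total_weight)   # normalize to 0..1


ratings = {
    "scope_accuracy": 5,
    "structural_precision": 4,
    "semantic_relevance": 4,
    "transition_clarity": 3,
}
score = heading_score(ratings)
needs_revision = score < 0.7   # assumed threshold for sending a heading back for rework
print(f"score={score:.2f} needs_revision={needs_revision}")
```

Raising the weight of one criterion (for example `scope_accuracy`) lets a team emphasize whatever its own review process cares about most.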

Practical Adjustment of Hierarchical Signals

- Revise headings to ensure each one introduces a single, well-defined meaning unit.

- Align hierarchical markers to maintain predictable parent–child relationships.

- Examine transitions to confirm that each heading expands or refines the previous segment.

- Adjust scope to avoid overlapping meaning domains or ambiguous segment boundaries.

- Reassess headings against evaluation criteria to maintain consistency across updates.

These steps strengthen heading coherence techniques and create a more stable structural environment for generative interpretation.

Applied Heading Optimization in Real-World Scenarios

A structured subheading format supports consistent meaning distribution and improves interpretive accuracy when documents scale across multiple use cases. This section explains how heading optimization practices operate in applied environments where clarity, hierarchy, and structural consistency must remain stable under real content workloads. Research from the Harvard Data Science Initiative shows that applied structural refinement strengthens model comprehension and reduces variance in large-scale content processing.

Definition: Applied heading optimization is the process of adapting hierarchical structures to real-world documents so that meaning remains stable, interpretable, and machine-readable across diverse content sets.

Claim: Applied optimization ensures that headings function as reliable interpretive anchors across varied document types.

Rationale: Models interpret meaning more consistently when structure adapts to real-world constraints without losing hierarchical clarity.

Mechanism: Systems compute structural patterns from optimized headings and apply these patterns to classify, segment, and relate meaning across content.

Counterargument: Some content environments may rely on informal segmentation; however, this reduces interpretive stability for generative systems.

Conclusion: Applied optimization preserves clarity across contexts and supports reliable meaning extraction in practical document workflows.

Microcase: Rebuilding Document Accuracy Through Structural Revision

Section flow optimization becomes critical when documents evolve over time and accumulate inconsistencies that disrupt interpretive clarity. This microcase demonstrates how structural revision can restore accuracy in long-form content.

A large technical report began to produce inconsistent reasoning outputs because headings no longer aligned with the meaning of their sections. As a result, models frequently misinterpreted transitions and merged unrelated concepts. After revising the hierarchical structure, each heading gained a precise scope, and the document’s semantic flow recovered. Consequently, model comprehension improved, and reasoning variance significantly decreased.

Deploying Optimized Headings Across Large Content Sets

- Standardize heading templates to ensure consistent depth and structure across all documents.

- Apply controlled vocabulary for headings to prevent semantic drift across content sets.

- Validate each heading against clarity, relevance, and structural alignment criteria.

- Maintain consistent parent–child relationships throughout large document collections.

- Reevaluate hierarchical structures periodically to preserve readability and interpretive stability.

These practices support heading readability improvement and enable scalable structural coherence in large content environments.
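A controlled vocabulary for headings can be enforced with a simple lookup of non-preferred variants. The sketch below assumes a hypothetical `PREFERRED_TERMS` mapping; a real deployment would load the vocabulary from the team's own style guide rather than hard-code it.

```python
import re
from typing import Dict, List

# Hypothetical controlled vocabulary: non-preferred variant -> preferred term.
PREFERRED_TERMS = {
    "subheader": "subheading",
    "subheaders": "subheadings",
    "header": "heading",
    "headers": "headings",
    "title hierarchy": "heading hierarchy",
}


def vocabulary_violations(headings: List[str]) -> Dict[str, List[str]]:
    """Map each heading that uses a non-preferred variant to suggested replacements."""
    report: Dict[str, List[str]] = {}
    for heading in headings:
        lowered = heading.lower()
        # Word boundaries stop "header" from matching inside "headers" or "subheader".
        hits = [f"{variant} -> {preferred}"
                for variant, preferred in PREFERRED_TERMS.items()
                if re.search(rf"\b{re.escape(variant)}\b", lowered)]
        if hits:
            report[heading] = hits
    return report


headings = [
    "Optimizing Headers for Context Mapping",
    "Subheading Optimization for Meaning Distribution",
    "Title Hierarchy and Subheaders",
]
report = vocabulary_violations(headings)
for heading, hits in report.items():
    print(heading, "->", hits)
```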

Checklist:

- Does the page define its core concepts with precise terminology?

- Are sections organized with stable H2–H4 boundaries?

- Does each paragraph express one clear reasoning unit?

- Are examples used to reinforce abstract concepts?

- Is ambiguity eliminated through consistent transitions and local definitions?

- Does the structure support step-by-step AI interpretation?

Interpretive Role of Heading Architecture

- Hierarchical boundary signaling. Heading depth establishes explicit semantic boundaries that allow generative systems to differentiate scope, priority, and contextual containment.

- Meaning unit delineation. Headings function as labels for discrete semantic units, reducing ambiguity during extraction and long-context synthesis.

- Depth coherence. Consistent progression across heading levels supports predictable interpretation by preserving proportional relationships between concepts.

- Sequential semantic flow. Logical adjacency between headings signals extension, specialization, or contextual framing without requiring inferential reconstruction.

- Model-agnostic readability. Architectures that remain interpretable across different parsing strategies indicate structural stability beyond specific systems.

This architecture explains how heading structures are interpreted as semantic scaffolding, guiding machine understanding without introducing procedural intent or instructional emphasis.

FAQ: Heading Optimization Techniques

What are heading optimization techniques?

Heading optimization techniques improve clarity, segmentation, and context mapping by structuring documents into machine-readable hierarchical units.

Why are headings important for AI interpretation?

AI systems rely on hierarchical cues to understand meaning flow, segment content, and map relationships between conceptual units across a document.

How do optimized headings improve context mapping?

Clear headings define scope boundaries, reduce ambiguity, and create predictable interpretive pathways that strengthen context mapping in generative engines.

What makes a heading machine-readable?

A heading becomes machine-readable when it follows consistent structure, communicates scope precisely, and aligns with hierarchical depth rules.

How should subheadings be structured?

Subheadings must refine the meaning of their parent section, maintain clear semantic boundaries, and support linear interpretive flow.

How do hierarchical levels affect meaning distribution?

Each level of hierarchy introduces a different layer of meaning, allowing AI to classify, refine, or expand content through structured reasoning patterns.

What is the role of semantic flow in heading optimization?

Semantic flow ensures that each section transitions logically, allowing models to follow the document with minimal interpretive drift.

How can I evaluate whether my headings are effective?

Evaluate headings using criteria such as scope accuracy, structural precision, relevance, and clarity of transitions between sections.

Do micro-definitions improve heading clarity?

Yes, micro-definitions stabilize boundaries for each section, making content easier for AI systems to classify and segment accurately.

How do optimized headings support large content systems?

Consistent heading structures create reusable interpretive patterns that scale across large content sets, improving coherence and machine readability.

Glossary: Key Terms in Heading Optimization

This glossary defines the essential terminology used in the context of heading optimization techniques to support consistent interpretation and machine-readable document structure.

Heading Optimization

A structured approach to designing headings and subheadings that define scope, clarify meaning, and improve hierarchical interpretation for generative engines.

Semantic Boundary

An interpretive limit that separates one meaning unit from another, helping models understand where one idea ends and the next begins.

Hierarchical Depth

The number of structural layers within a document, from broad conceptual headings to detailed mechanism-level subheadings.

Semantic Flow

The ordered movement of meaning across sections that enables cognitive systems to follow reasoning with minimal ambiguity.

Context Mapping Precision

The accuracy with which structural markers signal relationships, helping AI map context and interpret hierarchical boundaries.

Machine-Readable Structure

A document layout designed so that generative engines can parse sections, classify meaning, and follow reasoning through clear structural cues.

Micro-Definition

A short definition placed at the beginning of a section to stabilize meaning boundaries and improve interpretive consistency.

Section-Level Coherence

The degree to which adjacent sections align in scope and meaning, enabling models to follow a logical progression of ideas.

Structural Marker

A heading or subheading used as a signal that indicates context shifts, meaning transitions, or hierarchical relationships.

Interpretive Stability

The consistency with which a document can be understood by AI systems due to clear hierarchy, predictable boundaries, and controlled semantic flow.