Last Updated on December 20, 2025 by PostUpgrade

How to Build a Generative Optimization Prioritization Guide

Generative optimization requires a structured process that evaluates page importance through a unified generative optimization prioritization guide. Organizations rely on consistent criteria that define semantic value, content relevance, and model-readability.

Prioritizing pages for generative optimization establishes an ordered improvement sequence that supports scalable enhancements across large content ecosystems. This structured approach creates predictable conditions for AI comprehension and strengthens long-term retrieval stability.

Definition: Generative optimization page prioritization is the structured process through which pages are evaluated, ranked, and sequenced based on their clarity, semantic stability, and readiness for AI-driven interpretation across generative discovery systems.

Foundation of Generative Optimization Page Prioritization

This section introduces generative optimization page prioritization as the core framework for selecting which pages require structured enhancement first. Research from the MIT Computer Science and Artificial Intelligence Laboratory shows that ordered sequencing improves model-level retrieval stability across large content networks. The purpose of this section is to establish the conceptual basis for prioritizing content for AI-driven visibility and to define how prioritization supports scalable AI comprehension across large websites.

Generative optimization page prioritization is the systematic evaluation of pages to determine which assets deliver the highest AI visibility returns.

Content prioritization operates through structured semantic grouping that organizes large page inventories into interpretable layers. Each layer reflects a specific functional purpose within the broader domain structure, which provides models with consistent interpretive anchors. This layered view establishes predictable classification points for entity extraction, reasoning alignment, and retrieval weighting.

Prioritization also functions as a stabilizing mechanism that prevents fragmentation across expanding content ecosystems. When new pages are introduced without structural ordering, models receive inconsistent signals that weaken cross-page coherence. A controlled prioritization framework ensures that high-value assets form the stable backbone of the cluster, while secondary assets integrate into the hierarchy without disrupting interpretive flow.

Principle: Prioritization becomes effective only when pages maintain stable definitions, explicit hierarchical boundaries, and predictable semantic structures that allow generative models to resolve meaning without ambiguity.

Claim: Generative optimization requires ordered page sequencing to ensure consistent AI comprehension across content clusters.

Rationale: Model retrieval patterns depend on structured priority paths aligned with semantic relevance.

Mechanism: Systems evaluate content hierarchy using scoring indicators influenced by clarity, evidence, and structural depth.

Counterargument: Pages with low lexical density or unclear purpose may not show measurable improvement despite prioritization.

Conclusion: A priority-first approach produces predictable generative outcomes across interconnected page ecosystems.

Role of Content Architecture in Prioritization

This subsection explains how content architecture shapes the interpretive pathways models use when determining page importance. Its purpose is to define segmentation principles that support consistent model interpretation. The scope includes hierarchical structuring and priority rule formation.

Hierarchical segmentation rules

This micro-section introduces the rules that define how content must be segmented to support model comprehension. The purpose is to establish predictable boundaries that reduce ambiguity across large text structures. The scope includes segmentation depth, unit size, and sequencing logic.

Hierarchical segmentation rules require each content block to represent a single conceptual unit with a stable structural pattern. A segment must contain clear syntactic boundaries and maintain a fixed scope to avoid creating interpretive overlap. Each block must retain consistent sentence density to ensure predictable retrieval behavior during AI-driven parsing.

Segmentation rules also require consistent ordering from broader conceptual layers to narrower detail layers. This sequencing allows models to build internal representations progressively instead of interpreting fragmented information. Clear separation of conceptual, procedural, and evidential segments ensures that the model can classify each unit within its correct structural category.

Definition of priority tiers

This micro-section defines the tier system used to categorize pages according to their expected contribution to generative visibility. Its purpose is to create a consistent priority hierarchy for allocating optimization resources. The scope includes tier characteristics, evaluation markers, and tier-based sequencing logic.

Priority tiers classify pages into structured groups based on their semantic contribution, model-readiness, and evidence density. Tier 1 includes pages with central conceptual value, strong entity structures, and high retrieval impact. These pages anchor the visibility flow and require optimization before any other tier. Tier 2 contains pages with supportive conceptual depth or secondary relevance but strong alignment with primary topics. Their priority arises from structural adjacency rather than intrinsic centrality.

Tier 3 includes pages with low immediate retrieval significance but necessary to maintain cluster completeness. These pages provide breadth but do not drive core visibility signals. Tier classification ensures that optimization begins with assets that produce the highest generative returns and proceeds in a controlled sequence across the site architecture. This structure prevents resource fragmentation and maintains stable model-level interpretation across large ecosystems.
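The tier logic above can be sketched in a few lines. This is a minimal illustration, not a prescribed method: the `Page` fields, the 0.0 to 1.0 rating scale, and the two thresholds are all assumptions introduced here, since the text does not specify numeric cut-offs.

```python
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    semantic_contribution: float  # assumed 0.0-1.0 editorial rating
    model_readiness: float        # assumed 0.0-1.0 editorial rating
    evidence_density: float       # assumed 0.0-1.0 editorial rating


def assign_tier(page: Page, tier1_min: float = 0.75, tier2_min: float = 0.45) -> int:
    """Return 1, 2, or 3 from the mean of the three ratings (thresholds are hypothetical)."""
    mean = (page.semantic_contribution + page.model_readiness + page.evidence_density) / 3
    if mean >= tier1_min:
        return 1
    if mean >= tier2_min:
        return 2
    return 3


def optimization_order(pages: list[Page]) -> list[Page]:
    """Sort Tier 1 first, then Tier 2, then Tier 3; pages in the same tier keep input order."""
    return sorted(pages, key=assign_tier)
```

A plain mean treats the three factors equally. A site that values evidence more than readiness, for example, would swap in its own weighting before applying the thresholds.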

Mapping High-Value Sections

This subsection describes how high-value sections function as structural anchors within the prioritization model. It outlines the elements that determine high-impact segments based on clarity, terminology consistency, evidence presence, and early-page placement. Each component contributes to predictable AI interpretation.

| Component | Description | AI Value | Priority Impact |
|---|---|---|---|
| Structure | Predictable hierarchy | High | Ensures early inclusion in ranking models |
| Terminology | Stable vocabulary | High | Strengthens retrievable meaning |
| Evidence | Trusted references | Very high | Increases factual weight |
| Placement | Early-page priority | Moderate | Improves initial model interpretation |

This matrix defines the core structural indicators used to determine section-level prioritization.

Criteria for AI Page Prioritization in Enterprise Systems

This section defines the criteria used to evaluate pages within a generative optimization prioritization guide and explains how structured indicators determine the order in which pages should be optimized. Research from the Stanford Natural Language Processing Group shows that consistent structural cues and stable linguistic boundaries significantly improve model interpretation accuracy across large document collections. The goal of this section is to introduce measurable evaluation factors that support scalable prioritization across enterprise-level content ecosystems.

An AI page prioritization framework is a structured set of scoring components that determines the optimization sequence.

Claim: Prioritization criteria provide AI models with predictable signals for evaluating content importance.

Rationale: Models interpret structural cues derived from clarity, consistency, and entity stability.

Mechanism: Criteria apply weighted scoring influencing how generative engines redistribute semantic relevance.

Counterargument: A single criterion cannot represent page value fully across diverse content categories.

Conclusion: Multi-dimensional evaluation produces balanced and scalable prioritization for generative systems.

Structural criteria

This subsection introduces structural criteria as fundamental indicators that define how pages are organized for model interpretation. Its purpose is to establish measurable format-based elements that influence priority scoring. The scope includes hierarchical design and segmentation logic.

Heading depth

This micro-section defines heading depth as an indicator of hierarchical clarity within a page. Its purpose is to quantify how effectively the heading structure guides model traversal. The scope includes depth intervals, section uniformity, and interpretive stability.

Heading depth determines whether content is distributed across predictable H2–H4 ranges that support stable model parsing. A consistent heading depth reduces ambiguity and prevents models from misclassifying concept layers. Structured depth also ensures that reasoning patterns are exposed in a controlled sequence, improving retrieval precision across generative systems.
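One rough way to audit heading depth is to extract the heading levels from a page's HTML and flag anything outside the H2 to H4 range or any skipped level. The sketch below assumes the page is available as an HTML string and that H1 is reserved for the page title; the function names are hypothetical, and a regular expression is only a quick approximation of what a real HTML parser would do.

```python
import re

# Matches the opening of <h1> ... <h6> tags, with or without attributes.
HEADING_RE = re.compile(r"<h([1-6])\b", re.IGNORECASE)


def heading_levels(html: str) -> list[int]:
    """Return heading levels in document order, e.g. [1, 2, 3, 2]."""
    return [int(level) for level in HEADING_RE.findall(html)]


def heading_depth_issues(html: str, min_level: int = 2, max_level: int = 4) -> list[str]:
    """List body headings outside the allowed range and any skipped levels."""
    issues = []
    previous = None
    for level in heading_levels(html):
        if level == 1:
            previous = level  # assumed: h1 is the page title and is not range-checked
            continue
        if not min_level <= level <= max_level:
            issues.append(f"h{level} is outside h{min_level}-h{max_level}")
        if previous is not None and level > previous + 1:
            issues.append(f"h{previous} jumps to h{level}")
        previous = level
    return issues
```

An empty result means the page stays within the expected range and never jumps more than one level down at a time. The number of issues per page could feed into a structural score.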

Semantic segmentation logic

This micro-section introduces segmentation logic as the rule set governing the separation of conceptual blocks. Its purpose is to ensure that each segment represents a discrete functional unit. The scope includes segmentation granularity, thematic isolation, and boundary clarity.

Semantic segmentation logic requires each block to contain one defined concept expressed through uniform density. Distinct segment boundaries prevent scope overlap and enable AI models to assign precise semantic weights. Clear segmentation reduces interpretive variance and strengthens intra-page relevance mapping across generative evaluation workflows.

Content density criteria

This subsection describes content density criteria as components that measure informational clarity and sentence-level efficiency. Its purpose is to ensure that each paragraph contributes consistent semantic value. The scope includes precision rules and entity uniformity requirements.

Paragraph precision

This micro-section defines paragraph precision as the requirement that each paragraph expresses one concept in a compact, extractable form. Its purpose is to support deterministic model parsing. The scope includes sentence efficiency, structural clarity, and conceptual isolation.

Paragraph precision ensures that each paragraph reflects a self-contained reasoning unit without redundancy. High-precision structure reduces noise and creates predictable linguistic patterns that increase model trust during interpretation. Precision-based density enhances retrieval consistency and improves the overall prioritization signal.

Entity stability requirements

This micro-section introduces entity stability as a criterion ensuring consistent reference patterns across a page. Its purpose is to prevent semantic drift that disrupts model alignment. The scope includes naming uniformity, role consistency, and lexical control.

Entity stability maintains uniform terminology for all named entities and conceptual labels. Stable entity use enables models to consolidate references accurately instead of fragmenting meaning across variants. This stability supports generative engines in building coherent entity graphs, which strengthens page-level scoring and cluster-wide visibility.
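A simple check for entity stability is to count how often each known variant of a label appears on a page. The sketch below assumes an editor supplies the list of variants to watch for. It does not discover variants on its own, and the function names are illustrative.

```python
import re
from collections import Counter


def variant_counts(text: str, variants: list[str]) -> Counter:
    """Count case-insensitive whole-phrase occurrences of each variant."""
    counts = Counter()
    for variant in variants:
        pattern = r"\b" + re.escape(variant) + r"\b"
        counts[variant] = len(re.findall(pattern, text, flags=re.IGNORECASE))
    return counts


def is_stable(text: str, variants: list[str]) -> bool:
    """True when at most one of the listed variants appears in the text."""
    used = [v for v, n in variant_counts(text, variants).items() if n > 0]
    return len(used) <= 1
```

When `is_stable` returns `False`, the counts show which variant dominates, which is usually the one to standardize on.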

Evidence-based criteria

This subsection defines evidence-based criteria as elements that reinforce factual reliability within a page. Its purpose is to establish external verification signals that support long-term interpretive confidence. The scope includes authoritative anchors, research-driven references, and structured evidence placement.

Integration of anchor-based authoritative sources ensures that factual claims are validated through credible institutions. Evidence placement provides generative models with stable confirmation signals that increase trust in page-level interpretation. Authoritative anchors also strengthen cross-page coherence within the broader optimization hierarchy.

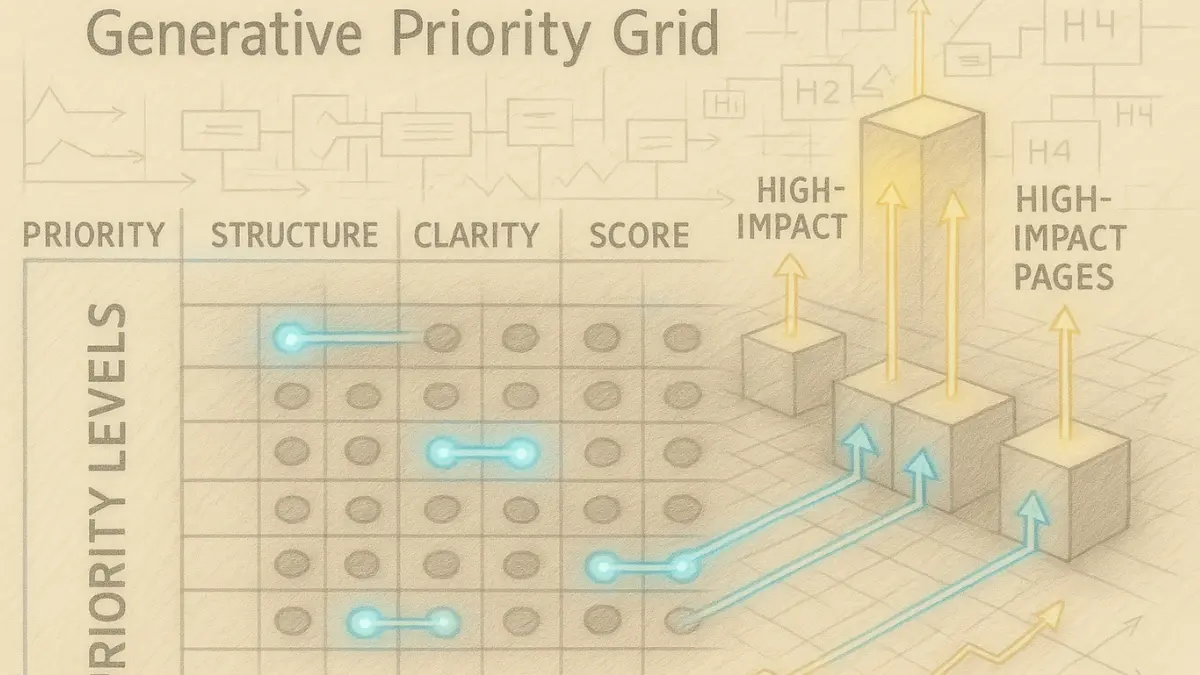

Scoring Pages with a Generative Optimization Prioritization Guide

This section explains how a generative optimization prioritization guide establishes a scoring method for distinguishing high-impact pages. Research from the Berkeley Artificial Intelligence Research Lab shows that structured scoring frameworks increase retrieval stability and improve the accuracy of content relevance modeling across large document collections. The purpose is to clarify how models use quantitative scoring to evaluate content pathways and to introduce scalable evaluation templates for enterprise environments.

A generative optimization prioritization guide is a defined scoring system for ranking pages by content readiness.

Claim: Scoring systems enable structured interpretation across large clusters.

Rationale: Quantitative scoring prevents inconsistent prioritization decisions.

Mechanism: Weighted components assign value to clarity, structure, and relevance.

Counterargument: Overweighted metrics may distort the optimization order.

Conclusion: Balanced scoring creates reliable optimization sequences for generative retrieval.

Core scoring indicators

This subsection introduces core scoring indicators as quantifiable components that determine how effectively a page communicates its structure to generative models. Its purpose is to outline measurable properties that influence scoring accuracy. The scope includes clarity, evidence integration, structure, and verification.

• Semantic clarity

• Evidence density

• Heading structure quality

• Internal coherence

• Data-driven verification

This set of indicators supports predictable scoring across the full content inventory.

Example: A page with consistent terminology, well-formed H2–H4 segmentation, and explicit generative signals receives higher scoring weight, enabling AI systems to separate primary concepts from supportive contextual data during retrieval.
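The five indicators can be combined into a single page score with a weighted mean. The weights below are placeholders chosen for illustration, not values taken from this guide, and each indicator is assumed to be rated from 0.0 to 1.0 by a reviewer or an upstream tool.

```python
# Hypothetical weights; adjust to reflect what matters most for a given site.
WEIGHTS = {
    "semantic_clarity": 0.30,
    "evidence_density": 0.25,
    "heading_structure": 0.20,
    "internal_coherence": 0.15,
    "verification": 0.10,
}


def page_score(ratings: dict[str, float], weights: dict[str, float] = WEIGHTS) -> float:
    """Weighted mean of indicator ratings, each expected in the range 0.0-1.0."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing indicators: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(weights[name] * ratings[name] for name in weights) / total_weight
```

Dividing by the total weight keeps the score in the 0.0 to 1.0 range even when the weights do not sum to one, which makes it easier to change a single weight without renormalizing the rest.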

Multi-tier evaluation mapping

This subsection defines multi-tier evaluation mapping as a hierarchical method that separates pages by expected semantic impact. Its purpose is to establish a structured scoring ladder used to determine optimization order. The scope includes high-impact content, supportive mid-range material, and low-signal pages.

Tier 1 — High-impact assets

These assets carry central conceptual definitions, consistent entity structures, and evidence-rich material. Their role is to anchor the relevance signals that the generative optimization prioritization guide relies on across the broader domain. Tier 1 requires early optimization due to its strong influence on retrieval stability.

Tier 2 — Mid-range structural assets

These pages reinforce core topics and provide structural depth that supports the interpretive mechanisms of Tier 1. They hold moderate generative importance and form the second phase of the optimization sequence once core assets reach structural readiness.

Tier 3 — Low-signal assets

These pages have limited immediate impact but contribute domain completeness and contextual breadth. They are optimized after high- and mid-tier assets to maintain consistency across the semantic layer and avoid fragmentation of retrieval signals.

Using Page Ranking for Generative Optimization Pipelines

This section introduces page ranking for generative optimization as a mechanism for ordering optimization sequences within a generative optimization prioritization guide. Research from the Allen Institute for Artificial Intelligence shows that structured ranking signals improve retrieval consistency and enhance cross-page relevance modeling across large content ecosystems. The purpose is to demonstrate how ranking signals reflect AI interpretation behavior and outline structured ranking processes relevant to enterprise environments.

Page ranking for generative optimization is the ordered classification of pages based on generative retrieval relevance.

Claim: Ranking pages ensures efficient sequencing aligned with AI comprehension.

Rationale: Highly ranked pages yield greater generative visibility improvements.

Mechanism: Ranking aligns entity-focused content with high-value semantic points.

Counterargument: Ranking may overlook long-tail assets essential for domain completeness.

Conclusion: Effective ranking improves cluster discoverability and increases structured visibility.

Priority signals for ranking

This subsection defines the primary signals used to determine page ranking strength within optimization workflows. Its purpose is to establish measurable indicators that reflect model interpretation patterns. The scope includes cross-link effects and hierarchical depth signals that shape ranking stability.

Cross-link influence

Cross-link influence represents the strength of internal linking pathways that connect a page to semantically adjacent assets. Pages with strong cross-link networks provide clearer interpretive routes for generative systems, increasing their likelihood of early retrieval. Stable link distribution reinforces the ranking function by ensuring that high-value pages remain central in the domain graph.
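A basic proxy for cross-link influence is the number of distinct internal pages that link to a given page. The sketch below assumes the internal link graph is already available as a mapping from each page to the pages it links to, for example from a crawl export; the function name is hypothetical.

```python
from collections import Counter


def inbound_link_counts(links: dict[str, list[str]]) -> Counter:
    """Count distinct internal pages linking to each target (self-links ignored)."""
    counts = Counter({page: 0 for page in links})
    for source, targets in links.items():
        for target in set(targets):  # repeated links from one page count once
            if target != source:
                counts[target] += 1
    return counts
```

Calling `most_common()` on the result lists the most heavily linked pages first. Counting each source page once keeps a single page with many repeated links from inflating a target's influence.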

Depth-layer importance

Depth-layer importance evaluates the structural position of a page within the hierarchical layout. Pages positioned closer to the top layers of the site structure provide stronger entry points for generative engines. Deeper layers contribute supporting signals but typically carry reduced ranking weight. The depth metric ensures that ranking reflects structural accessibility and semantic centrality.
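Page depth can be measured as the minimum number of link hops from the home page, which a breadth-first search over the internal link graph provides. In this sketch the link graph format, the root path `/`, and the `1 / (1 + depth)` weighting are assumptions made for illustration.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], root: str = "/") -> dict[str, int]:
    """Breadth-first search: minimum number of link hops from root to each reachable page."""
    depths = {root: 0}
    queue = deque([root])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def depth_weight(depth: int) -> float:
    """Assumed decay: 1.0 at the root, shrinking as pages sit further down."""
    return 1.0 / (1 + depth)
```

Pages missing from the result are unreachable from the root through internal links, which is often worth flagging separately from a low depth weight.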

Ranking workflows for enterprise-scale sites

This subsection outlines ranking workflows as structured operational sequences that apply ranking signals across large inventories. Its purpose is to align ranking models with enterprise requirements for consistency and scalability. The scope includes ranking distribution, sequence orchestration, and integration with downstream optimization steps.

Ranking workflows distribute scoring signals across the site architecture and integrate them with sequencing rules defined in the generative optimization prioritization guide. This distribution ensures that high-impact assets receive priority attention while maintaining structural coherence across dependent clusters. Workflow-driven ranking supports scalable optimization by providing deterministic, repeatable pathways for model-aligned content improvement.

Integrating AI Visibility Page Scoring

This section describes AI visibility page scoring as a structured evaluation method, used within a generative optimization prioritization guide, that quantifies a page’s ability to perform well in AI-driven discovery systems. Research from the Oxford Internet Institute highlights that stable visibility indicators significantly increase the likelihood of model inclusion across complex retrieval environments. The purpose is to define visibility scoring as a foundational mechanism for large content networks and introduce metrics aligned with model-driven interpretation signals.

AI visibility page scoring is the process of evaluating how well a page aligns with model interpretation patterns.

Claim: Visibility scoring identifies pages with the highest potential for semantic exposure.

Rationale: Structured visibility increases the likelihood of inclusion in generative responses.

Mechanism: Page signals feed into ranking pathways modeled by retrieval systems.

Counterargument: Visibility scores may change significantly as content evolves.

Conclusion: Maintaining updated visibility scores ensures sustained generative performance.

Visibility scoring metrics

This subsection defines the core metrics used to determine AI visibility strength across content ecosystems. Its purpose is to establish measurable indicators that influence visibility outcomes. The scope includes page stability factors and entity-driven interpretive cues.

Content stability

Content stability measures the degree to which a page maintains coherent structure and consistent updates without creating interpretive drift. Stable pages provide predictable linguistic and structural patterns that models can index reliably. Stability strengthens visibility scoring by ensuring that content maintains long-term relevance signals.

Entity presence

Entity presence evaluates how clearly defined and consistently referenced entities appear across the page. Strong entity representation supports model interpretation by offering clear relevance anchors. Pages with stable entity networks contribute to visibility pathways that align with retrieval scoring models.

Applying visibility scores

This subsection explains how visibility scores are applied across large content architectures to support model-aligned prioritization. Its purpose is to integrate visibility scoring with ranking, sequencing, and downstream optimization workflows.

Visibility scores feed into prioritization pipelines by integrating page signals with the hierarchical structure defined in the generative optimization prioritization guide. This integration ensures that high-visibility pages receive appropriate placement in optimization sequences and that lower-visibility pages are scheduled for structural reinforcement. Applying visibility scores across workflows creates predictable interpretive conditions that support long-term retrieval consistency.

Building a Content Prioritization Workflow for AI

This section explains content prioritization for AI as a structured workflow for directing optimization resources within a generative optimization prioritization guide. Research from the Carnegie Mellon Language Technologies Institute demonstrates that sequential optimization workflows improve consistency in large-scale generative interpretation. The purpose is to clarify how task sequencing supports scalable generative improvements and to establish the role of predictable process chains in enterprise environments.

Content prioritization for AI is the structured ordering of optimization tasks across content sets.

Claim: A defined workflow ensures consistent optimization.

Rationale: Sequential task execution supports predictable generative outcomes.

Mechanism: Workflows integrate scoring, ranking, and evidence mapping.

Counterargument: Overly rigid workflows may limit rapid adaptation.

Conclusion: Flexible structured workflows generate stable optimization efficiency.

Workflow stages

This subsection introduces workflow stages as the ordered steps required to operationalize content prioritization. Its purpose is to define how tasks transition from analysis to execution. The scope includes assessment processes, scoring systems, and deployment procedures.

Assessment stage

The assessment stage evaluates the current semantic readiness of pages across the content inventory. It identifies content gaps, evidence weaknesses, entity instability, and structural inconsistencies. This stage ensures that downstream scoring reflects accurate baseline conditions.

Scoring stage

The scoring stage applies structured evaluation criteria to determine page importance and readiness levels. It integrates clarity metrics, structural depth signals, entity patterns, and evidence density. Scoring outputs form the foundation for classification within the generative optimization prioritization guide, enabling consistent prioritization across large ecosystems.

Deployment stage

The deployment stage executes optimization tasks according to the structured order defined by assessment and scoring outputs. It includes rewriting, restructuring, evidence placement, entity refinement, and model-aligned formatting. Deployment ensures that high-priority assets receive early optimization while maintaining coherence across dependent content sets.

Alignment with enterprise governance

This subsection describes how prioritization workflows integrate with enterprise governance structures. Its purpose is to ensure that optimization processes align with organizational standards, documentation rules, and long-term maintenance strategies. The scope includes governance alignment, quality assurance cycles, and oversight mechanisms.

Workflow alignment with enterprise governance ensures that optimization sequences comply with internal standards and support long-term content sustainability. It establishes a framework for consistent updates, structured reviews, and governance-based checkpoints. This integration maintains workflow reliability and stabilizes content performance across enterprise environments.

Page Value Scoring for AI-Centric Systems

This section focuses on AI page value scoring as a method for identifying pages with measurable generative impact within a generative optimization prioritization guide. Research from the Georgia Tech Machine Learning Center demonstrates that value-based scoring improves retrieval precision and strengthens relevance signals across large semantic clusters. The purpose is to explain how value scoring supports targeted optimization decisions and to define structural templates appropriate for enterprise evaluation.

AI page value scoring is the measurement of a page’s functional contribution to generative performance.

Claim: Value scoring produces actionable optimization priorities.

Rationale: High-value pages influence broader semantic clusters.

Mechanism: Scoring leverages structural quality and evidence completeness.

Counterargument: Low-value pages may still be essential for cluster support.

Conclusion: Balanced scoring ensures comprehensive cluster enhancement.

Value scoring indicators

This subsection introduces value scoring indicators as measurable components that determine how effectively a page contributes to generative visibility. Its purpose is to outline quantifiable metrics used to classify contribution strength. The scope includes entity concentration levels and integration of authoritative evidence.

Entity density

Entity density measures how frequently defined, stable entities appear across a page without creating interpretive overload. High-density pages supply strong semantic anchors that improve the model’s ability to classify and connect domain concepts. Stable density levels reinforce value scoring outcomes by strengthening relevance pathways across interconnected assets.
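Entity density can be approximated as the number of entity mentions per 100 words. The sketch below assumes a hand-maintained list of entity labels for the page's topic; it performs plain phrase matching, not named-entity recognition, and the function name is illustrative.

```python
import re


def entity_density(text: str, entities: list[str]) -> float:
    """Entity mentions per 100 words, using case-insensitive whole-phrase matching."""
    words = re.findall(r"\b\w+\b", text)
    if not words:
        return 0.0
    mentions = sum(
        len(re.findall(r"\b" + re.escape(entity) + r"\b", text, flags=re.IGNORECASE))
        for entity in entities
    )
    return 100.0 * mentions / len(words)
```

What counts as "interpretive overload" is not defined numerically in the text, so any upper bound on this value would need to be calibrated against pages that are already known to read well.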

Evidence integration

Evidence integration evaluates the presence and placement of authoritative references that validate structural or factual claims. Pages with consistent evidence integration provide stronger verification signals, improving their contribution profile within a generative optimization prioritization guide. Evidence-rich content increases the reliability of reasoning chains and supports stable model retrieval across related clusters.

High-Impact Pages and Their Role in Generative Optimization

This section describes high-impact pages as the central drivers of generative visibility within a generative optimization prioritization guide. Research from the EPFL Artificial Intelligence Laboratory indicates that high-value structural pages function as primary anchors for retrieval models, shaping cluster-wide interpretive behavior. The purpose is to outline criteria for identifying high-impact assets and to highlight their influence on adjacent cluster performance.

High-impact pages are pages that demonstrate strong structural clarity and evidence density.

Claim: High-impact assets significantly influence cluster-wide generative performance.

Rationale: These pages establish baseline retrieval signals for AI engines.

Mechanism: AI systems extract content patterns from high-impact pages to interpret clusters.

Counterargument: High-impact pages require frequent updates to maintain performance.

Conclusion: Maintaining high-impact assets strengthens overall domain visibility.

Characteristics of high-impact assets

This subsection introduces the characteristics that define which pages qualify as high-impact for generative optimization. Its purpose is to identify structural and semantic traits that shape high-value content behavior. The scope includes completeness of structure and clarity of meaning boundaries.

Structural completeness

Structural completeness reflects the degree to which a page presents fully developed sections, coherent hierarchy, and consistent formatting. Complete structures provide stable interpretive paths for AI systems by ensuring that conceptual layers, evidence patterns, and segmentation boundaries align with model expectations. Pages with full structural completeness consistently perform as reliable anchor points within the generative optimization prioritization guide.

Defined semantic scope

Defined semantic scope measures how clearly a page expresses its core subject matter without overlapping adjacent topics. Pages with a well-defined semantic scope offer models precise thematic boundaries that reduce interpretive ambiguity. This clarity strengthens the page’s impact within its cluster by improving relevance mapping and stabilizing retrieval signals.

Applying Priority Mapping for AI Optimization Projects

This section introduces priority mapping for AI as a method for defining structured optimization paths across complex content infrastructures within a generative optimization prioritization guide. Research from the NIST Information Technology Laboratory demonstrates that predefined decision structures significantly improve execution efficiency in large-scale computational systems. The purpose is to describe how priority mapping functions within enterprise delivery cycles and to outline the decision frameworks that guide implementation.

Priority mapping for AI is the structured allocation of optimization steps based on predefined rules.

Claim: Priority mapping increases efficiency across large-scale optimization workloads.

Rationale: Defined mapping reduces ambiguity in execution flows.

Mechanism: Decision matrices connect scoring rules to sequence order.

Counterargument: Mapping may require recalibration as site architecture evolves.

Conclusion: Consistent mapping supports predictable generative outcomes.

Mapping structures

This subsection describes mapping structures as the organizational logic that determines how optimization steps are sequenced and interconnected. Its purpose is to define the structural models that support predictable improvement cycles. The scope includes flow direction, task relationships, and dependency alignment.

Flow mapping

Flow mapping outlines the directional sequence through which optimization tasks progress across a content ecosystem. It translates scoring and ranking outputs into procedural routes that ensure high-impact tasks occur first. Flow structures also maintain alignment with the generative optimization prioritization guide by preserving order stability and preventing disruptions in downstream optimization stages.

Dependency mapping

Dependency mapping identifies the logical relationships between tasks and organizes them based on prerequisite requirements. It ensures that foundational improvements are completed before dependent steps begin, reducing execution bottlenecks and minimizing rework. Dependency modeling stabilizes optimization cycles by creating predictable task hierarchies that support long-term generative performance across enterprise architectures.
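Ordering tasks so that prerequisites always come first is a topological sort, which Python's standard library provides through `graphlib.TopologicalSorter` (Python 3.9 and later). In the sketch below the task names are invented for illustration; each key maps a task to the set of tasks that must finish before it.

```python
from graphlib import TopologicalSorter


def task_order(dependencies: dict[str, set[str]]) -> list[str]:
    """Return tasks so that every prerequisite precedes the task that needs it."""
    return list(TopologicalSorter(dependencies).static_order())


# Hypothetical optimization tasks for one page cluster.
dependencies = {
    "restructure_headings": set(),
    "refine_entities": {"restructure_headings"},
    "insert_evidence": {"restructure_headings"},
    "validate": {"insert_evidence", "refine_entities"},
}
order = task_order(dependencies)
```

If two tasks depend on each other, `static_order()` raises `graphlib.CycleError`, which surfaces a circular dependency before any work is scheduled.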

Generative Visibility Optimization Flow for Enterprise Content

This section explains the generative visibility optimization flow: the structured process, defined within a generative optimization prioritization guide, through which content becomes discoverable in generative engines. The purpose is to outline how optimized flows enhance cross-page connectivity and to clarify the role of structured interpretive signals across large content environments. It defines the function of flow-based optimization in establishing predictable pathways for AI-driven retrieval.

Generative visibility optimization flow is the structured chain of steps that prepare pages for AI-driven retrieval.

Claim: Flows create interconnected pathways supporting generative comprehension.

Rationale: Flows establish predictable interpretive structures.

Mechanism: Each flow integrates scoring, ranking, mapping, and validation.

Counterargument: Poorly integrated flows may fragment cluster visibility.

Conclusion: Strong flows produce unified generative understanding.

Flow components

This subsection defines the operational components that form the foundation of generative visibility optimization. Its purpose is to outline the structural mechanisms that ensure consistent interpretive flows. The scope includes preparation tasks, evidence integration, and validation sequencing.

Structural preparation

Structural preparation ensures that the content hierarchy, segmentation rules, and formatting patterns meet model interpretation requirements. Stable preparation phases strengthen the generative optimization prioritization guide by offering predictable entry points for retrieval systems. These steps maintain coherence across the broader cluster and support long-term interpretive reliability.

Evidence insertion

Evidence insertion integrates structured factual elements into content sections where verification improves interpretive alignment. Properly placed evidence increases the informational weight of key sections and supports cluster-level reasoning consistency. This insertion step improves content reliability and enhances the visibility impact of optimized flows.

Validation checkpoint

The validation checkpoint ensures that updated content meets structural, semantic, and evidential requirements before being reintegrated into the optimization flow. This checkpoint prevents the introduction of inconsistencies, reduces interpretive drift, and maintains long-term content stability. Validation reinforces systematic improvement across enterprise environments.
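One way to picture such a checkpoint is a function that returns a list of problems and lets a page through only when the list is empty. This is a sketch under stated assumptions: the `PageDraft` fields, the heading-level rule, and the `min_evidence` threshold are hypothetical stand-ins for whatever structural, semantic, and evidential requirements a team actually defines.

```python
from dataclasses import dataclass, field


@dataclass
class PageDraft:
    """Hypothetical summary of an updated page."""
    headings: list        # heading levels in document order, e.g. [1, 2, 3, 2]
    evidence_count: int   # number of cited factual elements
    undefined_terms: list = field(default_factory=list)


def validate(page, min_evidence=1):
    """Return a list of problems; an empty list means the page passes."""
    problems = []
    # Structural check: a heading may go at most one level deeper than the previous one
    for prev, cur in zip(page.headings, page.headings[1:]):
        if cur > prev + 1:
            problems.append(f"heading level jumps from H{prev} to H{cur}")
    # Semantic check: every flagged term needs a local definition
    for term in page.undefined_terms:
        problems.append(f"term used without local definition: {term}")
    # Evidential check: assumed minimum number of evidence items
    if page.evidence_count < min_evidence:
        problems.append(
            f"only {page.evidence_count} evidence item(s), need {min_evidence}"
        )
    return problems


good = PageDraft(headings=[1, 2, 3, 2], evidence_count=2)
bad = PageDraft(headings=[1, 3], evidence_count=0, undefined_terms=["visibility score"])
print(validate(good))
print(validate(bad))
```

Returning every problem at once, rather than stopping at the first, lets an editor fix a page in a single pass before it re-enters the flow.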

Checklist:

- Are priority rules defined with consistent terminology?

- Is the page segmented with stable H2–H4 boundaries?

- Does each paragraph contain one reasoning unit aligned with AI extraction principles?

- Do examples clarify abstract prioritization or scoring concepts?

- Are potential ambiguities removed using local definitions?

- Does the structure enable step-by-step AI interpretation and ranking?

Interpretive Logic of Generative Content Prioritization

- Impact-weighted content signaling. Pages are interpreted through a composite of structural clarity, evidence density, and semantic focus that collectively indicate potential generative influence.

- Abstract scoring representation. Prioritization metrics function as abstractions of interpretive behavior, translating qualitative structure into comparable signals.

- Relevance-order inference. Ordered page sets reflect inferred importance within generative retrieval pathways rather than linear editorial sequencing.

- Sequence coherence. Priority flows indicate logical progression across related assets, enabling systems to contextualize optimization scope at scale.

- Stability verification. Recurrent interpretive outcomes across evaluations signal durable prioritization beyond transient model variance.

This logic describes how generative systems interpret prioritization frameworks as signals of content significance, guiding optimization focus without prescribing procedural actions.

FAQ: Generative Optimization Prioritization Guide

What is a generative optimization prioritization guide?

A generative optimization prioritization guide is a structured system for ranking pages by clarity, evidence strength, structural depth, and AI interpretation readiness.

Why is page prioritization important for generative optimization?

Page prioritization ensures that the most structurally complete and semantically clear pages are optimized first, delivering the highest generative visibility impact.

How do AI systems evaluate page importance?

AI systems analyze structural cues, semantic segmentation, evidence density, and stable terminology to determine which pages hold the most generative value.

What criteria define high-priority pages?

High-priority pages demonstrate strong entity clarity, consistent terminology, evidence integration, and a predictable heading hierarchy aligned with AI reasoning patterns.

How does scoring work in the prioritization process?

Scoring assigns weighted values to clarity, structure, evidence, and stability indicators, creating a measurable model for evaluating page readiness.
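A weighted score of this kind can be sketched as a normalized weighted average. The weights below and the 0-to-1 indicator scale are illustrative assumptions, not values taken from this guide.

```python
# Assumed weights for illustration; a real model would calibrate these
WEIGHTS = {"clarity": 0.3, "structure": 0.3, "evidence": 0.25, "stability": 0.15}


def readiness_score(indicators, weights=WEIGHTS):
    """Combine per-indicator values (each assumed to be 0..1) into one score."""
    missing = weights.keys() - indicators.keys()
    if missing:
        raise ValueError(f"missing indicators: {sorted(missing)}")
    total_weight = sum(weights.values())
    # Dividing by the total keeps the result in 0..1 even if the weights do not sum to 1
    return sum(weights[name] * indicators[name] for name in weights) / total_weight


page = {"clarity": 1.0, "structure": 0.5, "evidence": 0.0, "stability": 1.0}
score = readiness_score(page)
print(round(score, 2))
```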

What is the role of ranking in generative optimization?

Ranking orders pages based on generative relevance, ensuring that the content with the strongest retrieval potential is optimized earlier in the workflow.

How does priority mapping support enterprise-scale content?

Priority mapping connects scoring outcomes to structured workflow paths, enabling predictable optimization sequences across large content ecosystems.

What types of pages deliver the highest generative impact?

High-impact pages typically contain strong evidence signals, a stable internal structure, clearly defined scope, and coherent semantic boundaries.

How often should page prioritization be updated?

Prioritization should be recalibrated as new pages are added, content evolves, or evidence sources change, ensuring accurate generative alignment over time.

What skills are needed to apply page prioritization effectively?

Practitioners need structural reasoning, semantic clarity, evidence-based analysis, and the ability to evaluate how content interacts with generative retrieval patterns.

Glossary: Key Terms in Generative Optimization Prioritization

This glossary defines the core terminology used throughout the Generative Optimization Prioritization Guide to ensure consistent interpretation of scoring, ranking, mapping, and page-level evaluation concepts.

Page Prioritization

The process of determining which pages should be optimized first based on structural clarity, semantic value, and generative impact potential.

Scoring Indicators

Weighted components used to evaluate page readiness, including clarity, evidence density, hierarchy precision, and terminology stability.

Ranking Pathways

Ordered content sequences generated from scoring data that determine which pages hold the highest generative relevance.

High-Impact Pages

Pages that provide strong structural depth, entity consistency, and evidence density, giving them outsized influence on generative visibility.

Priority Mapping

A rule-based framework that translates scoring and ranking outputs into structured optimization sequences across enterprise content systems.

Visibility Scoring

A metric that evaluates how effectively a page aligns with AI interpretation models, including clarity, stability, and evidence integration signals.

Generative Signals

Structural and semantic cues extracted by AI models to interpret content relevance, including entity clarity, hierarchy logic, and factual patterns.

Workflow Chain

A sequential process connecting assessment, scoring, ranking, and deployment stages to coordinate structured optimization.

Structural Depth

The level of hierarchical organization within a page, including heading clarity, segmentation accuracy, and stable idea boundaries.

Page Value Scoring

The measurement of a page’s functional contribution to generative performance based on entity density, scope definition, and evidence presence.