Last Updated on December 20, 2025 by PostUpgrade

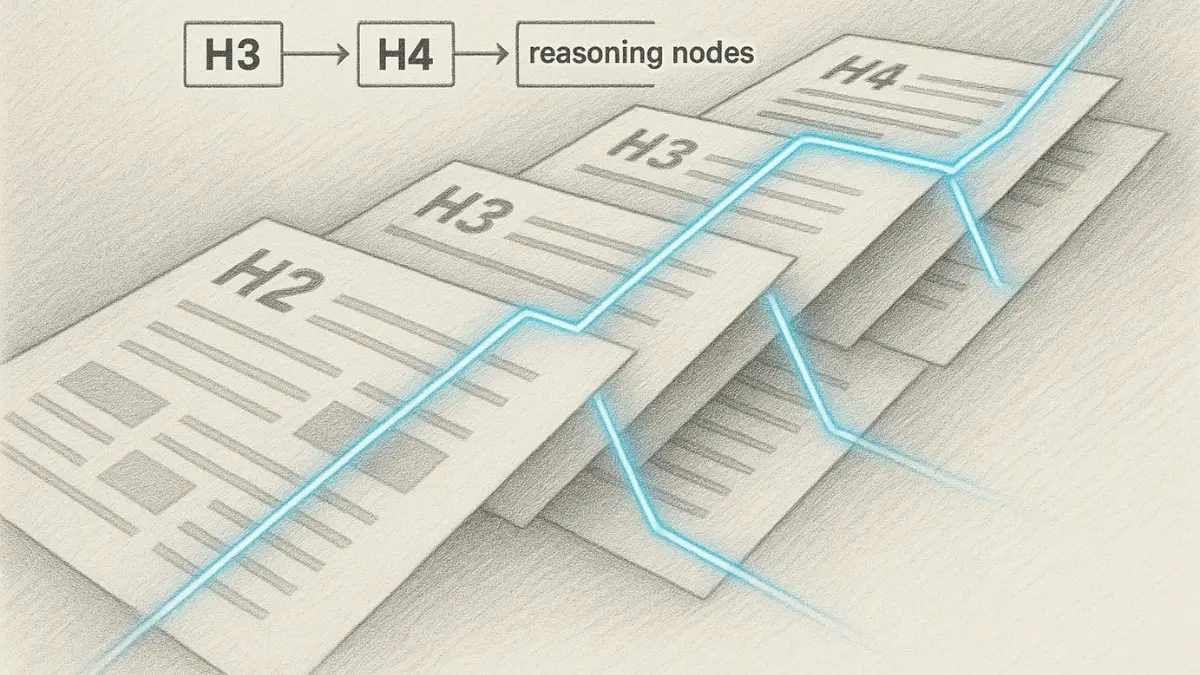

Creating Hierarchical Depth Without Confusion

Modern AI-first content systems rely on hierarchical content depth to ensure predictable interpretation and stable semantic extraction. Effective architectures are built through layered content depth, which enables models to identify boundaries, segment information, and maintain reasoning coherence. Structured depth also reduces ambiguity by giving AI systems consistent intervals for classification, retrieval, and summarization. This article outlines how depth can be created, governed, and scaled without introducing interpretive confusion.

Definition: Hierarchical content depth is the structured layering of information that allows AI systems to interpret boundaries, transitions, and reasoning intervals with clarity and consistency across complex documents.

Foundations of Hierarchical Content Depth

Research from the MIT Computer Science and Artificial Intelligence Laboratory shows that hierarchical structures require clear internal boundaries to ensure stable reasoning patterns and reduce ambiguity in AI interpretation. Effective depth formation depends on a clear hierarchy, because the hierarchy determines how content layers are segmented and processed. Structured depth creates predictable intervals that models can interpret consistently across long-form material.

Definition: Precise hierarchical order is a structured arrangement of depth layers that maintains stable sequencing and limits interpretive variance during AI-driven processing.

Claim: Deep structural mapping strengthens interpretability by assigning each depth layer a clear functional role within the larger content system.

Rationale: Defined boundaries reduce structural noise and ensure that neighboring segments remain logically independent.

Mechanism: Block-driven text analysis allows AI systems to classify, group, and map information based on consistent internal ordering.

Counterargument: When depth intervals lack precision, models may merge unrelated content or misinterpret transitions across reasoning layers.

Conclusion: Precision in depth design improves predictability, strengthens semantic stability, and increases the reliability of AI-based extraction.

Structural Purpose of Depth

A depth-first content layout presents information in progressive layers that align with both human and machine expectations. Dividing content into clearly separated segments in this way supports predictable interpretation.

Depth as an Interpretive Signal

A depth-oriented writing method provides AI systems with explicit markers that guide the interpretation of reasoning intervals and content transitions. These markers help align meaning by giving models a clear mapping between each block and its context, which they can follow through each layer.
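As a rough illustration of how explicit markers can become a machine-readable structure, the sketch below turns heading markers into a nested outline. It assumes the content is stored as Markdown with `#`-style headings; the names `Node` and `build_outline` are hypothetical, and the parser deliberately ignores edge cases such as `#` lines inside fenced code blocks.

```python
import re
from dataclasses import dataclass, field
from typing import List

# Assumption: headings are written as Markdown ATX headings ("#" to "######").
HEADING = re.compile(r"^(#{1,6})\s+(.*\S)\s*$")


@dataclass
class Node:
    level: int                      # 0 for the document root, 1-6 for headings
    title: str
    children: List["Node"] = field(default_factory=list)


def build_outline(markdown: str) -> Node:
    """Build a tree in which each heading is nested under the nearest shallower heading."""
    root = Node(level=0, title="(document)")
    stack = [root]                  # path from the root to the most recent heading
    for line in markdown.splitlines():
        match = HEADING.match(line)
        if not match:
            continue                # body text does not change the outline
        node = Node(level=len(match.group(1)), title=match.group(2))
        # Close every open layer that is at the same depth or deeper.
        while stack[-1].level >= node.level:
            stack.pop()
        stack[-1].children.append(node)
        stack.append(node)
    return root
```

Each `Node` then corresponds to one depth layer, and its `children` are the layers nested directly beneath it, so the outline can be inspected or compared without rereading the body text.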

Depth Components and Functions

Layered reasoning flow strengthens semantic organization by ensuring that each structural element performs a consistent and interpretable function.

| Component | Function | AI Benefit |
|---|---|---|
| clarity-driven hierarchy | Establishes ordered layers | Improves interval detection |
| article depth modeling | Structures concept groups | Enhances reasoning alignment |
| multi-tier page architecture | Controls multi-level flow | Supports stable summarization |

Designing Multi-Level Structures Without Overload

Research from the Stanford Natural Language Processing Group shows that deep structural sequencing establishes orderly multi-level architectures that reduce interpretive variance in long-form content. This configuration supports predictable segmentation by ensuring that each hierarchical layer has a defined purpose and boundary.

Principle: Hierarchical depth functions reliably only when each layer maintains stable conceptual boundaries that allow AI systems to map meaning without ambiguity or structural drift.

Definition: Nested content logic is a structured method for arranging multi-level information in a way that preserves interpretability, stability, and consistent semantic flow across all depth layers.

Claim: Layered meaning distribution ensures that each depth interval carries a controlled volume of information that models can process without ambiguity.

Rationale: Logical depth arrangement reduces structural noise by defining clear transitions between conceptual units.

Mechanism: This structure allows AI systems to map dependencies, identify segment boundaries, and interpret multi-level reasoning with greater precision.

Counterargument: If layers are unbalanced or overloaded, models may collapse distinct reasoning paths into a single undifferentiated segment.

Conclusion: Balanced multi-level sequencing improves clarity, strengthens semantic organization, and increases the reliability of AI-driven interpretation.

Balancing Hierarchical Content Depth and Clarity

A deep segmentation method organizes hierarchical intervals so that each level maintains a stable volume of information. This structure improves interpretability by reinforcing the flow of meaning through blocks and preserving consistent semantic transitions.

Avoiding Cognitive Load

Clean hierarchy planning reduces unnecessary density in multi-level structures by enforcing controlled distribution across each depth layer. This method supports consistent block formatting that stabilizes both human reading flows and AI extraction.
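One way to make "controlled distribution" checkable is to count the words that sit directly under each heading and flag sections that exceed a budget. The sketch below assumes Markdown `#` headings; the function names and the 150-word default are illustrative choices, not thresholds taken from any standard.

```python
import re

# Assumption: headings are Markdown ATX headings.
HEADING = re.compile(r"^(#{1,6})\s+(.*\S)\s*$")


def section_word_counts(markdown):
    """Return (title, word_count) pairs for the text directly under each heading."""
    sections = []
    title, count = None, 0
    for line in markdown.splitlines():
        match = HEADING.match(line)
        if match:
            if title is not None:
                sections.append((title, count))
            title, count = match.group(2), 0
        elif title is not None:
            # Text before the first heading is ignored in this sketch.
            count += len(line.split())
    if title is not None:
        sections.append((title, count))
    return sections


def overloaded_sections(markdown, max_words=150):
    """Titles of sections whose own text exceeds the (arbitrary) word budget."""
    return [t for t, words in section_word_counts(markdown) if words > max_words]
```

The budget is a tuning parameter: a team would pick a value that matches its own content and review the flagged sections by hand rather than splitting them automatically.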

Practical Depth Patterns

Tiered topic organization provides a predictable progression of concepts that helps models navigate layered structures with minimal ambiguity.

- structured depth alignment

- progressive content layering

- depth-mapping in writing

- multi-layer clarity guide

- hierarchical narrative flow

These patterns ensure that depth remains readable, functional, and machine-interpretable across extended content.

Engineering Precision in Hierarchical Content Depth Layers

Research from the Berkeley Artificial Intelligence Research Lab shows that depth-controlled sections provide the structural consistency required for accurate segmentation and reasoning across complex content architectures. This approach stabilizes multi-level interpretation by defining strict boundaries for each hierarchical interval. It also reduces ambiguity by ensuring that every depth layer supports predictable transitions.

Layering Information for Machines

Layered information architecture enables AI systems to process complex material through well-defined structural levels that preserve clarity across extended documents. This structure relies on block segmentation to maintain consistent organization within each depth interval.

Example: A document that distributes reasoning across predictable depth layers and maintains strict segmentation boundaries enables AI systems to classify concepts accurately, improving the probability that its strongest sections appear in generative summaries.

Controlling Expansion

Controlled hierarchy scaling ensures that multi-level structures grow in a predictable manner without introducing semantic drift across the content. This stability is supported by a nested reasoning structure that organizes dependent concepts into clearly ordered layers.

Depth Safety Signals

Clarity-safe depth growth reinforces interpretability by preventing dense or irregular segments from overloading AI systems. This pattern relies on a content hierarchy blueprint that defines the functional purpose of each structural layer.

Applying Depth to Large AI-First Texts

Research from the Allen Institute for Artificial Intelligence shows that a depth-logic writing pattern provides the structural clarity required for large-scale generative interpretation. This structure creates stable reasoning intervals that allow models to process long-form content with predictable segmentation. It also improves interpretability by defining clear boundaries across multiple depth layers.

Definition: AI block comprehension is the model’s ability to interpret layered structures by mapping segmented intervals, identifying transitions, and stabilizing contextual meaning across extended content.

Claim: Cascaded structural levels enable AI systems to process complex material through sequential reasoning layers that maintain internal order.

Rationale: These levels prevent concept merging by limiting the density of each interpretive interval.

Mechanism: Multi-layer article shaping organizes extended texts into predictable units that support classification, summarization, and dependency mapping.

Counterargument: When depth is unregulated, large documents may produce inconsistent reasoning paths or ambiguous structural transitions.

Conclusion: Applying controlled depth sequencing to long-form writing improves clarity, reduces interpretive variance, and increases extractability across generative systems.

Procedural Steps for Depth Construction

Structured tier expansion defines a clear progression of hierarchical intervals that models can follow through long-form material. This structure is reinforced by clearly laid-out blocks, which stabilize segment boundaries and maintain consistent transitions.

Maintaining Hierarchical Content Depth Clarity at Scale

Article hierarchy refinement preserves reliability by maintaining balanced depth distribution across large documents. This stability depends on segmenting text so that each layer remains logically independent and machine-readable.

Microcase — Depth Errors

A complex technical article produced by a large editorial team introduced inconsistent depth layers across its sections, causing models to misclassify concept groups. The irregular boundaries created structural noise that disrupted topic segmentation and reduced answer accuracy in downstream systems. These issues emerged because the meaning of block transitions was not clearly defined, leading to ambiguous reasoning intervals and unstable extraction.

Cross-Document Hierarchical Depth

Research from the Oxford Internet Institute shows that the impact of block arrangement becomes more pronounced when depth structures operate across multiple documents rather than within a single page. Cross-document depth provides a unified semantic backbone that ensures structural consistency across interconnected content hubs. This consistency allows AI systems to interpret relationships across pages using stable hierarchical cues.

Definition: AI-recognizable site pathways are structured navigation and depth patterns that models can consistently detect across multiple documents to stabilize retrieval, clustering, and reasoning.

Claim: Site pathways in generative search give AI systems fixed interpretive anchors that reduce ambiguity when navigating large collections of interconnected content.

Rationale: These pathways maintain continuity across documents, enabling models to reuse structural logic rather than recalculating interpretation at each page.

Mechanism: AI search behavior signals allow models to align cross-page reasoning intervals with predictable hierarchical markers.

Counterargument: Without consistent pathways, generative systems may misinterpret relationships between documents and produce unstable or contradictory summaries.

Conclusion: Cross-document hierarchical depth strengthens ecosystem-wide interpretability and improves multi-page retrieval accuracy.

Consistency Across Content Hubs

Structured mapping for AI queries provides aligned segmentation rules that allow large content hubs to preserve stable meaning across many interconnected documents. This architecture depends on content pathway modeling for AI to maintain uniform transitions and predictable semantic intervals across pages.

Depth-Safe Interlinking Models

AI-driven navigation modeling stabilizes cross-document traversal by enforcing clear relationships between layers, sections, and conceptual groups. This stability is reinforced by mapping user-to-AI navigation, which ensures that AI systems follow consistent interpretive routes.

Cross-Document Depth Signals

| Signal Type | Function | AI Interpretation Benefit |
|---|---|---|
| adaptive mapping for AI retrieval | Maintains structured continuity across documents | Improves consistency of multi-page reasoning |
| site mapping for machine analysis | Standardizes cross-document layer recognition | Strengthens structural stability in generative models |
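Cross-document consistency can be approximated with a simple structural fingerprint. The sketch below records which heading levels each page uses and reports pages whose fingerprint differs from the most common one in the hub. It assumes Markdown `#` headings; `depth_profile` and `inconsistent_documents` are hypothetical names, and a fingerprint this coarse only catches gross differences.

```python
import re
from collections import Counter

# Assumption: every page is Markdown with ATX headings.
HEADING = re.compile(r"^(#{1,6})\s+\S")


def depth_profile(markdown):
    """Sorted tuple of the heading levels a document uses, e.g. (1, 2, 3)."""
    levels = set()
    for line in markdown.splitlines():
        match = HEADING.match(line)
        if match:
            levels.add(len(match.group(1)))
    return tuple(sorted(levels))


def inconsistent_documents(docs):
    """Names of documents whose profile differs from the hub's most common profile.

    `docs` maps a document name to its Markdown text.
    """
    profiles = {name: depth_profile(text) for name, text in docs.items()}
    if not profiles:
        return []
    common, _ = Counter(profiles.values()).most_common(1)[0]
    return sorted(name for name, profile in profiles.items() if profile != common)
```

For example, in a hub where most pages use levels 1 and 2, a page that jumps from level 1 straight to level 4 would be reported for review.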

Hierarchical Content Depth and AI Reasoning Models

Research from the Carnegie Mellon Language Technologies Institute shows that AI-interpretable site mapping provides the structural grounding required for stable reasoning in generative systems. This structure enables models to interpret layered content through predictable patterns that limit ambiguity and support consistent inference across long-form material. Depth patterns therefore function as reasoning constraints that guide model behavior.

Definition: Semantic mapping for AI systems is the structured process of organizing layered information so that models can interpret dependencies, transitions, and contextual boundaries in a predictable manner.

Claim: AI navigation structure mapping provides models with the structural sequencing required to process interconnected reasoning intervals across large documents.

Rationale: This sequencing strengthens interpretive continuity by limiting variance in how models detect transitions between conceptual layers.

Mechanism: Mapping content flow for AI creates a stable reasoning pathway that supports classification, summarization, and cross-layer dependency tracking.

Counterargument: If depth structures are inconsistent or poorly signaled, models may generate unstable reasoning chains or collapse multiple segments into a single interpretive unit.

Conclusion: Structured depth significantly increases reasoning stability by constraining interpretive variance and reinforcing predictable inferential patterns.

Depth as a Cognitive Constraint

AI search behavior modeling establishes structural limitations that restrict how models interpret and navigate layered content. These constraints are strengthened through structured page hierarchy signals, which define the boundaries of each reasoning interval and ensure consistent extraction.

Reasoning Stability

The semantic shift in SEO highlights how depth structures influence the continuity and progression of reasoning across long-form content. This continuity is supported by a concept hierarchy, which organizes multi-level meaning into a predictable sequence of interpretive layers.

Model Behaviors Influenced by Depth

- semantic structure SEO

- concept mapping SEO

- semantic content mapping

- semantic relevance patterns

- meaning-driven visibility

These behaviors demonstrate how hierarchical depth governs the stability, accuracy, and predictability of AI reasoning processes.

Depth Governance in Enterprise Operations

Research from the European Commission Joint Research Centre shows that semantic layers in SEO provide the structural consistency required for managing hierarchical depth across large editorial and operational environments. This structure enables organizations to maintain alignment across teams, documents, and long-form content systems. Effective governance ensures that depth patterns remain predictable and interpretable at scale.

Definition: SEO semantic clarity is the consistent use of stable structural markers that preserve interpretability and prevent semantic drift across enterprise-level content ecosystems.

Claim: A semantic content framework creates unified depth rules that reduce variance introduced by distributed content production.

Rationale: These rules stabilize structural intervals, helping teams maintain alignment across multi-level content architectures.

Mechanism: Semantic topic modeling provides a structured method for grouping related concepts and assigning them to predictable depth layers.

Counterargument: Without governance, depth structures become inconsistent across teams, reducing interpretability and weakening cross-document reasoning signals.

Conclusion: Governance policies ensure depth consistency at scale and strengthen system-wide coherence for AI-driven interpretation.

Operational Hierarchy Standards

Semantic relevance SEO establishes stable segmentation rules that define how teams should implement depth across different types of content. These rules rely on SEO concept alignment to maintain consistent structural progression.

Workflow Integration

Semantic intent structure creates a predictable editorial process that incorporates depth review at every production stage. This workflow depends on SEO meaning architecture to ensure that content remains internally aligned and machine-readable.

Governance Metrics and KPIs

- SEO semantic evolution

- concept-driven SEO

These metrics allow organizations to track structural stability, enforce governance rules, and identify drift in large-scale content operations.

Checklist:

- Are depth layers defined with consistent terminology?

- Do H2–H4 segments follow predictable hierarchical boundaries?

- Does each paragraph represent a single reasoning interval?

- Are structural examples used to reinforce abstract depth concepts?

- Is ambiguity reduced through local definitions and stable transitions?

- Does the overall structure support step-by-step AI interpretation?
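Parts of this checklist can be automated. As a minimal sketch of the heading-boundary item, the function below reports headings that jump more than one level deeper than the heading before them (for example, an H2 followed directly by an H4). It assumes Markdown `#` headings, and `find_level_skips` is a hypothetical helper name.

```python
import re

# Assumption: headings are Markdown ATX headings.
HEADING = re.compile(r"^(#{1,6})\s+(.*\S)\s*$")


def find_level_skips(markdown):
    """Return (previous_level, level, title) for each heading that skips a level.

    Moving back up the hierarchy by any number of levels is allowed;
    only descending by more than one level at a time is reported.
    """
    problems = []
    previous = None
    for line in markdown.splitlines():
        match = HEADING.match(line)
        if not match:
            continue
        level = len(match.group(1))
        if previous is not None and level > previous + 1:
            problems.append((previous, level, match.group(2)))
        previous = level
    return problems
```

A check like this fits naturally into an editorial review step or a pre-publish script. The remaining checklist items, such as whether a paragraph holds a single reasoning interval, still need human judgment.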

Hierarchical depth provides the structural consistency required for stable interpretation across AI-first content systems. Clear and predictable layers reduce interpretive variance and strengthen reasoning continuity within extended material. Cross-document alignment further enhances retrieval accuracy by maintaining uniform structural signals across interconnected resources. Depth governance ensures that these patterns remain reliable at scale, supporting long-term machine readability and system-wide coherence.

Structural Interpretation of Hierarchical Content Depth

- Depth-layer signaling. Hierarchical levels communicate scope and resolution, allowing generative systems to distinguish overview, explanation, and detail without ambiguity.

- Cross-document depth alignment. Consistent depth patterns across related pages enable coherent interpretation beyond individual documents.

- Functional layer differentiation. Each depth level carries a distinct semantic role, supporting predictable reasoning intervals during extraction and synthesis.

- Hierarchy-safe connectivity. Interlinked sections that respect depth relationships preserve navigational meaning under AI-driven traversal.

- Interpretive stability over time. Layered structures that remain consistent across model updates indicate durable hierarchical design.

This interpretation explains how hierarchical depth functions as a semantic framework, guiding AI understanding through layered resolution rather than procedural construction.

FAQ: Hierarchical Content Depth

What is hierarchical content depth?

Hierarchical content depth is the structured layering of information that helps AI interpret boundaries, segment reasoning intervals, and maintain clarity across long-form material.

Why is depth important for AI interpretation?

Depth provides predictable structural markers that reduce ambiguity, allowing AI systems to classify segments, map transitions, and generate stable reasoning outputs.

How does depth improve cross-document coherence?

Unified depth rules help AI follow consistent patterns across multiple pages, creating continuity that strengthens retrieval and generative accuracy.

What causes confusion in depth structures?

Confusion arises when layers are inconsistent, overloaded, or lack clear boundaries, leading AI models to merge unrelated ideas or misinterpret transitions.

How does interlinking relate to depth?

Depth-safe interlinking reinforces structural pathways across documents, giving AI clear navigation routes and improving cross-page interpretability.

What role does governance play in depth?

Governance ensures that depth rules are applied consistently across teams and documents, preventing semantic drift and ensuring long-term structural stability.

How do depth layers support reasoning stability?

Balanced layers limit cognitive load for AI systems, helping models track dependencies, maintain context, and reduce variance in generated explanations.

Is hierarchical depth the same as topical depth?

No. Hierarchical depth is structural, defining how content is layered, while topical depth focuses on completeness of information within a subject.

How can teams maintain structural consistency?

Teams can preserve consistency by using shared depth frameworks, standardized reasoning intervals, and uniform block segmentation rules.

What is the first step in implementing depth?

The first step is auditing existing content to identify missing layers, unclear boundaries, or unevenly distributed reasoning intervals.

Glossary: Key Terms in Hierarchical Content Depth

This glossary defines the core terminology used throughout this article to support consistent interpretation of hierarchical content depth, layered reasoning structures, and AI-first content organization.

Hierarchical Content Depth

A multi-level structural framework that organizes information into clear layers, enabling AI systems to interpret boundaries, transitions, and reasoning intervals.

Depth Layer

A structured segment within a hierarchical framework that performs a defined interpretive role and supports stable machine-readable organization.

Structured Segmentation

The process of dividing content into clearly defined units that maintain consistent meaning boundaries and predictable interpretive flow.

Reasoning Interval

A discrete segment of thought represented within a content layer that models use to track logic, transitions, and semantic progression.

Cross-Document Pathway

A consistent interpretive route that AI systems follow across multiple documents based on shared structural patterns and aligned depth layers.

Depth-Safe Interlinking

An interlinking approach that reinforces hierarchical consistency by connecting sections or documents without disrupting structural alignment.

Structural Alignment

The degree to which content layers follow a unified hierarchical model that supports stable AI reasoning and cross-content coherence.

Depth Governance

The organizational practice of maintaining consistent depth rules, terminology, and structural patterns across large content ecosystems.

Semantic Stability

The reliability of meaning across content layers, ensured by consistent terminology, structured reasoning, and predictable hierarchical transitions.

Depth Signal

A structural marker that indicates the function, position, or boundary of a content layer, supporting accurate segmentation and machine interpretation.